Artificial intelligence systems introduce security, ethical, and operational risks that can cause significant harm to organizations and individuals when not properly managed. AI dangers range from technical vulnerabilities like data poisoning to broader concerns about privacy violations and biased decision-making. These risks affect everything from financial systems to healthcare, making effective risk management essential for any organization deploying AI technologies.

The rapid adoption of AI across industries has outpaced the development of comprehensive safety measures. Companies often focus on AI capabilities while overlooking potential threats that can emerge during development, deployment, or operation. Understanding these dangers helps organizations build stronger defenses and more responsible AI systems.

Modern AI risks differ from traditional technology threats because they involve complex interactions between data, algorithms, and human decision-making. A single compromised AI system can affect thousands of decisions and impact multiple stakeholders simultaneously. The interconnected nature of these risks means that addressing one vulnerability often requires understanding several others.

Source: Mindgard

What Are the Primary AI Security Vulnerabilities?

AI security vulnerabilities are weaknesses in artificial intelligence systems that attackers can exploit to cause harm, steal information, or manipulate system behavior. Unlike traditional cybersecurity threats that target hardware or software, AI vulnerabilities often exploit the mathematical properties of machine learning algorithms themselves.

The National Institute of Standards and Technology (NIST) emphasizes that these attacks exploit fundamental characteristics of how AI systems learn and operate, which makes them particularly difficult to defend against with conventional security tools.

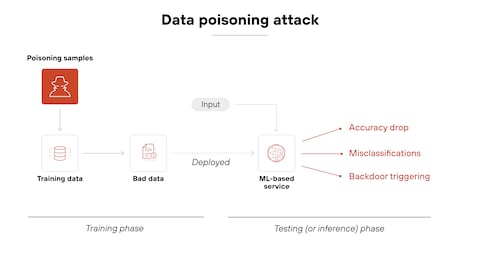

Data Poisoning Attacks Target Training Data

Source: Palo Alto Networks

Data poisoning occurs when attackers deliberately inject malicious or corrupted information into the datasets used to train AI models. Since AI systems learn patterns from training data, poisoned data can fundamentally alter how these systems behave and make decisions.

Attackers may need to control only a few dozen training samples to compromise a model, even when the dataset contains millions of examples. The corrupted training data teaches the AI model to recognize false patterns or make inappropriate associations that benefit the attacker.

Common data poisoning methods include:

- Label flipping — changing the correct labels on training data to teach wrong classifications

- Backdoor insertion — adding hidden triggers that cause specific responses when activated

- Dataset contamination — mixing legitimate data with false or misleading information

- Source corruption — compromising data feeds or repositories before training begins
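As a rough illustration of the first method, the sketch below flips a small fraction of binary labels and then measures how far the result has drifted from a trusted reference copy. The function names and the 3 percent flip rate are hypothetical, and the audit assumes a clean reference set exists, which is often not the case in practice.

```python
import random

def flip_labels(labels, fraction, rng):
    """Return a copy of binary labels with `fraction` of them flipped (0 <-> 1)."""
    poisoned = list(labels)
    n_flip = int(len(labels) * fraction)
    # Pick distinct positions so each chosen label is flipped exactly once.
    for i in rng.sample(range(len(labels)), n_flip):
        poisoned[i] = 1 - poisoned[i]
    return poisoned

def label_disagreement(reference, candidate):
    """Fraction of positions where two label lists differ."""
    return sum(a != b for a, b in zip(reference, candidate)) / len(reference)

rng = random.Random(0)                      # fixed seed for repeatability
clean = [i % 2 for i in range(1000)]        # toy dataset: alternating 0/1 labels
poisoned = flip_labels(clean, fraction=0.03, rng=rng)
rate = label_disagreement(clean, poisoned)  # share of labels an audit would flag
```

Comparing incoming labels against a trusted sample is only one possible check. It would not catch backdoor triggers, which leave labels looking plausible.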

Adversarial Attacks Fool AI Models

Adversarial attacks use carefully crafted inputs designed to fool AI systems into making incorrect decisions or classifications. These attacks exploit mathematical vulnerabilities in machine learning algorithms by finding input patterns that cause models to misinterpret information.

Unlike data poisoning, which occurs during training, adversarial attacks happen after AI systems are deployed and operational. The Department of Homeland Security notes that these attacks can target both AI systems and human users, creating complex deception scenarios.

Adversarial examples appear normal to humans but contain subtle modifications that completely confuse AI systems. For instance, changing a few pixels in an image can cause an AI system to misidentify a stop sign as a speed limit sign.
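One well-known way to craft such inputs is the fast gradient sign method, which nudges every input feature a small step in the direction that increases the model's loss. The sketch below applies it to a toy logistic classifier. The weights, the input, and the step size `epsilon` are made-up values chosen for illustration, not taken from any real system.

```python
import math

def predict_proba(weights, bias, x):
    """Probability of class 1 under a simple logistic model."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-score))

def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def fgsm(weights, bias, x, true_label, epsilon):
    """Shift each feature by at most epsilon in the loss-increasing direction."""
    p = predict_proba(weights, bias, x)
    # Gradient of the log loss with respect to each input feature.
    grad = [(p - true_label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

weights = [2.0, -1.0, 0.5]   # hypothetical trained weights
bias = 0.0
x = [0.3, 0.2, 0.1]          # original input, classified as class 1
x_adv = fgsm(weights, bias, x, true_label=1, epsilon=0.2)

before = predict_proba(weights, bias, x) > 0.5      # predicted class before the attack
after = predict_proba(weights, bias, x_adv) > 0.5   # predicted class after the attack
```

In this toy case no feature moves by more than 0.2, yet the predicted class changes. Image models behave analogously, with many pixels each shifted by an amount too small to notice.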

Model Theft Threatens Intellectual Property

Model theft occurs when competitors or malicious actors steal AI models through reverse engineering, unauthorized copying, or extraction of proprietary algorithms. Organizations invest significant resources in developing AI models, making them valuable intellectual property targets.

Reverse engineering attacks involve systematically querying AI systems with carefully chosen inputs and analyzing the outputs to reconstruct the model’s internal logic. Attackers can often recreate functional copies of AI models without accessing the original source code or training data.
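The query-and-reconstruct idea can be shown on a deliberately simple case. In the sketch below, the "victim" is a hypothetical linear scoring model hidden behind a `query` function, and the attacker recovers its parameters with one probe per feature plus one baseline probe. Real models are nonlinear and usually return labels or probabilities, so actual extraction needs far more queries and only approximates the original.

```python
def make_black_box():
    """Build a query function that hides a linear model's parameters."""
    secret_weights = [1.5, -2.0, 0.75]   # hypothetical proprietary parameters
    secret_bias = 0.25

    def query(x):
        return sum(w * xi for w, xi in zip(secret_weights, x)) + secret_bias

    return query

def extract_linear_model(query, n_features):
    """Recover weights and bias using n_features + 1 chosen queries."""
    # An all-zero input reveals the bias term.
    bias = query([0.0] * n_features)
    weights = []
    for i in range(n_features):
        # A one-hot input reveals one weight once the bias is subtracted.
        probe = [0.0] * n_features
        probe[i] = 1.0
        weights.append(query(probe) - bias)
    return weights, bias

query = make_black_box()
stolen_weights, stolen_bias = extract_linear_model(query, n_features=3)
```

Rate limiting and monitoring for unusually systematic query patterns are typical countermeasures, since the attack depends on being able to choose inputs freely.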

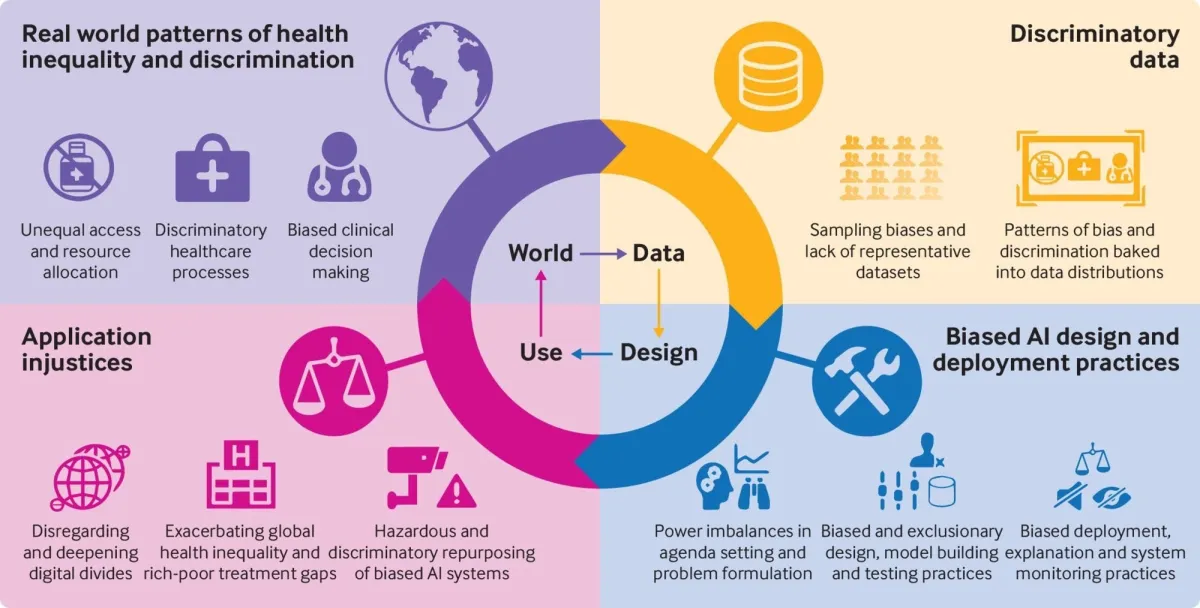

How Algorithmic Bias Threatens Enterprise Operations

Source: Research AIMultiple

Algorithmic bias occurs when AI systems make decisions that systematically favor or discriminate against certain groups of people. These unfair outcomes emerge from biased training data that reflects historical inequalities and flawed algorithms that amplify existing prejudices.

Training data creates bias when datasets contain incomplete information, overrepresent certain demographics, or include historical patterns of discrimination. AI systems learn from this biased information and replicate unfair patterns in their decisions.

Discriminatory Hiring and Employment Decisions

AI recruitment tools can perpetuate workplace discrimination by learning from historical hiring patterns that favor certain groups. These systems analyze resumes, conduct initial screenings, and rank candidates based on patterns that may contain embedded biases.

A well-documented example involved Amazon’s AI recruiting tool, which the company scrapped after discovering it systematically downgraded resumes that included the word “women’s” or that listed all-women’s colleges. The system had learned from historical hiring data that predominantly featured male employees in technical roles.

Common hiring bias patterns include:

- Gender discrimination — AI systems trained on historical data may favor male candidates for technical positions

- Educational bias — algorithms may overvalue graduates from prestigious universities while overlooking qualified candidates from different backgrounds

- Name-based discrimination — resume screening tools may assign lower scores to candidates with names suggesting certain ethnic backgrounds

- Geographic bias — systems may favor candidates from specific ZIP codes, inadvertently discriminating based on socioeconomic status

Financial Services Discrimination

Bias in lending and insurance decisions affects access to credit, mortgages, and insurance coverage. AI systems analyze credit histories, income patterns, and demographic data to make approval decisions and set interest rates.

Research has documented cases where AI lending systems provided different interest rates or loan terms to applicants with similar financial profiles but different demographic characteristics. These systems often use proxy variables that correlate with protected characteristics, creating indirect discrimination.
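A common first check for this kind of disparity is to compare approval rates across groups. The sketch below computes a disparate impact ratio on made-up decisions for two hypothetical groups, "A" and "B". The 0.8 cutoff is borrowed from the four-fifths rule of thumb used in U.S. employment selection guidance, and applying it to lending here is an assumption for illustration.

```python
from collections import defaultdict

def approval_rates(decisions):
    """Map each group to its approval rate, given (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest (assumes a nonzero maximum)."""
    return min(rates.values()) / max(rates.values())

# Made-up outcomes: group A approved 80 of 100, group B approved 56 of 100.
decisions = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 56 + [("B", False)] * 44
)
rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8   # illustrative threshold, not a legal test
```

A ratio below the cutoff does not prove discrimination, and a ratio above it does not rule out proxy effects. It is a screening signal that tells reviewers where to look more closely.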

AI Privacy Risks and Data Protection Challenges

AI privacy risks are threats to personal information that occur when artificial intelligence systems collect, process, or store individual data. These risks emerge from how AI models learn from vast datasets and make decisions about people’s lives.

The General Data Protection Regulation (GDPR) in Europe sets strict rules for how organizations handle personal data in AI systems. Under GDPR, companies face significant penalties for privacy violations, with fines of up to 20 million euros or four percent of global annual revenue, whichever is higher.

Personal Data Exposure in Training Sets

Training datasets often contain sensitive personal information that AI models can memorize and later reveal. When organizations collect massive amounts of data to train AI systems, they frequently include personal details like names, addresses, medical records, and financial information without realizing the privacy implications.

Data anonymization failures create significant privacy risks. Organizations may remove obvious identifiers like names but leave indirect identifiers that can reveal identity. Attackers can combine anonymous datasets with other information sources to identify individuals.
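One way to quantify the risk from indirect identifiers is k-anonymity: count how many records share each combination of those identifiers and look at the smallest group. The sketch below uses invented records and treats ZIP code, birth year, and sex as the indirect identifiers. The field names and the choice of k = 2 are assumptions for the example.

```python
from collections import Counter

def group_key(record, quasi_identifiers):
    """Combination of indirect-identifier values for one record."""
    return tuple(record[q] for q in quasi_identifiers)

def k_anonymity(records, quasi_identifiers):
    """Size of the smallest group sharing the same indirect identifiers."""
    groups = Counter(group_key(r, quasi_identifiers) for r in records)
    return min(groups.values())

def risky_records(records, quasi_identifiers, k):
    """Records whose identifier combination is shared by fewer than k rows."""
    groups = Counter(group_key(r, quasi_identifiers) for r in records)
    return [r for r in records if groups[group_key(r, quasi_identifiers)] < k]

# Invented "anonymized" rows: names removed, indirect identifiers kept.
records = [
    {"zip": "02139", "birth_year": 1985, "sex": "F", "diagnosis": "asthma"},
    {"zip": "02139", "birth_year": 1985, "sex": "F", "diagnosis": "flu"},
    {"zip": "02139", "birth_year": 1990, "sex": "M", "diagnosis": "diabetes"},
]
qi = ["zip", "birth_year", "sex"]
k = k_anonymity(records, qi)
exposed = risky_records(records, qi, k=2)
```

Here the third row is unique on its indirect identifiers, so anyone who knows that person's ZIP code, birth year, and sex can read off the diagnosis even though no name is stored.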

Cross-Border Data Transfer Violations

International data transfer laws restrict how personal information moves between countries, creating compliance challenges for AI systems that operate globally. Different regions have varying requirements for protecting citizen data when it crosses borders.

The European Union requires adequacy decisions or specific safeguards for data transfers outside the EU. China’s Personal Information Protection Law requires operators of critical information infrastructure, and organizations that process personal information above regulator-set volume thresholds, to store that data within China. These requirements can conflict with AI systems that need to process data across multiple jurisdictions.

Environmental Impact of AI Implementation

Source: MarketWatch

Artificial intelligence systems consume substantial amounts of energy throughout their development and operation, creating significant environmental consequences. The computational power required to train large AI models demands massive electricity consumption, leading to increased carbon dioxide emissions.

Training OpenAI’s GPT-3 consumed approximately 1,287 megawatt hours of electricity, roughly the annual electricity use of 120 average U.S. homes, and generated about 552 tons of carbon dioxide emissions. Each query to a system like ChatGPT is estimated to consume approximately five times more electricity than a simple web search.
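The figures above can be cross-checked with simple arithmetic. The short sketch below derives the household consumption and the carbon intensity that the quoted numbers imply. The variable names are illustrative, and the results are only as reliable as the inputs.

```python
TRAINING_MWH = 1287   # electricity quoted for training GPT-3
HOMES = 120           # number of U.S. homes in the comparison
CO2_TONS = 552        # carbon dioxide emissions quoted for training

# Annual electricity per home implied by the comparison, in MWh.
mwh_per_home_year = TRAINING_MWH / HOMES

# Emissions per unit of electricity implied by the two totals, in tons per MWh.
tons_co2_per_mwh = CO2_TONS / TRAINING_MWH

print(f"{mwh_per_home_year:.3f} MWh per home per year")
print(f"{tons_co2_per_mwh:.3f} tons of CO2 per MWh")
```

The implied values are about 10.7 MWh per home per year and about 0.43 tons of carbon dioxide per MWh, so the three quoted figures are at least consistent with one another.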

Energy-Intensive Training and Operation

AI training and deployment require unprecedented computational resources that translate directly into massive energy consumption and carbon emissions. The training phase of large language models represents the most energy-intensive aspect of AI development, requiring thousands of specialized processors running continuously for weeks or months.

Key energy consumption factors include training phase electricity demand, operational query processing, cooling system requirements, and hardware manufacturing energy. Data centers hosting AI workloads consume millions of gallons of water annually to remove heat generated by high-performance computing equipment.

Sustainable AI Development Practices

Green AI techniques focus on developing and deploying artificial intelligence systems that minimize environmental impact while maintaining performance requirements. Model efficiency optimization enables organizations to achieve AI objectives with reduced computational resources through architectural improvements.

Energy-efficient deployment strategies prioritize cloud platforms and data center providers that demonstrate commitment to renewable energy use. Organizations can significantly reduce environmental impacts through provider selection, choosing platforms powered by solar, wind, or hydroelectric energy sources.

Loss of Human Decision-Making Control

AI dependency occurs when people rely too heavily on artificial intelligence systems to make decisions instead of using their own judgment. Automation bias happens when humans automatically trust AI recommendations without questioning them, even when the AI might be wrong.

When organizations remove humans from decision-making processes entirely, they create dangerous gaps in judgment and accountability. Human oversight remains essential in critical decisions because people can consider context, ethics, and nuanced factors that AI systems cannot fully understand.

Over-Reliance on Automated Systems

Removing humans from decision loops creates significant risks across multiple organizational contexts. When systems operate without human verification, errors can compound and spread rapidly without detection. Automated decision-making can miss important contextual factors that require human judgment and experience.

Healthcare systems automatically prescribing medications without physician review pose serious safety risks. Financial institutions approving loans based solely on algorithmic assessments may miss important contextual factors about borrowers’ circumstances.

Maintaining Human Oversight and Authority

Source: Humans in the Loop

Human-in-the-loop design principles ensure that people retain meaningful control over important decisions while benefiting from AI capabilities. These frameworks position AI as a tool that enhances human judgment rather than replacing it entirely.

Effective human oversight requires clear roles, responsibilities, and decision-making authorities that cannot be overridden by automated systems. Organizations implement mandatory review periods, escalation procedures for complex situations, and training programs to maintain critical thinking skills.
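A minimal sketch of such an escalation rule appears below. It assumes a hypothetical `Decision` record carrying a model confidence score and a high-impact flag, and it uses an arbitrary 0.95 cutoff. In a real deployment, the definition of high impact and the threshold would come from policy, not from code defaults.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    case_id: str
    model_score: float   # model's confidence in approval, from 0.0 to 1.0
    high_impact: bool    # set by policy, for example medical or lending cases

def route(decision, auto_threshold=0.95):
    """Decide whether a case may be automated or must go to a person."""
    # High-impact cases always go to a human, whatever the model's confidence.
    if decision.high_impact:
        return "human_review"
    # Low-impact cases are automated only when the model is very confident.
    if decision.model_score >= auto_threshold:
        return "auto_approve"
    return "human_review"

queue = [
    Decision("c1", 0.99, high_impact=False),
    Decision("c2", 0.99, high_impact=True),
    Decision("c3", 0.60, high_impact=False),
]
routes = [route(d) for d in queue]
```

Placing the high-impact check first means no confidence score can bypass review, which is one way to express an authority that automated systems cannot override.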

AI Hallucinations and Information Reliability

AI hallucinations occur when artificial intelligence systems generate responses that appear confident and accurate but contain false, fabricated, or misleading information. These confident but incorrect outputs represent one of the most significant challenges in AI reliability.

The underlying causes stem from how these systems process and generate information. AI models learn patterns from training data, but they can’t distinguish between accurate and inaccurate information in their training sets. When faced with questions beyond their knowledge, AI systems may generate plausible-sounding responses that lack factual basis.

False Information Generation in Business Applications

AI systems create convincing but incorrect content through pattern recognition processes that prioritize coherence over accuracy. When AI encounters gaps in knowledge or ambiguous requests, it fills these gaps with information that follows linguistic patterns learned during training.

Common types of AI hallucinations include fabricated citations and references, false historical facts, invented statistics and data, fictional product information, and made-up legal precedents. These fabricated responses can appear authoritative and credible to users who lack expertise to verify the information.

Quality Control and Fact-Checking Requirements

Verification processes for AI-generated content require systematic approaches that combine automated tools with human oversight. Organizations implement multi-step validation methods that check AI outputs against authoritative sources before using the information for decision-making.

Retrieval Augmented Generation systems supplement AI responses with verified external knowledge bases, grounding the AI’s outputs in accurate source materials. Quality assurance practices include source verification protocols, expert review processes, and automated fact-checking tools.
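As one small piece of a source verification protocol, the sketch below pulls bracketed citations out of generated text and lists any that do not appear in an approved source list. The bracket convention, the function names, and the sample answer (including its invented survey citation) are all assumptions made for the example.

```python
import re

def extract_citations(text):
    """Return the contents of every [bracketed] citation in the text."""
    return re.findall(r"\[([^\[\]]+)\]", text)

def unverified_citations(text, known_sources):
    """List citations that are not in the approved source list."""
    known = {s.lower() for s in known_sources}
    return [c for c in extract_citations(text) if c.lower() not in known]

# Approved knowledge base entries (hypothetical list).
known_sources = ["EU AI Act", "GDPR"]

# Sample model output; the survey citation is deliberately made up.
answer = (
    "Fines can reach seven percent of revenue [EU AI Act]. "
    "Adoption doubled last year [Acme Industry Survey]."
)
needs_review = unverified_citations(answer, known_sources)
```

A check like this only confirms that a cited source exists in the approved list. Whether the source actually supports the claim still requires expert review or a retrieval step that compares the claim against the source text.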

Building Enterprise AI Risk Management Frameworks

Organizations benefit from structured approaches that combine technical safeguards, governance policies, and monitoring systems to address AI risks comprehensively. Enterprise AI risk management frameworks integrate multiple protection layers including data governance, bias detection, security controls, and incident response procedures.

Successful risk management frameworks address interconnected threats rather than isolated problems. Data poisoning attacks can amplify algorithmic bias, while privacy violations compound transparency failures.

Establishing Governance Structures and Policies

Source: NIST AI Resource Center

AI governance committees provide essential oversight for risk management activities across organizations. These committees include representatives from multiple departments who collaborate on policy development, risk assessment, and compliance monitoring.

Key organizational roles include Chief AI Officers who provide strategic direction, legal representatives who address regulatory requirements, technical leaders who oversee system development, and ethics specialists who evaluate bias and fairness considerations.

Policy development processes establish clear guidelines for AI system development, deployment, and operation. Organizations create comprehensive policy frameworks that address data usage, model validation, human oversight requirements, and acceptable use parameters.

Implementing Continuous Monitoring and Assessment

Ongoing risk assessment systems track AI performance, detect anomalies, and identify emerging threats throughout system lifecycles. Organizations implement automated monitoring tools that analyze model behavior, data quality, and user interactions to identify potential issues.

Essential monitoring tools include model performance dashboards, data quality monitoring systems, security information and event management tools, bias detection software, and privacy compliance scanners. These systems complement human oversight with technical capabilities for real-time threat detection.
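Data quality monitoring often comes down to comparing live inputs against a training-time baseline. The sketch below uses the population stability index (PSI), one common drift statistic, on synthetic values. The bin count, the smoothing constant `eps`, and the 0.25 alert level are conventional rules of thumb and not fixed standards.

```python
import math

def psi(expected, actual, bins=10, eps=1e-6):
    """Population stability index between a baseline and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0   # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp values outside the baseline range into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each share at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

baseline = [i / 100 for i in range(100)]    # synthetic training-time feature values
shifted = [0.5 + 0.5 * v for v in baseline] # synthetic live values, skewed upward

stable_score = psi(baseline, baseline)
drift_score = psi(baseline, shifted)
alert = drift_score > 0.25                  # illustrative alert threshold
```

A dashboard could compute this per feature on a schedule and send alerts to the same escalation path used for other incidents.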

Frequently Asked Questions About AI Risk Management

Source: National Institute of Standards and Technology

How long does implementing AI risk management take for large enterprises?

Implementation typically takes six to twelve months depending on organizational complexity and existing governance structures. Organizations with established risk management frameworks can move faster because they already have foundational processes in place. Companies starting from scratch require more time to build governance structures, train teams, and establish monitoring systems.

What percentage of AI project budgets goes toward risk mitigation?

Most enterprises allocate ten to fifteen percent of their AI project budgets specifically for risk management and governance activities. This percentage covers costs for specialized software tools, staff training, compliance assessments, and ongoing monitoring systems. Organizations in highly regulated industries like healthcare or financial services often allocate higher percentages due to stricter compliance requirements.

Which specific roles oversee AI governance in enterprise organizations?

AI governance requires collaboration between IT, legal, compliance, and business leaders with a dedicated chief AI officer or similar role. The chief AI officer coordinates between different departments to ensure consistent risk management practices across all AI projects. Legal teams handle regulatory compliance and liability concerns, while IT departments manage technical security measures.

How frequently do organizations conduct AI bias assessments?

Organizations typically conduct quarterly bias assessments for high-risk AI systems and annual assessments for lower-risk applications. High-risk systems include those making decisions about hiring, lending, or medical diagnoses where errors can significantly impact people’s lives. The frequency depends on how rapidly the AI system learns and changes and the severity of potential consequences from failures.

What penalties do companies face for AI governance violations under current regulations?

Poor AI risk management can result in regulatory fines, lawsuits, and reputational damage, depending on jurisdiction and industry. The European Union’s AI Act imposes fines of up to 35 million euros or seven percent of global annual revenue, whichever is higher, for the most serious violations. Legal consequences also include discrimination lawsuits when AI systems produce biased outcomes and privacy penalties when personal data is mishandled.
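To see how the two AI Act limits interact, the sketch below computes the maximum exposure for a given revenue, assuming the higher of the two amounts applies, which is how the regulation phrases the cap for the most serious violations. The function name is hypothetical, and the result is an upper bound, not a prediction of any actual penalty.

```python
def max_ai_act_fine(global_annual_revenue_eur):
    """Upper bound on the fine for the most serious violations, in euros."""
    fixed_cap = 35_000_000
    revenue_cap = 7 * global_annual_revenue_eur / 100   # seven percent of revenue
    return max(fixed_cap, revenue_cap)

small_company = max_ai_act_fine(100_000_000)     # revenue of 100 million euros
large_company = max_ai_act_fine(1_000_000_000)   # revenue of 1 billion euros
```

For the smaller company the fixed 35 million euro figure is the binding limit. For the larger one the percentage dominates, giving 70 million euros.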