AI bias occurs when artificial intelligence systems produce unfair or discriminatory results due to human prejudices embedded in training data or algorithms.

When a bank’s AI system approves loans for men more often than equally qualified women, bias has crept into the decision-making process. These biased outcomes happen because AI systems learn from data created by humans, and human data often contains historical patterns of discrimination.

AI bias represents one of the most pressing challenges in modern technology. As businesses increasingly rely on AI for hiring, lending, healthcare, and criminal justice decisions, understanding bias becomes essential for fair outcomes.

How AI Bias Develops

AI systems learn patterns from training data that reflects real-world human decisions and behaviors. When training data contains historical discrimination, AI algorithms absorb and replicate these patterns. For example, if a company’s past hiring data shows preferences for male engineers, an AI system trained on this data may continue discriminating against female candidates.

Human decisions during AI development also introduce bias. Programmers and data scientists bring their own assumptions and blind spots to algorithm design. The choice of which data to include, how to define success metrics, and which features to prioritize all reflect human judgment that can embed bias into AI models.

Sample bias occurs when training data fails to represent the full population the AI system will serve. An AI system trained primarily on data from one demographic group often performs poorly when applied to other groups. Medical AI systems trained mostly on data from white patients, for instance, may provide less accurate diagnoses for patients of other ethnicities.

Common Types of AI Bias

Historical bias emerges when AI systems learn from data that reflects past discrimination. Criminal justice AI systems trained on arrest records may perpetuate racial disparities in policing. Healthcare algorithms may reflect historical patterns where certain groups received less medical attention.

Representation bias happens when training data inadequately represents certain groups. Facial analysis systems have performed worse on women and people with darker skin, in part because their training and benchmark datasets contained far fewer images of these populations. The Gender Shades study from the MIT Media Lab found that some commercial gender classification systems had error rates as high as 35 percent for darker-skinned women, compared with less than 1 percent for lighter-skinned men.

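Measurement bias occurs when the features or labels used to train a model capture the quantity of interest less accurately for some groups than for others, or when groups are evaluated against different standards. Credit scoring algorithms, for example, may rely on proxy variables for creditworthiness that reflect actual repayment ability less reliably for some demographic groups, leading to unfair lending decisions.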

Real-World Examples of AI Bias

Amazon scrapped an experimental AI recruiting tool after discovering that the system discriminated against women, as Reuters reported in 2018. The algorithm learned from ten years of resumes submitted to the company, most of them from men, and systematically downgraded resumes containing the word “women’s,” as in “women’s chess club captain.”

Healthcare algorithms have shown racial bias in patient care decisions. A widely used algorithm in U.S. hospitals incorrectly assessed the medical needs of Black patients because the system used healthcare spending as a proxy for medical need. Black patients historically spent less on healthcare due to access barriers, causing the algorithm to underestimate their medical requirements.
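
The proxy problem can be seen in a small hypothetical example. The patients, need scores, and access factors below are invented for illustration: each patient's spending is assumed to equal their true need multiplied by an access factor, with group_2 facing barriers that halve spending. Ranking by spending, which is effectively what a model trained on spending does, selects different patients for extra care than ranking by true need.

```python
from dataclasses import dataclass

@dataclass
class Patient:
    name: str
    group: str
    need: float    # true medical need (not directly observed by the model)
    access: float  # hypothetical share of need that turns into spending

    @property
    def spending(self) -> float:
        # Spending tracks need only as far as access to care allows.
        return self.need * self.access

# Hypothetical patients: group_2 faces access barriers (access = 0.5).
patients = [
    Patient("A", "group_1", need=6.0, access=1.0),
    Patient("B", "group_2", need=9.0, access=0.5),
    Patient("C", "group_1", need=5.0, access=1.0),
    Patient("D", "group_2", need=8.0, access=0.5),
]

def top_k(people, key, k):
    """Names of the k patients with the highest value of `key`."""
    return [p.name for p in sorted(people, key=key, reverse=True)[:k]]

# Two slots in a hypothetical extra-care program.
by_need = top_k(patients, key=lambda p: p.need, k=2)
by_spending = top_k(patients, key=lambda p: p.spending, k=2)
print("selected by true need:", by_need)          # ['B', 'D']
print("selected by spending proxy:", by_spending)  # ['A', 'C']
```

Under the spending proxy, both program slots go to group_1, even though the two group_2 patients have the highest medical need.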

The COMPAS system, used in criminal justice to predict recidivism, has also shown racial bias. According to a 2016 ProPublica analysis, Black defendants who did not go on to reoffend were nearly twice as likely as white defendants who did not reoffend to be incorrectly flagged as higher risk. Because such risk scores inform bail, sentencing, and parole decisions, these false positives can affect thousands of people.

Detecting AI Bias

Fairness metrics provide quantitative methods for identifying bias in AI systems. These metrics compare outcomes across different demographic groups to identify disparities. Common metrics include equal opportunity, demographic parity, and equalized odds.
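
The three metrics can be computed directly from a model's predictions. The sketch below, in plain Python, assumes binary labels and predictions (1 = favorable outcome) and that every group has at least one positive and one negative example. It reports each metric as the largest gap between any two groups, which is one common convention: demographic parity compares selection rates, equal opportunity compares true positive rates, and equalized odds takes the larger of the true positive rate and false positive rate gaps. The data is hypothetical.

```python
from collections import defaultdict

def group_rates(y_true, y_pred, groups):
    """Selection rate, true positive rate, and false positive rate per group."""
    stats = defaultdict(lambda: {"n": 0, "selected": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0})
    for yt, yp, g in zip(y_true, y_pred, groups):
        s = stats[g]
        s["n"] += 1
        s["selected"] += yp
        if yt == 1:
            s["pos"] += 1
            s["tp"] += yp
        else:
            s["neg"] += 1
            s["fp"] += yp
    rates = {}
    for g, s in stats.items():
        if s["pos"] == 0 or s["neg"] == 0:
            raise ValueError(f"group {g!r} needs both positive and negative examples")
        rates[g] = {
            "selection_rate": s["selected"] / s["n"],
            "tpr": s["tp"] / s["pos"],
            "fpr": s["fp"] / s["neg"],
        }
    return rates

def largest_gap(rates, key):
    """Largest difference in one rate between any two groups."""
    values = [r[key] for r in rates.values()]
    return max(values) - min(values)

def fairness_report(y_true, y_pred, groups):
    rates = group_rates(y_true, y_pred, groups)
    tpr_gap = largest_gap(rates, "tpr")
    fpr_gap = largest_gap(rates, "fpr")
    return {
        "demographic_parity_diff": largest_gap(rates, "selection_rate"),
        "equal_opportunity_diff": tpr_gap,
        "equalized_odds_diff": max(tpr_gap, fpr_gap),
    }

# Hypothetical predictions for two groups of four applicants each.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 1, 0, 0, 0]
groups = ["a"] * 4 + ["b"] * 4
print(fairness_report(y_true, y_pred, groups))
# {'demographic_parity_diff': 0.75, 'equal_opportunity_diff': 0.5, 'equalized_odds_diff': 1.0}
```

A value of 0 means the groups are treated identically on that metric. The metrics can disagree, so teams usually decide in advance which one fits the application.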

Statistical analysis reveals performance differences across groups by examining accuracy rates, false positive rates, and false negative rates for different populations. Significant disparities in these metrics often indicate bias.
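
To judge whether a gap in error rates is larger than chance alone would produce, one standard option is a two-proportion z-test. The sketch below assumes reasonably large samples, so the normal approximation holds, and uses invented error counts. A small p-value suggests the disparity is systematic rather than random noise, though it says nothing about the disparity's cause.

```python
import math

def two_proportion_z_test(errors_a, n_a, errors_b, n_b):
    """Two-sided z-test for a difference between two groups' error rates."""
    p_a = errors_a / n_a
    p_b = errors_b / n_b
    # Pooled error rate under the null hypothesis that both groups share one rate.
    pooled = (errors_a + errors_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Both groups have error rate 0 or both have 1: no difference to test.
        return 0.0, 1.0
    z = (p_a - p_b) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical audit: 30 of 200 cases misclassified for group A, 12 of 200 for group B.
z, p = two_proportion_z_test(30, 200, 12, 200)
print(f"z = {z:.2f}, p = {p:.4f}")
```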

Explainability tools help identify why AI systems make specific decisions. LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (SHapley Additive exPlanations) reveal which features most influence AI predictions, helping analysts spot problematic patterns.
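
SHAP is built on Shapley values from cooperative game theory. For a model with only a handful of features, exact Shapley values can be computed by brute force, which illustrates what the SHAP library approximates at scale. This sketch is not the shap library's API. The scoring model, its feature names (including a zip_code_flag standing in for a proxy feature), and the all-zero baseline are hypothetical, and features left out of a coalition are simply set to their baseline values.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x) relative to model(baseline).

    Cost grows as 2**n, so this is only practical for a few features.
    """
    n = len(x)

    def value(coalition):
        # Features in the coalition keep their real values; the rest use the baseline.
        return model([x[i] if i in coalition else baseline[i] for i in range(n)])

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                phi[i] += weight * (value(coalition | {i}) - value(coalition))
    return phi

# Hypothetical credit-scoring model; zip_code_flag stands in for a proxy feature.
FEATURES = ["income", "years_employed", "zip_code_flag"]

def score(z):
    return 2.0 * z[0] + 1.0 * z[1] - 3.0 * z[2]

applicant = [1.0, 2.0, 1.0]
baseline = [0.0, 0.0, 0.0]
for name, contribution in zip(FEATURES, shapley_values(score, applicant, baseline)):
    print(f"{name}: {contribution:+.2f}")
# income: +2.00, years_employed: +2.00, zip_code_flag: -3.00
```

For a linear model each Shapley value reduces to weight times (feature value minus baseline), and the values always sum to model(x) minus model(baseline). A large contribution from a feature like ZIP code is the kind of pattern that warrants a closer look, since location can act as a proxy for race.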

Regular auditing processes systematically evaluate AI systems for bias throughout their lifecycle. These audits examine training data composition, algorithm performance across groups, and real-world outcomes to identify emerging bias issues.

Preventing AI Bias

Diverse training data represents the first line of defense against AI bias. Organizations can ensure datasets include adequate representation from all groups the AI system will serve. Data collection processes benefit from actively seeking out underrepresented populations and scenarios.

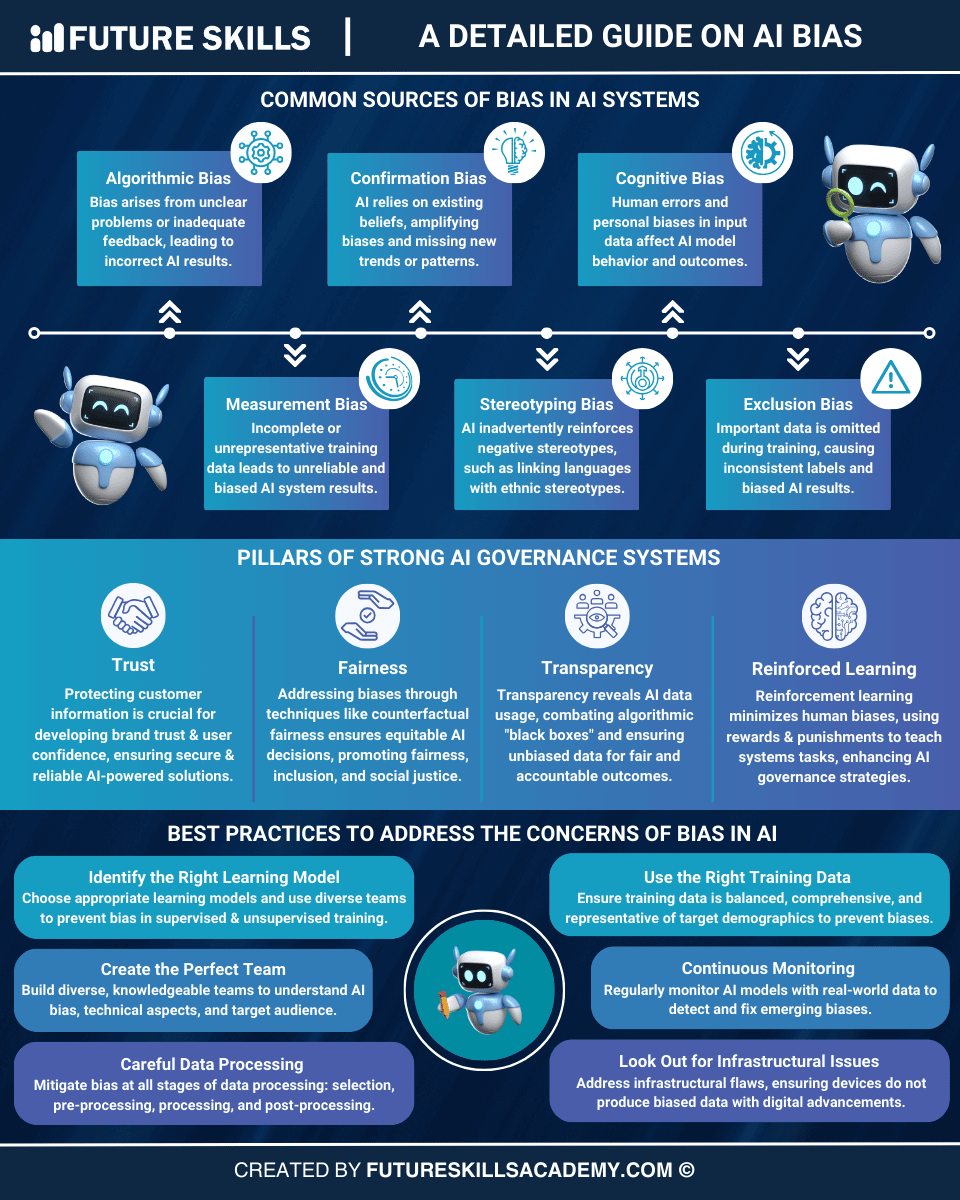

Source: Future Skills Academy
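
A first representation check can compare each group's share of the training data with its share of the population the system will serve. In this sketch the group labels, reference shares, and 5-percentage-point tolerance are hypothetical; in practice the reference shares would come from sources such as census data or the organization's own customer base.

```python
from collections import Counter

def underrepresented_groups(sample_groups, reference_shares, tolerance=0.05):
    """Groups whose share of the sample falls short of the reference by more than `tolerance`."""
    counts = Counter(sample_groups)
    total = len(sample_groups)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        if expected - observed > tolerance:
            gaps[group] = {"observed": observed, "expected": expected}
    return gaps

# Hypothetical training set of 100 records and hypothetical population shares.
training_groups = ["a"] * 75 + ["b"] * 20 + ["c"] * 5
population = {"a": 0.50, "b": 0.30, "c": 0.20}
print(underrepresented_groups(training_groups, population))
# {'b': {'observed': 0.2, 'expected': 0.3}, 'c': {'observed': 0.05, 'expected': 0.2}}
```

The same check can be repeated for intersections of attributes (for example, age band within gender), since a dataset can look balanced on each attribute separately while still missing specific combinations.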

Cross-functional development teams bring different perspectives to AI projects. Teams including ethicists, domain experts, legal advisors, and representatives from affected communities can identify potential bias issues early in development.

- Bias testing during development — Testing evaluates AI performance across different demographic groups and scenarios to identify disparities before deployment

- Adversarial testing — Deliberately attempting to expose weaknesses and bias vulnerabilities in AI systems

- Human oversight mechanisms — Maintaining accountability in AI decision-making through human-in-the-loop systems

Human oversight allows people to review and override AI decisions when bias concerns arise. Clear escalation procedures ensure bias issues receive appropriate attention and response.
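
A common human-in-the-loop pattern sends uncertain or flagged cases to a reviewer instead of deciding them automatically. The sketch below assumes a model that outputs an approval probability; the thresholds, the `flagged` input, and the Decision record are hypothetical choices for illustration, not a standard.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Decision:
    case_id: str
    score: float                         # model's approval probability
    outcome: str                         # "approve", "deny", or "human_review"
    reviewer_note: Optional[str] = None

def route(case_id, score, approve_at=0.7, deny_below=0.3, flagged=False):
    """Decide automatically only when the model is confident and no bias concern is flagged."""
    if flagged or deny_below <= score < approve_at:
        return Decision(case_id, score, "human_review")
    return Decision(case_id, score, "approve" if score >= approve_at else "deny")

def override(decision, outcome, note):
    """Record a reviewer's final outcome; the note preserves an audit trail."""
    return Decision(decision.case_id, decision.score, outcome, reviewer_note=note)

print(route("c1", 0.92).outcome)                # approve
print(route("c2", 0.55).outcome)                # human_review
print(route("c3", 0.92, flagged=True).outcome)  # human_review
```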

Legal and Regulatory Requirements

The European Union’s AI Act establishes comprehensive requirements for addressing bias in high-risk AI systems. The law mandates bias assessments, detailed documentation, and high data quality standards for AI applications in employment, law enforcement, and other sensitive domains.

U.S. civil rights laws apply to AI systems that produce discriminatory outcomes. The Equal Employment Opportunity Commission has issued guidance on AI in hiring, while other agencies address bias in their respective sectors. In New York City, Local Law 144 prohibits employers from using an automated employment decision tool unless it has undergone a bias audit within the past year, which in practice means annual audits.

Frameworks from standards bodies such as the U.S. National Institute of Standards and Technology (NIST), including its AI Risk Management Framework, provide guidance for managing AI bias. These frameworks help organizations develop systematic approaches to bias identification, assessment, and mitigation.

Compliance requirements continue evolving as regulators develop new approaches to AI governance. Organizations benefit from staying current with emerging regulations and implementing robust bias mitigation capabilities to meet legal obligations.

Building Fair AI Systems

Data quality forms the foundation of fair AI systems. Representative datasets that include diverse perspectives help prevent bias from entering machine learning models. Quality assurance processes evaluate dataset composition across multiple dimensions including race, gender, age, and socioeconomic status.

Algorithmic design best practices incorporate equity considerations directly into system development. Preprocessing methods modify training data to ensure balanced representation before model development. In-processing techniques add fairness constraints to optimization objectives during training.
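
One well-known preprocessing method, often called reweighing, leaves the records unchanged but weights each one so that group membership and outcome label become statistically independent in the weighted data. Each (group, label) combination gets the weight P(group) × P(label) / P(group, label). The data below is hypothetical, and the resulting weights would then be passed to any learner that accepts per-sample weights.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Per-sample weights P(g) * P(y) / P(g, y) that decorrelate group and label."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    weight_for = {
        # Count expected under independence (n_g * n_y / n) divided by the observed count.
        (g, y): group_counts[g] * label_counts[y] / (n * n_gy)
        for (g, y), n_gy in joint_counts.items()
    }
    return [weight_for[(g, y)] for g, y in zip(groups, labels)]

def weighted_positive_rate(groups, labels, weights, group):
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total

# Hypothetical historical outcomes: group "m" has a 4/6 positive rate, group "f" 1/4.
groups = ["m"] * 6 + ["f"] * 4
labels = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
for g in ("m", "f"):
    print(g, round(weighted_positive_rate(groups, labels, weights, g), 3))  # both 0.5
```

After weighting, both groups have the same positive rate as the dataset overall (0.5), so a model trained on the weighted data no longer sees group membership as predictive of the label.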

- Inclusive development teams — Cross-functional teams with diverse backgrounds help identify potential bias sources

- Model architecture choices — Ensemble methods and regularization techniques can reduce individual algorithm biases

- Validation processes — Testing algorithm performance across all relevant demographic groups

Continuous monitoring tracks system performance across demographic groups over time. Automated dashboards display fairness metrics alongside traditional performance indicators. Alert systems notify teams when bias indicators exceed acceptable thresholds.
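
The alerting idea can be sketched as a sliding-window monitor over live decisions. The window size, the 0.1 selection-rate gap threshold, and the minimum of 30 decisions per group before a group is compared are all hypothetical settings; a production system would feed the result into its dashboard or alerting tool.

```python
from collections import deque

class DisparityMonitor:
    """Tracks recent approval decisions per group and flags large selection-rate gaps."""

    def __init__(self, window=1000, threshold=0.1, min_per_group=30):
        self.recent = deque(maxlen=window)  # oldest decisions drop out automatically
        self.threshold = threshold
        self.min_per_group = min_per_group

    def record(self, group, approved):
        self.recent.append((group, bool(approved)))

    def selection_rates(self):
        totals, approvals = {}, {}
        for group, approved in self.recent:
            totals[group] = totals.get(group, 0) + 1
            approvals[group] = approvals.get(group, 0) + int(approved)
        # Skip groups with too few recent decisions to give a stable rate.
        return {g: approvals[g] / totals[g] for g in totals if totals[g] >= self.min_per_group}

    def check(self):
        """Return the current gap if it exceeds the threshold, else None."""
        rates = self.selection_rates()
        if len(rates) < 2:
            return None
        gap = max(rates.values()) - min(rates.values())
        return gap if gap > self.threshold else None

# Hypothetical stream: group "a" approved 40 of 50 times, group "b" 25 of 50.
monitor = DisparityMonitor(window=200)
for i in range(50):
    monitor.record("a", i < 40)
    monitor.record("b", i < 25)
alert = monitor.check()
if alert is not None:
    print(f"ALERT: selection-rate gap {alert:.2f} exceeds {monitor.threshold}")
```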

Organizations deploying AI in retail or small-business settings must also consider bias in customer-facing applications. Chatbots and marketing AI require careful attention to fair treatment across customer demographics.

Frequently Asked Questions About AI Bias

Can AI systems be completely free from bias?

Complete elimination of AI bias isn’t possible due to fundamental limitations in data, algorithms, and human oversight. Training datasets inevitably reflect historical patterns of discrimination and social inequities. Human cognitive biases influence every stage of AI development, from problem definition to model selection to evaluation criteria.

However, organizations can significantly reduce bias through systematic mitigation approaches. Effective bias reduction combines technical solutions with organizational processes and human oversight mechanisms.

How do organizations detect bias in their AI systems?

Organizations use statistical analysis to compare AI performance across different demographic groups. They examine accuracy rates, false positive rates, and prediction distributions to identify disparities that indicate potential discrimination.

Explainability tools like LIME and SHAP reveal which factors influence AI decisions, helping analysts spot problematic patterns. Regular bias auditing procedures assess AI system performance on an ongoing basis after deployment.

What are the legal consequences of deploying biased AI systems?

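Organizations that deploy biased AI can face liability under existing anti-discrimination laws such as Title VII of the Civil Rights Act of 1964 and the Fair Housing Act. Under the EU AI Act, the highest fines reach 35 million euros or 7 percent of worldwide annual turnover, whichever is higher, for prohibited AI practices, while breaches of the obligations for high-risk systems, which include data-quality and bias requirements, can draw fines of up to 15 million euros or 3 percent.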

Recent legal developments show growing litigation risk. In Mobley v. Workday, a lawsuit alleging that Workday's AI screening tools discriminated against job applicants, the court granted preliminary certification of a collective action on the age-discrimination claims, potentially covering hundreds of thousands of applicants and signaling how future AI bias cases may proceed.

How often do AI systems require bias testing?

Organizations conduct bias testing at multiple stages throughout the AI lifecycle. Initial testing occurs during model development, with comprehensive bias audits performed before deployment to identify potential discriminatory patterns.

High-risk applications in employment, healthcare, or criminal justice often warrant more frequent reassessment, for example every three to six months. Lower-risk applications may need only an annual comprehensive audit backed by ongoing automated monitoring.

What’s the difference between AI bias and random AI errors?

AI bias creates systematic unfairness toward specific demographic groups, while random AI errors affect users unpredictably without targeting particular populations. Bias produces consistent patterns of discrimination, whereas errors occur randomly across all user groups.

For example, facial recognition systems that consistently misidentify people with darker skin tones demonstrate bias, not random error. The systematic nature means certain groups experience worse outcomes repeatedly, creating cumulative harm over time.

AI bias emerges from human prejudices embedded in data and algorithms, creating discriminatory outcomes across many domains. Effective solutions require diverse data, cross-functional teams, systematic testing, human oversight, and compliance with evolving regulations. Organizations that proactively address bias can build fairer AI systems while meeting legal requirements and maintaining public trust.