AI agent perception is the process through which artificial intelligence systems gather, interpret, and process information from their environment to make informed decisions and take actions. Unlike simple rule-based programs that follow predetermined instructions, AI agents with perception capabilities can sense and respond to real-world changes in their surroundings. Modern perception systems integrate multiple types of sensory input, including visual, auditory, and other sensor data, to build a comprehensive understanding of environmental conditions.

AI agent perception process showing data collection, processing, and decision making. Source: AI Planet

The sophistication of AI agent perception extends far beyond basic data collection. Advanced systems employ machine learning algorithms and neural networks to recognize patterns, identify objects, and understand context within complex environments. These capabilities enable AI agents to adapt their behavior based on changing conditions rather than operating solely from fixed programming.

AI agent perception represents a foundational technology that distinguishes truly intelligent systems from traditional automation. The ability to perceive and interpret environmental information in real-time forms the basis for autonomous decision-making across applications ranging from self-driving vehicles to industrial monitoring systems. As artificial intelligence continues to evolve, perception capabilities become increasingly critical for creating systems that can operate effectively in dynamic, unpredictable real-world conditions.

What Is AI Agent Perception

AI agent perception refers to an artificial intelligence system’s ability to gather, interpret, and process information from its environment to make informed decisions and take appropriate actions. This foundational capability enables AI agents to understand their surroundings through various types of sensory inputs, similar to how humans use their five senses to navigate the world.

Unlike human perception, which relies on biological processes, AI agent perception operates through computational processing. AI systems use sensors, cameras, microphones, and other data collection devices to capture environmental information, then apply machine learning algorithms to interpret and analyze the data.

The perception process transforms raw environmental data into structured information that AI agents can use for decision-making. When an AI agent receives visual data from a camera, for example, computer vision algorithms identify objects, detect movement, and recognize patterns within the images. Similarly, speech recognition systems convert audio waves into text that natural language processing models can understand and respond to.

How AI Agent Perception Works

AI agent perception transforms raw environmental data into intelligent actions through a systematic three-stage process. This process mirrors how humans sense, think, and respond to their surroundings, but operates at machine speeds with digital precision.

The perception cycle begins when sensors collect information from the environment. Machine learning algorithms then analyze this data to identify patterns and extract meaningful insights. Finally, the system uses processed information to make decisions and take appropriate actions in real-time.
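The three stages described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference design: the temperature scenario, the `Percept` class, the function names, and the 80 °C limit are all hypothetical choices made for the example.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Percept:
    """Structured summary produced by the processing stage (hypothetical)."""
    avg_temp_c: float
    overheating: bool


def collect(readings):
    """Stage 1: gather raw values. A plain list stands in for a real sensor."""
    return list(readings)


def process(raw, limit_c=80.0):
    """Stage 2: turn raw values into structured information."""
    avg = mean(raw)
    return Percept(avg_temp_c=avg, overheating=avg > limit_c)


def decide(percept):
    """Stage 3: pick an action based on the percept."""
    return "reduce_load" if percept.overheating else "continue"


def perception_cycle(readings):
    """Run one pass of collect -> process -> decide."""
    return decide(process(collect(readings)))


print(perception_cycle([85, 90, 88]))  # hot readings lead to "reduce_load"
print(perception_cycle([60, 65]))      # normal readings lead to "continue"
```

In a real agent this cycle would run continuously, with each stage far more elaborate, but the data flow stays the same.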

Data Collection Through Sensors and Inputs

AI agents gather information from their environment through various input channels, much like human senses but with greater range and precision. Cameras capture visual data including images, video streams, and depth information that help agents understand spatial relationships and identify objects in their surroundings. Microphones record audio data from conversations, environmental sounds, and acoustic signals that provide context about activities and conditions.

IoT sensors measure temperature, pressure, motion, humidity, and other physical properties that create a comprehensive picture of environmental conditions. Digital data streams flow from databases, application programming interfaces, network traffic, and electronic communications. These streams provide structured information about system states, user behaviors, and operational metrics that complement physical sensor data.

Processing and Pattern Recognition

Machine learning algorithms transform raw sensor data into structured information through sophisticated analysis techniques. Pattern matching identifies recurring shapes, sounds, or data signatures that correspond to known objects, events, or conditions in the environment.

Feature extraction isolates important characteristics from complex data streams, separating relevant signals from background noise. Computer vision systems extract edges, textures, and shapes from images, while audio processing identifies speech patterns, tones, and frequencies from sound recordings. Data transformation converts information between different formats and scales to enable comparison and analysis.
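As a rough illustration of feature extraction, the sketch below finds vertical edges in a tiny grayscale image by looking for large brightness jumps between neighboring pixels. Production computer vision systems use far more capable methods; the function name, the threshold of 50, and the sample image are assumptions made for this example.

```python
def horizontal_jumps(image, threshold=50):
    """Return (row, col) positions where brightness changes sharply
    between a pixel and its right-hand neighbor."""
    edges = []
    for r, row in enumerate(image):
        for c in range(len(row) - 1):
            if abs(row[c + 1] - row[c]) >= threshold:
                edges.append((r, c))
    return edges


# A 2x4 grayscale image: dark on the left, bright on the right.
image = [
    [10, 12, 200, 198],
    [11, 13, 201, 199],
]

print(horizontal_jumps(image))  # [(0, 1), (1, 1)]
```

The small pixel-to-pixel variations (10 vs. 12) are treated as noise, while the jump from dark to bright is kept as a feature.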

Neural networks learn to recognize complex relationships that hand-written rules often miss. They process data in layers, with early layers detecting basic features and later layers combining those features into higher-level representations.

Decision Making and Adaptive Response

Processed information flows into decision-making systems that evaluate options and select appropriate responses based on current conditions and programmed objectives. These systems operate autonomously, making choices without human intervention while following learned patterns and rules.

Real-time capabilities enable immediate responses to changing conditions, allowing agents to adjust their behavior as new information becomes available. Feedback loops continuously refine decision-making processes by incorporating results from previous actions into future choices. Adaptive responses allow agents to modify their behavior based on environmental changes and performance outcomes.

Types of AI Agent Perception

AI agent perception operates through several distinct modalities that enable machines to understand their environment. Each perception type processes different kinds of information and serves specific functions in creating intelligent behavior.

Visual Perception and Computer Vision

Visual perception allows AI agents to interpret and understand information from images and video feeds. Computer vision systems process visual data through sophisticated algorithms that can identify patterns, objects, and spatial relationships.

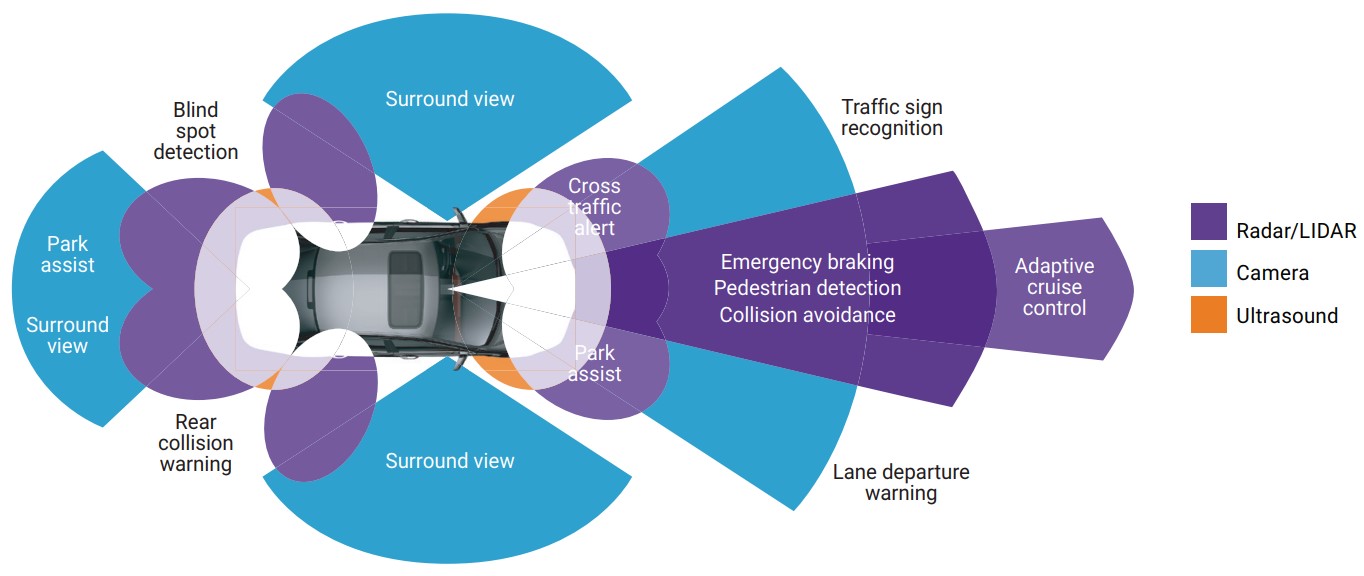

Computer vision applications in autonomous vehicles showing object detection capabilities. Source: Think Autonomous

Manufacturing companies use visual perception systems to inspect products on assembly lines, catching defects that human workers might miss. Security systems employ facial recognition to grant building access or identify individuals in surveillance footage. Autonomous vehicles rely on computer vision to recognize traffic signs, detect pedestrians, and navigate road conditions.

Auditory Perception and Sound Processing

Auditory perception enables AI agents to process and interpret sound information from their environment. Sound processing systems convert audio waves into digital data that machines can analyze for patterns, meaning, and context.

Voice assistants like Alexa and Siri use speech recognition to understand user commands and respond appropriately. Industrial facilities deploy acoustic monitoring systems to detect equipment malfunctions through unusual sound patterns. Healthcare applications analyze cough sounds to identify respiratory conditions or monitor patient breathing patterns.

Tactile and Sensor Based Perception

Tactile perception involves processing information from physical contact and environmental sensors. Touch-based systems gather data about pressure, texture, temperature, and physical properties of objects or environments.

Robotic systems use tactile sensors to handle delicate objects without crushing them, adjusting grip strength based on pressure feedback. Industrial applications monitor temperature sensors to prevent equipment overheating or ensure proper operating conditions. Medical devices employ pressure sensors to deliver precise medication dosages or monitor patient vital signs.
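The grip adjustment described above is a feedback loop. One simple way to sketch it is a proportional controller, shown below. This is an illustrative assumption rather than how any particular robot works; the function name, gain, force limit, and units are hypothetical.

```python
def adjust_grip(current_force, measured_pressure, target_pressure,
                gain=0.5, max_force=10.0):
    """Nudge grip force toward the target contact pressure.

    A proportional step: the correction is the pressure error scaled
    by `gain`. The result is clamped so the gripper never exceeds
    `max_force` or applies negative force.
    """
    error = target_pressure - measured_pressure
    new_force = current_force + gain * error
    return max(0.0, min(max_force, new_force))


print(adjust_grip(2.0, measured_pressure=1.0, target_pressure=3.0))  # 3.0 (too loose, tighten)
print(adjust_grip(2.0, measured_pressure=5.0, target_pressure=3.0))  # 1.0 (too tight, loosen)
```

The clamp is what protects a delicate object: even if the sensor misreports, the commanded force stays within a fixed range.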

Multimodal Perception Integration

Multimodal perception combines information from multiple sensory channels to create comprehensive environmental understanding. Integration systems process visual, auditory, and tactile inputs simultaneously, creating more accurate and robust AI behavior than single-modality approaches.

Multi-sensor fusion architecture combining different sensor types for autonomous systems. Source: Edge AI and Vision Alliance

Autonomous vehicles integrate camera feeds, radar data, and GPS information to navigate safely through complex traffic situations. Smart home systems combine motion sensors, audio detection, and visual monitoring to distinguish between normal household activity and potential security threats.

Real World Applications of AI Agent Perception

AI agent perception transforms abstract technology into practical solutions that address concrete challenges across multiple industries. Organizations worldwide deploy perception-enabled systems to handle complex tasks that require environmental understanding, pattern recognition, and autonomous decision-making capabilities.

Autonomous Vehicles and Transportation

Self-driving cars are among the most visible applications of AI agent perception technology. These vehicles integrate cameras, LiDAR sensors, radar systems, and GPS units to build a comprehensive understanding of traffic environments.

Specific transportation applications include:

- Traffic signal recognition — Cameras identify red lights, green lights, and pedestrian crossing signals while processing their meaning within traffic flow context

- Pedestrian detection — Visual systems track people walking near roadways and predict their movement patterns to prevent collisions

- Lane keeping assistance — Sensors monitor road markings and vehicle position to maintain proper lane positioning during highway driving

- Obstacle avoidance — Multi-sensor fusion detects stationary objects, construction zones, and road debris to plan safe navigation routes

Perception enables safe autonomous operation by combining all recognized elements into holistic scene understanding. When approaching busy intersections, systems process traffic light status, pedestrian movements, other vehicle behaviors, and potential hazards simultaneously.

Healthcare Monitoring and Diagnostics

Healthcare applications leverage AI agent perception for continuous patient monitoring, diagnostic assistance, and treatment optimization. Medical environments present unique challenges due to noisy sensor data, missing information, and the critical nature of accurate interpretation.

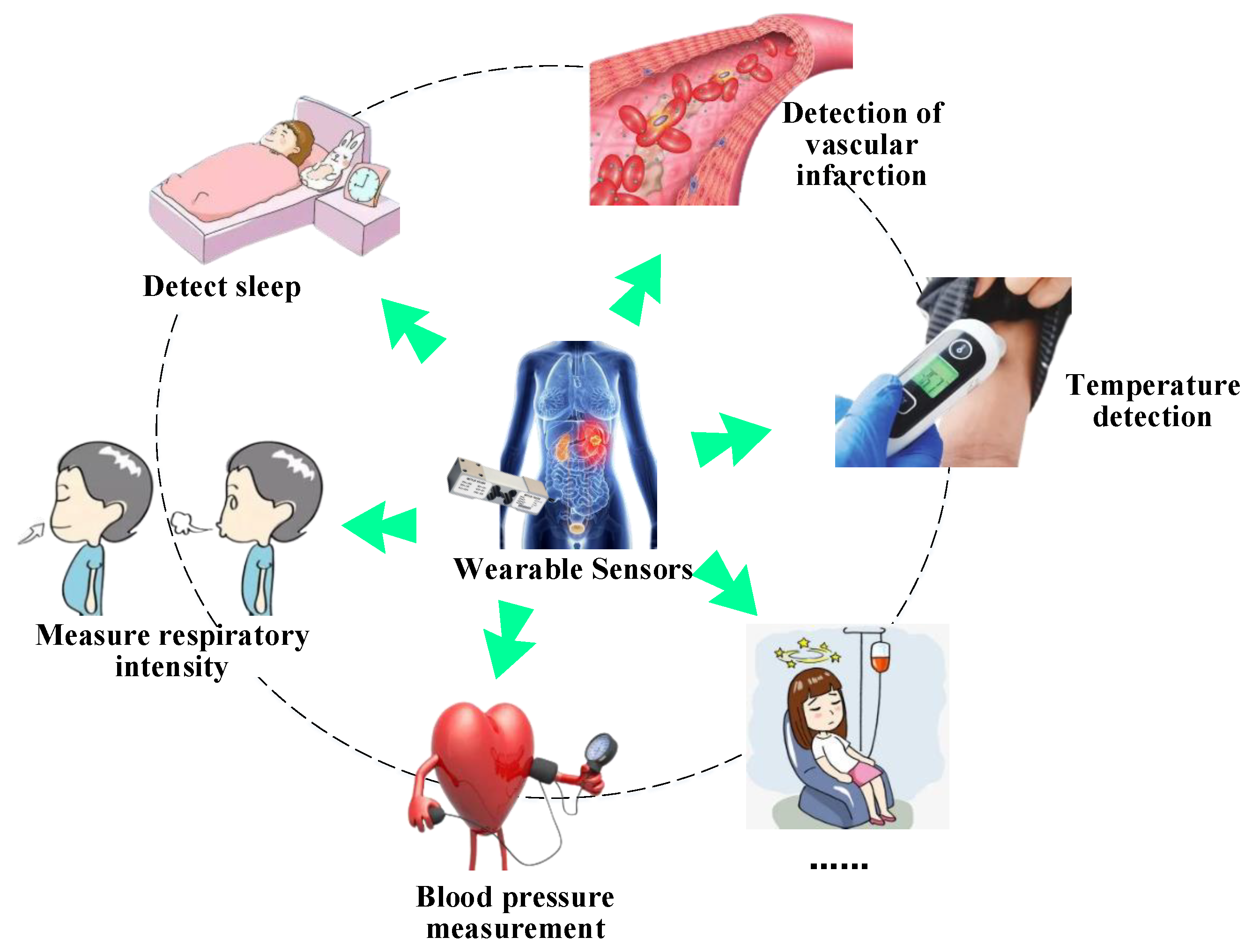

Healthcare AI monitoring system using wearable sensors for patient vital signs. Source: MDPI

Healthcare perception applications include vital sign monitoring through wearable sensors that track heart rate, blood pressure, and oxygen saturation while identifying abnormal patterns. Medical imaging analysis uses computer vision systems to examine X-rays, MRIs, and CT scans to detect tumors, fractures, and other abnormalities. Patient movement tracking through camera systems monitors elderly patients for fall detection and mobility assessment.

Advanced healthcare systems employ reliability-aware sensor weighting mechanisms that dynamically estimate confidence levels for each monitoring device. When sensors generate uncertain or noisy data, the system reduces their influence on medical decision-making processes.
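One common way to weight sensors by reliability is inverse-variance weighting, where noisier sensors contribute less to the fused estimate. The sketch below uses that technique as a stand-in; the article does not say which method such healthcare systems actually use, and the function name and sample values are hypothetical.

```python
def fuse_readings(readings):
    """Combine (value, variance) pairs into one estimate.

    Each reading is weighted by 1 / variance, so a sensor with high
    estimated noise has less influence. Variances must be positive.
    """
    weights = [1.0 / variance for _, variance in readings]
    total = sum(weights)
    weighted_sum = sum(w * value for w, (value, _) in zip(weights, readings))
    return weighted_sum / total


# Two heart-rate sensors: a steady one (variance 1) and a noisy one (variance 4).
print(fuse_readings([(70.0, 1.0), (90.0, 4.0)]))  # 74.0, pulled toward the steadier sensor
```

In practice the variances themselves would be estimated continuously, which is what makes the weighting dynamic.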

Industrial Automation and Manufacturing

Manufacturing environments deploy AI agent perception for predictive maintenance, quality control, and process optimization. Industrial perception systems integrate visual inspection capabilities with sensor monitoring of temperature, vibration, pressure, and operational parameters.

AI-powered quality control system detecting defects on manufacturing production line. Source: Intel Newsroom

Edge AI deployment allows manufacturing systems to process sensor data locally, reducing latency and enabling immediate responses to critical conditions. When perception systems detect patterns indicating impending equipment failure, they automatically schedule maintenance activities to minimize production disruption.

Manufacturing perception applications include:

- Quality control inspection — Vision systems examine products for defects, color variations, and dimensional accuracy on production lines

- Predictive maintenance — Vibration sensors and thermal cameras detect equipment wear patterns before failures occur

- Robotic assembly guidance — Computer vision enables precise part placement and tool positioning during automated assembly

- Worker safety monitoring — Sensors detect when personnel enter hazardous areas and trigger appropriate safety protocols
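The predictive maintenance item above can be illustrated with a simple statistical check: flag a vibration reading that sits far outside the recent baseline. This is a minimal sketch using a z-score test, which is only one of many possible approaches; the function name, the limit of 3 standard deviations, and the sample values are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(baseline, reading, z_limit=3.0):
    """Return True if `reading` is more than `z_limit` standard
    deviations from the mean of `baseline` (needs 2+ baseline values)."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any deviation counts as anomalous.
        return reading != mu
    return abs(reading - mu) / sigma > z_limit


# Hypothetical vibration amplitudes from a healthy machine.
baseline = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95]

print(is_anomalous(baseline, 1.5))   # True: well outside the normal range
print(is_anomalous(baseline, 1.05))  # False: within normal variation
```

A flagged reading would then feed the scheduling step, for example by opening a maintenance ticket.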

Cybersecurity and Threat Detection

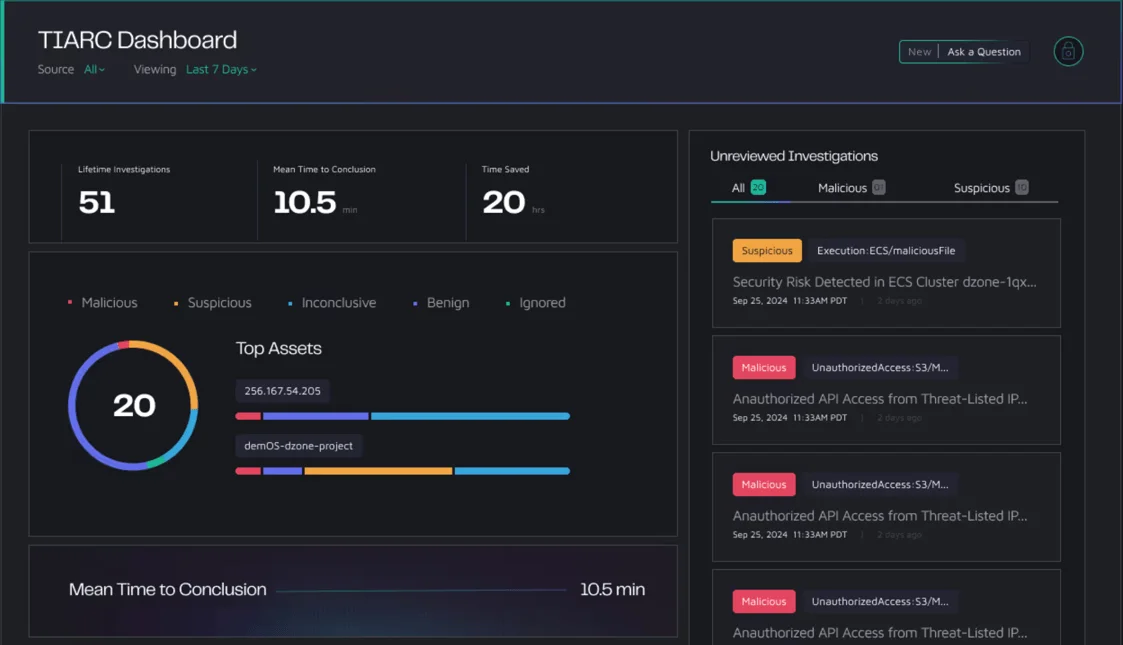

Cybersecurity applications employ AI agent perception for network monitoring, threat detection, and automated response systems. Security perception systems process network traffic, user behavior patterns, system logs, and performance metrics to identify potential threats.

AI-powered cybersecurity platform monitoring network threats and behavioral patterns. Source: SOCRadar

Cybersecurity perception applications include network intrusion detection through traffic analysis systems that identify unusual communication patterns. Malware identification uses behavioral analysis to detect software that exhibits suspicious file system or network activity. User behavior monitoring tracks login patterns, access requests, and application usage to spot compromised accounts.

AI agents detect and neutralize cybersecurity threats by continuously monitoring multiple data sources simultaneously. The perception capabilities extend beyond traditional signature-based detection to include behavioral analysis and pattern recognition across network communications, system performance, and user activities.
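A very small example of behavioral monitoring is counting failed logins inside a sliding time window. The sketch below is illustrative only; the class name, the threshold of 3 failures, and the 60-second window are hypothetical, and real systems combine many more signals.

```python
from collections import deque


class FailedLoginMonitor:
    """Flag an account when too many failed logins fall in a time window.

    Timestamps are in seconds and are assumed to arrive in
    non-decreasing order.
    """

    def __init__(self, max_failures=3, window_s=60):
        self.max_failures = max_failures
        self.window_s = window_s
        self._events = deque()

    def record_failure(self, timestamp_s):
        """Record one failed login; return True if the account looks suspicious."""
        self._events.append(timestamp_s)
        # Drop failures that are older than the window.
        while self._events and timestamp_s - self._events[0] > self.window_s:
            self._events.popleft()
        return len(self._events) > self.max_failures


monitor = FailedLoginMonitor()
print([monitor.record_failure(t) for t in [0, 10, 20, 30]])
# [False, False, False, True]: the fourth failure within 60 s trips the alert
```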

Technical Challenges in AI Agent Perception

Building effective AI agent perception systems presents several significant technical obstacles that developers encounter across different industries and applications. These challenges stem from the complexity of processing real-world data and the demanding requirements of autonomous systems operating in dynamic environments.

Sensor Fusion and Integration Complexity

Combining data from multiple sensors creates substantial coordination challenges for AI systems. When an autonomous vehicle uses cameras, radar, LiDAR, and GPS simultaneously, each sensor operates at different speeds and produces data in different formats. The system must align information from a camera capturing 30 frames per second with radar data updating 50 times per second while GPS provides location updates only once per second.
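One basic step in aligning sensors that run at different rates is matching each camera frame to the radar sample closest in time. The sketch below shows nearest-timestamp matching with integer millisecond timestamps. The function name and the sample timestamps are hypothetical, and real fusion pipelines typically add interpolation and clock-offset correction on top.

```python
from bisect import bisect_left


def nearest_sample(timestamps, t):
    """Return the index of the timestamp closest to `t`.

    `timestamps` must be sorted in ascending order. On a tie, the
    earlier sample wins.
    """
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    before, after = timestamps[i - 1], timestamps[i]
    return i if (after - t) < (t - before) else i - 1


# Radar at 50 Hz produces a sample every 20 ms.
radar_ms = [0, 20, 40, 60, 80]

# Camera at 30 fps produces a frame roughly every 33 ms.
for frame_ms in [0, 33, 67]:
    idx = nearest_sample(radar_ms, frame_ms)
    print(frame_ms, "->", radar_ms[idx])
# 0 -> 0, 33 -> 40, 67 -> 60
```

The residual gap (7 ms in these cases) is exactly the kind of timing mismatch the system has to tolerate or correct for.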

Synchronization issues emerge when sensors experience different processing delays or when network latency affects data transmission. A camera might detect an obstacle at the exact moment a distance sensor measures empty space due to timing mismatches. The system must reconcile these conflicting inputs to create accurate environmental understanding.

Calibration between sensors requires precise measurement and ongoing maintenance. Temperature changes, vibrations, or physical impacts can shift sensor alignments, causing the system to incorrectly map visual data with distance measurements.

Real Time Processing and Latency Requirements

AI perception systems often need to analyze data and respond within tight latency budgets that push computational limits. Autonomous vehicles must process visual data, detect objects, predict movements, and plan responses within milliseconds to avoid collisions. Medical monitoring systems need prompt analysis of patient vital signs to trigger alerts before critical situations develop.

Latency comparison between edge computing and cloud processing for real-time AI applications. Source: ResearchGate

Processing demands grow quickly as data volume and complexity increase. A single high-resolution camera can produce gigabytes of uncompressed data per minute, and adding multiple cameras, audio sensors, and environmental monitors creates data streams that challenge even powerful computing systems.
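The gigabytes-per-minute figure can be sanity-checked with back-of-envelope arithmetic. The sketch below assumes an uncompressed 1920x1080 stream with 3 bytes per pixel at 30 frames per second; these parameters are illustrative assumptions, not values from a specific camera.

```python
def raw_video_rate_bytes_per_s(width, height, bytes_per_pixel, fps):
    """Uncompressed video data rate in bytes per second."""
    return width * height * bytes_per_pixel * fps


rate = raw_video_rate_bytes_per_s(1920, 1080, 3, 30)
gb_per_min = rate * 60 / 1e9  # decimal gigabytes

print(rate)                  # 186624000 bytes per second
print(round(gb_per_min, 2))  # 11.2 GB per minute
```

Compression reduces this substantially for storage and transmission, but the perception pipeline still has to decode and process every pixel.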

Edge computing deployment faces constraints from power consumption, heat generation, and physical space limitations. Placing sufficient processing power in smartphones, vehicles, or industrial equipment requires balancing performance needs with practical engineering constraints.

Data Quality and Environmental Variability

Inconsistent data quality creates reliability problems for AI perception systems. Cameras produce different image quality under varying lighting conditions, while microphones capture audio with background noise levels that change throughout the day. Rain, fog, dust, or electromagnetic interference can degrade sensor performance unpredictably, forcing systems to operate with incomplete or corrupted information.

Environmental conditions change continuously in ways that affect sensor accuracy. Autonomous vehicles encounter rain that obscures cameras, bright sunlight that creates glare, or snow that blocks sensors entirely. Industrial monitoring systems face temperature fluctuations, humidity changes, or vibrations that alter sensor readings beyond expected ranges.

Training data limitations mean AI systems encounter real-world conditions not represented in their learning datasets. A facial recognition system trained primarily on well-lit indoor environments may fail outdoors or in crowded spaces. Speech recognition systems trained on clear audio recordings struggle with accents, background conversations, or poor audio quality from low-cost microphones.

Security, Privacy, and Ethical Considerations

AI governance brings unique challenges around data security, privacy protection, and ethical decision-making that organizations must address from day one. Companies implementing AI systems face complex responsibilities that extend far beyond traditional technology deployments, and frameworks such as ISO/IEC 42001 help them protect both user information and organizational interests.

Enterprise AI implementations handle sensitive data across multiple touchpoints, creating expanded attack surfaces that bad actors can exploit. The interactive nature of AI agents amplifies privacy risks, as systems may process personal information, trade secrets, and confidential business data through various applications and tools.

Data Protection and Privacy Risks

AI systems collect and process vast amounts of personal and business data through multiple channels, creating significant privacy vulnerabilities that require robust protection measures. Modern AI agents can access email communications, financial information, travel schedules, and behavioral patterns, often gaining more comprehensive user profiles than organizations realize.

Data memorization presents a critical security risk where AI models inadvertently store sensitive information in their parameters during training. Both user account information and personal behavioral data can become embedded in language models through fine-tuning processes, making systems vulnerable to data extraction attacks where malicious actors attempt to retrieve stored information from trained models.

Access control mechanisms become complicated when AI agents operate autonomously across multiple systems and applications. Organizations require granular permission frameworks that limit AI access to only necessary data sources while maintaining audit trails of all data interactions.
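A granular permission framework with an audit trail can be sketched as an allowlist check that logs every request, whether granted or denied. This is a simplified illustration; the class name, agent ID, and data source names are hypothetical, and a production system would also need authentication and tamper-resistant log storage.

```python
from datetime import datetime, timezone


class ScopedAccess:
    """Limit an agent to an allowlist of data sources and log every request."""

    def __init__(self, agent_id, allowed_sources):
        self.agent_id = agent_id
        self.allowed_sources = frozenset(allowed_sources)
        self.audit_log = []

    def request(self, source):
        """Return True if access is allowed; record the attempt either way."""
        allowed = source in self.allowed_sources
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": self.agent_id,
            "source": source,
            "allowed": allowed,
        })
        return allowed


access = ScopedAccess("inventory-agent", {"stock_db"})
print(access.request("stock_db"))  # True
print(access.request("email"))     # False, but still logged
print(len(access.audit_log))       # 2
```

Logging denied requests matters as much as logging granted ones, since repeated denials can indicate a misconfigured or compromised agent.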

User consent and transparency present ongoing challenges as AI systems often collect information beyond what users understand or explicitly authorize. The complexity and scope of AI data collection can make consent agreements unclear, increasing the risk of unintended privacy violations where users inadvertently surrender control of sensitive information.

Bias Mitigation and Fairness Concerns

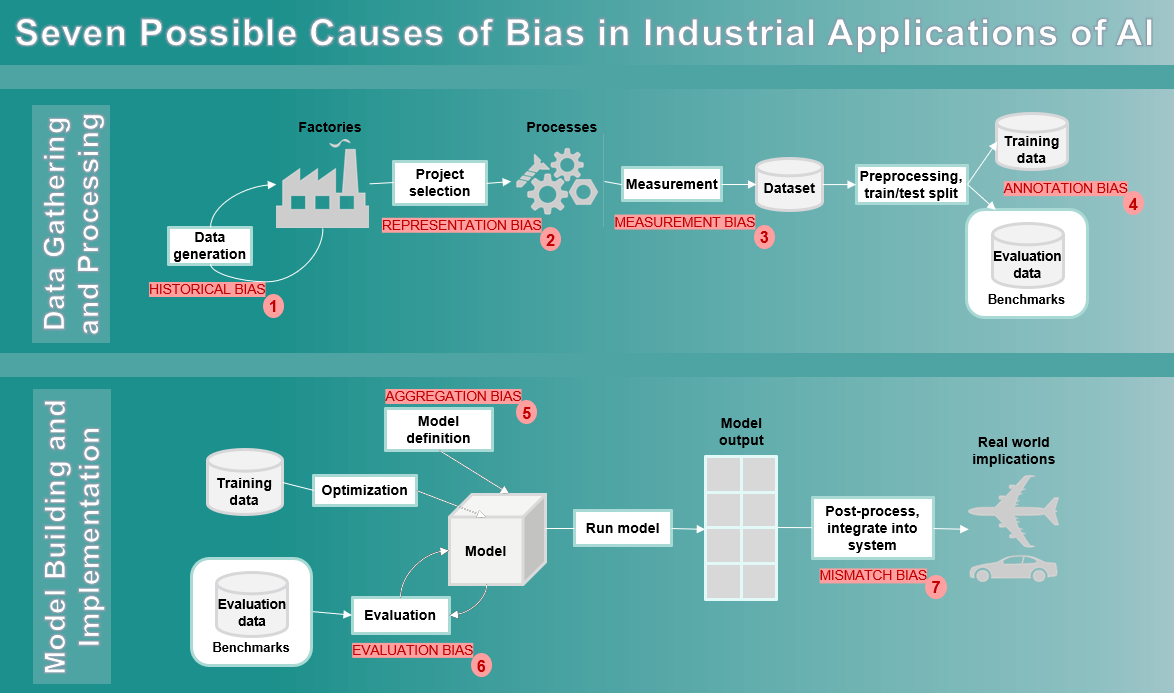

AI systems can perpetuate and amplify existing biases present in training data, leading to unfair outcomes for different demographic groups. Research shows that even small biases in training data become magnified through AI algorithms, creating feedback loops where biased AI outputs influence human behavior, which in turn reinforces the original bias.

Seven causes of AI bias and mitigation strategies in industrial applications. Source: Siemens Digital Industries

The NIST AI Risk Management Framework identifies three major bias categories: systemic bias present in datasets and organizational processes, computational bias emerging from algorithmic processes and non-representative samples, and human-cognitive bias arising from individual decision-making patterns. Each type can occur without intentional discrimination, making bias detection particularly challenging.

Testing and validation approaches require systematic evaluation of AI outputs across different demographic groups and use cases. Organizations conduct bias audits using diverse test datasets that represent various population segments, measuring system performance for accuracy, fairness, and consistency across different groups.
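A basic bias audit compares a performance metric across groups. The sketch below computes accuracy per group and the largest gap between groups. Accuracy is only one of several fairness-relevant metrics, and the function names, group labels, and records here are hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group.

    `records` is an iterable of (group, predicted, actual) tuples.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    return max(per_group.values()) - min(per_group.values())


records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 0), ("A", 1, 1),
    ("B", 1, 0), ("B", 0, 0),
]

per_group = accuracy_by_group(records)
print(per_group)                    # {'A': 0.75, 'B': 0.5}
print(max_accuracy_gap(per_group))  # 0.25
```

What counts as an acceptable gap is a policy decision, which is one reason the human oversight mentioned in this section remains important.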

Algorithmic fairness techniques include pre-processing methods that modify training data to reduce bias, in-processing approaches that adjust learning algorithms during training, and post-processing methods that calibrate model outputs to achieve fairer results. Human oversight mechanisms provide checkpoints where subject matter experts review AI decisions for potential bias, particularly in high-stakes applications affecting individual outcomes.

Regulatory Compliance and Governance

Organizations deploying AI systems must navigate evolving regulatory landscapes that vary by industry, geography, and application domain. The EU AI Act establishes comprehensive risk-based requirements for AI systems, including prohibitions on certain practices deemed to pose unacceptable risk and strict oversight requirements for high-risk systems that significantly affect individual rights or safety.

Data protection regulations like GDPR and CCPA require organizations to disclose data collection practices and provide consumers with opt-out choices, creating compliance challenges when AI systems operate autonomously or across multiple integrated platforms. Organizations must track data flows through complex AI architectures while maintaining transparency about how personal information influences automated decision-making.

Industry-specific regulations add further compliance layers, particularly in healthcare, financial services, and transportation sectors where AI decisions directly impact consumer welfare. Banking regulations require robust model risk management practices, while healthcare AI systems must comply with HIPAA privacy requirements and FDA clearance or approval processes for medical applications.

Governance frameworks establish organizational structures and processes for responsible AI deployment throughout the system lifecycle. Effective frameworks include ethics review boards that evaluate AI projects before deployment, risk assessment procedures that identify potential harms and mitigation strategies, and incident response protocols for addressing AI-related problems after they occur.

Future of AI Agent Perception Technology

AI agent perception technology is advancing rapidly through several key trends that will reshape how businesses operate and make decisions. Organizations across industries are beginning to understand the practical implications of these developments for their daily operations.

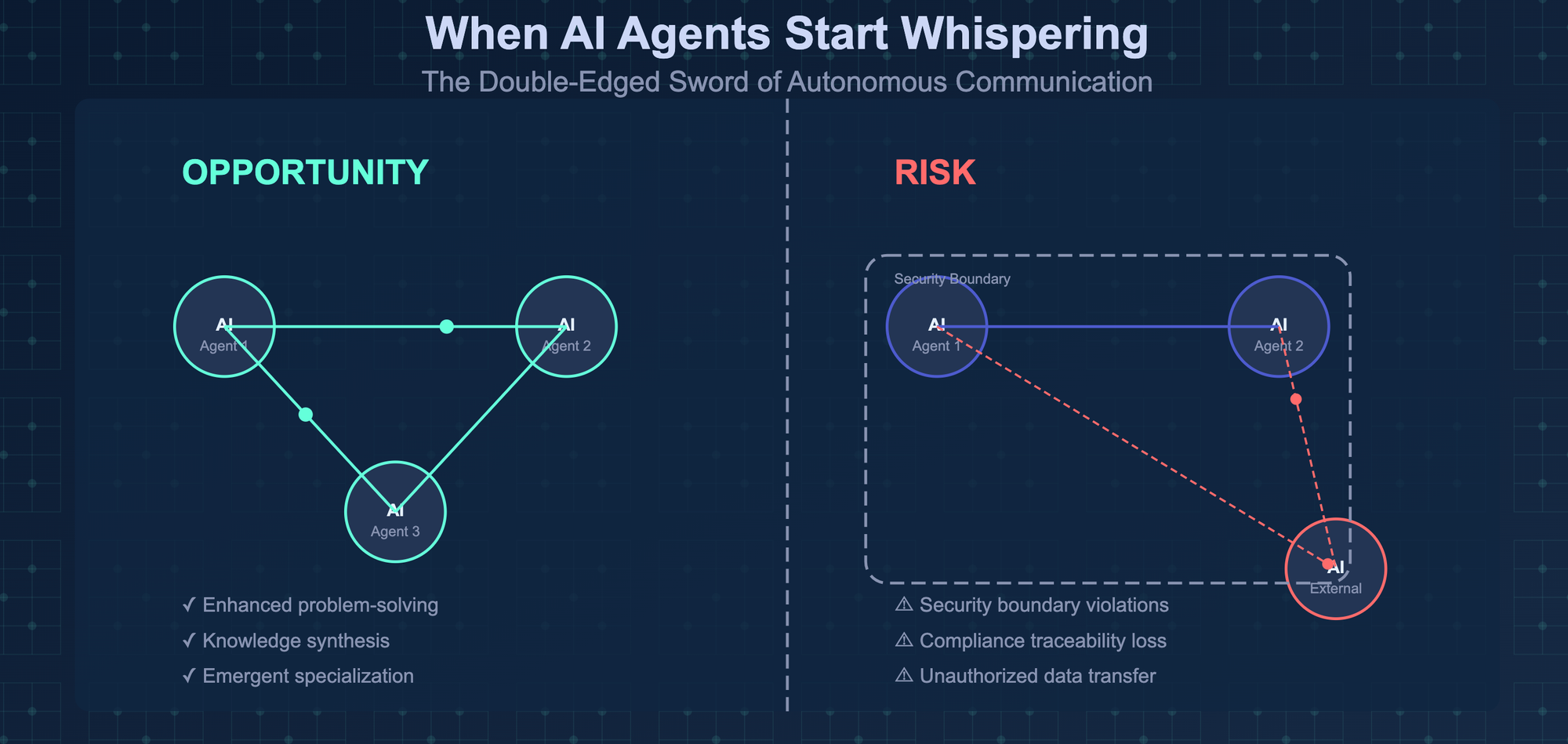

Future AI agent networks enabling autonomous communication and coordination. Source: Deepak Gupta

The next generation of AI systems will operate with greater independence from human oversight. Agentic AI systems can perceive their environment, make decisions, and take actions without constant human intervention. These systems learn continuously from their experiences and adapt their behavior based on environmental feedback.

For businesses, this means AI agents can handle complex, multi-step processes independently. A manufacturing AI agent might detect equipment vibrations that indicate potential failure, schedule maintenance automatically, and adjust production schedules to minimize downtime. Unlike current systems that require human confirmation at each step, future agents will complete entire workflows autonomously.

Edge Computing Integration

AI perception systems are moving from centralized cloud processing to local edge devices. Edge AI allows perception systems to process sensory data directly on location rather than sending information to remote servers for analysis.

This shift can reduce response times from seconds to milliseconds and lessens dependence on internet connectivity. A retail store’s inventory management system can recognize when products are running low without waiting for cloud processing, then place the reorder automatically. Autonomous vehicles can make split-second decisions about braking or steering without waiting for data to travel to distant servers.

Multimodal Foundation Models

Future perception systems will combine different types of sensory input through unified architectures rather than separate processing systems for vision, audio, and text. Large language models and large image models are merging into integrated platforms that understand visual, textual, and auditory information simultaneously.

A customer service AI agent will simultaneously analyze a customer’s facial expressions through video, tone of voice through audio, and words through text to provide more accurate responses. Security systems will correlate visual movement patterns with audio signatures and access logs to identify potential threats more accurately than single-sensor approaches.

Organizations will deploy networks of AI agents that share perception data and coordinate responses. Rather than relying on individual agents working in isolation, distributed systems allow multiple agents to contribute different perspectives on the same environment.

Strategic Implementation of AI Agent Perception

Organizations considering AI agent perception systems face several fundamental business decisions that determine project success and long-term value. The complexity of perception systems requires careful evaluation of technical approaches, resource allocation, and integration strategies before implementation begins.

AI agent perception projects typically require 6 to 18 months for initial deployment, depending on system complexity and data requirements. Organizations must allocate resources for data collection and preparation, which often represents 60 to 80 percent of total project effort. Technical teams require specialized skills in machine learning, sensor integration, and system architecture that may necessitate training or external expertise.

Budget considerations extend beyond initial development costs to include ongoing operational expenses, maintenance requirements, and system updates. Organizations should plan for iterative development cycles as perception systems improve through continuous learning and environmental adaptation.

For small businesses considering AI perception solutions, working with experienced consultants can provide access to specialized expertise without requiring full-time technical staff. An AI Center of Excellence approach helps organizations develop structured capabilities for managing perception system implementations and ongoing operations.

Technical Architecture Decisions

Implementation approaches vary significantly in cost, complexity, and customization capabilities. Cloud-based solutions offer low initial investment and high scalability but involve shared security responsibility and subscription-based ongoing costs. On-premise deployments provide full data control and high customization but require substantial infrastructure investment and ongoing maintenance.

Custom development delivers complete control over system design but demands very high initial investment and extended development timelines. Pre-built solutions enable rapid deployment with limited customization and vendor-dependent security frameworks.

Data Strategy and Governance

Successful perception systems require comprehensive data strategies addressing collection, storage, processing, and governance requirements. Organizations must establish data quality standards, privacy protection protocols, and regulatory compliance frameworks before system deployment.

AI data management becomes critical for perception systems that process large volumes of sensor data continuously. Data diversity and representativeness directly impact perception system accuracy and fairness. Organizations should audit existing data sources, identify gaps in coverage, and establish procedures for continuous data quality monitoring and bias detection.

Performance monitoring ensures perception systems maintain accuracy and reliability over time. Organizations implement automated alerting systems that detect degradation in system performance or unexpected behavioral changes. Regular model retraining and system updates maintain perception accuracy as environmental conditions and business requirements evolve.
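An automated alert for performance degradation can be as simple as tracking accuracy over a rolling window of recent predictions. The sketch below is one minimal way to do that; the class name, window size, and accuracy threshold are hypothetical, and it assumes ground-truth labels eventually become available for comparison.

```python
from collections import deque


class AccuracyMonitor:
    """Alert when accuracy over the most recent predictions drops too low."""

    def __init__(self, window=100, min_accuracy=0.9):
        self.results = deque(maxlen=window)  # oldest results fall off automatically
        self.min_accuracy = min_accuracy

    def record(self, was_correct):
        """Store one outcome; return True if an alert should fire.

        No alert fires until the window is full, to avoid noisy
        alerts based on only a handful of predictions.
        """
        self.results.append(bool(was_correct))
        window_full = len(self.results) == self.results.maxlen
        accuracy = sum(self.results) / len(self.results)
        return window_full and accuracy < self.min_accuracy


monitor = AccuracyMonitor(window=4, min_accuracy=0.75)
print([monitor.record(x) for x in [True, True, False, True, False]])
# [False, False, False, False, True]: accuracy over the last 4 falls to 0.5
```

An alert like this is a natural trigger for the retraining step described above.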

FAQs About AI Agent Perception

How does AI agent perception differ from human sensory perception?

AI agent perception processes data through computational algorithms rather than biological systems. While humans use five senses that operate through neural networks in the brain, AI agents use digital sensors like cameras, microphones, and environmental monitors that feed data into machine learning algorithms.

AI systems can process multiple sensor inputs simultaneously at speeds much faster than human perception. However, human perception includes emotional and contextual understanding that current AI systems struggle to replicate. Humans can make intuitive leaps and pick up subtle social cues that current AI perception systems often miss.

What types of sensors do AI perception systems typically use?

AI perception systems integrate various sensor types depending on their application. Visual sensors include standard cameras, infrared cameras, and depth sensors like LiDAR. Audio sensors range from standard microphones to specialized acoustic monitoring equipment. Environmental sensors measure temperature, humidity, pressure, motion, and chemical composition.

IoT sensors provide data about equipment status, energy consumption, and operational parameters. GPS systems offer location and positioning data. Accelerometers and gyroscopes track movement and orientation. Touch sensors detect pressure, texture, and physical contact with objects or surfaces.

Can AI agent perception work without internet connectivity?

Yes, AI agent perception can operate without internet connectivity through edge computing approaches. Edge AI processes sensor data locally on the device rather than sending information to cloud servers for analysis. This enables real-time responses even when internet connections are unavailable or unreliable.

Local processing reduces latency and improves privacy by keeping sensitive data on the device. However, edge computing requires more powerful hardware on the device and may have limitations in processing complex algorithms compared to cloud-based systems. Many AI perception systems use hybrid approaches that combine local processing for immediate responses with cloud processing for more complex analysis.
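The hybrid approach can be sketched as a routing rule: handle a task on the device when the cloud is unreachable or too slow for the task's deadline, and send it to the cloud otherwise. The function name and the millisecond figures below are illustrative assumptions, not measurements.

```python
def choose_processor(deadline_ms, cloud_round_trip_ms, cloud_available=True):
    """Pick where to run a perception task.

    Use the edge device when the cloud is unavailable or when the
    network round trip alone would use up the response deadline.
    """
    if not cloud_available or cloud_round_trip_ms >= deadline_ms:
        return "edge"
    return "cloud"


print(choose_processor(deadline_ms=10, cloud_round_trip_ms=80))    # edge: braking-style decision
print(choose_processor(deadline_ms=500, cloud_round_trip_ms=80))   # cloud: deeper analysis can wait
print(choose_processor(500, 80, cloud_available=False))            # edge: no connectivity
```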

How accurate are current AI perception systems compared to human perception?

AI perception accuracy varies significantly depending on the specific task and environmental conditions. In controlled environments with good lighting and clear audio, AI systems can match or exceed human accuracy for specific tasks like object recognition or speech transcription.

However, AI systems struggle with contextual understanding and adapting to unexpected situations where humans excel. A human can recognize a partially obscured stop sign in poor lighting conditions, while an AI system might fail to detect it. AI perception works best in structured environments with predictable conditions and clear data inputs.

What industries benefit most from AI agent perception technology?

Manufacturing industries use AI perception for quality control, predictive maintenance, and robotic automation. Healthcare applications include patient monitoring, medical imaging analysis, and diagnostic assistance. Transportation companies deploy perception systems in autonomous vehicles and logistics optimization.

Retail organizations implement AI perception for inventory management, customer behavior analysis, and security monitoring. Financial services use perception technology for fraud detection and automated document processing. Agriculture applications include crop monitoring, pest detection, and automated harvesting systems.

Conclusion

AI agent perception represents a fundamental shift in how machines understand and interact with the world around them. This technology enables artificial intelligence systems to gather information through multiple sensors, process complex environmental data, and make autonomous decisions based on real-time conditions.

The practical applications span across industries, from autonomous vehicles navigating busy streets to healthcare systems monitoring patient vital signs. Manufacturing facilities use perception systems for quality control and predictive maintenance, while cybersecurity applications detect threats through behavioral analysis of network traffic and user activities.

Technical challenges remain significant, including sensor fusion complexity, real-time processing requirements, and data quality issues. Organizations must also address privacy concerns, bias mitigation, and regulatory compliance when implementing perception systems. Despite these challenges, the technology continues advancing through edge computing integration, multimodal foundation models, and distributed agent networks.

Success in implementing AI agent perception requires careful planning, appropriate technical architecture decisions, and comprehensive data governance strategies. Organizations that understand both the capabilities and limitations of perception technology will be better positioned to deploy these systems effectively and responsibly.

As AI agent perception technology matures, it will become increasingly important for organizations to develop expertise in this area. The ability to perceive and understand environmental conditions forms the foundation for truly autonomous AI systems that can operate independently in complex, real-world situations.