When artificial intelligence systems make decisions that affect people’s lives, understanding how those decisions happen becomes critical. AI transparency provides visibility into how AI systems work, what data they use, and why they produce specific outcomes. Unlike traditional software where programmers can trace each step, AI systems often operate as “black boxes” where the decision-making process remains hidden from human understanding.

The complexity of modern AI creates significant challenges for organizations and regulators who need to validate system behavior, diagnose errors, and ensure compliance with laws. AI systems now influence hiring decisions, loan approvals, medical diagnoses, and criminal justice outcomes. Without transparency, stakeholders cannot determine whether these systems operate fairly, accurately, or safely.

AI transparency has evolved from a technical preference to a regulatory requirement in many jurisdictions. The EU’s AI Act mandates specific transparency obligations for AI system providers and deployers. Organizations that deploy AI systems must now demonstrate not just that their systems work, but how they work and why their decisions can be trusted.

What Is AI Transparency

AI transparency refers to how open and understandable an AI system is to the people who interact with it. The National Institute of Standards and Technology’s AI Risk Management Framework lists accountability and transparency among the characteristics of trustworthy AI, treating them as properties that help stakeholders understand, evaluate, and trust AI systems across contexts and applications.

Unlike traditional software transparency, AI transparency addresses systems that learn patterns from data rather than following explicit programmed rules. Modern AI systems, particularly deep learning models, process information through multiple layers of interconnected computations that create complex decision pathways. These pathways become difficult or impossible for humans to follow without specialized tools and techniques.

AI transparency includes four primary levels. Data transparency documents data origins, collection methods, and processing steps to identify potential biases and ensure data quality. Algorithmic transparency involves making AI logic and decision-making processes understandable to relevant stakeholders.

Interaction transparency addresses how AI systems communicate with users, including clear presentation of information and limitations. Social transparency examines AI’s broader impact on individuals and society, particularly regarding fairness and accountability.

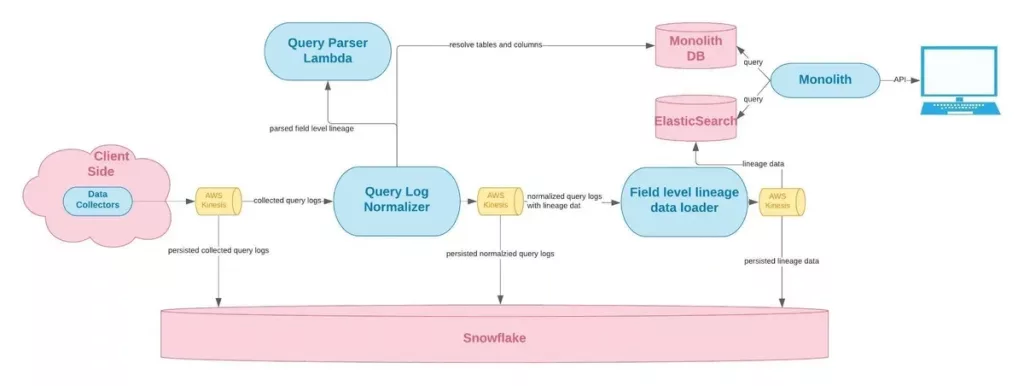

Data Transparency Shows Where Information Comes From

Data transparency involves providing visibility into the sources, quality, and collection methods of training data used to build AI systems. Organizations document where their data comes from, how it was gathered, and what steps were taken to ensure accuracy and completeness.

This type of transparency helps identify potential biases in training datasets and enables stakeholders to evaluate whether AI systems were trained on appropriate information. For example, a hiring AI system’s data transparency might reveal that training data came primarily from resumes of successful employees at tech companies, which could indicate bias against candidates from other industries.

Key components include:

- Data lineage — tracking the complete journey of data from its original source through all processing steps

- Data governance — documenting policies and procedures for data collection, storage, and quality management

- Data usage documentation — recording how data was preprocessed, cleaned, and transformed before training
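
The components above can be captured in a small lineage record. The following is a minimal sketch, not a standard format: the class names, field names, and example dataset are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class LineageStep:
    """One processing step applied to a dataset (illustrative fields)."""
    name: str          # short identifier, e.g. "deduplicate"
    description: str   # what the step did, in plain language
    rows_in: int       # row count before the step
    rows_out: int      # row count after the step


@dataclass
class DatasetLineage:
    """Records where a dataset came from and how it was transformed."""
    source: str
    collection_method: str
    steps: list = field(default_factory=list)

    def add_step(self, name: str, description: str, rows_in: int, rows_out: int) -> None:
        self.steps.append(LineageStep(name, description, rows_in, rows_out))

    def summary(self) -> str:
        """Render the lineage as a human-readable report."""
        lines = [f"Source: {self.source} (collected via {self.collection_method})"]
        for number, step in enumerate(self.steps, start=1):
            dropped = step.rows_in - step.rows_out
            lines.append(
                f"{number}. {step.name}: {step.rows_in} -> {step.rows_out} rows "
                f"({dropped} dropped): {step.description}"
            )
        return "\n".join(lines)


# Hypothetical dataset for a hiring model.
lineage = DatasetLineage(
    source="internal applicant tracking export",
    collection_method="batch export",
)
lineage.add_step("deduplicate", "removed repeated applications", 10_000, 9_400)
lineage.add_step("filter_incomplete", "dropped records missing job history", 9_400, 8_900)
print(lineage.summary())
```

Recording row counts at each step makes it easier to spot where a filter may have removed a disproportionate share of one group of records.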

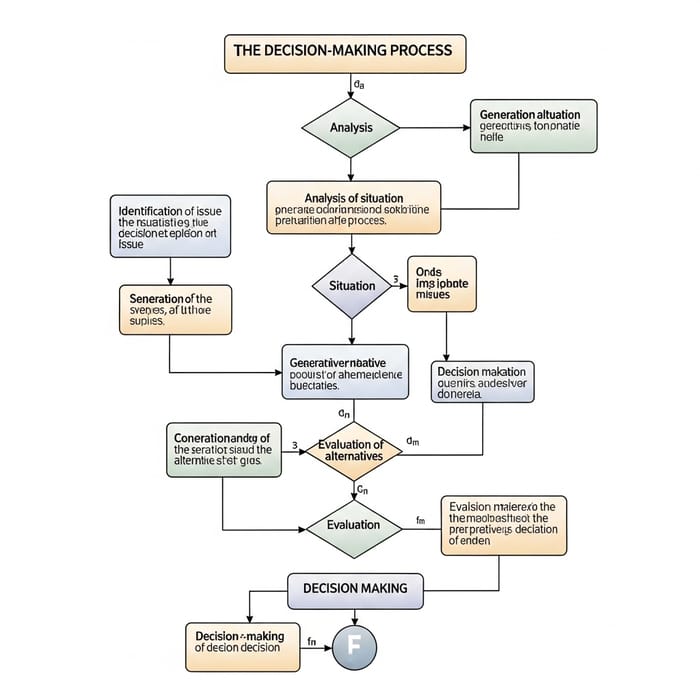

Algorithmic Transparency Explains How AI Makes Decisions

Algorithmic transparency focuses on understanding how AI algorithms process information and arrive at decisions. Rather than accepting AI outputs without explanation, this transparency reveals the computational steps and logical pathways that lead to specific results.

Organizations achieve algorithmic transparency by documenting their model architectures, explaining decision-making logic, and providing rationales for choosing specific algorithms. A medical diagnostic AI might explain that it identified pneumonia by analyzing lung opacity patterns, texture variations, and comparison with thousands of similar cases.

Essential elements include:

- Model architecture disclosure — describing the structure and computational components that make up AI systems

- Decision logic explanation — providing clear descriptions of how algorithms weigh different factors

- Algorithm selection rationale — documenting why specific algorithms were chosen over alternatives

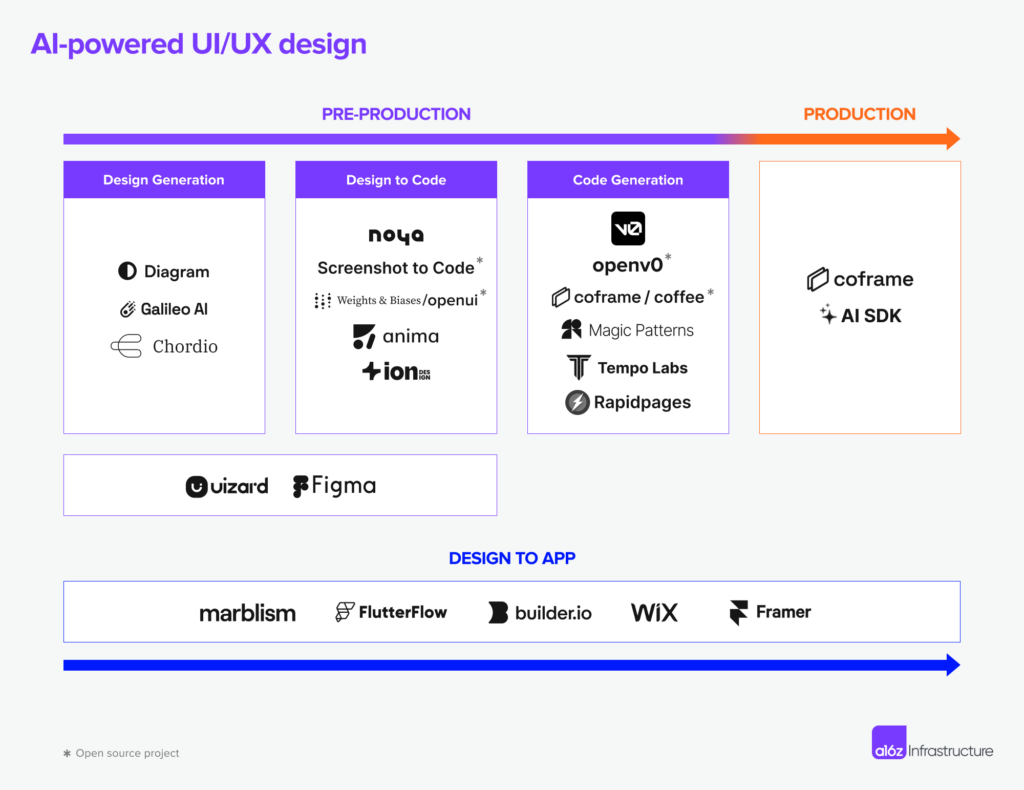

Interaction Transparency Keeps Users Informed

Interaction transparency ensures clear communication about AI capabilities, limitations, and when users are engaging with AI systems. Users receive explicit notification when they interact with AI rather than human agents, along with accurate information about what the AI system can accomplish.

This prevents deception and helps users form appropriate expectations about AI performance. Customer service chatbots exemplify interaction transparency when they clearly identify themselves as AI assistants and explain what types of questions they can answer effectively.

Core aspects include:

- User notification requirements — clearly identifying when people interact with AI systems

- Capability disclosure — providing accurate descriptions of what AI systems can and cannot do

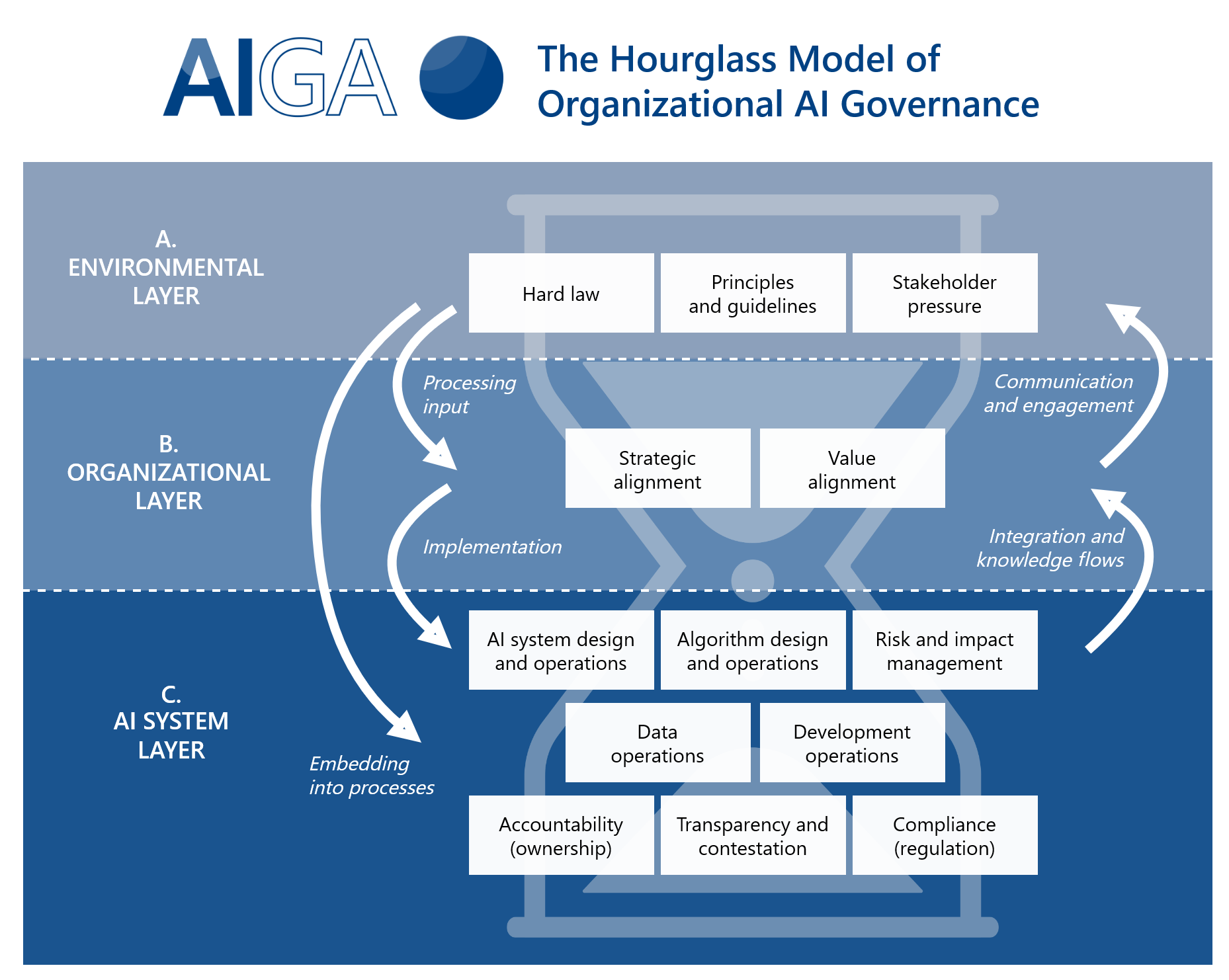

Organizational Transparency Shows Who’s Responsible

Organizational transparency addresses how companies and institutions manage AI governance, establish policies, and assign accountability for AI systems. Organizations document their decision-making structures, risk management approaches, and oversight mechanisms for AI development and deployment.

This enables stakeholders to understand who makes decisions about AI systems and how those decisions align with stated policies and values. When problems arise with AI systems, organizational transparency makes it clear who bears responsibility for fixes and improvements.

Key components include:

- Responsibility assignment — clearly defining who is accountable for AI system development and oversight

- Organizational AI policies — publishing governance frameworks and operational procedures that guide AI use

Why AI Transparency Matters for Organizations

AI transparency delivers value through increased stakeholder confidence, regulatory compliance, and improved decision-making capabilities. Organizations with transparent AI systems can see higher user adoption and fewer operational disruptions than those relying on opaque systems.

Transparent AI systems enable better performance monitoring through detailed visibility into model behavior and accuracy metrics. Organizations can identify performance degradation, optimize system parameters, and maintain consistent quality standards when they understand how their AI systems operate.

Building Trust Through Understanding

Transparency drives user adoption by eliminating uncertainty about AI system behavior and decision-making processes. When employees, customers, and partners understand how AI systems operate, they demonstrate greater willingness to engage with and rely on AI-driven processes.

Trust functions as a competitive advantage in AI implementation by reducing organizational resistance to technology adoption. Companies with transparent AI systems report faster deployment timelines and higher employee engagement during AI rollouts compared to organizations using black-box approaches.

Stakeholder confidence increases when organizations provide clear explanations of AI system capabilities and limitations. Users who receive transparent information about AI decision factors show higher satisfaction rates and generate fewer support requests related to system confusion or mistrust.

Meeting Regulatory Requirements

Emerging AI regulations require organizations to provide specific levels of transparency in AI system development and deployment. The EU AI Act establishes mandatory disclosure requirements for high-risk AI applications, while the NIST AI Risk Management Framework offers voluntary guidance that many organizations treat as standard practice.

Regulatory frameworks focus on transparency as a mechanism for accountability and public safety. Organizations operating in regulated industries face increasing scrutiny regarding AI system explainability, data governance, and decision audit trails.

Compliance requirements vary by industry and geographic location, creating complex obligations for multinational organizations. Financial services, healthcare, and employment sectors face the most stringent transparency mandates, with penalties for non-compliance including substantial fines and operational restrictions.

Reducing Risks and Improving Performance

Transparency enables early identification of AI system issues before they escalate into operational failures or legal liabilities. Organizations with comprehensive transparency frameworks detect bias, accuracy degradation, and system errors faster than organizations using opaque monitoring approaches.

Better decision-making results from combining human expertise with transparent AI insights. Teams that understand AI reasoning processes make more accurate strategic choices and avoid costly mistakes that result from blind reliance on automated recommendations.

Risk mitigation through transparency includes improved incident response capabilities and reduced system downtime. Clear understanding of AI system behavior enables faster troubleshooting and more effective corrective actions when problems occur.

How AI Transparency Differs From Related Concepts

Understanding the differences between transparency, explainability, and interpretability helps organizations choose the right approach for their specific needs and constraints.

Transparency refers to complete openness about how an AI system operates, including data sources, training methods, and decision processes. Organizations achieve transparency by publishing detailed documentation about how their systems were built and trained.

Explainability focuses on understanding specific outputs or decisions. An explainable AI system can provide reasons for individual predictions or recommendations, such as explaining why a loan application was rejected.

Interpretability addresses how understandable the AI model itself is to humans. Highly interpretable models use simple logic that humans can follow step by step, while complex models may require specialized explanation techniques.

When Each Approach Works Best

Transparency works best in regulatory compliance situations, where organizations must demonstrate responsible AI practices, and in public sector applications, where citizens have a right to understand government decision-making.

Explainability works best in customer-facing applications, where users want to understand individual decisions, and in healthcare diagnostics, where doctors need to validate AI recommendations.

Interpretability works best in medical diagnosis, where doctors must understand the reasoning process completely, and in safety-critical systems, where engineers need to predict AI behavior in all scenarios.

Key Components of Transparent AI Systems

Transparent AI systems require systematic approaches that cover the entire AI lifecycle. Each component serves a specific purpose in making AI systems understandable to different stakeholders, including technical teams, business users, and external regulators.

Organizations implement transparency through documentation standards, monitoring systems, communication protocols, and technical infrastructure that work together to provide comprehensive visibility into AI operations.

Documentation and Governance Requirements

Documentation requirements span the complete AI lifecycle, from initial data collection through model retirement. Organizations create standardized documentation that captures essential information in formats that stakeholders can understand and use for decision-making.

Governance frameworks establish the policies and procedures that guide transparent AI development and deployment. These frameworks define roles, responsibilities, and standards for maintaining documentation throughout the system lifecycle.

Key documentation includes:

- Model cards — standardized documents describing AI system capabilities, limitations, and performance metrics

- Data sheets — comprehensive records of training data sources, collection methods, and known quality issues

- Risk assessments — systematic evaluations of potential harms and mitigation strategies
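
As an illustration of the first item, a model card can be kept as structured data and exported for publication. This is a minimal sketch; the field names, the model, and the metric values are hypothetical and do not follow any particular published template.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """A minimal model card (illustrative fields, not a formal schema)."""
    model_name: str
    version: str
    intended_use: str
    training_data: str
    metrics: dict = field(default_factory=dict)      # metric name -> value
    limitations: list = field(default_factory=list)  # known weaknesses

    def to_json(self) -> str:
        """Serialize the card so it can be published or stored in a registry."""
        return json.dumps(asdict(self), indent=2)


# All values below are made up for the example.
card = ModelCard(
    model_name="resume-screener",
    version="1.2.0",
    intended_use="Rank applications for recruiter review; not for automatic rejection.",
    training_data="Historical applications, 2019-2023, technology sector only.",
    metrics={"accuracy": 0.87, "recall": 0.81},
    limitations=["Not validated for candidates from non-technology industries."],
)
print(card.to_json())
```

Keeping the card as data rather than free text lets the same record feed a public page, an internal registry, and an audit export.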

Monitoring and Auditing Systems

Continuous monitoring systems track AI performance after deployment to detect changes that could affect system reliability or fairness. These systems generate automated alerts when performance metrics fall outside acceptable ranges.

Audit trails provide comprehensive records of all system activities, decisions, and changes that enable retrospective analysis and compliance verification. Organizations implement monitoring frameworks that capture both technical performance metrics and business impact measures.

Essential monitoring elements include:

- Performance tracking — automated measurement of accuracy and reliability metrics across different user segments

- Audit trails — complete logs of system decisions and administrative changes with timestamps

- Drift detection — algorithms that identify when model behavior changes significantly from baseline patterns
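
One common way to implement the drift detection item is the population stability index (PSI), which compares the distribution of a score or feature in production against a baseline sample. The sketch below is one possible approach under stated assumptions: the ten-bin layout is arbitrary, and the 0.25 alert threshold is a widely used rule of thumb rather than a requirement from any framework.

```python
import math


def population_stability_index(baseline, current, bins=10):
    """Compare two samples of a numeric value; larger PSI means more drift."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all baseline values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p = proportions(baseline)
    q = proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


baseline_scores = [i / 100 for i in range(100)]       # scores spread over 0.00 to 0.99
shifted_scores = [0.5 + i / 200 for i in range(100)]  # scores squeezed into the upper half

ALERT_THRESHOLD = 0.25  # assumed rule-of-thumb threshold
psi = population_stability_index(baseline_scores, shifted_scores)
if psi > ALERT_THRESHOLD:
    print(f"Drift alert: PSI = {psi:.2f}")
```

In practice the baseline would be a sample captured at validation time, and the check would run on a schedule against recent production inputs or outputs.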

User Communication Standards

User communication protocols define how AI systems identify themselves and explain their capabilities to people who interact with them. These protocols address different communication contexts, from automated notifications to detailed explanation requests.

Effective communication protocols use language appropriate for the intended audience while providing sufficient information for informed decision-making. Organizations develop standardized templates that ensure consistent communication across different AI applications.

Communication standards include:

- Notification requirements — consistent methods for informing users when they interact with AI systems

- User education — programs that help users understand AI capabilities and limitations

- Explanation interfaces — user-friendly tools for requesting and receiving AI decision explanations

Essential Tools for AI Transparency

Organizations use various tool categories to make AI systems more transparent and understandable. The transparency tool landscape includes specialized platforms for model interpretation, documentation management, continuous monitoring, and compliance management.

These tools work together to create comprehensive transparency frameworks that help organizations understand, explain, and govern their AI systems effectively.

Model Explanation Tools

Model explanation tools help users understand how AI systems make decisions by analyzing model behavior and providing interpretable outputs. These tools address the black box problem in AI by revealing the factors that influence specific predictions.

Explanation tools fall into two main categories: model-agnostic methods that work with any AI model and model-specific approaches designed for particular algorithms. Popular tools include LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations).

Key explanation methods include:

- Feature importance analysis — identifying which input variables most strongly influence predictions

- Local explanations — explaining individual predictions by showing relevant factors for specific cases

- Global explanations — revealing overall model behavior patterns across entire datasets
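
To make feature importance analysis concrete, the sketch below implements permutation importance, a model-agnostic method: shuffle one input column at a time and measure how much accuracy drops. It is a simplified illustration rather than the LIME or SHAP algorithm, and the toy model and data are invented for the example.

```python
import random


def permutation_importance(predict, rows, labels, n_features, seed=0):
    """Return the accuracy drop caused by shuffling each feature column."""
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the label
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(shuffled))
    return importances


# Toy model: the decision depends only on feature 0; feature 1 is ignored.
def toy_predict(row):
    return int(row[0] > 0.5)


rows = [[i / 200, (i * 7 % 200) / 200] for i in range(200)]
labels = [toy_predict(r) for r in rows]

importances = permutation_importance(toy_predict, rows, labels, n_features=2)
print(importances)
```

Because the toy model never reads feature 1, shuffling it cannot change any prediction, so its importance is zero, while shuffling feature 0 produces a large accuracy drop. This is a global explanation; local methods instead attribute a single prediction to its inputs.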

Documentation Platforms

Documentation platforms centralize AI system information by maintaining comprehensive records of model development, deployment, and performance characteristics. These systems store technical specifications, training data details, and operational history in standardized formats.

Advanced platforms automatically capture metadata during model development workflows, reducing manual documentation burden while ensuring consistency. Documentation automation includes model card generation and data lineage tracking.

Monitoring Solutions

Monitoring platforms continuously track AI system performance to detect changes that could affect transparency or reliability. These tools analyze model outputs, input data quality, and operational metrics to identify potential issues.

Advanced monitoring systems compare current model behavior against baseline performance to detect drift, bias, or degradation patterns. Alerting mechanisms notify relevant stakeholders when significant changes occur.

Regulatory Requirements Driving Transparency

Governments worldwide are establishing laws that require organizations to explain how their AI systems work and make decisions. These regulatory requirements create legal obligations for companies to provide clear information about their AI systems.

The regulatory landscape varies significantly between regions, with Europe taking the most comprehensive approach through binding legislation while the United States focuses on voluntary frameworks and guidance.

EU AI Act Requirements

The European Union’s AI Act, which entered into force in August 2024 with obligations phasing in over the following years, establishes comprehensive legal requirements for AI transparency. The Act requires that people be clearly informed when they are interacting with an AI system, unless this is obvious from the context.

Providers of AI systems that generate synthetic content face specific marking requirements: AI-generated audio, image, video, and text output must be marked in a machine-readable format so that it can be detected as artificially generated. Deployers who publish deepfakes must also disclose that the content was artificially generated or manipulated.

Business implications include mandatory documentation requirements for AI systems used in high-risk applications such as hiring, credit scoring, and healthcare. Companies operating in the EU market must maintain detailed records regardless of where the company is headquartered.

NIST Framework Guidance

The National Institute of Standards and Technology developed a voluntary framework that helps organizations manage AI-related risks, including transparency requirements. The NIST framework emphasizes that transparency means providing appropriate information based on who needs it and how they will use it.

The framework recognizes that different stakeholders require different types of transparency information. Technical teams need detailed algorithmic information, while end users need clear explanations of how AI decisions affect them.

Enterprise organizations often adopt the NIST framework as a foundation for their AI governance programs because it provides practical guidance without imposing binding legal obligations.

Industry-Specific Requirements

Different industries face unique transparency requirements based on existing regulations and the potential impact of AI decisions on consumers and society.

Healthcare regulations require AI diagnostic tools to provide explanations that healthcare professionals can validate, while financial services face fair lending laws that require explanations for AI-driven credit decisions.

Employment regulations increasingly require companies to audit AI recruitment tools and publish performance data, particularly regarding how AI systems affect different demographic groups.
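
A simple metric that such audits often report is the impact ratio: each group's selection rate divided by the highest group's selection rate. The sketch below assumes a list of (group, selected) outcomes and uses the four-fifths rule of thumb from US employment guidance as the flagging threshold; the group labels and counts are invented, and actual audit requirements depend on the jurisdiction.

```python
def selection_rates(outcomes):
    """outcomes: iterable of (group, was_selected) pairs -> selection rate per group."""
    totals, selected = {}, {}
    for group, was_selected in outcomes:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's rate by the highest rate (1.0 means parity with the top group)."""
    best = max(rates.values())
    if best == 0:
        return {group: 0.0 for group in rates}  # nobody was selected
    return {group: rate / best for group, rate in rates.items()}


# Hypothetical screening outcomes: 50 of 100 selected in group A, 30 of 100 in group B.
outcomes = (
    [("A", True)] * 50 + [("A", False)] * 50
    + [("B", True)] * 30 + [("B", False)] * 70
)

ratios = impact_ratios(selection_rates(outcomes))
FOUR_FIFTHS = 0.8  # assumed threshold, based on the four-fifths rule of thumb
flagged = [group for group, ratio in ratios.items() if ratio < FOUR_FIFTHS]
print(ratios, flagged)
```

A ratio below the threshold is a signal for closer review, not proof of discrimination; sample sizes and job-related factors also matter.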

Common Implementation Challenges

Organizations pursuing AI transparency face obstacles that extend beyond technical hurdles to encompass resource limitations, performance trade-offs, and strategic concerns. Real-world implementation reveals that transparency initiatives often compete with other priorities for attention and funding.

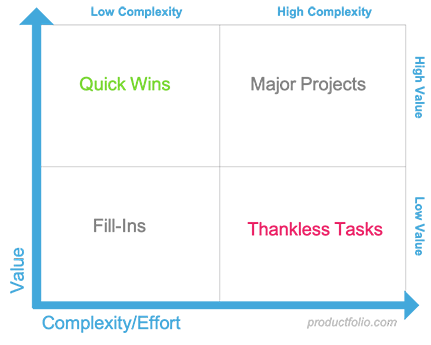

Source: Productfolio

Teams discover that creating comprehensive documentation and explanation systems demands substantial time from skilled data scientists and engineers who are already stretched across multiple projects.

Technical Complexity and Resource Constraints

Modern AI systems present inherent complexity that makes transparency expensive to implement and maintain. Deep learning models can process information through dozens or even hundreds of interconnected layers, creating decision pathways that resist simple explanation methods.

Organizations face difficult choices about resource allocation when transparency requirements conflict with development speed and budget constraints. Technical teams often lack the specialized expertise required to implement advanced transparency techniques.

Automated transparency tools reduce manual effort but require upfront investment in platforms and integration work. The cost of enterprise transparency solutions can reach hundreds of thousands of dollars annually, making them prohibitive for organizations with limited AI budgets.

Balancing Transparency with Performance

Transparency measures frequently reduce AI system performance through computational overhead and architectural constraints. Adding explanation generation to real-time systems might increase response times, potentially degrading user experience.

The most accurate AI models often resist interpretation due to their complexity. A system using complex ensemble methods might achieve higher accuracy but prove nearly impossible to explain in meaningful terms, while simpler models reach lower accuracy but provide clear decision logic.

Organizations develop strategies to optimize the transparency-performance trade-off through selective application and hybrid approaches. Critical decisions receive full explanation capabilities while routine decisions use faster, less transparent models.
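
The selective approach described above can be sketched as a decision function that only builds an explanation when a request is flagged as high stakes. This is an illustrative sketch using a toy linear scoring model with invented feature names and weights; in a real system the explanation step would usually be far more expensive than it is here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    approved: bool
    explanation: Optional[dict] = None  # feature -> contribution, if requested


def decide(features: dict, weights: dict, threshold: float, high_stakes: bool) -> Decision:
    """Score a request; attach per-feature contributions only for high-stakes cases."""
    score = sum(weights[name] * value for name, value in features.items())
    approved = score >= threshold
    if not high_stakes:
        return Decision(approved)  # fast path: no explanation overhead

    # Slow path: rank features by the size of their contribution to the score.
    contributions = {name: weights[name] * value for name, value in features.items()}
    ranked = dict(sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True))
    return Decision(approved, ranked)


# Hypothetical weights and applicant features.
weights = {"income": 1.0, "debt_ratio": -1.5, "years_employed": 0.5}
applicant = {"income": 0.8, "debt_ratio": 0.6, "years_employed": 0.3}

routine = decide(applicant, weights, threshold=0.0, high_stakes=False)
critical = decide(applicant, weights, threshold=0.0, high_stakes=True)
print(routine)
print(critical)
```

The same pattern supports explanation on demand: the fast path serves routine traffic, and the slow path runs only when a user, reviewer, or policy asks for the reasoning.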

Protecting Competitive Information

Comprehensive transparency can expose proprietary algorithms, data sources, and business logic that organizations consider competitive advantages. Organizations implement selective disclosure strategies that provide meaningful transparency while protecting sensitive information.

Technical approaches like differential privacy support selective disclosure by adding calibrated mathematical noise to released statistics, so organizations can share aggregate information about their data without exposing individual records. Legal teams work with technical staff to define disclosure boundaries that satisfy regulatory requirements while maintaining intellectual property protection.
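
As a rough illustration of the noise-adding idea, the sketch below releases a count using the Laplace mechanism, a standard building block of differential privacy. The function names, the example count, and the choice of epsilon are assumptions for the example; a production system should rely on a vetted privacy library rather than hand-written sampling.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with Laplace noise; a counting query has sensitivity 1."""
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)
# Hypothetical statistic: number of training records from one data source.
released = private_count(true_count=120, epsilon=1.0, rng=rng)
print(f"released count: {released:.1f}")
```

Smaller values of epsilon add more noise and give stronger privacy; larger values keep the published figure closer to the true count.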

Scaling Across Large AI Portfolios

Organizations with dozens of AI models face rapidly compounding complexity in implementing consistent transparency standards. Each model may require different explanation techniques based on its architecture, use case, and risk profile.

Governance frameworks establish baseline transparency requirements while allowing flexibility for model-specific needs. Centralized model registries provide unified visibility into transparency compliance across entire AI portfolios.

Standardized templates and automated tools reduce the manual effort required to maintain transparency at scale. Organizations create model card generators that automatically populate standard fields from model metadata.
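
A centralized registry makes this kind of check easy to automate. The sketch below assumes a registry held as a plain dictionary, with required artifacts defined per risk tier; the model names, tiers, and artifact names are hypothetical.

```python
# Baseline requirements per risk tier (assumed policy for the example).
REQUIRED_BY_RISK = {
    "low": {"model_card"},
    "high": {"model_card", "data_sheet", "risk_assessment"},
}

# Hypothetical registry: model name -> risk tier and artifacts on file.
registry = {
    "resume-screener": {"risk": "high", "artifacts": {"model_card", "data_sheet", "risk_assessment"}},
    "churn-predictor": {"risk": "low", "artifacts": {"model_card"}},
    "credit-scorer": {"risk": "high", "artifacts": {"model_card", "risk_assessment"}},
}


def compliance_gaps(registry, required_by_risk=REQUIRED_BY_RISK):
    """Return, for each non-compliant model, the artifacts it is still missing."""
    gaps = {}
    for name, entry in registry.items():
        missing = required_by_risk[entry["risk"]] - entry["artifacts"]
        if missing:
            gaps[name] = sorted(missing)
    return gaps


print(compliance_gaps(registry))
```

Running a check like this on every registry update gives governance teams a single list of outstanding documentation work across the portfolio.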

FAQs About AI Transparency

How does AI transparency differ from explainable AI?

AI transparency refers to openness about how an entire AI system was built and operates, including data sources, training methods, and decision processes. Transparency involves publishing comprehensive documentation about system development and capabilities.

Explainable AI focuses specifically on understanding individual decisions or outputs. An explainable system can tell you why it made a particular recommendation, while transparency tells you how the system was created and what it can do overall.

What types of AI systems require the most transparency?

High-risk AI systems that significantly impact people’s lives require the most comprehensive transparency. These include systems used for hiring decisions, medical diagnosis, criminal justice, and financial services like loan approvals or credit scoring.

The EU AI Act specifically categorizes AI systems by risk level, with high-risk applications facing the strictest transparency requirements including detailed documentation and user notification obligations.

Can small organizations implement AI transparency effectively?

Small organizations can implement AI transparency by focusing on documentation templates and automated tools rather than building custom solutions. Many cloud AI services now include built-in transparency features that reduce implementation complexity.

Starting with basic documentation like model cards and clear user notifications provides a foundation that organizations can expand over time. Open-source transparency tools offer cost-effective alternatives to expensive enterprise platforms.

Does AI transparency slow down system performance?

Transparency features can impact performance, but organizations use various strategies to minimize these effects. Explanation-on-demand architectures provide detailed transparency only when specifically requested, allowing routine operations to proceed without overhead.

Some transparency measures actually improve performance by enabling better monitoring and faster problem identification. Organizations often find that the operational benefits of transparency outweigh minor performance impacts.

What happens if our AI system can’t provide explanations?

Some AI architectures resist traditional explanation methods, but organizations can still achieve transparency through comprehensive documentation about system capabilities, limitations, and training data. Alternative approaches include using simpler, more interpretable models for high-stakes decisions.

Regulatory frameworks typically focus on appropriate transparency rather than requiring explanations for every decision. Organizations can demonstrate compliance through systematic documentation, monitoring, and clear communication about system limitations.