Evaluating an AI vendor involves systematically assessing a company’s technical capabilities, security practices, and business stability to determine whether their artificial intelligence solutions meet organizational requirements.

Organizations face increasing pressure to adopt AI technologies while navigating a complex vendor landscape filled with varying capabilities and claims. The National Institute of Standards and Technology (NIST) reports that trustworthy AI systems require comprehensive evaluation approaches that consider technical performance, ethical implications, and operational requirements.

Recent research indicates that 71 percent of companies now use generative AI regularly in at least one business function, yet many organizations lack structured vendor assessment processes. The rapid evolution of AI technologies creates additional challenges as vendors introduce new capabilities while regulatory frameworks continue to develop.

Selecting the wrong AI vendor can result in failed implementations, security vulnerabilities, and significant financial losses. A systematic evaluation framework helps organizations identify vendors capable of delivering sustainable value while managing risks associated with AI implementation.

What Technical Capabilities Should You Evaluate in an AI Vendor

Technical due diligence for AI vendors means examining the core technology behind the marketing presentations. Non-technical executives can evaluate AI vendors by focusing on measurable performance metrics and specific capabilities that translate directly to business outcomes.

Technical evaluation differs from traditional software assessment because an AI system's behavior depends on its training data and can shift as models are retrained or as input data changes. The vendor’s underlying technology determines whether the AI solution will perform consistently in your specific business environment and scale with your organization’s growth.

AI Model Performance and Accuracy Standards

Accuracy metrics measure how often the AI system makes correct predictions or decisions. In business terms, accuracy represents the percentage of times the AI gets the right answer when processing real data from your operations.

Precision measures how many of the AI’s positive predictions are actually correct. For example, if an AI system identifies 100 potential leads and 80 of them convert to customers, the precision is 80 percent. Recall measures the share of actual opportunities the AI successfully identifies: if 200 leads in the data would have converted and the AI flagged only 80 of them, recall is 40 percent.
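These definitions can be made concrete with a small calculation. The sketch below assumes you have tallied the four outcome counts from a pilot or test set. The function name is illustrative, and apart from the 100 flagged leads and 80 conversions from the example, the counts are assumed for demonstration.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Compute accuracy, precision, and recall from confusion-matrix counts."""
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }

# 100 leads flagged, 80 converted: tp=80, fp=20.
# Assumed for illustration: 120 converting leads were missed (fn=120)
# and 780 non-converting leads were correctly ignored (tn=780).
metrics = classification_metrics(tp=80, fp=20, fn=120, tn=780)
print(metrics)  # precision 0.8, recall 0.4, accuracy 0.86
```

Asking a vendor for the underlying counts, not just a single headline percentage, lets you recompute all three figures yourself.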

Vendors demonstrate performance quality through benchmarking against industry standards and independent validation processes:

- Industry benchmarks — Standardized tests that allow comparison across different AI solutions

- Validation processes — Testing the AI system on data it has never seen before to confirm real-world performance

- Performance documentation — Specific metrics on datasets similar to your business context

Ask vendors for specific performance metrics and request documentation of testing methodologies. Performance claims without supporting evidence indicate insufficient technical rigor.

Integration APIs and Development Tools

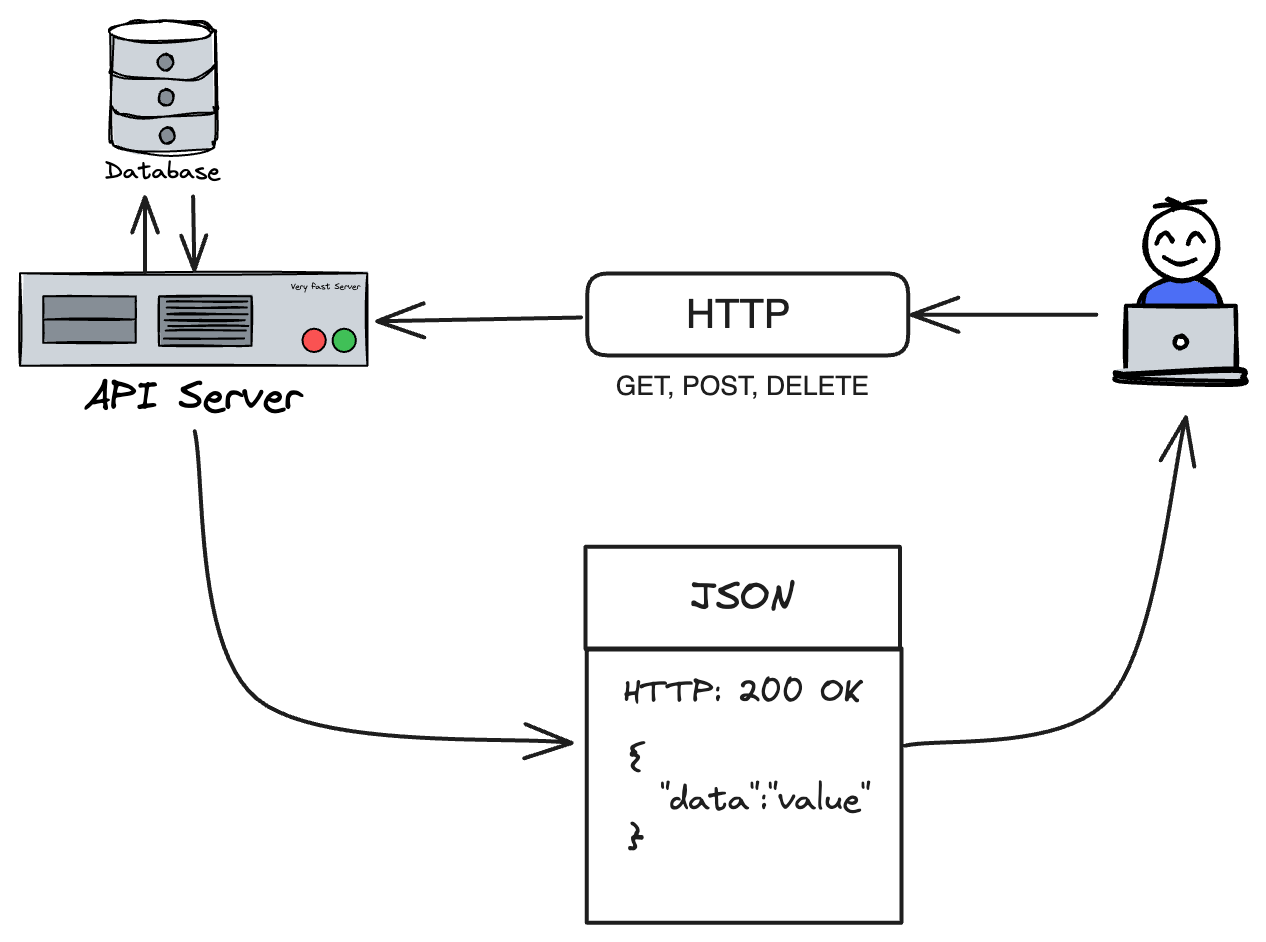

Application Programming Interfaces (APIs) allow different software systems to communicate with each other. REST APIs represent the most common integration standard, enabling your existing systems to send data to and receive results from the AI vendor’s platform.
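As a rough illustration of what a REST integration involves, the sketch below builds a JSON request using only the Python standard library. The base URL, the `/v1/predictions` path, the bearer-token header, and the payload shape are all hypothetical; a real vendor's documentation defines its own.

```python
import json
import urllib.request

def build_prediction_request(base_url: str, api_key: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request to a hypothetical prediction endpoint."""
    return urllib.request.Request(
        url=f"{base_url}/v1/predictions",  # hypothetical path
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed bearer-token auth
        },
        method="POST",
    )

request = build_prediction_request(
    "https://api.vendor.example", "YOUR_API_KEY", {"text": "sample input"}
)
print(request.get_method(), request.full_url)
# Sending it would look like: urllib.request.urlopen(request, timeout=10)
```

If a vendor's API cannot be exercised with something this small, that is a useful early signal about integration effort.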

Software Development Kits (SDKs) provide pre-written code components that simplify integration work for your development team. Compatibility with your existing technology stack determines how easily the AI solution connects to your current software systems, databases, and workflows.

Implementation complexity varies significantly between vendors based on their integration tools and documentation quality. Vendors with comprehensive APIs, clear documentation, and responsive developer support reduce the time and resources required for successful deployment.

How Do You Assess AI Vendor Security and Compliance Requirements

AI vendor security assessment evaluates whether a provider can protect your organization’s data and meet regulatory obligations. Security frameworks for AI implementations combine traditional cybersecurity practices with AI-specific protections that address unique risks like model theft, data poisoning, and algorithmic bias.

Enterprise security concerns for AI vendors include data protection during model training, secure API communications, and access controls that prevent unauthorized system use. Regulatory obligations vary by industry and geography, with requirements spanning data privacy laws, financial regulations, and healthcare compliance standards.

Data Encryption and Security Certifications

Data encryption protects information by converting it into unreadable code that requires a decryption key to access. Encryption at rest secures stored data on servers, databases, and backup systems using algorithms like AES-256. Encryption in transit protects data moving between systems through protocols like TLS 1.3 and secure API connections.
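On the client side, encryption in transit can be enforced rather than assumed. The following is a minimal sketch using Python's standard `ssl` module to refuse any connection below TLS 1.3; the helper name is illustrative, and it assumes a Python build linked against an OpenSSL version that supports TLS 1.3.

```python
import ssl

def strict_tls_context() -> ssl.SSLContext:
    """Client TLS context that verifies certificates and refuses anything below TLS 1.3."""
    context = ssl.create_default_context()  # certificate and hostname checks are on by default
    context.minimum_version = ssl.TLSVersion.TLSv1_3
    return context

context = strict_tls_context()
print(context.minimum_version.name)
```

Passing this context to a call such as `urllib.request.urlopen(url, context=context)` makes the handshake fail if the vendor endpoint only offers older protocol versions, which is a quick way to check a vendor's claim during a pilot.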

Security certifications provide independent validation of vendor security practices through standardized audit processes:

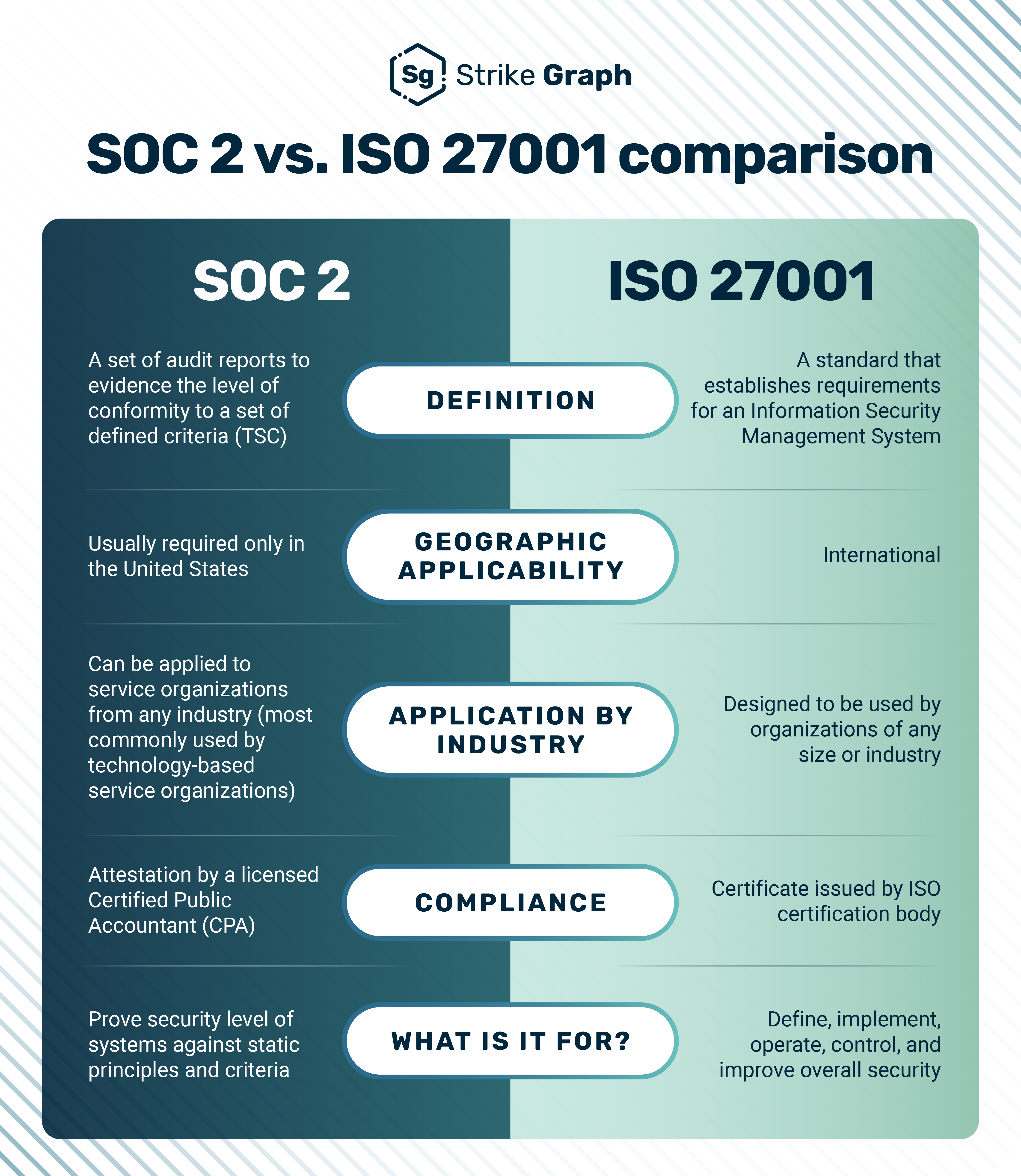

- SOC 2 Type II report: An independent auditor's attestation covering whichever of the security, availability, processing integrity, confidentiality, and privacy criteria are in scope, tested over an observation period that commonly runs six to twelve months

- ISO 27001 certification: Demonstrates systematic information security management through comprehensive risk assessment and control implementation

- Industry-specific certifications: FedRAMP for government cloud services, HITRUST for healthcare data protection, and PCI DSS for payment card information

Regular certification renewals and audit reports provide ongoing validation of security practice effectiveness.

Regulatory Compliance Frameworks

GDPR governs the processing of personal data of individuals in the EU, regardless of where the processing occurs. The regulation requires a lawful basis for processing (consent is one of six), gives individuals rights to access and delete their personal information, and mandates data protection by design.

CCPA grants California residents rights to know what personal information businesses collect, request deletion of personal data, and opt out of the sale of their personal information. The law applies to businesses meeting specific revenue or data processing thresholds.

HIPAA protects health information through security and privacy rules governing healthcare data handling. Covered entities and business associates must implement administrative, physical, and technical safeguards for protected health information.

What Integration and Scalability Questions Should You Ask

Organizations evaluating AI vendors face complex technical decisions that directly impact implementation success and long-term operational effectiveness. The integration and scalability assessment focuses on practical implementation challenges while ensuring investments can grow with business requirements.

Integration complexity determines how easily AI systems connect with existing technology infrastructure. Scalability characteristics affect whether AI solutions maintain performance as usage increases and business needs evolve.

System Compatibility and API Standards

System compatibility evaluation begins with understanding how AI solutions connect with existing enterprise software. Organizations operate diverse technology stacks that include customer relationship management systems, enterprise resource planning platforms, and data warehouses that require seamless integration.

API quality represents a critical factor in integration success. Well-designed APIs include comprehensive documentation, consistent naming conventions, and reliable versioning strategies. Poor API design creates ongoing maintenance burdens and limits future flexibility.

Data format compatibility affects how information flows between AI systems and existing applications. Organizations store data in various formats including SQL databases, NoSQL repositories, and file-based systems.

Performance Under Increasing Load

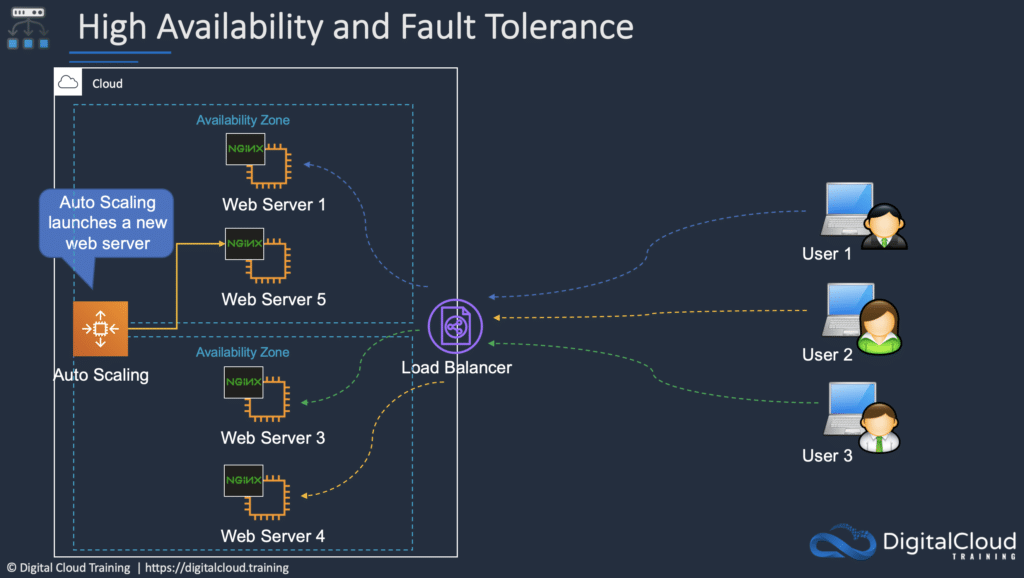

Load testing evaluates how AI systems perform when serving multiple users simultaneously. Load tests simulate realistic usage patterns to identify performance bottlenecks before deployment. Testing reveals whether systems maintain acceptable response times as user numbers increase.

Auto-scaling capabilities automatically adjust computing resources based on current demand:

- Resource scaling — Adds servers during high-usage periods and reduces resources when demand decreases

- Performance consistency — Maintains response times while controlling operational costs

- Latency characteristics — Determines how quickly AI systems respond to user requests

Different AI applications have varying latency requirements, with real-time applications requiring faster responses than batch processing systems.
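A simple way to see how latency behaves as concurrency rises is to time the same call from an increasing number of simultaneous users. The sketch below is a toy harness, not a substitute for a dedicated load-testing tool: `fake_vendor_call` is a stand-in that sleeps for 10 ms, and the user counts and request counts are arbitrary.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor

def measure_latencies(call, concurrent_users: int, requests_per_user: int) -> list[float]:
    """Run `call` from several threads at once and return per-request latencies in seconds."""
    def one_user(_user_index):
        samples = []
        for _ in range(requests_per_user):
            start = time.perf_counter()
            call()
            samples.append(time.perf_counter() - start)
        return samples

    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        per_user = pool.map(one_user, range(concurrent_users))
        return [latency for samples in per_user for latency in samples]

def summarize(latencies: list[float]) -> dict:
    """Median and 95th-percentile latency (needs at least two samples)."""
    cut_points = statistics.quantiles(latencies, n=100)  # 99 cut points
    return {"p50": statistics.median(latencies), "p95": cut_points[94]}

def fake_vendor_call():
    time.sleep(0.01)  # stand-in for a real API request

for users in (1, 5, 20):
    print(users, summarize(measure_latencies(fake_vendor_call, users, 5)))
```

In a real evaluation, replace the stand-in with an actual request to the vendor's test environment and watch whether the 95th-percentile figure stays within your requirement as the user count grows.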

How Do You Evaluate AI Vendor Stability and Support Quality

When organizations select AI vendors, they enter partnerships that can last several years and require significant investment. The vendor’s ability to remain financially stable and provide consistent support directly impacts the success of AI implementations.

Business continuity depends on choosing vendors that can maintain operations, continue product development, and provide support when issues arise. A vendor experiencing financial difficulties or lacking proper support infrastructure can leave organizations stranded with unsupported AI systems.

Financial Health and Market Position

Financial stability forms the foundation of vendor reliability. Organizations can assess vendor financial health through publicly available information and industry analysis without requiring access to private financial data.

Public companies provide quarterly earnings reports, annual filings, and investor presentations that reveal revenue trends, profitability, and market performance. Private companies present greater evaluation challenges but still offer indicators through recent funding rounds, investor quality, and funding amounts.

Revenue growth patterns indicate market acceptance and business sustainability. Consistent revenue growth across multiple quarters suggests market validation and customer retention, although it does not guarantee either.

Customer Support Infrastructure and Response Times

Customer support infrastructure determines how effectively vendors assist customers during implementation and ongoing operations. Well-structured support organizations provide multiple channels, clear escalation procedures, and defined response time commitments.

Support tier structures organize assistance based on issue severity:

- Tier 1 support — Handles general questions, account issues, and basic troubleshooting through self-service portals, chat systems, and phone support

- Tier 2 support — Addresses technical issues requiring specialized knowledge, configuration problems, and integration challenges

- Tier 3 support — Involves product specialists and engineers who handle complex technical problems, bugs, and feature requests

Response time commitments vary based on issue severity and customer service levels. Critical issues affecting production systems typically receive an initial response within one to four hours.

What Data Protection and Privacy Questions Are Critical

Data protection and privacy concerns form the foundation of responsible AI governance in enterprise environments. Organizations handle vast amounts of sensitive information through AI systems, creating complex challenges around data sovereignty, user rights, and regulatory compliance.

Data governance frameworks establish how organizations collect, process, store, and delete information throughout the AI lifecycle. Privacy regulations like GDPR, CCPA, and emerging AI-specific laws create binding requirements for data handling practices.

Data Residency and Geographic Requirements

Data sovereignty laws determine where organizations can store and process information based on the nationality or location of data subjects. Countries increasingly require certain types of data to remain within their borders or specific geographic regions.

Cross-border data transfer restrictions limit how organizations move information between countries and regions. Under GDPR, transfers of personal data outside the EU require either an adequacy decision for the destination country or specific safeguards such as Standard Contractual Clauses.

Geographic storage requirements affect AI deployment architecture and vendor selection decisions. Cloud providers offer region-specific data centers to support compliance, but organizations must verify that all data processing occurs within approved locations.

Privacy Protection and Anonymization Methods

Data masking techniques replace sensitive information with fictional but realistic values that maintain data utility while protecting individual privacy. Organizations use masking for development environments, testing scenarios, and analytics where real personal data creates unnecessary privacy risks.
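One simple form of masking is format-preserving substitution, where each character is swapped for a random one of the same kind. The sketch below is a minimal illustration under that assumption; the function name is hypothetical, and production masking tools typically add consistency across tables and domain-aware value generation.

```python
import random
import string

def mask_value(value: str, rng: random.Random) -> str:
    """Swap each letter and digit for a random one of the same kind, keeping the format."""
    masked = []
    for ch in value:
        if ch.isdigit():
            masked.append(rng.choice(string.digits))
        elif ch.isalpha():
            pool = string.ascii_uppercase if ch.isupper() else string.ascii_lowercase
            masked.append(rng.choice(pool))
        else:
            masked.append(ch)  # keep separators such as '-', '@', '.'
    return "".join(masked)

rng = random.Random()  # seed it only when repeatable test data is needed
print(mask_value("555-867-5309", rng))
print(mask_value("Jane.Doe@corp.example", rng))
```

The output keeps the shape that downstream test code expects (phone-number dashes, an email's `@` and dots) while carrying none of the original characters by design.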

Tokenization replaces sensitive data elements with non-sensitive tokens that have no exploitable meaning or value. Unlike encrypted data, a token has no mathematical relationship to the original value, so it cannot be reversed without access to the tokenization system that holds the mapping.
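The idea can be sketched with an in-memory lookup table. This is a toy stand-in for illustration only: the `TokenVault` class and the `tok_` prefix are hypothetical, and a real tokenization system would be a hardened, access-controlled service with persistent storage.

```python
import secrets

class TokenVault:
    """In-memory stand-in for a tokenization system."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        """Return the existing token for a value, or issue a new random one."""
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(16)  # random, so not derivable from the value
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        """Look up the original value; raises KeyError for unknown tokens."""
        return self._token_to_value[token]

vault = TokenVault()
token = vault.tokenize("4111 1111 1111 1111")
print(token)
print(vault.detokenize(token))
```

Because the token is random, anyone holding only the token learns nothing about the card number. That is why it matters where a vendor's vault lives and who can query it.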

Differential privacy adds controlled mathematical noise to datasets or query results to prevent individual identification while preserving statistical accuracy. This technique allows organizations to share useful data insights while protecting individual privacy.
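A common way to add this noise is the Laplace mechanism, sketched below for a simple counting query. The function names and the epsilon values are illustrative assumptions. Naive floating-point implementations like this one have known weaknesses, so a production system should rely on a vetted differential privacy library.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Noisy count. Adding or removing one person changes a count by at most 1,
    so the noise scale is 1 / epsilon."""
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random()
for epsilon in (0.1, 1.0, 10.0):
    print(epsilon, private_count(1000, epsilon, rng))
```

Smaller epsilon values mean more noise and stronger privacy; larger values mean answers closer to the true count. Asking a vendor which epsilon they use, and for which queries, is a concrete way to probe a differential privacy claim.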

Frequently Asked Questions About AI Vendor Evaluation

How long does comprehensive AI vendor evaluation typically take?

Most thorough evaluations require three to six months depending on project complexity and organizational requirements. Simple AI tools like chatbots might take closer to three months, while complex enterprise systems processing sensitive data often require the full six months. The timeline includes vendor research, technical evaluations, security assessments, legal reviews, and pilot projects.

Which specific team members participate in AI vendor evaluation?

Include IT leadership for technical requirements, business stakeholders for operational needs, legal counsel for compliance review, and security teams for data protection assessment. Finance representatives analyze costs and ROI projections. Each team member brings essential expertise that contributes to comprehensive vendor evaluation.

When do organizations benefit from requesting AI vendor pilot projects?

Pilot projects provide valuable hands-on experience with vendor capabilities before full commitment. These limited-scope implementations allow teams to test AI performance with real organizational data rather than relying on vendor demonstrations. Pilots typically run for 30 to 90 days and focus on specific use cases that represent broader implementation goals.

How do organizations evaluate AI vendors across multiple business departments?

Create standardized evaluation criteria while allowing department-specific requirements. Establish core categories like security and integration that apply across all departments, then develop specific criteria addressing unique workflow requirements. Marketing teams might prioritize content generation, while finance teams emphasize compliance reporting capabilities. Likewise, AI agents used in sales call for different evaluation priorities than those used in customer service.

What actions work when no AI vendor meets all evaluation criteria?

Prioritize must-have requirements over nice-to-have features and consider phased implementation approaches. Distinguish between absolute necessities and convenience features. Organizations can implement AI solutions in phases, starting with core capabilities and adding advanced features through future partnerships or system upgrades.
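One way to put this prioritization into practice is a scorecard that treats must-haves as pass/fail gates and scores only the remaining criteria. The sketch below is illustrative: the requirement names, weights, and 1-to-5 ratings are hypothetical placeholders for your own criteria.

```python
from typing import Optional

def score_vendor(capabilities: dict, ratings: dict, must_haves: list, weights: dict) -> Optional[float]:
    """Weighted average of nice-to-have ratings, or None when a must-have is missing."""
    if any(not capabilities.get(req, False) for req in must_haves):
        return None  # disqualified: no score can offset a missing must-have
    total_weight = sum(weights.values())
    return sum(ratings.get(name, 0) * weight for name, weight in weights.items()) / total_weight

# Hypothetical requirements and 1-5 ratings, for illustration only.
must_haves = ["sso", "data_residency_eu"]
weights = {"integration": 2.0, "support": 1.0}

vendor_a = score_vendor(
    {"sso": True, "data_residency_eu": True}, {"integration": 4, "support": 1}, must_haves, weights
)
vendor_b = score_vendor({"sso": True}, {"integration": 5, "support": 5}, must_haves, weights)
print(vendor_a, vendor_b)  # 3.0 None
```

Vendor B rates higher on every scored criterion but is still excluded, which is the point of separating absolute necessities from convenience features.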