Time series AI applies artificial intelligence to data collected over time to predict future values and identify patterns. Organizations across industries now use AI to forecast everything from stock prices and energy demand to patient health outcomes and supply chain needs. Alongside traditional statistical methods, the field now uses deep learning architectures that can process continuous data streams and capture complex temporal dependencies.

The combination of AI with time series data addresses business challenges that most organizations face. Companies must predict sales, demand, revenue, and capacity requirements to operate effectively. Traditional forecasting methods often struggle with non-linear patterns, missing data, and multiple variables that influence outcomes simultaneously.

Modern time series AI systems can analyze millions of data points from sources as varied as financial markets and satellite imagery. Because these systems preserve temporal order, they can learn how past observations relate to future ones. The aim is more accurate predictions that help organizations make better decisions and respond proactively to changing conditions.

Understanding Time Series Data

Time series data consists of observations recorded in time order, usually at regular intervals, where that order must be preserved. Each data point typically depends on previous values, creating temporal dependencies that distinguish time series from independent random samples. Unlike datasets where row order doesn’t matter, time series analysis relies on the fact that past values carry information about future ones.
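
Because order carries information, a forecasting model is usually evaluated on a split by time rather than a random shuffle, so the model never trains on observations that come after the ones it is tested on. A minimal sketch in Python, assuming the values are already sorted oldest to newest; `chronological_split` is a hypothetical helper, not a library function:

```python
from typing import List, Sequence, Tuple


def chronological_split(
    values: Sequence[float], test_fraction: float = 0.2
) -> Tuple[List[float], List[float]]:
    """Split a time-ordered series into an earlier training part and a later test part."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    n_test = max(1, round(len(values) * test_fraction))
    cut = len(values) - n_test
    # Every test observation comes after every training observation.
    return list(values[:cut]), list(values[cut:])


series = [10, 12, 13, 15, 14, 16, 18, 17, 19, 21]
train, test = chronological_split(series, test_fraction=0.3)
print(train)  # [10, 12, 13, 15, 14, 16, 18]
print(test)   # [17, 19, 21]
```

A shuffled split would mix later observations into training, which tends to make reported accuracy look better than it will be on genuinely future data.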

Time series data contains four main components that shape how patterns emerge over time:

- Trend — Long-term increases or decreases in values over extended periods

- Seasonality — Regular patterns that repeat at fixed intervals, such as daily temperature cycles or weekly sales patterns

- Cyclicity — Longer-term patterns without fixed periods, like economic cycles that vary in length

- Irregularity — Random variations that don’t follow identifiable patterns

Source: Analytics Vidhya
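
The components can be made concrete with a synthetic example. The sketch below assumes an additive model (value = trend + seasonality + irregular noise, with the cycle term omitted for brevity) on daily data with a weekly pattern. It then estimates the trend with a centered 7-day moving average, a classical decomposition step that cancels a period-7 seasonal pattern. All parameter values are illustrative:

```python
import random
from typing import List, Optional

# Illustrative weekly seasonal pattern; it sums to zero over one period.
WEEKLY_PATTERN = [3.0, 1.0, 0.0, -1.0, -2.0, -3.0, 2.0]


def synthetic_series(n: int, slope: float = 0.5, noise_sd: float = 1.0, seed: int = 0) -> List[float]:
    """Additive series: linear trend + weekly seasonality + Gaussian noise."""
    rng = random.Random(seed)
    return [
        slope * t                   # trend
        + WEEKLY_PATTERN[t % 7]     # seasonality with period 7
        + rng.gauss(0.0, noise_sd)  # irregular component
        for t in range(n)
    ]


def centered_moving_average(values: List[float], window: int = 7) -> List[Optional[float]]:
    """Average each point with its neighbours; edges without a full window stay None."""
    if window % 2 == 0:
        raise ValueError("this simple version needs an odd window")
    half = window // 2
    out: List[Optional[float]] = [None] * len(values)
    for i in range(half, len(values) - half):
        out[i] = sum(values[i - half : i + half + 1]) / window
    return out


noisy = synthetic_series(56)
trend_estimate = centered_moving_average(noisy)
```

With `noise_sd=0.0` the estimate matches the linear trend away from the edges, because a symmetric window preserves a straight line and one full period of the zero-sum weekly pattern averages to zero.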

The concept of stationarity plays a crucial role in time series analysis. A stationary time series keeps constant statistical properties over time (mean, variance, and autocorrelation structure), so patterns learned from one period apply to others. Non-stationary data with trends or seasonal patterns is usually transformed to achieve stationarity before fitting classical models that assume it; differencing is the standard technique, and it is the "I" (integrated) step in ARIMA.
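
Differencing is simple to express in code. First-order differencing replaces each value with its change from the previous step, y[t] - y[t-1], which turns a linear trend into a constant. Seasonal differencing at lag m, y[t] - y[t-m], removes a pattern that repeats every m steps. A plain-Python sketch with illustrative function names, including the inverse needed to turn forecasts of differences back into levels:

```python
from typing import List, Sequence


def difference(values: Sequence[float], lag: int = 1) -> List[float]:
    """Return y[t] - y[t - lag]; the output is `lag` elements shorter than the input."""
    if lag < 1:
        raise ValueError("lag must be >= 1")
    return [values[t] - values[t - lag] for t in range(lag, len(values))]


def undifference(diffs: Sequence[float], initial: Sequence[float], lag: int = 1) -> List[float]:
    """Invert `difference`, given the first `lag` original values."""
    restored = list(initial)
    for d in diffs:
        # Each restored value is the value `lag` steps earlier plus the difference.
        restored.append(restored[-lag] + d)
    return restored


trending = [2 * t + 5 for t in range(6)]        # 5, 7, 9, 11, 13, 15
print(difference(trending))                      # [2, 2, 2, 2, 2]
print(difference([10, 20, 30] * 3, lag=3))       # [0, 0, 0, 0, 0, 0]
print(undifference(difference(trending), trending[:1]) == trending)  # True
```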

How AI Processes Time Series Data

AI systems transform sequential data into predictions through a systematic process: they process historical observations, identify recurring trends, and generate forecasts of future values, learning which patterns matter from the data rather than requiring them to be specified by hand.

Data preprocessing begins by cleaning and preparing raw data to ensure accuracy and consistency. Each data point is checked for validity: whether its value falls within an expected range and whether observations are in proper chronological order. Missing values are then handled through automated methods such as interpolation between existing observations or carrying known values forward or backward to cover gaps.
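
A minimal sketch of those preprocessing steps, assuming readings arrive as (timestamp, value) pairs on a fixed, evenly spaced grid, and that any value outside a plausible range (0 to 100 here, an arbitrary illustrative bound) is invalid. The `clean_series` helper is hypothetical. It sorts by time, marks out-of-range readings as missing, and fills interior gaps by linear interpolation between the nearest valid neighbors:

```python
from typing import List, Optional, Sequence, Tuple

Reading = Tuple[int, Optional[float]]  # (timestamp, value); None means no reading


def clean_series(
    readings: Sequence[Reading], low: float = 0.0, high: float = 100.0
) -> List[Optional[float]]:
    """Sort by time, blank out invalid values, and linearly interpolate interior gaps.

    Assumes timestamps lie on an evenly spaced grid, so list position
    can stand in for time when interpolating.
    """
    # 1. Restore chronological order.
    ordered = sorted(readings, key=lambda r: r[0])
    # 2. Treat missing or out-of-range readings as gaps.
    values: List[Optional[float]] = [
        v if v is not None and low <= v <= high else None for _, v in ordered
    ]
    # 3. Fill each gap that has a valid value on both sides with a straight line.
    known = [i for i, v in enumerate(values) if v is not None]
    for left, right in zip(known, known[1:]):
        start, end = values[left], values[right]
        step = (end - start) / (right - left)
        for i in range(left + 1, right):
            values[i] = start + step * (i - left)
    return values


raw = [(3, 40.0), (1, 10.0), (2, None), (4, 250.0), (5, 60.0), (0, None)]
print(clean_series(raw))  # [None, 10.0, 25.0, 40.0, 50.0, 60.0]
```

The leading gap stays `None` because interpolation needs a valid neighbor on each side; gaps at the edges of a series are usually handled by forward or back filling instead.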

Feature engineering creates informative inputs that capture temporal relationships within the data. Lagged features pair each time step with the values observed one or more steps earlier, enabling models to recognize how past observations influence future outcomes. Rolling window features calculate summary statistics, such as averages, over sliding time periods, capturing trends at different temporal scales.
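
A minimal sketch of lag and rolling-window features in plain Python. The lags of 1 and 7 steps and the 3-step window are illustrative choices, and `make_features` is a hypothetical helper. The rolling mean uses only values strictly before each time step, so no feature leaks information from the value being predicted:

```python
from typing import Dict, List, Optional, Sequence


def make_features(
    values: Sequence[float], lags: Sequence[int] = (1, 7), window: int = 3
) -> List[Dict[str, Optional[float]]]:
    """Build one feature row per time step from information available before that step."""
    rows = []
    for t in range(len(values)):
        row: Dict[str, Optional[float]] = {"target": values[t]}
        for lag in lags:
            # Value observed `lag` steps earlier, or None if the series is too short.
            row[f"lag_{lag}"] = values[t - lag] if t >= lag else None
        # Mean of the `window` values immediately preceding t.
        past = values[max(0, t - window) : t]
        row[f"rolling_mean_{window}"] = sum(past) / window if len(past) == window else None
        rows.append(row)
    return rows


sales = [5, 7, 6, 8, 9, 11, 10, 12]
features = make_features(sales)
print(features[7])  # {'target': 12, 'lag_1': 10, 'lag_7': 5, 'rolling_mean_3': 10.0}
```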

Time-based features encode calendar information such as day of week, month of year, and holiday indicators. These features help models learn recurring patterns associated with specific temporal contexts. During training, the model learns which of these engineered features, and which combinations of them, carry the most predictive power.
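
Calendar features can be derived directly from the timestamp. In this sketch the holiday set is a hypothetical input supplied by the caller (holiday calendars vary by country and business). The sine/cosine pair is one common way to encode day of week cyclically, so that Sunday and Monday end up close together:

```python
import math
from datetime import date
from typing import Dict, Set


def calendar_features(day: date, holidays: Set[date]) -> Dict[str, float]:
    """Encode calendar context for one date."""
    dow = day.weekday()  # Monday = 0 ... Sunday = 6
    return {
        "day_of_week": dow,
        "month": day.month,  # 1-12
        "is_weekend": float(dow >= 5),
        "is_holiday": float(day in holidays),
        # Cyclical encoding: places day 6 next to day 0 on a circle.
        "dow_sin": math.sin(2 * math.pi * dow / 7),
        "dow_cos": math.cos(2 * math.pi * dow / 7),
    }


holidays = {date(2024, 12, 25)}
print(calendar_features(date(2024, 12, 25), holidays)["day_of_week"])  # 2 (a Wednesday)
```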

Types of Time Series AI Models

Long Short-Term Memory Networks

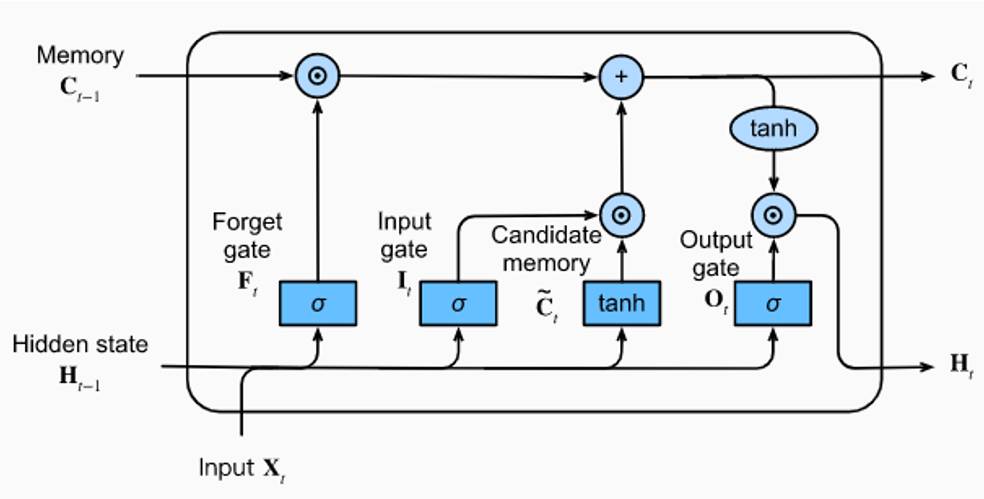

Long Short-Term Memory networks address a fundamental problem in sequential data processing: retaining important information across long time spans. Standard recurrent neural networks struggle with long sequences because the gradients used to train them shrink (vanish) as they are propagated back through many time steps, so earlier information is effectively lost. LSTMs add a separate cell state and gating mechanisms that control what information to keep, update, or discard.

The LSTM architecture includes three types of gates that work together to manage information flow. The forget gate decides which information to remove from the cell state, the input gate determines what new information to store, and the output gate controls how much of the cell state is passed on as the hidden state used for predictions. These gates allow LSTMs to retain patterns from many time steps earlier, such as weeks or months back in daily data, while adapting to recent changes.
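
The gate arithmetic can be written out for a single LSTM cell with a one-dimensional state, using the standard formulation with forget gate f, input gate i, candidate value g, and output gate o. This is a from-scratch illustration with hand-picked weights, not a trainable implementation; real LSTMs use vector-valued states and learned weight matrices:

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


@dataclass
class Gate:
    w: float  # weight on the current input x_t
    u: float  # weight on the previous hidden state h_{t-1}
    b: float  # bias

    def pre_activation(self, x: float, h: float) -> float:
        return self.w * x + self.u * h + self.b


@dataclass
class ScalarLSTMCell:
    forget_gate: Gate
    input_gate: Gate
    candidate: Gate
    output_gate: Gate

    def step(self, x: float, h: float, c: float) -> Tuple[float, float]:
        f = sigmoid(self.forget_gate.pre_activation(x, h))  # share of old cell state to keep
        i = sigmoid(self.input_gate.pre_activation(x, h))   # share of new information to write
        g = math.tanh(self.candidate.pre_activation(x, h))  # candidate new information
        o = sigmoid(self.output_gate.pre_activation(x, h))  # share of cell state to expose
        c_new = f * c + i * g
        h_new = o * math.tanh(c_new)
        return h_new, c_new


def run(cell: ScalarLSTMCell, xs: Sequence[float], h0: float = 0.0, c0: float = 0.0) -> Tuple[float, float]:
    h, c = h0, c0
    for x in xs:
        h, c = cell.step(x, h, c)
    return h, c


# Hand-picked weights: a forget gate biased open preserves the cell state,
# a forget gate biased shut erases it almost immediately.
remember = ScalarLSTMCell(Gate(0, 0, 10), Gate(0, 0, -10), Gate(1, 0, 0), Gate(0, 0, 1))
forget = ScalarLSTMCell(Gate(0, 0, -10), Gate(0, 0, -10), Gate(1, 0, 0), Gate(0, 0, 1))
_, c_remember = run(remember, [0.0] * 50, c0=1.0)
_, c_forget = run(forget, [0.0] * 50, c0=1.0)
```

After 50 steps of zero input, the cell with the open forget gate still holds more than 99% of its initial cell state, while the other cell has effectively erased it.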

Source: Medium

LSTMs excel at complex pattern recognition tasks where long-term dependencies matter. In healthcare applications, they can predict disease progression by analyzing laboratory test results collected over months or years. Financial institutions use LSTMs to identify market patterns that develop across extended periods.

Transformer Architectures

Transformer architectures brought attention mechanisms to time series analysis, letting a model focus on the most relevant parts of the historical data when making predictions. Unlike LSTMs, which process data one step at a time, transformers examine all time points in the input window in parallel and weight each historical observation by its relevance to the current forecast.
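
The core of that mechanism is scaled dot-product attention: each past time step gets a key vector, the step being forecast issues a query, and a softmax over query-key similarities decides how much each past value contributes. A single-head sketch in plain Python, with hand-made keys standing in for learned projections (an assumption for illustration; real transformers learn these from data and stack many heads and layers):

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(
    query: Sequence[float], keys: Sequence[Sequence[float]], values: Sequence[float]
) -> Tuple[float, List[float]]:
    """Single-head scaled dot-product attention over past time steps."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    context = sum(w * v for w, v in zip(weights, values))
    return context, weights


# Hypothetical 2-d keys: [1, 0] marks "weekday-like" steps, [0, 1] "weekend-like" steps.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
values = [10.0, 50.0, 12.0, 48.0]
query = [4.0, 0.0]  # the step being forecast resembles the weekday-like steps
context, weights = attend(query, keys, values)
```

The two weekday-like steps receive most of the weight, so the context value lands near their values rather than near the weekend-like ones.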

Recent advances in transformer architectures have made them particularly effective for handling multiple time series simultaneously. Models can process hundreds of related time series at once, learning how different variables influence each other while maintaining temporal relationships within each series.

Foundation Models

Foundation models are neural networks pre-trained on vast amounts of temporal data to learn generalizable patterns. Google’s TimesFM demonstrates this approach: it was trained on over 100 billion real-world time points drawn from diverse datasets. The original release performs univariate forecasting with context lengths of up to 512 time points and can generate forecasts for any horizon length.

IBM’s TinyTimeMixer achieves competitive performance with fewer than one million parameters, suggesting that small architectures can deliver robust predictions without massive computational requirements. The model trains in approximately eight hours on limited data while outperforming billion-parameter alternatives on various forecasting tasks.

Transfer learning enables foundation models to apply knowledge gained from diverse training data to new forecasting problems. Models pre-trained on extensive time series collections can recognize seasonal patterns and trends in previously unseen data, either zero-shot or after fine-tuning on a small number of domain-specific examples.

Real-World Applications

Healthcare and Patient Monitoring

Healthcare systems implement time series AI to monitor patient vital signs continuously and detect early warning signals of medical complications. LSTM networks analyze sequential laboratory test results to predict disease onset, enabling healthcare providers to intervene proactively before conditions worsen. These models process continuous data streams from medical devices, identifying subtle changes in heart rate, blood pressure, and other physiological indicators.

Source: ResearchGate

Treatment response prediction uses historical patient data to forecast how individuals will respond to specific medications or therapeutic interventions. AI models combine patient demographics, medical history, and real-time monitoring data to personalize treatment plans and adjust dosages based on predicted outcomes.

Resource planning applications forecast patient expenditures on medications and treatments, enabling healthcare organizations to optimize staffing levels, equipment usage, and facility capacity. Time series models predict when emergency departments will experience high patient volumes, allowing hospitals to adjust staffing schedules accordingly.

Financial Services and Risk Assessment

Financial institutions apply time series AI for stock price prediction and market trend analysis, processing continuous streams of trading data to identify patterns and forecast future price movements. These models analyze historical price data alongside trading volumes, market sentiment indicators, and economic news to generate predictions about asset performance.

Fraud detection systems monitor transaction patterns to identify suspicious activities that deviate from normal customer behavior. Time series models establish baseline patterns for individual accounts and flag transactions that represent significant departures from established spending habits. These systems can detect credit card fraud and money laundering schemes by recognizing unusual temporal patterns in account activity.
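
A per-account baseline can be as simple as a rolling mean and standard deviation of recent transactions, with large deviations flagged for review. A minimal sketch of that idea; the 10-transaction window and 3-standard-deviation threshold are arbitrary illustrative choices, and real fraud systems combine many more signals:

```python
import statistics
from typing import List, Sequence


def flag_anomalies(amounts: Sequence[float], window: int = 10, threshold: float = 3.0) -> List[bool]:
    """Flag amounts more than `threshold` standard deviations from the recent baseline.

    The first `window` transactions only build the baseline and are never flagged.
    """
    flags: List[bool] = []
    for t, amount in enumerate(amounts):
        history = amounts[max(0, t - window) : t]
        if len(history) < window:
            flags.append(False)
            continue
        mean = statistics.fmean(history)
        sd = statistics.pstdev(history)
        if sd == 0:
            flags.append(amount != mean)  # any change from a perfectly flat baseline
        else:
            flags.append(abs(amount - mean) > threshold * sd)
    return flags


account = [42, 38, 45, 40, 41, 39, 44, 43, 40, 42, 41, 950, 40]
print(flag_anomalies(account))  # only the 950 transaction is flagged
```

Because the outlier itself enters the next baseline, it inflates the standard deviation for the following `window` steps; a median-based baseline would be less sensitive to that.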

Risk modeling applications use time series AI to assess the probability of loan defaults, market volatility, and other financial risks. Banks combine historical account performance data with external economic indicators to predict which customers are most likely to default on loans or credit obligations.

Energy and Utilities Management

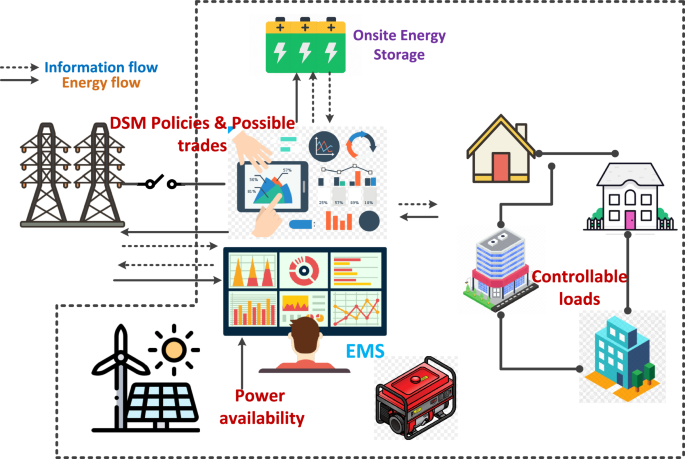

Energy companies use time series AI to predict electrical demand patterns by capturing both daily consumption cycles and seasonal variations. These models process data from smart meters across entire electrical grids, forecasting energy needs hours or days in advance to optimize power generation and distribution. Grid optimization applications balance supply and demand in real time, reducing costs and helping prevent blackouts during peak usage periods.
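
A simple way to capture the daily consumption cycle is a seasonal profile: forecast each hour of the next day as the average load at that hour over recent days. This is a baseline sketch, not how any particular utility forecasts; the default 7-day window is an assumption, and production models add weather and calendar inputs:

```python
from typing import List, Sequence


def daily_profile_forecast(history: Sequence[float], days: int = 7, period: int = 24) -> List[float]:
    """Forecast the next `period` steps as the mean of the same step over the last `days` periods."""
    if len(history) < days * period:
        raise ValueError("need at least `days` full periods of history")
    recent = history[-days * period :]
    return [
        sum(recent[d * period + h] for d in range(days)) / days
        for h in range(period)
    ]


# Tiny example with 4 readings per "day" instead of 24 to keep it readable.
load = [10, 20, 30, 20, 12, 22, 32, 22, 14, 24, 34, 24]
print(daily_profile_forecast(load, days=3, period=4))  # [12.0, 22.0, 32.0, 22.0]
```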

Source: Nature

Renewable energy prediction presents unique challenges because solar and wind power generation depends heavily on weather conditions. Time series models integrate meteorological forecasts with historical generation data to predict renewable energy output, helping grid operators balance variable renewable sources with traditional power generation.

Smart meter data analysis processes millions of individual household and business consumption measurements to identify usage patterns and detect anomalies. AI models can identify potential equipment failures in the electrical grid by detecting unusual power consumption patterns or voltage fluctuations.

Choosing the Right Time Series AI Model

Assessing Your Data Requirements

Data frequency influences which models can effectively capture temporal patterns in your time series. High-frequency data collected at intervals of seconds or minutes may suit models like LSTMs that can process rapid changes and maintain context across short time windows. Daily or weekly data often works well with transformer architectures that can identify longer-term patterns and seasonal cycles.

The length of historical records influences model selection significantly. A common rule of thumb is that traditional statistical methods like ARIMA need at least 50 to 100 observations to identify reliable patterns, while deep learning approaches typically need hundreds or thousands of data points to train effectively. Foundation models can work with shorter historical records because they leverage knowledge from pre-training on massive datasets.

Source: ResearchGate

- Simple seasonality: Clear daily, weekly, or monthly cycles work well with traditional methods or basic neural networks

- Complex seasonality: Multiple overlapping cycles or irregular patterns may benefit from transformer architectures with attention mechanisms

- Data quality: Missing values and outliers affect model types differently, and foundation models may show greater robustness

Performance and Implementation Considerations

Acceptable prediction accuracy levels determine the complexity of models worth considering for your application. Applications requiring high precision, such as financial trading or medical diagnosis, justify the computational cost of advanced transformer architectures or ensemble methods that combine multiple models. Use cases with moderate accuracy requirements may achieve sufficient performance with simpler approaches.

Latency requirements separate real-time applications from batch processing scenarios. Real-time forecasting for applications like algorithmic trading requires models that generate predictions within milliseconds. Compact architectures can deliver fast inference times suitable for real-time deployment, while batch processing applications can utilize larger, more accurate models.

Available technical infrastructure constrains the complexity of models that organizations can deploy effectively. Standard laptop computers can run compact models that require minimal computational resources while still delivering competitive accuracy. Organizations with graphics processing units can implement larger transformer architectures that benefit from parallel processing capabilities.

Getting Started With Time Series AI

Start by examining your existing data collection processes to understand what temporal information you already capture. Look for datasets with regular time intervals, sufficient historical depth, and minimal gaps in observations. Data stored in different formats or systems may require integration work before AI models can process it effectively.

Identify high-impact use cases by examining areas where improved predictions would create substantial value for your organization. Common opportunities include demand forecasting for inventory management, equipment maintenance scheduling based on sensor data, and financial planning through revenue predictions. Focus on problems where you have adequate historical data and where forecast accuracy directly influences important business decisions.

Select initial pilot projects based on data availability, potential impact, and implementation complexity. Successful pilots typically involve well-defined forecasting problems with clear success metrics and stakeholder buy-in. Choose use cases where you can measure prediction accuracy against known outcomes and where improved forecasts would lead to measurable operational improvements.

Establish baseline performance using simple statistical methods before implementing advanced AI approaches. Traditional techniques like moving averages or seasonal decomposition provide reference points for evaluating AI model improvements. Document current forecasting methods and their accuracy levels to quantify the value of AI-powered enhancements.
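
A sketch of such a baseline comparison: three simple forecasters (repeat the last value, repeat the same day from last week, and a flat 7-day moving average) scored by mean absolute error on a held-out week. The sales figures are made up; the procedure is the point, and any AI model would then be scored the same way on the same held-out period:

```python
from typing import Callable, Dict, List, Sequence

Forecaster = Callable[[Sequence[float], int], List[float]]


def mae(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def naive(history: Sequence[float], horizon: int) -> List[float]:
    return [history[-1]] * horizon  # repeat the last observation


def seasonal_naive(history: Sequence[float], horizon: int, period: int = 7) -> List[float]:
    # Repeat the value from the same position in the most recent full season.
    return [history[len(history) - period + (h % period)] for h in range(horizon)]


def moving_average(history: Sequence[float], horizon: int, window: int = 7) -> List[float]:
    return [sum(history[-window:]) / window] * horizon  # flat forecast at the recent mean


weekly = [100, 120, 130, 125, 140, 90, 80]  # made-up daily sales with a weekly cycle
history = weekly * 3                         # three weeks of history
actual = [v + 2 for v in weekly]             # the held-out fourth week
baselines: Dict[str, Forecaster] = {
    "naive": naive,
    "seasonal_naive": seasonal_naive,
    "moving_average": moving_average,
}
scores = {name: mae(actual, f(history, len(actual))) for name, f in baselines.items()}
print(scores)
```

On this strongly weekly series the seasonal naive forecast is the hardest to beat, which is the kind of reference point an AI model should have to clear.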

Consider starting with foundation models or pre-trained architectures that can be fine-tuned for your specific use cases. These approaches often require less historical data and shorter development cycles compared to training custom models from scratch. Cloud-based platforms and open-source tools provide accessible entry points for organizations without extensive AI expertise.

FAQs About Time Series AI

What minimum amount of historical data is required for time series AI models?

Foundation models can perform zero-shot forecasting, meaning they need no training on your data, although they still take the recent history of the series as input context. Traditional deep learning approaches, by contrast, typically need hundreds to thousands of data points for training. Google’s TimesFM can generate predictions without domain-specific training data by applying patterns learned from its massive pre-training dataset.

The amount of historical data needed depends on the complexity of patterns in your specific use case. Simple seasonal patterns might require only a few cycles of historical data, while complex multi-variable relationships benefit from longer historical records that capture various conditions and scenarios.

How do time series AI models handle missing values and data gaps?

Time series AI uses interpolation techniques and imputation methods to fill gaps in historical data. Linear interpolation draws straight lines between known values, while more sophisticated methods consider seasonal patterns or trends when filling gaps. Advanced models can learn to handle missing data patterns and maintain prediction accuracy without requiring all gaps to be filled manually.

Forward filling carries the last known value forward to cover subsequent gaps, including any at the end of a series, while back filling propagates the next known value backward, which covers gaps at the beginning of a series.
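
Both fills take only a few lines. The sketch below shows the direction in which each one propagates values; pandas users would typically call `Series.ffill()` and `Series.bfill()`, which treat gaps the same way:

```python
from typing import List, Optional, Sequence


def forward_fill(values: Sequence[Optional[float]]) -> List[Optional[float]]:
    """Carry the last observed value forward; gaps before the first observation stay None."""
    filled: List[Optional[float]] = []
    last: Optional[float] = None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled


def back_fill(values: Sequence[Optional[float]]) -> List[Optional[float]]:
    """Carry the next observed value backward; gaps after the last observation stay None."""
    return forward_fill(list(values)[::-1])[::-1]


series = [None, None, 5.0, None, 7.0, None]
print(forward_fill(series))             # [None, None, 5.0, 5.0, 7.0, 7.0]
print(back_fill(series))                # [5.0, 5.0, 5.0, 7.0, 7.0, None]
print(back_fill(forward_fill(series)))  # [5.0, 5.0, 5.0, 5.0, 7.0, 7.0]
```

Forward filling first and then back filling only affects the leading gap in the second pass, which is a common way to leave no missing values at either edge.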

What accuracy improvements can organizations expect from time series AI compared to traditional methods?

Source: ResearchGate

Research demonstrates that AI models can reduce forecasting errors by significant margins compared to statistical methods, particularly when dealing with large datasets containing multiple variables and extended time horizons. However, actual improvements vary based on data quality, pattern complexity, and implementation approach.

The most substantial accuracy gains typically occur when historical data contains complex, non-linear patterns that traditional statistical methods struggle to capture. Simple datasets with clear linear trends might see modest improvements, while complex multi-variable datasets often show larger gains with AI approaches.

How long does implementation typically take for time series AI projects?

Implementation timelines range from roughly three to six weeks for simple forecasting projects that use readily available data and pre-built foundation models to several months for complex enterprise deployments.

Enterprise deployments require longer timelines because they involve multiple data sources, custom model development, and integration with existing business systems. Data preparation often consumes the largest portion of implementation time, particularly when historical records require extensive cleaning or exist in multiple formats across different departments.

What computational resources are required to run time series AI models?

Computational requirements vary significantly across different model types and deployment scenarios. Compact architectures like IBM’s TinyTimeMixer can run on standard laptop computers while delivering competitive accuracy, making sophisticated forecasting accessible without specialized hardware.

Cloud-based deployment enables access to specialized hardware for training and inference without local infrastructure investment. Organizations can start with minimal computational resources and scale up as their time series AI initiatives expand and demonstrate value.