ISO 42001 is an international standard that provides organizations with a framework for managing artificial intelligence systems responsibly and ethically.

Organizations across industries now face increasing pressure to govern their AI systems properly. The emergence of powerful AI technologies has created new risks around bias, privacy, security, and transparency. Without proper governance, AI systems can produce discriminatory outcomes, violate regulations, or damage organizational reputation.

ISO 42001 offers a structured approach to these challenges. The standard establishes requirements for creating an AI Management System that addresses risks throughout the AI lifecycle. Organizations that implement ISO 42001 gain a systematic way to develop, deploy, and monitor AI systems while maintaining accountability and trust.

Implementation requires significant organizational commitment spanning multiple departments. The process typically takes four to nine months and involves gap analysis, policy development, risk management, documentation, and staff training. Organizations with existing management systems like ISO 27001 can leverage their current governance structures to accelerate implementation.

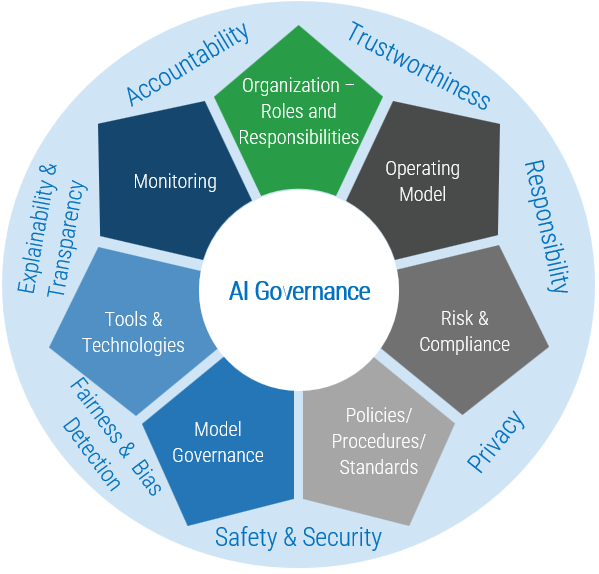

Understanding ISO 42001 for AI Governance

ISO 42001 helps organizations manage artificial intelligence systems through structured governance processes. Released in December 2023, this international standard applies to any organization that develops, provides, or uses AI-based products and services, regardless of size or industry.

AI systems create governance challenges that traditional software management practices don’t fully address. Unlike conventional software, whose behavior is explicitly programmed, AI systems learn patterns from data and can make or inform decisions with limited human involvement. Without proper oversight, they can exhibit bias, produce incorrect outcomes, or cause unintended harm.

The standard follows a Plan-Do-Check-Act methodology similar to other ISO management systems. Organizations establish context and scope for their AI Management System first. Leadership commitment and resource allocation form the foundation for successful implementation.

Core Requirements of the AI Management System

ISO 42001 organizes its requirements into seven main areas, corresponding to clauses 4 through 10 of the standard, that work together to create comprehensive AI oversight:

Context establishment involves identifying internal factors like governance structures and organizational objectives. Organizations document external factors including legal requirements and stakeholder expectations. They define interested parties and their expectations regarding AI governance.

Leadership commitment requires top management to demonstrate visible support through resource allocation. Organizations establish AI policies that outline their approach to responsible AI development. Clear roles and responsibilities for AI governance are assigned throughout the organization.

Planning includes conducting comprehensive risk assessments for AI systems across technical, ethical, legal, and societal dimensions. Organizations perform AI Impact Assessments for high-risk systems that significantly affect individuals or groups. Risk treatment plans with specific controls address identified risks.

Support requires allocating adequate financial, technological, and human resources for governance activities. Organizations ensure personnel have the competencies required for their AI governance responsibilities. Communication channels for governance policies and requirements are established.

Operation implements processes for AI system development, acquisition, deployment, and monitoring. Organizations establish data governance procedures for collection, validation, preparation, and protection. Operational controls for AI system use and human oversight are created.

Performance evaluation monitors and measures AI governance effectiveness through defined metrics. Organizations conduct regular internal audits to assess compliance with requirements. Management reviews evaluate system performance and identify improvements.

Improvement addresses nonconformities through corrective actions. Organizations implement continuous improvement processes to enhance governance effectiveness. The management system adapts in response to new risks or technological developments.

Key Differences from Information Security Standards

ISO 42001 differs significantly from information security standards like ISO 27001 in scope and focus. While ISO 27001 concentrates on data confidentiality, integrity, and availability, ISO 42001 addresses AI system governance including fairness, transparency, safety, and ethics.

Risk scope varies between the standards. ISO 42001 evaluates algorithmic bias, model drift, explainability, and ethical impacts. ISO 27001 focuses on cybersecurity threats, data breaches, and access control.

Technical controls differ substantially. ISO 42001 requires bias testing, model monitoring, fairness validation, and human oversight. ISO 27001 emphasizes encryption, access controls, network security, and incident response.

Assessment requirements also diverge. ISO 42001 mandates AI Impact Assessments for high-risk systems. ISO 27001 requires security risk assessments for information assets.

Organizations with existing ISO 27001 certifications can leverage established governance structures when implementing ISO 42001. However, AI-specific requirements demand additional controls and expertise that information security frameworks don’t address.

Conducting Your ISO 42001 Gap Analysis

A comprehensive gap analysis identifies where current practices align with ISO 42001 requirements and where improvements are needed. This assessment serves as a roadmap that guides implementation priorities, resource allocation, and timeline development.

Organizations assemble cross-functional assessment teams that include representatives from IT, data science, compliance, legal, risk management, and relevant business units. This diverse team ensures technical, governance, ethical, legal, and business perspectives are represented throughout the evaluation.

The team systematically reviews each clause of ISO 42001 and evaluates current organizational practices against the standard’s requirements. Organizations document their compliance status for each requirement using categories like “Compliant,” “Partially Compliant,” or “Not Compliant” with detailed explanations.
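A gap register can be kept in a spreadsheet, but the same idea is easy to express as data. The sketch below is a minimal illustration that assumes a hypothetical `GapItem` record and illustrative clause labels. ISO 42001 does not prescribe this format. It records a status and explanation for each requirement, counts requirements by status, and lists open gaps with "Not Compliant" items first so they can drive implementation priorities.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    COMPLIANT = "Compliant"
    PARTIAL = "Partially Compliant"
    NOT_COMPLIANT = "Not Compliant"


@dataclass
class GapItem:
    clause: str       # illustrative clause label (hypothetical register format)
    status: Status
    explanation: str  # why the assessment team assigned this status


def summarize(items: list[GapItem]) -> dict[str, int]:
    """Count requirements per compliance status, including zero counts."""
    counts = Counter(item.status for item in items)
    return {status.value: counts[status] for status in Status}


def open_gaps(items: list[GapItem]) -> list[GapItem]:
    """Requirements that still need work, Not Compliant before Partially Compliant."""
    priority = {Status.NOT_COMPLIANT: 0, Status.PARTIAL: 1}
    gaps = [item for item in items if item.status is not Status.COMPLIANT]
    return sorted(gaps, key=lambda item: priority[item.status])


register = [
    GapItem("6.1 Risk assessment", Status.PARTIAL, "Existing ISO 27001 method omits bias risks"),
    GapItem("5.2 AI policy", Status.NOT_COMPLIANT, "No AI policy exists"),
    GapItem("7.2 Competence", Status.COMPLIANT, "Training matrix covers AI roles"),
]

print(summarize(register))
for gap in open_gaps(register):
    print(f"{gap.clause}: {gap.status.value} ({gap.explanation})")
```

Keeping the explanation next to each status supports the "detailed explanations" the assessment calls for, and it gives auditors a traceable starting point later.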

Assessing Current AI Governance Maturity

Organizations evaluate their AI governance maturity across multiple dimensions to understand their starting point for ISO 42001 implementation. The assessment examines existing policies, procedures, controls, and organizational structures related to AI development and management.

The maturity assessment framework evaluates organizations across five levels:

- Initial Level — No formal AI governance policies or procedures exist, with ad hoc risk management

- Basic Level — Some AI policies exist but may be incomplete, with limited documentation

- Developing Level — Formal AI policies are established but may not cover all systems consistently

- Managed Level — Comprehensive AI policies and procedures are documented and consistently followed

- Optimized Level — AI governance is integrated throughout organizational culture with continuous improvement

Organizations typically fall into different maturity levels across various aspects of AI governance. An organization might have well-developed data governance practices but lack formal AI ethics policies or bias assessment procedures.
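Maturity is usually uneven, so it helps to record a level for each dimension instead of one overall score. The sketch below maps the five levels to ordered integers. The dimension names and results are hypothetical. It then lists dimensions below a target level, weakest first. Choosing "Managed" as the target is an assumption for illustration, not an ISO 42001 requirement.

```python
from enum import IntEnum


class Maturity(IntEnum):
    INITIAL = 1
    BASIC = 2
    DEVELOPING = 3
    MANAGED = 4
    OPTIMIZED = 5


# Hypothetical assessment results for one organization
profile = {
    "data governance": Maturity.MANAGED,
    "AI ethics policy": Maturity.INITIAL,
    "bias assessment": Maturity.BASIC,
    "risk management": Maturity.DEVELOPING,
}


def below_target(profile: dict[str, Maturity], target: Maturity) -> list[str]:
    """Dimensions below the target level, weakest first."""
    lagging = [dim for dim, level in profile.items() if level < target]
    return sorted(lagging, key=lambda dim: profile[dim])


print(below_target(profile, Maturity.MANAGED))
```

In this example, strong data governance does not hide the missing ethics policy. The ethics policy shows up first in the list of dimensions to address.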

Identifying Implementation Resource Requirements

ISO 42001 implementation requires allocation of financial, human, and technological resources across multiple organizational functions. Organizations conduct resource requirements analysis to understand necessary investments for establishing and maintaining their AI management system.

Financial resources include external consulting services, software tools for AI governance and monitoring, training programs, and external audit fees. Small organizations with limited AI systems typically spend $4,000 to $20,000 for complete implementation and certification.

Human capital encompasses dedicated AI governance personnel, subject matter expertise in data science and ethics, and cross-functional team members contributing part-time to governance activities. Organizations typically need 2-5 full-time equivalents for mid-sized implementations.

Technology infrastructure involves AI monitoring and management platforms, documentation systems, risk assessment tools, and integration capabilities with existing IT systems.

Training and development includes executive-level training for senior leadership and technical-level training for engineers.

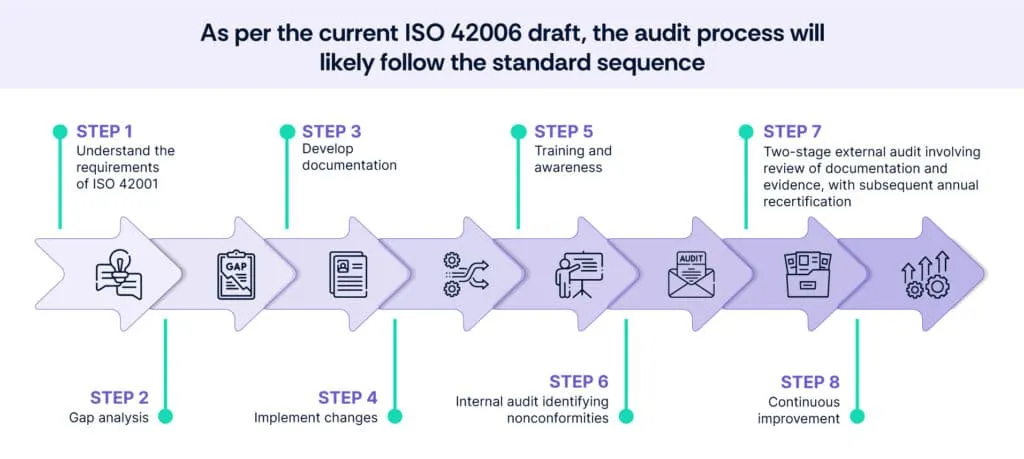

Creating Your Implementation Timeline

ISO 42001 implementation follows a phased approach that builds foundational governance elements before progressing to more complex operational controls. Organizations typically require four to nine months for complete implementation, though this timeline varies based on size and existing governance maturity.

The foundation phase defines the organizational context and scope. Organizations create AI policies and governance frameworks, assign roles and responsibilities, and conduct comprehensive risk assessments. This phase typically represents the longest portion of implementation.

The development phase implements operational procedures for AI system development and acquisition. Organizations establish data governance processes, create training programs, and develop documentation systems. This phase can’t begin until policies and risk frameworks are established.

The deployment phase implements monitoring systems, executes organization-wide training programs, and begins operational use of governance procedures. Deployment depends on completion of procedure development and requires coordination across multiple functions.

Critical path activities that influence overall timeline include leadership approval of policies, completion of risk assessments for high-risk AI systems, development of operational procedures, and organization-wide training program execution. Organizations often find that training delivery and internal audit completion represent timeline bottlenecks.
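To see how dependencies determine the overall timeline, treat the activities as a dependency graph and find the longest chain. The sketch below computes earliest finish times and walks back along the critical path. The activity names, durations in weeks, and dependencies are hypothetical and chosen for illustration, not benchmarks.

```python
from functools import lru_cache

# Hypothetical activities: name -> (duration in weeks, prerequisites)
ACTIVITIES = {
    "scope and context": (3, []),
    "AI policy approval": (4, ["scope and context"]),
    "risk assessments": (8, ["scope and context"]),
    "operational procedures": (6, ["AI policy approval", "risk assessments"]),
    "monitoring setup": (4, ["operational procedures"]),
    "training rollout": (5, ["operational procedures"]),
    "internal audit": (3, ["monitoring setup", "training rollout"]),
}


@lru_cache(maxsize=None)
def earliest_finish(name: str) -> int:
    """Earliest finish week: own duration plus the latest prerequisite finish."""
    duration, prereqs = ACTIVITIES[name]
    return duration + max((earliest_finish(p) for p in prereqs), default=0)


def critical_path() -> list[str]:
    """Walk back from the last-finishing activity through its latest prerequisite."""
    current = max(ACTIVITIES, key=earliest_finish)
    path = [current]
    while ACTIVITIES[current][1]:
        current = max(ACTIVITIES[current][1], key=earliest_finish)
        path.append(current)
    return list(reversed(path))


print(critical_path(), earliest_finish("internal audit"))
```

With these assumed numbers, the path runs through risk assessments, procedures, training, and the internal audit, for 25 weeks in total. Shortening policy approval would not change the end date. Shortening training delivery would.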

Building Your AI Governance Framework

Creating an effective AI governance framework requires systematic planning and clear organizational structure. Organizations work through three core phases: securing leadership commitment, defining scope and context, and assigning specific roles and responsibilities.

Establishing Leadership Commitment and Oversight

Executive sponsorship forms the foundation of successful AI governance implementation. Senior leadership demonstrates commitment through resource allocation, policy establishment, and visible participation in governance activities.

Organizations typically establish AI governance committees comprising senior representatives from key functions. These governance bodies meet regularly to review AI governance status, approve policies, oversee risk management, and monitor implementation progress.

Leadership responsibilities include providing adequate resources for governance implementation, approving AI governance policies aligned with organizational strategy, and designating specific individuals with defined authorities. The governance committee structure includes clear decision-making authority for AI system approvals and risk acceptance.

Defining Organizational Context and Scope

Organizations establish governance boundaries through comprehensive context analysis and scope definition. Context analysis identifies internal and external factors influencing AI governance decisions.

Internal factors encompass governance structures, organizational objectives, existing policies, contractual obligations, and technical capabilities. External factors include regulatory requirements, industry standards, competitive pressures, and societal expectations regarding AI ethics.

Scope definition establishes which AI systems, processes, and organizational functions fall within governance boundaries. Effective scope statements specify system coverage, lifecycle stages, organizational functions, geographic boundaries, and regulatory requirements.

Organizations create scope diagrams showing AI systems under governance, their relationships to organizational functions, and risk classifications. These visual representations help stakeholders understand governance coverage and provide auditors with clear scope boundaries.

Assigning AI Governance Roles and Responsibilities

Effective AI governance requires clearly defined roles with specific responsibilities and accountability structures. Organizations assign governance roles across technical, operational, and oversight functions.

Source: Info-Tech

Core governance roles include:

- Chief AI Officer or AI Governance Lead — Overall management system effectiveness and strategic direction

- AI Risk Manager — Risk assessment methodology development and risk register maintenance

- AI Ethics Lead — Fairness assessment and ethical review of high-risk applications

- Data Protection Officer — Data governance coordination and privacy compliance

- AI System Owners — Day-to-day governance for specific AI systems

Organizations document role definitions with required competencies, accountability relationships, and specific governance activities. Committee structures support individual roles through cross-functional coordination and clear escalation paths.

Implementing AI Risk Management Processes

Source: Saidot

AI risk management forms the backbone of effective AI governance, requiring organizations to systematically identify, evaluate, and address risks across technical, ethical, legal, and business dimensions. Unlike traditional IT risk management focused on data security, AI risk management encompasses unique challenges including algorithmic bias and model degradation.

The risk management process begins with establishing organizational risk criteria that define acceptable risk levels across different AI system categories. Organizations develop risk appetites that vary based on system criticality — accepting higher false positive rates for recommendation engines while requiring near-perfect accuracy for medical diagnosis systems.
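Risk criteria become testable once they are written down per system category. The sketch below is a minimal illustration in which the categories and thresholds are hypothetical values that each organization would set for itself. It shows how the same measured performance can fall within appetite for a recommendation engine but outside it for a medical diagnosis system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskCriteria:
    min_accuracy: float             # lowest acceptable accuracy on the evaluation set
    max_false_positive_rate: float  # highest acceptable false positive rate


# Hypothetical criteria; each organization defines its own values
CRITERIA = {
    "recommendation": RiskCriteria(min_accuracy=0.80, max_false_positive_rate=0.20),
    "medical_diagnosis": RiskCriteria(min_accuracy=0.99, max_false_positive_rate=0.01),
}


def within_appetite(category: str, accuracy: float, fpr: float) -> bool:
    """True if measured performance meets the criteria for the system's category."""
    c = CRITERIA[category]
    return accuracy >= c.min_accuracy and fpr <= c.max_false_positive_rate


# The same measured performance passes for one category and fails for the other
print(within_appetite("recommendation", accuracy=0.93, fpr=0.05))     # True
print(within_appetite("medical_diagnosis", accuracy=0.93, fpr=0.05))  # False
```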

Risk identification spans the entire AI lifecycle from data collection through deployment and monitoring. Common risks include biased training data during collection, algorithmic bias during development, and performance degradation during deployment.

Conducting AI Impact Assessments

Source: ResearchGate

AI Impact Assessments (AIIAs) represent specialized evaluations required for high-risk AI systems that could significantly affect individuals, groups, or society. These assessments extend beyond traditional risk analysis by examining how AI systems might impact human rights, autonomy, and fairness.

The AIIA process starts with clearly documenting the AI system’s intended purpose, scope of application, and expected benefits. Organizations then identify all stakeholders who might be affected by the system, including direct users and communities that could experience secondary effects.

Impact evaluation examines multiple dimensions:

- Fairness and non-discrimination impacts — Whether the system could produce different outcomes for protected classes

- Privacy and data protection impacts — How personal data is collected, processed, and stored

- Safety and security impacts — Whether system failures could endanger human health or safety

- Transparency and explainability impacts — Whether affected individuals can understand system decisions

- Human autonomy impacts — Whether the system preserves meaningful human choice

Each identified impact is evaluated for likelihood and severity, considering both immediate effects and longer-term consequences. The assessment includes consultation with affected stakeholders where possible.
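A common way to combine likelihood and severity is a scoring matrix. ISO 42001 does not prescribe a particular method, so the 1 to 5 scales, the multiplication, and the rating thresholds below are assumptions. The example impacts are hypothetical entries for a loan-screening system.

```python
# Hypothetical 1-5 scales; ISO 42001 does not prescribe a scoring method
LIKELIHOOD = {"rare": 1, "unlikely": 2, "possible": 3, "likely": 4, "almost certain": 5}
SEVERITY = {"negligible": 1, "minor": 2, "moderate": 3, "major": 4, "critical": 5}


def impact_score(likelihood: str, severity: str) -> int:
    """Multiply the two ordinal ratings into a 1-25 score."""
    return LIKELIHOOD[likelihood] * SEVERITY[severity]


def rating(score: int) -> str:
    """Map a score to a band (thresholds are illustrative assumptions)."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


impacts = [
    ("fairness: higher rejection rate for one age group", "likely", "major"),
    ("transparency: applicants cannot see decision reasons", "likely", "moderate"),
    ("privacy: retention of unused applicant fields", "unlikely", "minor"),
]
for description, likelihood, severity in impacts:
    score = impact_score(likelihood, severity)
    print(f"{score:>2} {rating(score):<6} {description}")
```

A multiplicative matrix is simple to explain to stakeholders. Its main weakness is that very different combinations can share a score, so many teams review high-severity items individually regardless of their score.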

Developing Risk Treatment Strategies

Risk treatment involves selecting and implementing specific approaches to address identified risks based on organizational risk appetite and available resources. Organizations typically employ four primary strategies, often in combination.

Risk mitigation implements controls to reduce risk likelihood or impact. Common mitigation strategies include technical safeguards like input validation and output filtering, algorithmic controls including bias detection algorithms, and procedural controls such as human oversight requirements.

Risk acceptance occurs when organizations determine that risk levels are within acceptable tolerances given the system’s benefits. Acceptance decisions require formal documentation of rationale, clear definition of acceptable thresholds, and regular review processes.

Risk avoidance eliminates risks by not deploying AI systems or by modifying system design to remove risk sources. Avoidance strategies include choosing not to automate decisions in high-risk domains or using simpler, more interpretable models.

Risk transfer shifts responsibility to other parties through contractual arrangements or insurance products. Transfer mechanisms include insurance policies covering AI-related liabilities and contractual liability allocation with vendors.
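Because strategies are often combined and acceptance needs formal documentation, it can help to validate treatment decisions as structured records. The sketch below uses a hypothetical `TreatmentDecision` record. It flags mitigation without specific controls, and acceptance that lacks a documented rationale, a defined threshold, or a review date. These checks mirror the requirements described above, not a format defined by the standard.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Optional


class Treatment(Enum):
    MITIGATE = "mitigate"
    ACCEPT = "accept"
    AVOID = "avoid"
    TRANSFER = "transfer"


@dataclass
class TreatmentDecision:
    risk_id: str
    strategies: list[Treatment]  # strategies are often combined
    controls: list[str]          # specific controls planned for mitigation
    rationale: str = ""
    acceptable_threshold: Optional[str] = None
    next_review: Optional[date] = None

    def problems(self) -> list[str]:
        """Documentation gaps that make this decision incomplete."""
        issues = []
        if Treatment.MITIGATE in self.strategies and not self.controls:
            issues.append("mitigation without specific controls")
        if Treatment.ACCEPT in self.strategies:
            if not self.rationale:
                issues.append("acceptance without documented rationale")
            if self.acceptable_threshold is None:
                issues.append("acceptance without defined threshold")
            if self.next_review is None:
                issues.append("acceptance without review date")
        return issues


decision = TreatmentDecision(
    risk_id="R-012",  # hypothetical risk register identifier
    strategies=[Treatment.MITIGATE, Treatment.ACCEPT],
    controls=["pre-release bias test", "human review of declined applications"],
    rationale="Residual disparity is small relative to the service benefit",
)
print(decision.problems())
```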

Managing Third-Party AI Vendors and Systems

Third-party AI components introduce additional governance complexity because organizations retain ultimate responsibility for compliance while relying on external providers. Vendor management requires comprehensive due diligence, contractual protections, and ongoing monitoring.

Vendor assessment evaluates potential AI vendors across multiple governance dimensions before engaging their services. The due diligence process includes AI governance maturity assessment, security posture evaluation, technical capabilities review, and compliance documentation verification.

AI vendor contracts establish governance expectations and allocate responsibility for compliance activities. Key contractual provisions include data protection clauses restricting vendor use of customer data, performance guarantees specifying acceptable accuracy rates, and transparency requirements for model documentation.

Ongoing vendor oversight continues throughout the business relationship through regular performance monitoring, compliance verification, incident reporting, and periodic risk reassessment. Organizations establish escalation procedures for addressing vendor non-compliance.

Developing ISO 42001 Documentation

Source: Total Quality Management – WordPress.com

Documentation is central to ISO 42001 compliance, providing auditors with concrete evidence that an organization has established and implemented an artificial intelligence management system. The documentation framework includes governance policies, procedures that define work processes, and records that prove compliance activities occurred.

Organizations typically create 15-25 core documents to meet ISO 42001 requirements, though the exact number depends on organizational size and AI system complexity. Well-organized documentation enables personnel to understand their responsibilities and execute governance activities consistently.

Creating Your AI Governance Policy

The AI governance policy serves as the foundational document expressing organizational commitment to responsible AI development and use. This high-level policy statement articulates the organization’s position on AI ethics and establishes governance principles.

An effective AI governance policy typically spans 3-5 pages and addresses core elements systematically. The policy opens with a clear purpose statement explaining why the organization established AI governance. The scope section defines which AI systems and organizational functions fall under the policy’s authority.

The principles section establishes fundamental commitments such as fairness, transparency, accountability, security, and privacy protection, and explains what each principle means in the organization’s context. For example, the transparency principle might commit the organization to ensuring that affected individuals understand how AI systems make decisions that impact them.

The responsibilities section identifies key roles in AI governance, including executive leadership, governance committee members, system owners, and individual contributors. The compliance section establishes commitment to meeting applicable regulations and industry standards.

Policy approval requires formal endorsement by senior management through executive signature and effective date assignment. The organization distributes the policy to all personnel involved in AI development or oversight activities through training programs and organizational intranets.

Establishing Required Procedures and Controls

ISO 42001 requires organizations to document specific procedures that govern how AI systems are managed throughout their lifecycle. These procedures translate high-level policy commitments into detailed work instructions that personnel follow.

Core procedures typically include an AI Risk Assessment Procedure defining how the organization identifies and evaluates risks, an AI Impact Assessment Procedure establishing requirements for evaluating high-risk systems, and an AI System Development Procedure specifying design and testing requirements.

Additional procedures cover data governance (how data is acquired and protected), system deployment (production environment requirements), and system monitoring (ongoing oversight requirements). Organizations also need incident response and change management procedures.

Each procedure follows consistent structure including purpose, scope, responsibilities, detailed process steps, required inputs and outputs, and related documentation. Procedures specify who performs each activity, what qualifications they require, and what evidence they must create.

Control implementation procedures address the specific ISO 42001 Annex A controls that an organization has determined apply to its risk profile. Organizations select controls appropriate to their circumstances rather than implementing every available control, and they record which controls are included or excluded, with justifications, in a Statement of Applicability.
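Selecting controls produces a record of which controls apply and why; ISO 42001 calls this a statement of applicability. The sketch below is a minimal illustration. The control identifiers are hypothetical placeholders, not actual Annex A references. It flags entries that lack a justification, and controls marked applicable that are not yet implemented.

```python
from dataclasses import dataclass


@dataclass
class ControlDecision:
    control_id: str      # hypothetical identifier, not an actual Annex A reference
    applicable: bool
    justification: str   # reason for inclusion or exclusion
    implemented: bool = False


soa = [
    ControlDecision("CTRL-DATA-QUALITY", True, "Models trained on customer data", True),
    ControlDecision("CTRL-THIRD-PARTY", True, "Uses a vendor-hosted language model"),
    ControlDecision("CTRL-IN-HOUSE-DEV", False, "No models developed internally"),
]


def incomplete(entries: list[ControlDecision]) -> list[str]:
    """Controls missing a justification, or applicable but not yet implemented."""
    return [
        e.control_id
        for e in entries
        if not e.justification or (e.applicable and not e.implemented)
    ]


print(incomplete(soa))  # ['CTRL-THIRD-PARTY']
```

The excluded control still carries a justification, which is what an auditor would look for when reviewing why a control does not apply.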

Maintaining Compliance Records and Evidence

ISO 42001 requires organizations to maintain comprehensive records demonstrating that governance activities occur as documented and achieve intended results. These records provide auditors with objective evidence of compliance.

Records fall into several categories based on purpose and retention requirements. Governance activity records document that required processes occurred, including risk assessment reports, impact assessment documentation, and training attendance records.

System-specific records track individual AI systems throughout their lifecycle, including design documentation, testing results, deployment approvals, and monitoring reports. Competence records prove that personnel possess necessary qualifications, including training certificates and competency assessments.

Evidence collection requirements specify what information organizations must capture during governance activities. Risk assessments must document identified risks, evaluation criteria, and treatment strategies. Impact assessments must document affected stakeholders and mitigation measures.

Retention periods vary based on record type and regulatory requirements. Governance framework documents remain active until superseded. Activity records typically require retention for at least three years to support multiple audit cycles.
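Retention rules are easy to apply consistently once they are encoded. The sketch below assumes a hypothetical mapping from record type to a minimum retention period in years. Framework documents map to `None`, meaning they are kept until superseded. The function returns the earliest date a record could be disposed of, and it handles records created on 29 February.

```python
from datetime import date
from typing import Optional

# Hypothetical retention rules in years; None means "keep until superseded"
RETENTION_YEARS = {
    "policy": None,
    "risk_assessment": 3,
    "training_record": 3,
}


def add_years(d: date, years: int) -> date:
    """Add whole years, moving 29 February to 28 February when needed."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)


def earliest_disposal(record_type: str, created: date) -> Optional[date]:
    """Earliest date the record may be disposed of, or None if kept until superseded."""
    years = RETENTION_YEARS[record_type]
    return None if years is None else add_years(created, years)


print(earliest_disposal("risk_assessment", date(2024, 2, 29)))  # 2027-02-28
print(earliest_disposal("policy", date(2024, 1, 15)))           # None
```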

Organizations establish document control systems ensuring records remain accessible, accurate, and protected from unauthorized modification. Electronic document management systems provide version control, access logging, and backup protection.

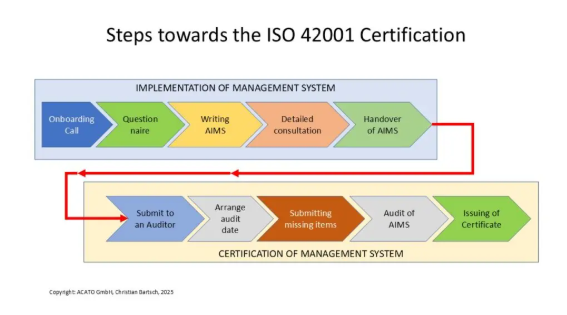

Preparing for ISO 42001 Certification

ISO 42001 certification requires organizations to undergo a formal audit process conducted by an accredited certification body. The certification process validates that an organization has implemented an effective Artificial Intelligence Management System that meets all standard requirements.

Organizations typically spend several months preparing for certification after implementing their governance framework. The preparation phase involves conducting readiness assessments, organizing documentation, training personnel, and addressing remaining gaps.

Conducting Pre-Audit Readiness Assessment

A pre-audit readiness assessment serves as a mock certification audit that identifies remaining gaps before the formal audit occurs. Organizations conduct this assessment using internal audit teams or external consultants to evaluate compliance against all ISO 42001 requirements.

The assessment evaluates multiple dimensions of implementation including documentation completeness, personnel competence, process implementation, and risk management effectiveness. Assessment teams examine whether technical and procedural controls function as designed and achieve intended purposes.

The assessment results in a gap analysis report identifying major nonconformities that represent fundamental failures to meet requirements. Major nonconformities might include missing AI policies or absent risk assessment processes. The assessment also identifies minor findings representing less serious deficiencies.

Organizations address identified gaps before proceeding to formal certification. Major nonconformities require resolution before certification can proceed, while minor findings can often be resolved during the certification timeframe.
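The readiness rule above, where unresolved major nonconformities block progress and minor findings do not, can be expressed as a simple gate over the findings list. The sketch below assumes a hypothetical `Finding` record with illustrative finding references.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    MAJOR = "major nonconformity"
    MINOR = "minor nonconformity"
    OPPORTUNITY = "improvement opportunity"


@dataclass
class Finding:
    ref: str              # hypothetical finding reference and summary
    severity: Severity
    resolved: bool = False


def blocking(findings: list[Finding]) -> list[str]:
    """Unresolved major nonconformities that block the formal certification audit."""
    return [f.ref for f in findings if f.severity is Severity.MAJOR and not f.resolved]


findings = [
    Finding("F-01 no documented AI policy", Severity.MAJOR, resolved=True),
    Finding("F-02 risk assessment process not applied to vendor models", Severity.MAJOR),
    Finding("F-03 two training records unsigned", Severity.MINOR),
]
print(blocking(findings))
```

Here the unresolved minor finding does not block the audit, but F-02 does.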

Understanding the Certification Audit Process

Source: Scrut Automation

ISO 42001 certification follows the two-stage audit process that ISO/IEC 17021-1 requires of certification bodies. The certification body assigns qualified auditors who evaluate the organization’s management system against the standard’s requirements over several weeks.

Stage 1 typically occurs one to two weeks before Stage 2 and focuses on evaluating documentation. Auditors review policies, procedures, risk assessments, and training records to determine whether the documented system meets requirements. This stage assesses system design rather than operational effectiveness.

Stage 2 typically lasts one to three weeks depending on organizational size and scope. This stage evaluates whether the organization actually implements its documented system effectively. Auditors conduct personnel interviews, observe governance activities, and examine evidence of implementation.

Following Stage 2, auditors prepare a comprehensive audit report documenting their findings. If no major nonconformities prevent certification, the organization receives an ISO 42001 certificate valid for three years.

Common Implementation Mistakes to Avoid

Organizations frequently encounter similar challenges during implementation that can delay certification or result in audit findings. Understanding common mistakes helps organizations prepare more effectively.

Risk assessment and management mistakes include conducting superficial assessments that fail to identify AI-specific risks, focusing only on technical risks while ignoring ethical dimensions, and creating treatment plans that lack specific controls to address identified risks.

Documentation mistakes involve developing policies that are too generic, creating procedures that personnel can’t understand, maintaining incomplete records, and failing to establish clear approval authority for governance documents.

Personnel and training mistakes include providing insufficient training on governance requirements, failing to define clear roles and responsibilities, not verifying personnel competencies, and neglecting to train management on their specific responsibilities.

Operational implementation mistakes involve creating governance processes that are too complex to maintain consistently, failing to integrate governance with existing business processes, and not establishing adequate monitoring mechanisms.

Maintaining Ongoing ISO 42001 Compliance

Achieving ISO 42001 certification marks the beginning of ongoing compliance work, not the finish line. Certified organizations must maintain their governance systems continuously and demonstrate sustained compliance through regular surveillance audits.

The three-year certification cycle includes annual surveillance audits during years two and three, followed by comprehensive recertification. Organizations monitor their governance systems, conduct internal audits, and respond to findings throughout each year.
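For planning purposes, the cycle can be turned into approximate target dates. The sketch below places surveillance audits about 12 and 24 months after certification and the expiry at 36 months. It schedules recertification a few months before expiry. The three-month lead time is a hypothetical planning buffer, and actual dates are agreed with the certification body.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping to the last day of the target month."""
    year, month_index = divmod(d.year * 12 + (d.month - 1) + months, 12)
    month = month_index + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def certification_cycle(issued: date, recert_lead_months: int = 3) -> dict[str, date]:
    """Approximate target dates for a three-year certification cycle.

    recert_lead_months is a hypothetical buffer so that recertification
    completes before the certificate expires.
    """
    return {
        "surveillance audit (year 2)": add_months(issued, 12),
        "surveillance audit (year 3)": add_months(issued, 24),
        "recertification audit": add_months(issued, 36 - recert_lead_months),
        "certificate expiry": add_months(issued, 36),
    }


for event, when in certification_cycle(date(2025, 3, 10)).items():
    print(f"{when.isoformat()}  {event}")
```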

Implementing Continuous Monitoring Programs

Continuous monitoring under ISO 42001 covers two areas: governance control effectiveness and AI system performance. Governance monitoring evaluates whether established policies and procedures function as designed, while system monitoring tracks whether AI models perform within acceptable parameters.

Organizations establish key performance indicators measuring governance effectiveness across multiple dimensions. Common governance KPIs include the percentage of AI systems that completed required risk assessments, time elapsed between risk identification and control implementation, and percentage of personnel who completed required training.
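The three KPIs named above reduce to simple arithmetic over governance records. The sketch below computes them from hypothetical inventory, risk-closure, and training data. The record shapes are assumptions; in practice these values would come from a risk register and a training system.

```python
from datetime import date


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal place; 0.0 when there is nothing to measure."""
    return round(100 * part / whole, 1) if whole else 0.0


# Hypothetical inventory: AI system -> has a completed risk assessment
risk_assessed = {"support chatbot": True, "credit scoring": True, "resume screening": False}

# Hypothetical closed risks: (date identified, date control implemented)
closed_risks = [
    (date(2025, 1, 6), date(2025, 2, 3)),
    (date(2025, 1, 20), date(2025, 1, 27)),
]

staff_trained, staff_required = 47, 52  # hypothetical training counts

kpis = {
    "systems with risk assessment (%)": pct(sum(risk_assessed.values()), len(risk_assessed)),
    "mean days from risk to control": sum(
        (done - found).days for found, done in closed_risks
    ) / len(closed_risks),
    "required training completed (%)": pct(staff_trained, staff_required),
}
for name, value in kpis.items():
    print(f"{name}: {value}")
```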

Control effectiveness monitoring examines whether implemented controls achieve their intended purposes. If an organization implements bias testing controls to prevent discriminatory outcomes, monitoring tracks whether testing actually occurs before each deployment and whether it identifies bias issues that require correction.

AI system performance monitoring tracks metrics specific to individual systems such as prediction accuracy, false positive and negative rates, and fairness metrics measuring performance across demographic groups. Organizations establish performance baselines during deployment and track deviations.
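As a concrete example of tracking deviations from a baseline, the sketch below checks one monitoring window for an accuracy drop and for a gap in selection rates between groups. The gap is the demographic parity difference, one of several possible fairness metrics. The group labels, decisions, and tolerances are hypothetical, and other systems may call for different metrics, such as error-rate parity.

```python
def selection_rates(outcomes: dict[str, list[int]]) -> dict[str, float]:
    """Share of positive decisions (1) per demographic group."""
    return {group: sum(d) / len(d) for group, d in outcomes.items()}


def parity_gap(outcomes: dict[str, list[int]]) -> float:
    """Demographic parity difference: highest minus lowest selection rate."""
    rates = selection_rates(outcomes)
    return max(rates.values()) - min(rates.values())


def check(
    baseline_accuracy: float,
    current_accuracy: float,
    outcomes: dict[str, list[int]],
    max_drop: float = 0.03,  # hypothetical tolerance for accuracy loss
    max_gap: float = 0.10,   # hypothetical tolerance for the parity gap
) -> list[str]:
    """Return alerts for deviations from the deployment baseline."""
    alerts = []
    if baseline_accuracy - current_accuracy > max_drop:
        alerts.append("accuracy below baseline tolerance")
    if parity_gap(outcomes) > max_gap:
        alerts.append("selection-rate gap between groups too large")
    return alerts


# Hypothetical decisions from one monitoring window (1 = approved)
outcomes = {
    "group_a": [1, 1, 0, 1, 0, 1, 1, 0, 1, 1],
    "group_b": [1, 0, 0, 1, 0, 0, 1, 0, 0, 1],
}
print(selection_rates(outcomes))
print(check(baseline_accuracy=0.91, current_accuracy=0.86, outcomes=outcomes))
```

Alerts like these are natural inputs to the management reports described in the next paragraph.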

Monitoring data feeds into regular reports that management reviews during quarterly governance meetings. These reports highlight trends, identify emerging issues, and track progress on improvement initiatives.

Conducting Regular Internal Audits

Internal audits provide independent assessment of management system compliance with ISO 42001 requirements and organizational policies. Organizations conduct internal audits according to planned schedules, typically performing comprehensive annual audits supplemented by focused quarterly reviews.

Internal audit programs begin with audit planning that defines scope, objectives, and criteria for each audit. Comprehensive annual audits typically cover all ISO 42001 clauses and applicable Annex A controls, while focused audits might examine specific controls or recent process changes.

Organizations assign internal auditors who are independent of the activities being audited. Many organizations train existing personnel in auditing techniques and ISO 42001 requirements, while others engage external auditors for an independent perspective.

During audit execution, auditors review documented procedures, examine implementation evidence, and interview personnel responsible for governance activities. Auditors evaluate whether procedures reflect requirements, whether personnel understand responsibilities, and whether controls operate as documented.

Audit findings classify observed issues as major nonconformities, minor nonconformities, or improvement opportunities. Organizations document findings in formal reports specifying what was examined, what was observed, and what corrective actions are recommended.

Managing Surveillance Audits and Recertification

Surveillance audits occur annually during years two and three of the certification cycle, providing external verification that organizations maintain compliance. These audits typically last one to three days and focus primarily on operational effectiveness.

Certification bodies plan surveillance audits to examine areas that changed since the previous audit, review outstanding findings, and sample different portions of the governance system. Auditors evaluate how organizations addressed previous findings and whether governance processes continue operating effectively.

Organizations prepare for surveillance audits by reviewing previous audit findings, ensuring corrective actions are completed, organizing evidence demonstrating ongoing compliance, and updating personnel on governance responsibilities.

Successful surveillance audits result in certificate maintenance for another year. If audits identify major nonconformities, organizations receive correction deadlines and may face certificate suspension if issues aren’t resolved within specified timeframes.

Recertification audits occur at the end of each three-year cycle and mirror the scope and intensity of the initial certification audit, giving the organization a comprehensive evaluation equivalent to its original certification.

Successful recertification results in certificate renewal for another three-year period, while unsuccessful recertification requires correction of issues before renewal can occur.
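The cycle described above can be laid out year by year. This simplified sketch works in calendar years only, under the assumption that each audit falls in the anniversary year of the initial certification. Actual audit dates are set with the certification body.

```python
def certification_cycle(initial_year: int, cycles: int = 2):
    """List (year, event) pairs for consecutive three-year certification cycles.

    Each cycle opens with certification (initial or renewed); surveillance audits
    follow in years two and three; the next cycle opens with recertification.
    """
    events = []
    for cycle in range(cycles):
        cycle_start = initial_year + 3 * cycle
        first_event = "initial certification" if cycle == 0 else "recertification"
        events.append((cycle_start, first_event))
        events.append((cycle_start + 1, "surveillance audit"))
        events.append((cycle_start + 2, "surveillance audit"))
    return events
```

For an initial certification in 2024, this yields surveillance audits in 2025 and 2026 and recertification in 2027.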

Integrating ISO 42001 with Existing Management Systems

Source: Elevate Consult

Many organizations already operate established management systems such as ISO 27001 for information security or ISO 9001 for quality management. ISO 42001 implementation becomes more efficient when organizations build on these existing frameworks rather than creating separate governance structures.

Organizations can leverage existing management systems because ISO management system standards share common structural elements. ISO 27001, ISO 9001, and ISO 42001 all follow the same high-level structure (the Harmonized Structure, formerly known as Annex SL) with ten clauses, creating natural integration points.
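Because the clause numbering is shared, an organization can map its existing ISO 27001 processes directly onto ISO 42001 clauses. The sketch below lists a few shared clauses by their generic structure titles (ISO 42001 titles clause 5.2 "AI policy"). The process names in the usage are hypothetical, and the list is a sample, not a complete inventory of shared requirements.

```python
# A sample of clauses numbered identically in ISO/IEC 27001:2022 and ISO/IEC 42001:2023.
SHARED_CLAUSES = {
    "5.2": "Policy",
    "6.1": "Actions to address risks and opportunities",
    "7.5": "Documented information",
    "9.2": "Internal audit",
    "9.3": "Management review",
}

def integration_plan(existing_processes):
    """Split shared clauses into processes to extend and gaps still to build.

    existing_processes: dict of clause -> name of an ISO 27001 process already in place.
    Returns (reuse, build): reuse maps clause -> process to extend for AI governance;
    build lists shared clauses that have no existing process yet.
    """
    reuse = {c: p for c, p in existing_processes.items() if c in SHARED_CLAUSES}
    build = sorted(c for c in SHARED_CLAUSES if c not in existing_processes)
    return reuse, build
```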

Leveraging ISO 27001 Infrastructure

Information security management systems provide a strong foundation for ISO 42001 implementation. ISO 27001 and ISO 42001 overlap significantly in governance, including risk assessment methodologies, internal audit programs, management review processes, and documentation systems.

Organizations with ISO 27001 certifications already maintain risk registers that track identified risks, likelihood and impact assessments, and treatment strategies. These risk management processes can be expanded to include AI-specific risks such as algorithmic bias and fairness concerns.
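Extending an existing register can be as simple as adding a category field so that AI risks sit alongside security risks under the same scoring method. This sketch assumes a 1 to 5 likelihood and impact scale, a likelihood-times-impact score, and a treatment threshold of 12. All of these are hypothetical choices that each organization defines for itself.

```python
from dataclasses import dataclass

@dataclass
class Risk:
    risk_id: str
    description: str
    category: str      # e.g. "information security" or "AI" (hypothetical labels)
    likelihood: int    # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int        # assumed scale: 1 (negligible) to 5 (severe)
    treatment: str     # e.g. "mitigate", "transfer", "accept", "avoid"

    @property
    def score(self) -> int:
        # Simple multiplicative scoring: same method for every category.
        return self.likelihood * self.impact

def risks_needing_treatment(register, threshold=12):
    """Return risks scoring at or above the threshold, highest score first."""
    flagged = [r for r in register if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)
```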

Internal audit programs established for ISO 27001 can be extended to cover AI governance requirements. Auditors trained in management system auditing can receive additional training in ISO 42001 requirements, allowing the same team to assess both security and AI governance compliance.

Management review meetings that ISO 27001 requires can incorporate AI governance topics alongside security discussions. Senior leadership familiar with reviewing security metrics and incident reports can expand oversight to include AI system performance and bias monitoring results.

Creating Unified Governance Frameworks

Organizations maintaining multiple ISO certifications can create integrated governance structures that address all management system requirements through coordinated processes. A single governance committee can oversee information security, quality management, and AI governance simultaneously.

Policy integration represents a particularly effective approach. Organizations can develop master policies that address multiple standards simultaneously. A data governance policy can incorporate ISO 27001 data protection requirements and ISO 42001 AI data management requirements.

Risk management integration allows organizations to operate unified risk registers that evaluate threats across multiple dimensions. A single risk assessment process can examine information security risks, operational risks, and AI-specific risks using consistent methodology.

Training programs can address multiple compliance requirements simultaneously. Personnel training sessions can cover security awareness and AI governance requirements in integrated curricula that reduce training time while improving comprehension.

Optimizing Resources Across Standards

Resource optimization across multiple management systems creates significant efficiency opportunities. Personnel can be trained to support multiple systems simultaneously, reducing staffing requirements while building comprehensive governance expertise.

Audit resources can be consolidated by conducting integrated audits that assess multiple standards during single events. External audit firms often provide combined audit services that evaluate ISO 27001 and ISO 42001 compliance simultaneously.

Technology infrastructure can support multiple management systems through shared platforms. Document management systems, risk assessment tools, and training platforms can be configured to address multiple standards, reducing costs while improving integration.

Frequently Asked Questions About ISO 42001 Implementation

How long does ISO 42001 implementation take for organizations with existing governance systems?

Organizations with established management systems like ISO 27001 typically complete ISO 42001 implementation in six to twelve months. Organizations starting without governance frameworks usually require twelve to eighteen months for full implementation.

The implementation timeline depends on several factors, including organization size, the number of AI systems requiring governance, and existing governance maturity. Organizations with basic AI policies and risk management processes often finish faster than those building governance from scratch.

The timeline includes four main phases: foundation establishment taking two to four months, procedure development requiring two to three months, deployment lasting one to two months, and validation requiring one to two months.
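Summing the phase estimates shows how they relate to the overall figure. This sketch assumes the phases run back to back. Overlapping phases would shorten the total.

```python
PHASES = {
    "foundation establishment": (2, 4),  # (min months, max months)
    "procedure development": (2, 3),
    "deployment": (1, 2),
    "validation": (1, 2),
}

def timeline_range(phases):
    """Sum (min, max) month estimates for phases run sequentially."""
    low = sum(lo for lo, _ in phases.values())
    high = sum(hi for _, hi in phases.values())
    return low, high
```

Here `timeline_range(PHASES)` returns `(6, 11)`, which falls within the six to twelve months quoted above.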

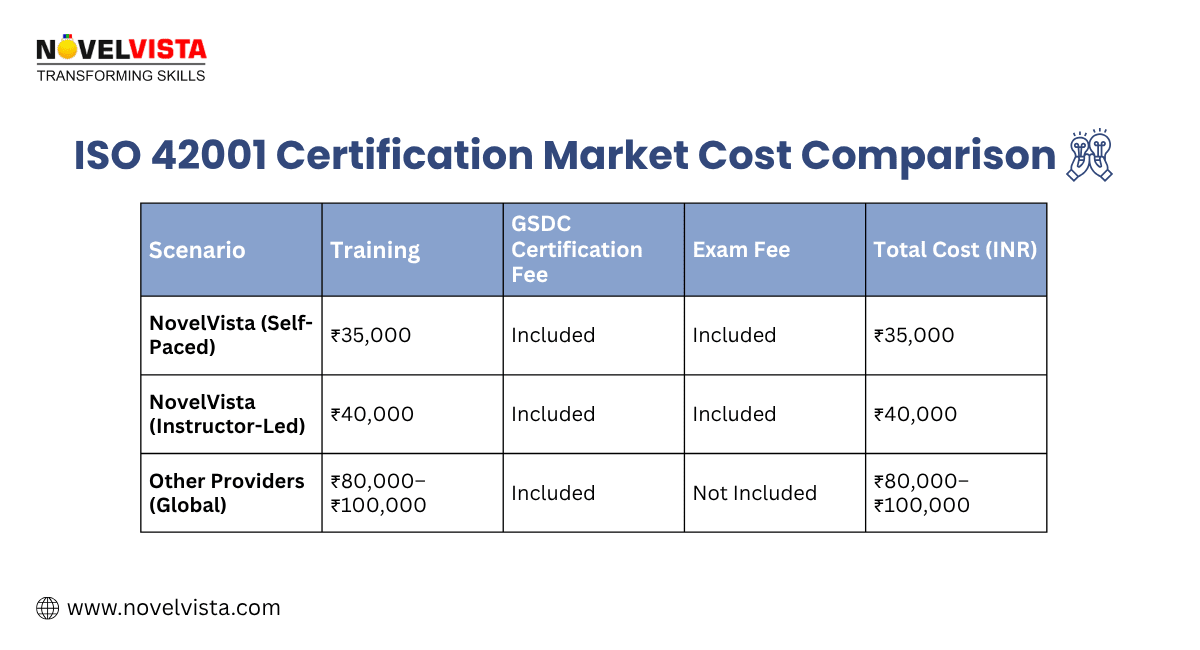

What specific costs are involved in ISO 42001 certification for mid-sized companies?

Source: NovelVista

Mid-sized organizations typically invest $15,000 to $50,000 for complete ISO 42001 implementation and certification. Cost components include external consultant fees ranging from $150 to $400 per hour, internal personnel time equivalent to one to two full-time employees for six to twelve months, and certification body audit fees between $8,000 and $15,000.
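A rough external budget can be derived from these ranges once the number of consultant hours is estimated. In this sketch, `consultant_hours` is a hypothetical planning input. Internal staff time, tooling, and training are excluded and would need to be estimated separately.

```python
def external_cost_range(consultant_hours, rate_range=(150, 400), audit_fee_range=(8_000, 15_000)):
    """Return (low, high) external spend: consultant fees plus certification audit fees."""
    low = consultant_hours * rate_range[0] + audit_fee_range[0]
    high = consultant_hours * rate_range[1] + audit_fee_range[1]
    return low, high
```

For example, an assumed 80 consultant hours gives `external_cost_range(80) == (20000, 47000)`.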

Additional costs include technology investments for governance tools, training program development, and ongoing compliance activities. Organizations with existing management systems typically incur lower costs because foundational processes already exist.

The investment can pay for itself through reduced regulatory risk, improved stakeholder trust, and competitive advantage in markets where customers demand ethical AI practices.

Which AI systems require AI Impact Assessments under ISO 42001?

AI Impact Assessments are required for high-risk AI systems that could significantly affect individuals, groups, or society. The standard doesn’t specify exact criteria but generally includes systems making decisions about people in areas like employment, credit, healthcare, education, or criminal justice.

Systems requiring assessment typically include those processing personal data for automated decision-making, systems affecting vulnerable populations, systems with potential for widespread societal impact, and systems operating in regulated industries.

Organizations establish their own criteria for determining which systems require assessment based on their risk appetite and regulatory environment. The assessment evaluates fairness, privacy, safety, transparency, and human autonomy impacts.
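An organization's criteria can be written down as an explicit screening rule. The sketch below encodes the triggers listed above as a hypothetical rule: a system needs an assessment if any trigger applies. The dictionary keys are assumed names, and a real rule set would reflect the organization's own risk appetite and regulatory environment.

```python
HIGH_IMPACT_DOMAINS = {"employment", "credit", "healthcare", "education", "criminal justice"}

def requires_impact_assessment(system):
    """Return the triggers that fire for a system (empty list = no assessment required).

    `system` is a dict with hypothetical keys: domain, automated_decisions_on_personal_data,
    affects_vulnerable_groups, widespread_societal_impact, regulated_industry.
    """
    triggers = []
    if system.get("domain") in HIGH_IMPACT_DOMAINS:
        triggers.append("decisions about people in a high-impact domain")
    if system.get("automated_decisions_on_personal_data"):
        triggers.append("automated decision-making on personal data")
    if system.get("affects_vulnerable_groups"):
        triggers.append("affects vulnerable populations")
    if system.get("widespread_societal_impact"):
        triggers.append("potential for widespread societal impact")
    if system.get("regulated_industry"):
        triggers.append("operates in a regulated industry")
    return triggers
```

Returning the list of triggers rather than a bare yes or no documents why each system was selected, which is useful evidence during audits.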

Can organizations implement ISO 42001 without hiring external consultants?

Organizations can implement ISO 42001 independently when they have personnel with management system experience and adequate project management resources. Organizations with existing ISO 27001 or ISO 9001 certifications often possess sufficient foundational knowledge.

Independent implementation requires personnel who can interpret requirements, develop policies and procedures, conduct risk assessments, and prepare for audits. Success depends on having sufficient time allocation and expertise rather than organization size.

Many organizations choose hybrid approaches, using consultants for specific activities like gap analysis or certification preparation while handling most implementation internally.

What happens during annual surveillance audits after achieving certification?

Surveillance audits occur during years two and three of the certification cycle to verify continued compliance. Auditors review changes to AI systems and governance processes since the previous audit, examine incident reports and management responses, and assess whether the management system continues operating effectively.

Surveillance audits typically last one to three days depending on organization size. Auditors focus on operational effectiveness, reviewing whether procedures function as designed and whether personnel follow established processes.

Auditors sample different controls from previous audits to verify ongoing implementation and examine evidence of continuous improvement activities. Successful audits result in certificate maintenance, while major nonconformities can lead to certificate suspension if not corrected promptly.

How does ISO 42001 apply to organizations using only cloud-based AI services?

Organizations using third-party AI services remain responsible for governance of those systems within their defined scope. They must assess risks from vendor systems, establish contractual governance requirements, and monitor vendor compliance with organizational standards.

Third-party governance includes evaluating vendor AI governance practices before system acquisition, requiring vendors to provide model testing and bias assessment documentation, and establishing contractual transparency requirements about model performance.
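Vendor evaluation can be tracked as a checklist of required evidence. The item names below are hypothetical labels for the documentation described above, and the check only confirms that each item was supplied, not that its content is adequate.

```python
REQUIRED_VENDOR_EVIDENCE = (
    "model testing documentation",
    "bias assessment documentation",
    "model performance disclosure",
)

def missing_vendor_evidence(provided):
    """Return required evidence items the vendor has not supplied, in checklist order."""
    provided = set(provided)
    return [item for item in REQUIRED_VENDOR_EVIDENCE if item not in provided]
```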

Organizations can’t transfer governance responsibility to vendors but must maintain oversight of all AI systems affecting their operations. This includes systems they purchase, lease, or access through cloud services.