ISO 42001 isn’t legally required by any government anywhere in the world as of late 2025. No country, state, or regulatory body has passed laws that specifically mandate ISO 42001 certification for organizations developing or deploying artificial intelligence systems. The standard remains officially voluntary despite growing market pressure for compliance.

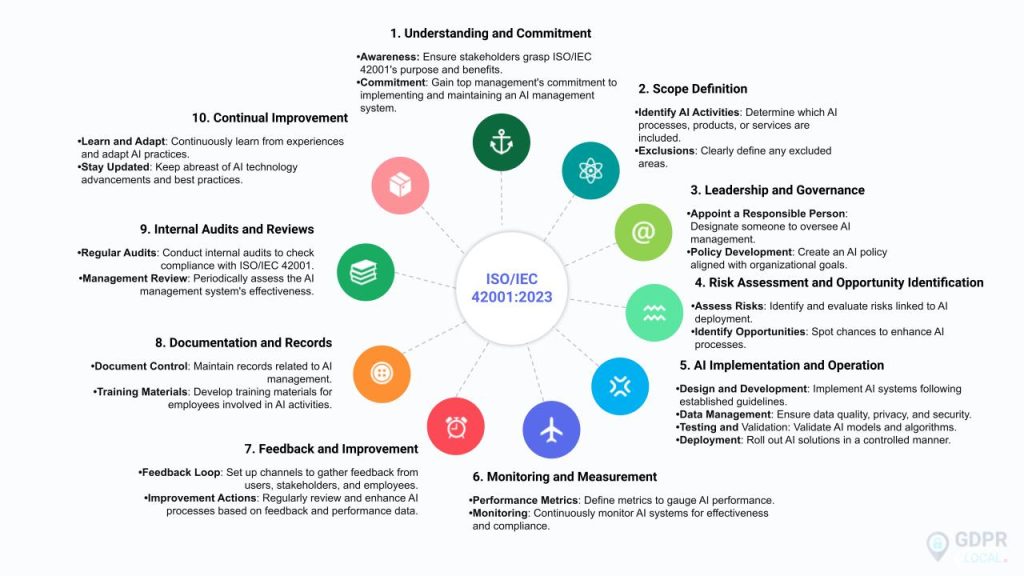

ISO 42001 (formally ISO/IEC 42001:2023) is an international standard that provides a framework for establishing, implementing, maintaining, and continually improving an artificial intelligence management system. The International Organization for Standardization and the International Electrotechnical Commission jointly published it in December 2023 to help organizations manage AI-related risks and opportunities through structured governance processes. Like other ISO standards, ISO 42001 is voluntary unless a law or contract makes it binding.

ISO standards are inherently voluntary by design. The International Organization for Standardization creates standards through international consensus to provide best practice frameworks that organizations can adopt if they choose. Individual governments or regulatory bodies may reference ISO standards in their regulations, but the standards themselves don’t carry legal force unless specifically incorporated into binding laws or regulations.

Source: Integrated Standards

Why Organizations Pursue ISO 42001 Without Legal Requirements

Organizations increasingly treat ISO 42001 as functionally mandatory despite its voluntary legal status. Major enterprise buyers now include ISO 42001 compliance requirements in procurement processes, effectively excluding vendors who can’t demonstrate alignment with the standard.

Insurance companies factor AI governance frameworks into coverage decisions and premium calculations. Investment firms and capital providers incorporate AI governance assessments into due diligence processes. Organizations seeking funding or pursuing mergers find that demonstrating structured AI management through frameworks like ISO 42001 improves their competitive position.

Contract terms increasingly specify ISO 42001 alignment as a baseline qualification for partnerships and service agreements. Organizations operating without demonstrable AI governance frameworks face exclusion from major business opportunities regardless of legal requirements in their jurisdiction.

How Regional Regulations Create Indirect Pressure

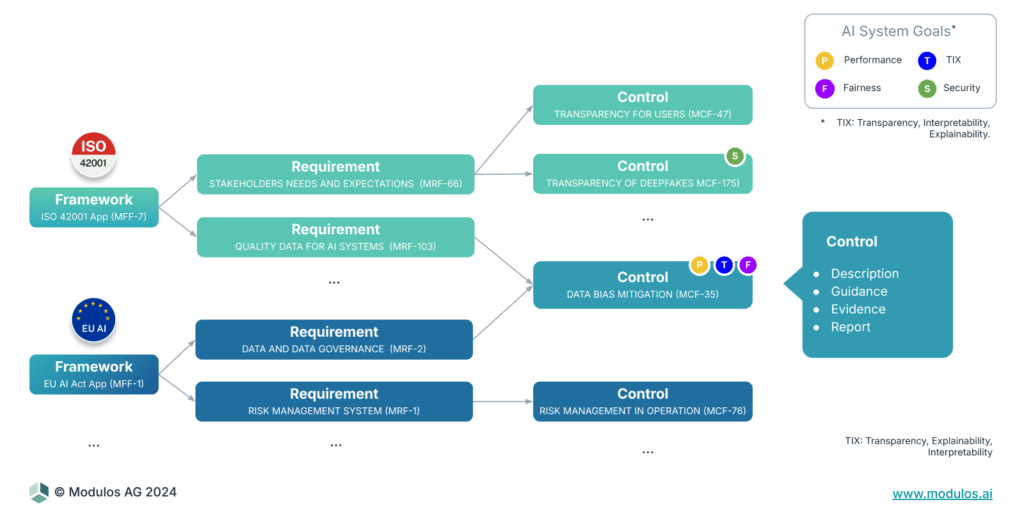

The European Union’s AI Act establishes mandatory requirements for AI management systems without naming specific standards. The regulation requires organizations to implement risk management, documentation, human oversight, and transparency measures for high-risk AI systems. ISO 42001 addresses these functional requirements, which makes it a common reference framework for organizations preparing for the EU AI Act, although following the standard does not by itself establish legal conformity.

Healthcare organizations face a proposed strengthening of the HIPAA Security Rule that would mandate multi-factor authentication and asset inventories alongside the risk analysis the rule already requires. Financial services firms face obligations under the EU’s Digital Operational Resilience Act and other cybersecurity regulations.

Organizations operating across multiple jurisdictions find that ISO 42001 offers a unified approach to meeting various regulatory requirements simultaneously. Rather than maintaining separate compliance systems for different regulations, organizations can implement a single framework that addresses multiple obligations.

Current Global Legal Status

No major regulatory framework explicitly mandates ISO 42001 certification as of November 2025. The EU AI Act entered into force in August 2024, with obligations phasing in over the following years, and it does not tie compliance to any single standard. The United States maintains a deregulatory approach to AI governance at the federal level, relying on existing sector-specific regulations.

Individual states like Colorado have enacted AI regulations with specific requirements, but these laws don’t reference ISO 42001 by name. The United Kingdom plans to implement comprehensive AI legislation by mid-2026 but hasn’t indicated whether such legislation will mandate specific standards.

Canada, Australia, and Singapore have developed AI governance frameworks that encourage responsible AI development without mandating particular certification schemes. This pattern reflects the global tendency to establish functional requirements while leaving implementation mechanisms to organizational choice.

Source: Data Crossroads

Is ISO 42001 Legally Mandatory Anywhere in the World?

No country or government has made ISO 42001 certification legally mandatory as of November 2025. Organizations can legally operate AI systems without ISO 42001 certification in every jurisdiction worldwide. No statute, regulation, or law explicitly requires organizations to achieve ISO 42001 compliance as a condition for developing, deploying, or operating artificial intelligence systems.

ISO 42001 differs from mandatory requirements in other industries. Financial institutions must comply with Basel III capital requirements as implemented in national law, and medical device manufacturers must obtain FDA clearance or approval before selling many products in the United States. These are legal requirements backed by regulatory enforcement. ISO 42001 remains a voluntary standard rather than enforceable law.

The International Organization for Standardization designed ISO 42001:2023 as a management system framework providing best practices rather than creating legal obligations. Organizations face no legal penalties for choosing not to pursue ISO 42001 certification, and regulatory agencies can’t force compliance with the standard through statutory authority.

What Market Forces Make ISO 42001 Functionally Required?

While no law mandates ISO 42001 certification anywhere in the world, business pressures have created “functional requirements” that make the standard practically mandatory for many organizations. These market forces operate faster than legislation and create consequences equivalent to legal mandates.

Several key pressure sources drive organizations toward ISO 42001 adoption:

- Procurement gatekeeping — Major buyers exclude vendors without AI governance certification from consideration

- Insurance requirements — Carriers demand AI risk management evidence before providing coverage

- Investment scrutiny — Capital providers assess AI governance maturity during due diligence

- Supply chain compliance — Enterprise customers require vendors to meet AI governance standards

- Competitive positioning — Organizations with certification gain advantages in competitive situations

Procurement and Contract Requirements

Government agencies and enterprise customers increasingly treat ISO 42001 compliance as an entry-level requirement in procurement processes. Procurement teams in banking, healthcare, energy, and technology sectors now routinely list AI governance certification in requests for proposals, excluding organizations that can’t demonstrate compliance before detailed evaluation begins.

Major cloud providers and software vendors recognize their business models depend on showing operational AI management systems to enterprise customers. Organizations pursuing government contracts find AI governance requirements embedded in statements of work and master service agreements.

When contract renewals or major deals require “evidence mapped to ISO 42001,” the voluntary status of the standard becomes irrelevant from a business perspective. Procurement departments in regulated industries treat ISO 42001 evidence as baseline qualification rather than a differentiator.

Insurance and Capital Market Pressure

Insurance companies seeking to limit exposure to AI-related risks demand evidence of AI governance structures before renewing policies or providing coverage. Underwriters treat ISO 42001 certification as a risk mitigation factor when determining premiums and coverage terms. Organizations lacking demonstrable AI governance frameworks face higher insurance costs or inability to obtain coverage.

Major investment firms incorporate AI governance maturity assessments into due diligence processes, with ISO 42001 compliance serving as a recognized benchmark. Environmental, social, and governance reviews conducted by investors frequently include AI governance assessments that reference ISO 42001 standards.

Organizations seeking to raise capital, go public, or attract strategic investment find that demonstrating compliance improves their competitive position and reduces investor questions. Capital providers evaluate operational resilience and risk management capabilities as part of funding decisions.

Which Industries Face ISO 42001 Compliance Pressure?

Several industries face significant pressure to adopt ISO 42001 compliance due to high AI risk profiles or existing regulatory oversight. While no law currently mandates ISO 42001 certification anywhere in the world, market forces create functional requirements that affect specific sectors more intensely than others.

Source: Northwest AI Consulting

Healthcare organizations face unique pressures around AI governance due to patient safety considerations and medical device regulations. The FDA published guidance in 2024 and draft guidance in January 2025 recommending that AI-enabled medical device manufacturers demonstrate systematic risk management, validation processes, and ongoing performance monitoring for AI components.

Financial institutions face complex AI governance requirements through multiple regulatory frameworks. The EU’s Digital Operational Resilience Act has applied since January 17, 2025, requiring financial entities to establish comprehensive operational resilience frameworks addressing information and communication technology risks.

Technology companies face market-driven pressure for ISO 42001 compliance rather than direct regulatory requirements. Enterprise software buyers routinely include ISO 42001 alignment as a baseline requirement in procurement processes, creating competitive pressure for certification. Vendors that offer AI for sales prospecting or AI in call centers find customers demanding evidence of a governance framework before signing contracts.

How Do Regional Regulations Affect ISO 42001 Requirements?

Different regions around the world are taking vastly different approaches to AI regulation, yet they’re all creating pressure for organizations to adopt ISO 42001 — even though no law explicitly requires it. Regional regulatory frameworks establish requirements for AI governance and risk management without naming specific standards, but ISO 42001 has emerged as the go-to framework for meeting these varied obligations efficiently.

European Union Comprehensive AI Framework

The EU AI Act represents the world’s most comprehensive legal framework for artificial intelligence, creating mandatory obligations that drive ISO 42001 adoption even without explicitly requiring it. The regulation uses a risk-based approach that categorizes AI systems into four levels: unacceptable risk, high-risk, limited risk, and minimal risk.

Organizations operating high-risk AI systems must establish quality management systems, conduct risk assessments, maintain detailed documentation, implement human oversight, and ensure transparency. ISO 42001 directly addresses these obligations through its systematic approach to AI lifecycle management and governance structures.
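
One way to make the tiered structure concrete is a small lookup from risk tier to obligations. The sketch below is illustrative only: `RiskTier`, `HIGH_RISK_OBLIGATIONS`, and `obligations_for` are hypothetical names, the high-risk list simply restates the obligations named in this article, and the other tiers are left empty because this article does not enumerate their obligations. It is not a legal classification tool.

```python
from enum import Enum


class RiskTier(Enum):
    """The four EU AI Act risk levels named in this article."""
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high-risk"
    LIMITED = "limited risk"
    MINIMAL = "minimal risk"


# Obligations for high-risk systems as summarized in this article,
# not a transcription of the legal text.
HIGH_RISK_OBLIGATIONS = (
    "quality management system",
    "risk assessment",
    "detailed documentation",
    "human oversight",
    "transparency",
)


def obligations_for(tier: RiskTier) -> tuple[str, ...]:
    """Return the article's obligation list for a tier (hypothetical helper)."""
    if tier is RiskTier.HIGH:
        return HIGH_RISK_OBLIGATIONS
    # The article does not list obligations for the other tiers, so this
    # sketch returns nothing for them. It does not model the fact that
    # unacceptable-risk practices are prohibited rather than regulated.
    return ()


if __name__ == "__main__":
    for item in obligations_for(RiskTier.HIGH):
        print(f"- {item}")
```

A real classification depends on the system's intended use and the Act's annexes, so a lookup like this is only useful once the tier has been decided by someone qualified to decide it.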

Source: Modulos AI

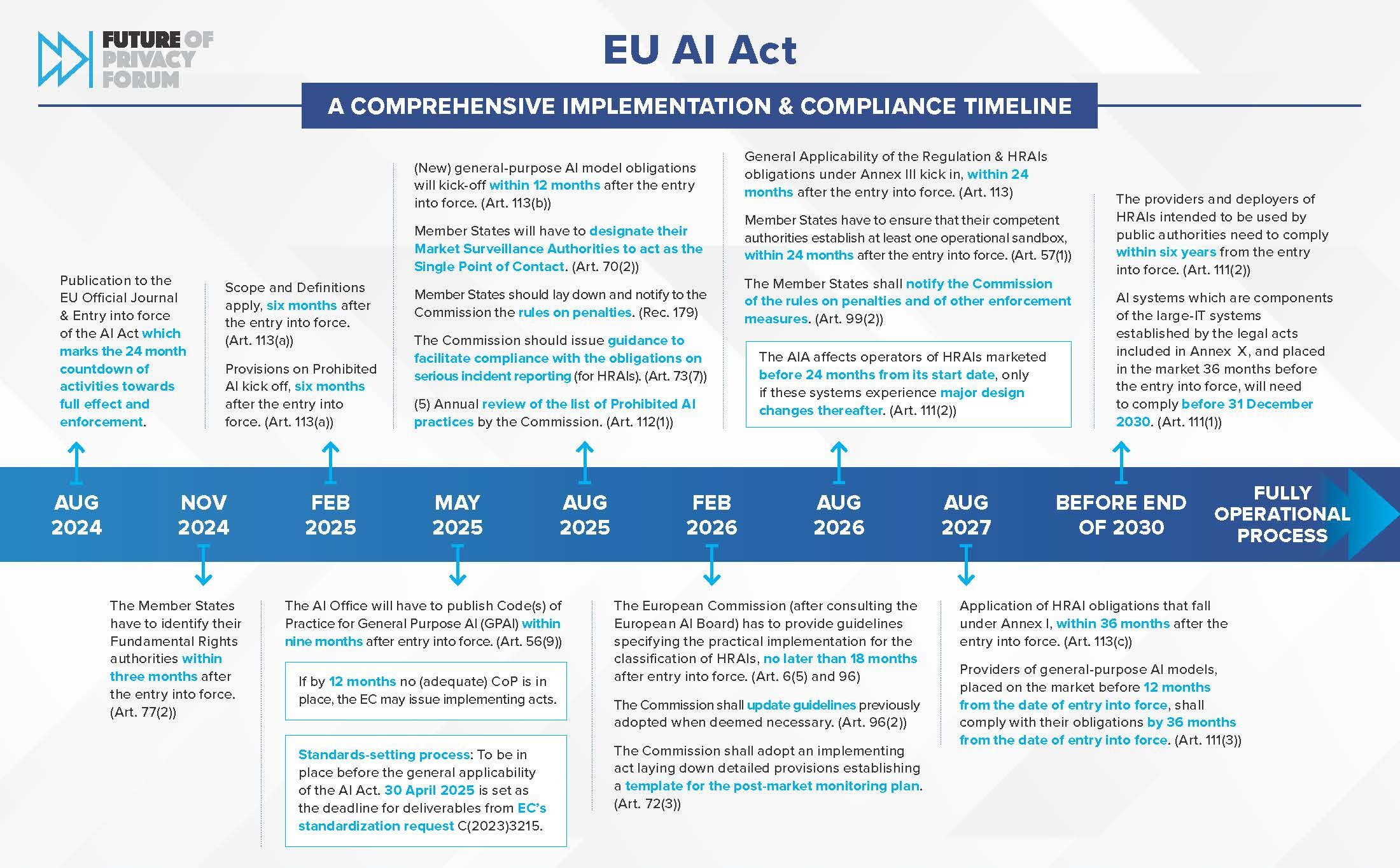

The enforcement timeline creates urgency for ISO 42001 adoption. General-purpose AI model requirements became legally binding in August 2025, while most high-risk AI system requirements apply from August 2026.

United States Market-Driven Compliance Approach

The United States has deliberately avoided comprehensive federal AI legislation, instead emphasizing innovation and market-driven governance. The Trump Administration’s 2025 AI Action Plan called for removing federal regulations that hinder AI development and directed agencies to identify and eliminate such barriers.

This deregulatory approach creates a patchwork of state and local requirements. Colorado established AI risk management requirements, while New York’s cybersecurity regulations include AI-specific guidance. California implemented AI content labeling requirements for large platforms.

The NIST AI Risk Management Framework provides voluntary guidance that aligns well with ISO 42001 principles. Organizations use NIST AI RMF alongside ISO 42001 to address federal expectations while meeting market requirements.

When Will ISO 42001 Become Legally Required?

ISO 42001 remains a voluntary standard worldwide as of late 2025, yet market forces have created de facto requirements that function like legal mandates. The progression toward formal legal mandates follows predictable patterns based on how previous standards evolved. Regulatory agencies typically observe market adoption before incorporating standards into formal frameworks.

EU Harmonized Standards Development Timeline

Article 40 of the EU AI Act governs harmonized standards. Once the European Commission publishes references to these standards in the Official Journal of the European Union, organizations that comply with them receive a legal presumption of conformity with the AI Act requirements the standards cover.

ISO 42001 currently lacks formal recognition as a harmonized standard for EU AI Act compliance. European standardization bodies are developing the harmonized standards at the Commission’s request, a process that typically requires 18 to 36 months from initial development to final publication.

Organizations following ISO 42001 frameworks position themselves well for eventual harmonized standards recognition. The standard addresses core EU AI Act requirements including risk management systems, documentation, transparency, and human oversight.

United States Federal AI Regulations

The United States currently follows a deregulatory approach that emphasizes innovation over comprehensive AI governance mandates. The Trump Administration’s January 2025 Executive Order “Removing Barriers to American Leadership in Artificial Intelligence” directed agencies to review and, where appropriate, suspend, revise, or rescind actions taken under the previous administration’s AI executive order.

Federal AI legislation remains unlikely in the near term given the current administration’s policy priorities. Congress has introduced various AI bills, but none have gained sufficient momentum to suggest imminent passage of comprehensive federal AI legislation.

State-level regulations create a different dynamic that may eventually drive federal action. Colorado’s AI Act, New York’s AI cybersecurity requirements, and California’s content labeling mandates create compliance complexity for national organizations.

What Are the Risks of Delaying ISO 42001 Implementation?

Organizations delaying ISO 42001 implementation face significant business consequences even though no law currently requires certification. The risks span operational, financial, and competitive dimensions that can impact revenue and market position.

Lost Business Opportunities and Contract Exclusions

Procurement departments in major industries now list ISO 42001 compliance as a baseline requirement in requests for proposals. Organizations without certification find themselves excluded before detailed evaluation stages begin.

Enterprise buyers in banking, healthcare, technology, and energy sectors routinely embed ISO 42001 requirements into master service agreements and vendor onboarding processes. When contracts require “evidence mapped to ISO 42001,” organizations lacking documentation can’t compete effectively.

Large cloud providers, software vendors, and AI service providers recognize their business models depend on demonstrating operational AI management systems to enterprise customers. Organizations pursuing government contracts or regulated industry partnerships face automatic disqualification without demonstrated AI governance frameworks. Companies offering retail AI consulting services find clients requiring ISO 42001 compliance before engagement.

Increased Insurance Costs and Coverage Limitations

Insurance underwriters treat ISO 42001 certification as a risk mitigation factor when determining premiums and coverage terms. Organizations lacking demonstrable AI governance frameworks face higher insurance costs or inability to obtain coverage.

Insurers assess AI governance maturity through structured evaluations that examine risk management processes, documentation standards, and operational controls. Organizations with ISO 42001 alignment demonstrate lower risk profiles compared to those with ad hoc or informal AI governance approaches.

Major insurers seeking to limit exposure to AI-related risks require evidence of structured governance before renewing policies or providing coverage. Because many organizations in regulated industries cannot operate without insurance, compliance that insurers require becomes functionally mandatory.

How to Build a Strategic AI Governance Implementation Plan

Building an AI governance program requires systematic planning across three phases: assessment, gap analysis, and implementation. Organizations typically start by conducting a comprehensive audit of their current AI systems, data practices, and existing governance structures.

The assessment phase identifies all AI applications currently in use, documents how decisions are made about AI development and deployment, and maps existing policies that relate to data protection, risk management, and technology oversight. During the gap analysis phase, organizations compare their current state against governance frameworks like ISO 42001 or regulatory requirements such as the EU AI Act.

Source: Future of Privacy Forum

The implementation phase begins with establishing an AI governance committee that includes representatives from legal, compliance, IT, and business units. Organizations typically start with high-risk AI systems and gradually expand governance coverage to all AI applications.
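
The three phases above can be sketched as a small script: build an inventory, compare each system against a target control list, and order the remediation work so high-risk systems come first. Everything named here is an assumption for illustration: the `AISystem` record, the `TARGET_CONTROLS` set (taken from governance themes this article mentions, not from the clauses of ISO 42001), and the sample systems.

```python
from dataclasses import dataclass, field

# Hypothetical target controls, drawn from themes in this article,
# not from the actual clause list of ISO 42001.
TARGET_CONTROLS = frozenset({
    "risk management",
    "documentation",
    "human oversight",
    "transparency",
})


@dataclass
class AISystem:
    """One entry in the assessment-phase inventory (illustrative fields)."""
    name: str
    high_risk: bool
    controls_in_place: set[str] = field(default_factory=set)


def find_gaps(system: AISystem) -> list[str]:
    """Gap analysis: target controls the system does not yet have."""
    return sorted(TARGET_CONTROLS - system.controls_in_place)


def remediation_order(inventory: list[AISystem]) -> list[tuple[str, list[str]]]:
    """Implementation order: high-risk systems first, then most gaps first."""
    with_gaps = [(s, find_gaps(s)) for s in inventory]
    open_items = [(s, gaps) for s, gaps in with_gaps if gaps]
    open_items.sort(key=lambda pair: (not pair[0].high_risk, -len(pair[1])))
    return [(s.name, gaps) for s, gaps in open_items]


if __name__ == "__main__":
    inventory = [
        AISystem("support chatbot", False, {"documentation", "transparency"}),
        AISystem("credit scoring model", True, {"risk management"}),
        AISystem("spam filter", False, set(TARGET_CONTROLS)),
    ]
    for name, gaps in remediation_order(inventory):
        print(f"{name}: missing {', '.join(gaps)}")
```

In practice the inventory would come from the assessment audit and the control list from whichever framework the governance committee adopts; the ordering rule is one reasonable reading of "start with high-risk systems," not a prescribed method.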

Successful AI governance implementation requires balancing multiple priorities including regulatory compliance, risk mitigation, and operational efficiency. Organizations often benefit from expert guidance when navigating complex regulatory landscapes and developing governance structures that align with business objectives. This includes understanding concepts like explainable AI and how to prevent AI bias as fundamental components of responsible AI governance.

Frequently Asked Questions About ISO 42001 Legal Requirements

How much does ISO 42001 certification typically cost for small businesses versus large enterprises?

ISO 42001 certification costs vary significantly based on organization size and AI system complexity. Small businesses typically spend $50,000 to $100,000, while large enterprises often invest $200,000 to $500,000 or more when including consultant fees, internal resources, documentation development, and certification body assessments. Organizations with existing governance frameworks typically pay less than those building AI management systems from scratch.

Can organizations implement ISO 42001 principles without pursuing formal third-party certification?

Organizations can implement ISO 42001 principles without pursuing formal third-party certification. Following the standard’s framework for AI risk management, governance, and documentation provides operational benefits and prepares organizations for potential future certification. However, only formal certification from accredited certification bodies provides the external validation that many customers, partners, and regulators recognize in procurement and compliance evaluations.

What happens if organizations implement ISO 42001 before any country makes it legally required?

Organizations implementing ISO 42001 before legal mandates gain competitive advantages in procurement processes and may face a shorter path to compliance if regulations eventually reference the standard. Early adopters position themselves advantageously regardless of whether explicit legal requirements materialize, as they demonstrate proactive risk management and operational maturity to stakeholders.

How long does complete ISO 42001 implementation typically take for enterprise organizations?

Enterprise organizations typically require 12 to 18 months for complete ISO 42001 implementation and certification. Organizations with existing information security management systems or AI governance frameworks may complete implementation in 8 to 12 months, while those starting without established governance structures often need 18 to 24 months. Implementation speed depends on organizational readiness, resource allocation, AI system complexity, and management commitment. Organizations should also consider developing AI upskilling strategies so their teams can implement and maintain ISO 42001 compliance effectively.