AI agent types are distinct categories of artificial intelligence systems that perceive their environment, make decisions, and take actions to achieve specific goals.

Understanding AI agent classifications helps organizations select appropriate systems for different tasks. The field has evolved from simple reactive systems to sophisticated learning agents that adapt over time. Modern classifications now include foundation model-based agents that leverage large language models for complex reasoning.

Different agent types excel in different scenarios. Simple agents work well for predictable tasks such as thermostat control or basic sensor-triggered responses. More complex agents handle uncertain environments where learning and adaptation are essential. Multi-agent systems coordinate multiple AI entities to solve problems beyond the capabilities of a single agent.

Source: IBM

What Are AI Agents

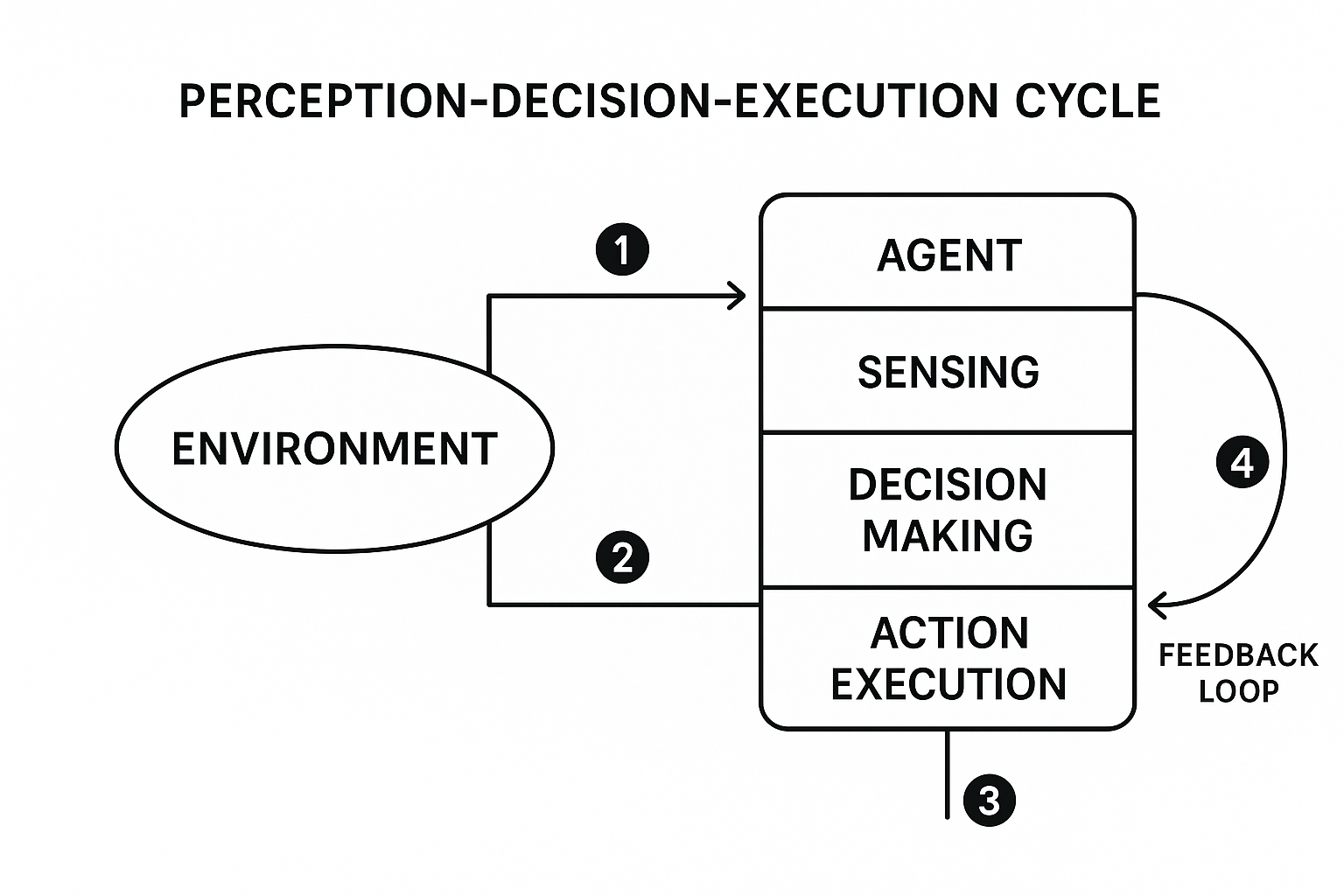

AI agents are software systems that perceive their environment, make decisions, and take actions without constant human direction. These systems operate through sensors that gather information, processors that analyze data and choose actions, and actuators that execute the chosen responses.

Unlike traditional automation that follows fixed rules, AI agents can adapt their behavior based on changing conditions. A simple thermostat reacts to temperature changes, while a smart home system learns your daily patterns and adjusts settings automatically.

Source: Medium

The basic architecture consists of three components working together: perception mechanisms collect environmental data, decision-making processes evaluate options, and action capabilities execute chosen responses. This cycle repeats continuously as the agent monitors results and adjusts future behavior.
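
The perceive, decide, act cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference design: the toy `room` environment, the 20-degree threshold, and the function names `run_agent`, `perceive`, `decide`, and `act` are all assumptions made for the example.

```python
from typing import Callable

def run_agent(perceive: Callable[[], float],
              decide: Callable[[float], str],
              act: Callable[[str], None],
              steps: int) -> list:
    """Run the perceive -> decide -> act cycle a fixed number of times."""
    history = []
    for _ in range(steps):
        percept = perceive()      # perception: read the environment
        action = decide(percept)  # decision: choose a response
        act(action)               # action: change the environment
        history.append(action)
    return history

# Hypothetical toy environment: a room whose temperature the agent can raise.
room = {"temp": 17.0}

def perceive() -> float:
    return room["temp"]

def decide(temp: float) -> str:
    # Assumed target of 20 degrees, chosen only for illustration.
    return "heat" if temp < 20.0 else "idle"

def act(action: str) -> None:
    if action == "heat":
        room["temp"] += 1.0

actions = run_agent(perceive, decide, act, steps=5)
print(actions, room["temp"])
```

Because each pass reads the environment again, the effect of earlier actions feeds into later decisions, which is the feedback loop the paragraph describes.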

Simple Reflex Agents

Simple reflex agents represent the most basic AI agent type. These systems follow straightforward if-then rules without memory of past events or ability to learn from experience. When sensors detect specific conditions, the agent immediately executes predetermined actions.

Source: GeeksforGeeks

How They Work

Simple reflex agents match current perceptions to predefined condition-action rules. The agent evaluates input against programmed conditions and executes corresponding actions when matches occur.

A traffic light system demonstrates this behavior clearly. When the timer reaches 60 seconds, the light changes from green to yellow. The system doesn’t consider previous traffic patterns or predict future conditions; it responds only to the current timer value.
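
A condition-action table for such a traffic light might look like the sketch below. Only the 60-second green phase comes from the example above. The yellow and red durations, the `Percept` type, and the action names are assumptions added to make the example complete.

```python
from typing import NamedTuple

class Percept(NamedTuple):
    light: str    # current colour of the light
    elapsed: int  # seconds since the light last changed

# Each rule pairs a condition on the current percept with an action.
# The 5 s yellow and 60 s red phases are illustrative assumptions.
RULES = [
    (lambda p: p.light == "green" and p.elapsed >= 60, "switch_to_yellow"),
    (lambda p: p.light == "yellow" and p.elapsed >= 5, "switch_to_red"),
    (lambda p: p.light == "red" and p.elapsed >= 60, "switch_to_green"),
]

def simple_reflex_agent(percept: Percept) -> str:
    """Return the action of the first matching rule; no memory is kept."""
    for condition, action in RULES:
        if condition(percept):
            return action
    return "hold"

print(simple_reflex_agent(Percept("green", 60)))
print(simple_reflex_agent(Percept("green", 59)))
```

The function takes only the current percept as input and stores nothing between calls, which is what distinguishes this agent type from the model-based agents discussed later.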

Real-World Applications

These agents excel in predictable environments where immediate responses matter most:

- Manufacturing sensors — Monitor production conditions and trigger immediate responses to temperature or pressure changes

- Security systems — Detect motion or unauthorized access and activate alarms instantly

- Home automation — Smart switches and automatic lights that respond to movement or time-based triggers

- Safety systems — Fire alarms and emergency shutdowns that activate when sensors detect dangerous conditions

Model-Based Reflex Agents

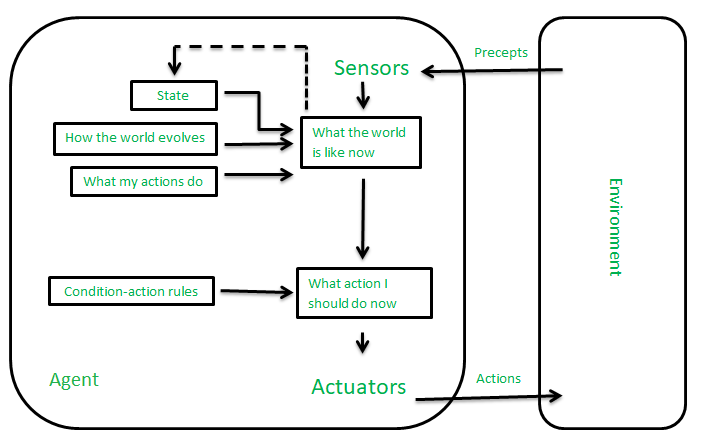

Model-based reflex agents advance beyond simple systems by maintaining an internal model of their environment. These agents track information that isn’t immediately visible, combining current perceptions with stored knowledge from past experiences.

Source: GeeksforGeeks

The internal model represents the agent’s understanding of how the world works and changes over time. This capability allows agents to make informed decisions even when complete environmental information isn’t available.

Internal State Management

These agents update their internal state continuously as they receive new information and perform actions. The agent combines sensor data with its existing model to maintain an accurate representation of current conditions.

A smart thermostat exemplifies this approach by remembering occupancy patterns and learning household routines. The system tracks when people typically arrive home, which rooms get used most, and how temperature preferences change throughout the day.

Handling Incomplete Information

Model-based agents fill gaps in perception by making reasonable inferences about unobservable conditions. When sensors can’t detect certain features, the agent relies on its internal model to estimate likely states.

Smart home lighting systems demonstrate this capability by adapting to daily routines and user preferences. These systems track movement patterns, learn typical usage times, and remember brightness preferences for different activities.
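
As a rough sketch of internal state, the lighting agent below keeps a belief about room occupancy that outlives the motion sensor's last reading. The `OccupancyModel` class, the five-minute hold period, and the action names are hypothetical choices for this illustration.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class OccupancyModel:
    """Internal state: the agent's belief about whether a room is occupied."""
    hold_seconds: float = 300.0             # assumed: no motion for 5 min means empty
    last_motion_at: Optional[float] = None  # time of the most recent motion reading

    def update(self, now: float, motion_detected: bool) -> None:
        # Fold the newest sensor reading into the stored state.
        if motion_detected:
            self.last_motion_at = now

    def believes_occupied(self, now: float) -> bool:
        # Infer occupancy even when the sensor currently reports nothing.
        if self.last_motion_at is None:
            return False
        return now - self.last_motion_at <= self.hold_seconds

def lighting_agent(model: OccupancyModel, now: float, motion_detected: bool) -> str:
    model.update(now, motion_detected)
    return "lights_on" if model.believes_occupied(now) else "lights_off"

model = OccupancyModel()
print(lighting_agent(model, now=0.0, motion_detected=True))
print(lighting_agent(model, now=120.0, motion_detected=False))
print(lighting_agent(model, now=301.0, motion_detected=False))
```

A simple reflex agent would switch the lights off at the second call because the sensor reports no motion. The model-based version keeps them on because its stored state suggests someone is probably still in the room.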

Goal-Based Agents

Goal-based agents make decisions by evaluating how different actions help achieve specific objectives. Unlike reactive systems that respond immediately to stimuli, these agents consider future consequences and plan action sequences to reach desired outcomes.

Source: HdM Stuttgart

These systems excel when the best immediate action isn’t obvious from current conditions alone. They can sacrifice short-term gains for long-term success and adapt strategies when circumstances change.

Planning and Problem Solving

Planning involves breaking complex goals into manageable action sequences. The agent analyzes the current state, identifies the target state, and determines the steps required to bridge the gap between them.

The process begins with problem formulation, where the agent defines the initial state, the goal state, and the available actions. The agent then searches through possible action sequences to find a path from the initial state to the goal state.

Search Algorithms

Search algorithms provide systematic methods for exploring solution spaces to reach goals:

- Breadth-first search — Explores all possible actions at each step before moving deeper, guaranteeing a path with the fewest steps (the cheapest path when every action costs the same) but requiring significant memory

- Depth-first search — Explores one path completely before trying alternatives, using less memory but potentially missing shorter solutions

- A-star search — Uses a heuristic estimate of the remaining cost to guide exploration toward promising areas, and still returns an optimal path when that heuristic never overestimates the true cost
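
To make the comparison concrete, here is a small sketch of breadth-first search and A-star on a grid. The grid layout, the unit step cost, and the Manhattan-distance heuristic are assumptions chosen for the example. `S` marks the initial state, `G` the goal state, and `#` a blocked cell.

```python
import heapq
from collections import deque

GRID = [
    "S.#.",
    ".##.",
    "...G",
]

def neighbors(cell):
    """Available actions: move one step up, down, left, or right onto a free cell."""
    r, c = cell
    result = []
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(GRID) and 0 <= nc < len(GRID[0]) and GRID[nr][nc] != "#":
            result.append((nr, nc))
    return result

def reconstruct(parent, goal):
    """Walk parent links back from the goal to rebuild the path."""
    path = [goal]
    while parent[path[-1]] is not None:
        path.append(parent[path[-1]])
    return path[::-1]

def bfs(start, goal):
    parent = {start: None}
    frontier = deque([start])  # FIFO queue: expand shallowest cells first
    while frontier:
        cell = frontier.popleft()
        if cell == goal:
            return reconstruct(parent, goal)
        for nxt in neighbors(cell):
            if nxt not in parent:
                parent[nxt] = cell
                frontier.append(nxt)
    return None

def a_star(start, goal):
    def h(cell):
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    parent = {start: None}
    cost = {start: 0}
    frontier = [(h(start), start)]  # priority queue ordered by cost so far + heuristic
    while frontier:
        _, cell = heapq.heappop(frontier)
        if cell == goal:
            return reconstruct(parent, goal)
        for nxt in neighbors(cell):
            new_cost = cost[cell] + 1
            if nxt not in cost or new_cost < cost[nxt]:
                cost[nxt] = new_cost
                parent[nxt] = cell
                heapq.heappush(frontier, (new_cost + h(nxt), nxt))
    return None

bfs_path = bfs((0, 0), (2, 3))
a_star_path = a_star((0, 0), (2, 3))
print(bfs_path)
print(a_star_path)
```

On this grid both searches find the same route along the left and bottom edges. The two functions differ only in how the frontier is ordered, which is where the heuristic has its effect.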

Navigation Applications

Self-driving cars demonstrate goal-based behavior through route planning and obstacle avoidance. When passengers input destinations, navigation systems formulate goals and plan optimal routes considering distance, travel time, and traffic conditions.

During navigation, vehicles encounter dynamic obstacles requiring real-time adjustments while maintaining progress toward destinations. The system replans routes when original paths become blocked while ensuring safety constraints are met.

Utility-Based Agents

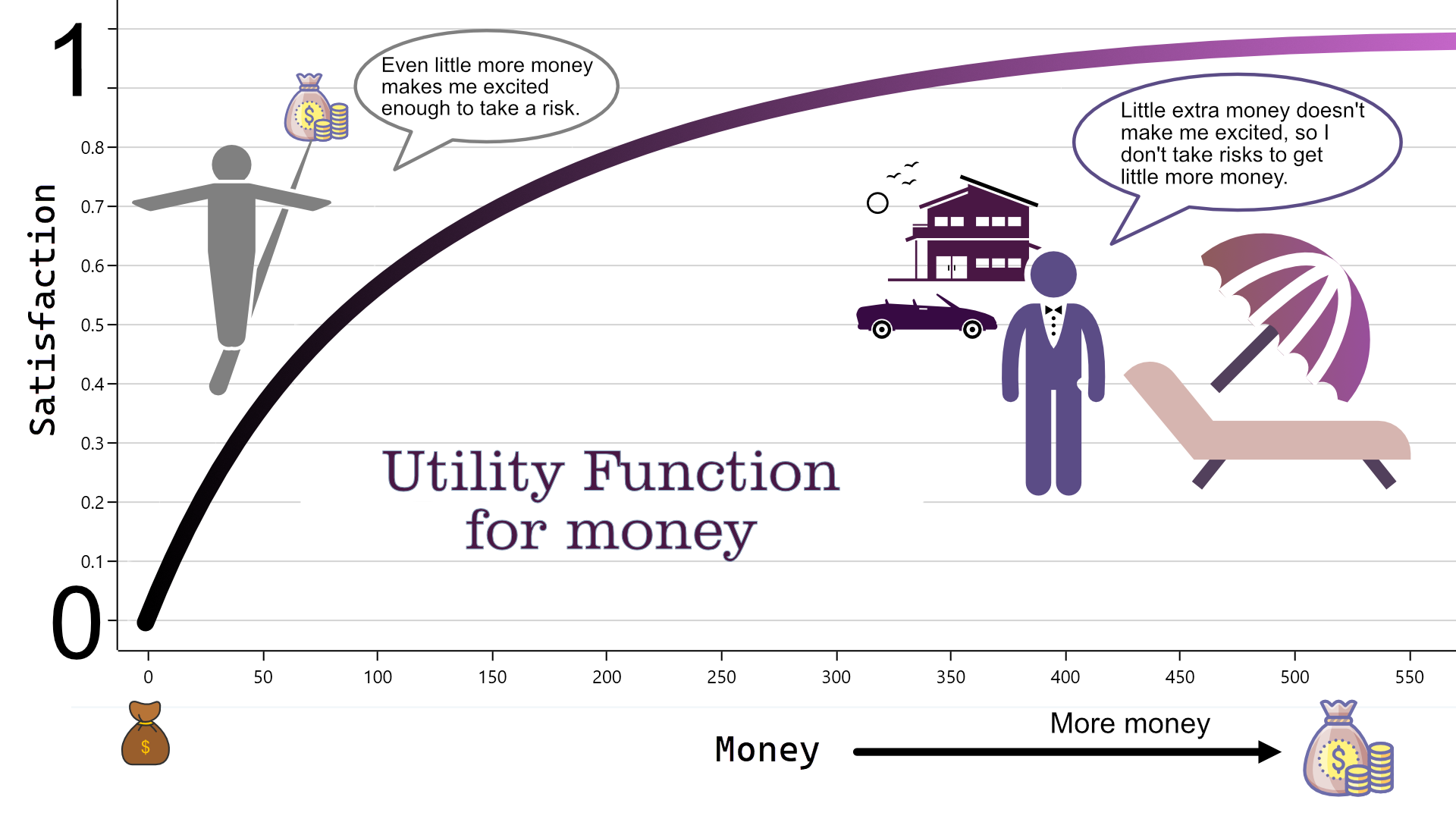

Utility-based agents advance beyond simple goal achievement by introducing quantitative measures of desirability for different outcomes. These systems use utility functions that assign numerical values to various world states, enabling rational decision-making with competing objectives.

Source: SpiceLogic

A utility function maps each possible outcome to a number representing how desirable that state is to the agent. This approach enables optimization across multiple criteria rather than binary goal achievement.

Decision Making Under Uncertainty

Utility-based agents handle uncertainty by calculating expected utility across possible outcomes. For each action, the agent multiplies the probability of each possible outcome by that outcome's utility value, sums these products to get the action's expected utility, and then chooses the action with the highest result.
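
The calculation can be written out directly. In this sketch the two actions, their outcome probabilities, and their utility values are invented numbers used only to show the arithmetic.

```python
def expected_utility(outcomes):
    """Sum of probability * utility over an action's possible outcomes."""
    return sum(probability * utility for probability, utility in outcomes)

# Hypothetical actions, each with (probability, utility) pairs that sum to 1.
ACTIONS = {
    "place_trade": [(0.6, 50.0), (0.4, -40.0)],
    "hold": [(1.0, 0.0)],
}

scores = {name: expected_utility(outcomes) for name, outcomes in ACTIONS.items()}
best_action = max(scores, key=scores.get)
print(scores, best_action)
```

Here `place_trade` scores 0.6 × 50 + 0.4 × (−40) = 14, which beats the certain utility of 0 for `hold`, so the agent selects the trade even though it can lose.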

Multi-criteria decision making involves evaluating options across several dimensions simultaneously. A smart home system might consider energy efficiency, comfort levels, and cost when adjusting temperature settings.
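
One simple way to combine several criteria is a weighted sum. It is not the only possible form of utility function. The weights, the candidate setpoints, and the per-criterion scores below are assumptions for illustration, with every score scaled so that higher is better.

```python
# Assumed relative importance of each criterion; the weights sum to 1.
WEIGHTS = {"comfort": 0.5, "energy_efficiency": 0.3, "cost": 0.2}

# Hypothetical scores in [0, 1] for three temperature setpoints.
OPTIONS = {
    "20C": {"comfort": 0.6, "energy_efficiency": 0.9, "cost": 0.9},
    "22C": {"comfort": 1.0, "energy_efficiency": 0.6, "cost": 0.6},
    "24C": {"comfort": 0.7, "energy_efficiency": 0.3, "cost": 0.3},
}

def utility(scores):
    """Weighted sum of the per-criterion scores."""
    return sum(WEIGHTS[criterion] * scores[criterion] for criterion in WEIGHTS)

ranked = sorted(OPTIONS, key=lambda name: utility(OPTIONS[name]), reverse=True)
print(ranked)
```

Changing the weights changes the ranking, which is how the same agent can be tuned for a household that values savings over comfort.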

Optimization Applications

Financial trading systems demonstrate utility-based optimization through risk-reward calculations and portfolio management. Algorithmic trading agents evaluate potential trades considering expected returns, risk levels, transaction costs, and market impact.

Portfolio balancing represents utility maximization where agents optimize asset allocation across investments. The utility function incorporates expected returns, volatility measures, asset correlations, and risk tolerance parameters.
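
As one possible form of such a utility function, the sketch below uses the common mean-variance form: expected portfolio return minus a risk penalty proportional to portfolio variance. The article does not specify a formula, so this choice is an assumption, as are the two-asset figures and the coarse grid of candidate allocations.

```python
def portfolio_utility(weights, expected_returns, covariance, risk_aversion):
    """Mean-variance utility: expected return minus a penalty on variance."""
    mean = sum(w * r for w, r in zip(weights, expected_returns))
    variance = sum(
        weights[i] * weights[j] * covariance[i][j]
        for i in range(len(weights))
        for j in range(len(weights))
    )
    return mean - 0.5 * risk_aversion * variance

# Hypothetical inputs: a volatile asset and a stable one.
EXPECTED_RETURNS = [0.08, 0.03]
COVARIANCE = [[0.04, 0.002],
              [0.002, 0.0025]]  # off-diagonal terms capture asset correlation
RISK_AVERSION = 4.0             # stands in for the risk tolerance parameter

# Candidate allocations in 10% steps; the weights always sum to 1.
candidates = [(i / 10, 1 - i / 10) for i in range(11)]
best = max(
    candidates,
    key=lambda w: portfolio_utility(w, EXPECTED_RETURNS, COVARIANCE, RISK_AVERSION),
)
print(best)
```

With these numbers the highest-utility allocation puts about 30% in the volatile asset. A lower `RISK_AVERSION` value would shift more weight toward it.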

Learning Agents

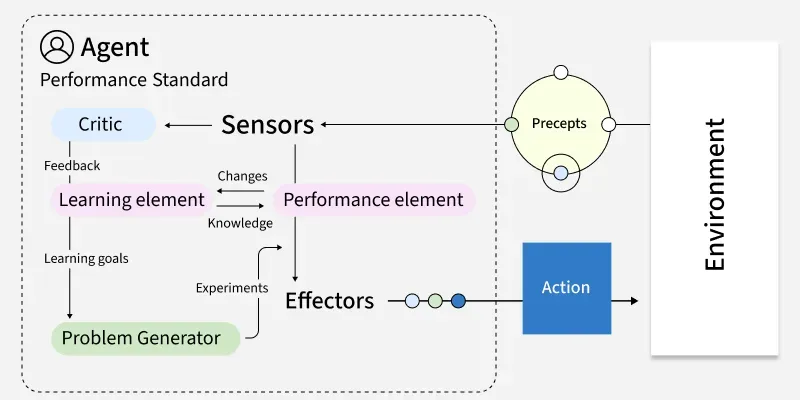

Learning agents are the most adaptable of the agent types described here because they continuously improve performance through experience and feedback. Unlike static systems, these agents adapt their behavior based on interaction outcomes and environmental changes.

Source: GeeksforGeeks

The learning process creates a cycle where agents take actions, receive feedback, and adjust approaches for future decisions. This enables systems that become more effective over time rather than remaining static.

Core Components

Learning agents consist of four essential elements:

- Performance element — Executes actions based on current knowledge and learned behaviors

- Learning element — Modifies knowledge base by incorporating feedback and recognizing patterns

- Critic — Evaluates performance by measuring outcomes against a performance standard and passes that feedback to the learning element

- Problem generator — Creates learning opportunities by suggesting scenarios to explore
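
The four elements can be mapped onto a very small program. The sketch below is deliberately simplified and fully deterministic: the content categories, the fixed ratings that stand in for user feedback, the 0.5 performance standard, and the rule of exploring on every third step are all assumptions made for illustration.

```python
class LearningAgent:
    def __init__(self, actions, standard=0.5, learning_rate=0.5, explore_every=3):
        self.values = {a: 0.0 for a in actions}  # learned estimate per action
        self.counts = {a: 0 for a in actions}    # how often each action was tried
        self.standard = standard
        self.learning_rate = learning_rate
        self.explore_every = explore_every
        self.steps = 0

    def performance_element(self):
        """Pick the action currently believed to be best."""
        return max(self.values, key=self.values.get)

    def problem_generator(self):
        """Suggest the least-tried action so the agent gathers new experience."""
        return min(self.counts, key=self.counts.get)

    def critic(self, outcome):
        """Score an outcome relative to the performance standard."""
        return outcome - self.standard

    def learning_element(self, action, feedback):
        """Move the stored estimate for this action toward the feedback."""
        self.counts[action] += 1
        self.values[action] += self.learning_rate * (feedback - self.values[action])

    def choose(self):
        self.steps += 1
        if self.steps % self.explore_every == 0:
            return self.problem_generator()
        return self.performance_element()

# Hypothetical environment: the rating a user would give each content category.
RATINGS = {"news": 0.2, "sci_fi": 0.8, "sports": 0.5}

agent = LearningAgent(RATINGS)
for _ in range(30):
    action = agent.choose()
    feedback = agent.critic(RATINGS[action])
    agent.learning_element(action, feedback)

print(agent.performance_element(), agent.counts)
```

The agent starts with no preference, tries each category at least once because of the problem generator, and then mostly recommends the category with the best feedback.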

Adaptive Applications

Recommendation systems demonstrate learning agent behavior by tracking user preferences and improving suggestions over time. Streaming services learn viewing patterns, seasonal preferences, and mood-based content choices to suggest increasingly relevant entertainment.

Fraud detection systems adapt to new threat patterns by analyzing transaction behaviors and identifying suspicious activities. These systems learn evolving criminal tactics while trying to avoid blocking legitimate purchases, continuously refining detection accuracy.

Multi-Agent Systems

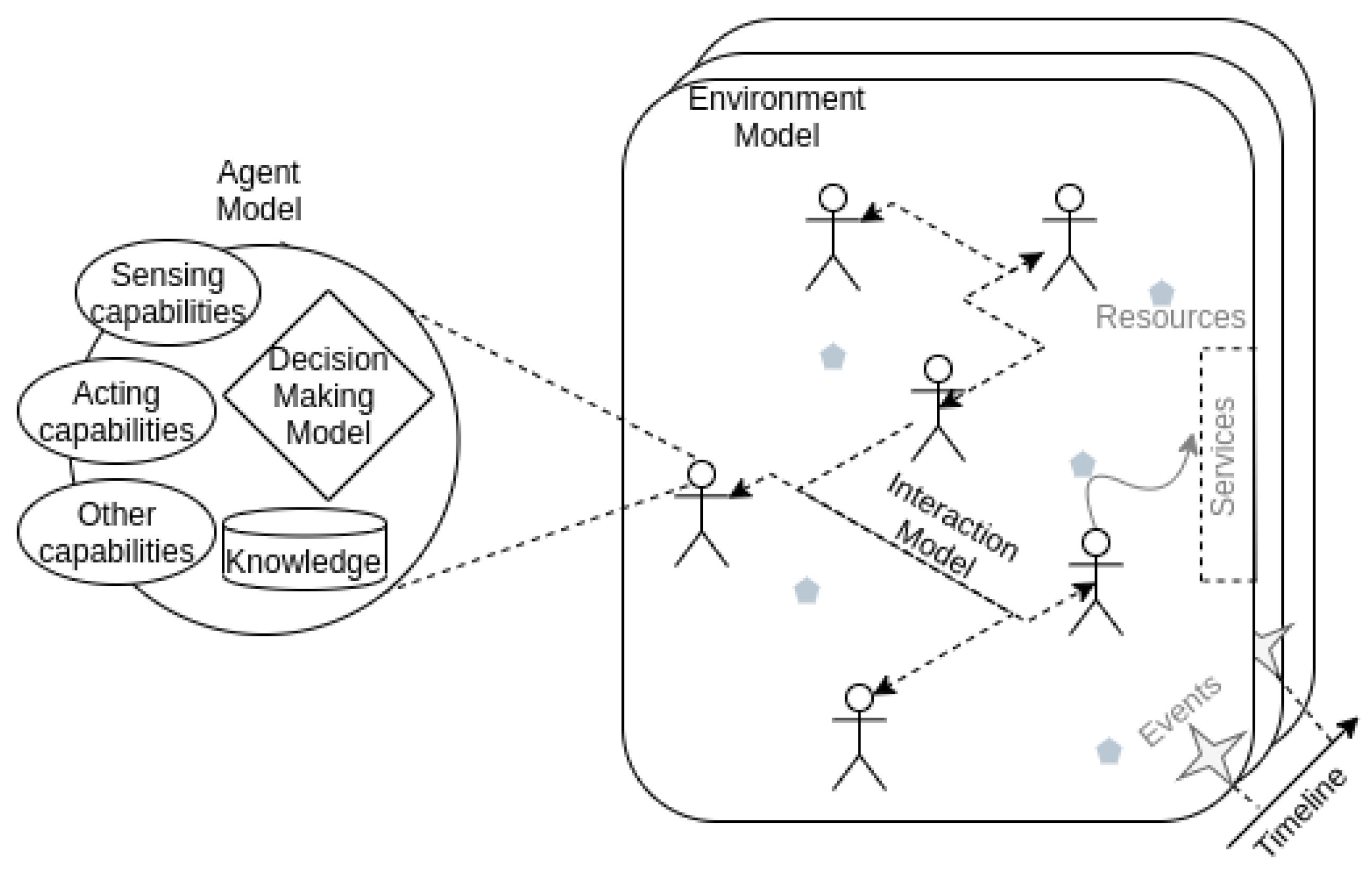

Multi-agent systems consist of multiple AI agents working together in shared environments. These systems involve autonomous entities that can perceive surroundings, make decisions, and take actions while interacting with other agents.

Source: MDPI

Each agent operates independently but contributes to solving larger problems that individual agents can’t handle alone. Interactions between agents create complex behaviors that emerge from individual actions and communications.

Coordination Mechanisms

Agent coordination requires structured approaches for managing communication and task distribution. Communication protocols establish rules and formats that agents use to exchange information and share progress updates.

Task allocation strategies determine how work gets distributed among available agents based on capabilities, current workload, and system objectives. Centralized coordination employs master agents that manage distribution, while decentralized approaches use peer-to-peer communication.
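
A centralized allocator can be sketched as a single function that assigns each task to the capable agent with the lightest current workload. This greedy rule is only one possible strategy. The `Worker` class, the agent names, the skills, and the effort values are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Worker:
    name: str
    skills: set
    load: int = 0                             # total effort assigned so far
    tasks: list = field(default_factory=list)

def allocate(tasks, workers):
    """Assign each (task, required_skill, effort) to the least-loaded capable worker."""
    assignment = {}
    for task, skill, effort in tasks:
        capable = [w for w in workers if skill in w.skills]
        if not capable:
            assignment[task] = None           # no agent can handle this task
            continue
        chosen = min(capable, key=lambda w: w.load)
        chosen.load += effort
        chosen.tasks.append(task)
        assignment[task] = chosen.name
    return assignment

workers = [
    Worker("agent_a", {"procurement", "inventory"}),
    Worker("agent_b", {"inventory", "logistics"}),
]
tasks = [
    ("reorder_parts", "procurement", 3),
    ("count_stock", "inventory", 2),
    ("book_carrier", "logistics", 1),
    ("audit_stock", "inventory", 2),
    ("customs_filing", "customs", 1),
]
assignment = allocate(tasks, workers)
print(assignment)
```

In a decentralized design the same decision would come out of messages exchanged between the agents themselves, with no single function that sees every workload.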

Enterprise Applications

Supply chain automation demonstrates multi-agent coordination across organizational boundaries. Procurement agents manage vendor relationships, inventory agents track stock levels, and logistics agents coordinate shipping and delivery.

Project management systems employ multiple agents handling different aspects including resource scheduling, progress tracking, deadline monitoring, and stakeholder communication. Each agent specializes in specific tasks while maintaining process continuity.

Foundation Model-Based Agents

Foundation model-based agents represent a new generation built on large language models like GPT and Claude. These agents use pre-trained neural networks as reasoning engines, enabling sophisticated natural language processing and complex problem-solving.

Source: LinkedIn

Unlike rule-based systems, foundation model agents leverage emergent abilities from training on vast text datasets. They can understand context, engage in multi-step reasoning, and adapt responses based on specific situations.

Large Language Model Capabilities

LLM agents excel at conversational AI and natural language understanding tasks. They process text input, interpret meaning and context, then generate appropriate responses based on training and conversation history.

Prompt-based behavior modification allows developers to shape agent responses without retraining underlying models. Crafting specific instructions or examples in initial prompts guides agents to adopt particular personas or focus on certain response types.

Tool Integration

Tool-augmented AI systems extend language model capabilities by integrating external tools and APIs. These agents can access databases, search engines, calculators, and other applications to gather information beyond text generation.

Function calling enables agents to invoke specific tools during reasoning processes. Agents analyze user requests, determine necessary tools, formulate appropriate function calls with correct parameters, and incorporate results into responses.
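
The dispatch side of function calling can be illustrated without any particular model provider. In the sketch below the JSON string stands in for a tool request produced by a language model. The request format, the tool names, and the in-memory inventory are assumptions made for illustration and are not the API of any specific vendor.

```python
import json

def add(a: float, b: float) -> float:
    return a + b

def lookup_stock(sku: str) -> int:
    # Hypothetical data source standing in for a real database or API.
    inventory = {"A-100": 12, "B-200": 0}
    return inventory.get(sku, 0)

# Registry of tools the agent is allowed to invoke.
TOOLS = {"add": add, "lookup_stock": lookup_stock}

def dispatch(tool_call_json: str) -> str:
    """Validate one tool request, run it, and return the result as JSON."""
    call = json.loads(tool_call_json)
    name = call.get("name")
    arguments = call.get("arguments", {})
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        result = TOOLS[name](**arguments)
    except TypeError as exc:  # missing or unexpected parameters
        return json.dumps({"error": str(exc)})
    return json.dumps({"result": result})

# Stand-in for the structured output a model might emit for
# "How many units of A-100 are in stock?"
model_output = '{"name": "lookup_stock", "arguments": {"sku": "A-100"}}'
print(dispatch(model_output))
```

The returned JSON would then be passed back to the model so it can incorporate the result into its response. Returning errors as data instead of raising them gives the model a chance to correct a malformed call.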

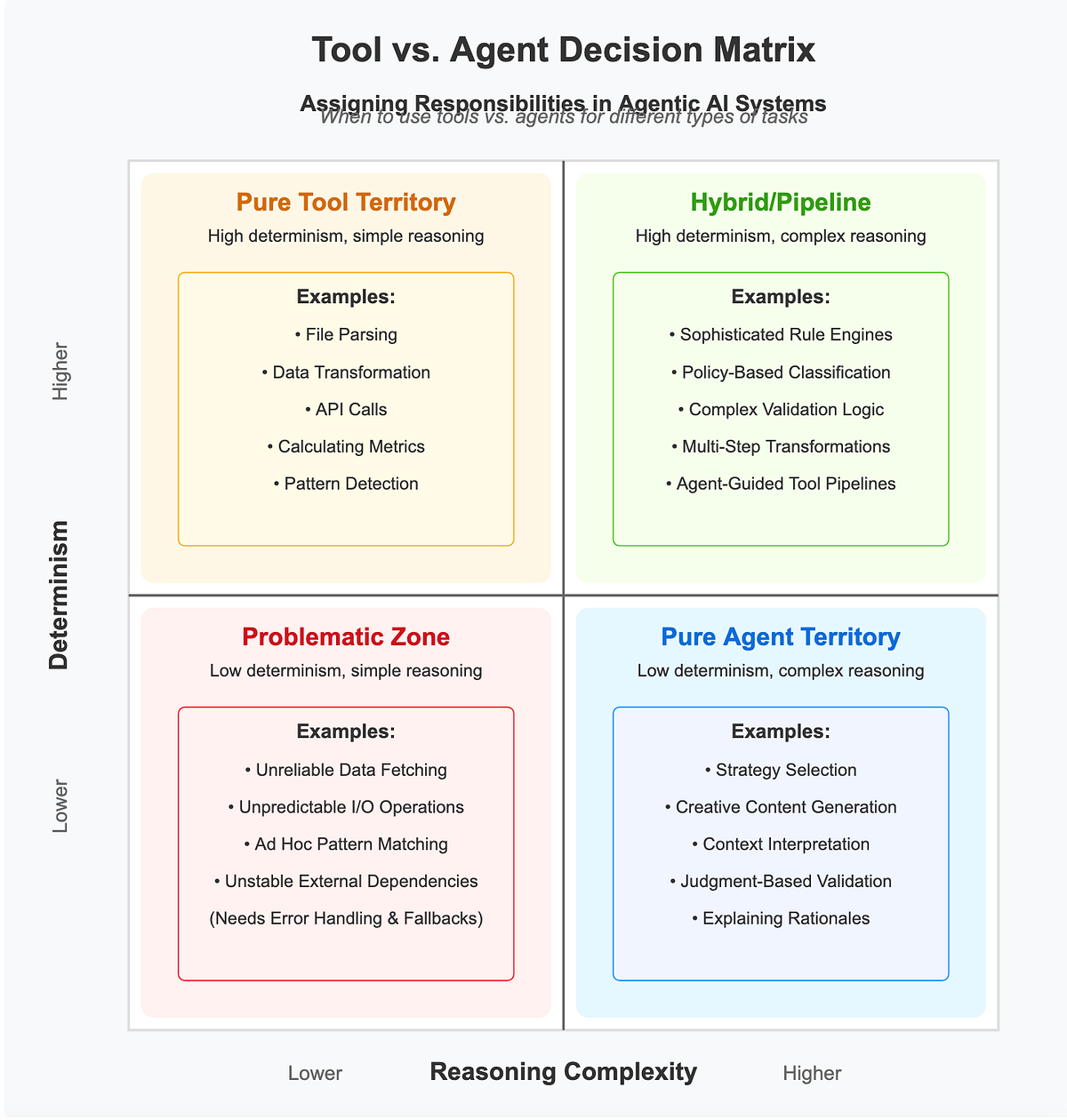

Choosing the Right AI Agent Type

Selecting an appropriate AI agent architecture depends on matching requirements with agent capabilities. Each type offers different strengths that make it suitable for specific use cases and organizational needs.

Source: Medium

Complexity Considerations

Simple reflex agents provide fast, predictable responses with minimal resource requirements. These systems work well for straightforward automation where immediate reactions matter more than sophisticated reasoning.

Learning agents offer superior long-term performance but require substantial computational resources and training time. Initial performance may be poor while systems learn, but they often exceed static alternatives over time.

Implementation Factors

Resource requirements vary dramatically across agent types. Simple agents need minimal processing power and memory, while complex systems may require gigabytes of memory and specialized hardware for training and operation.

Integration complexity depends on existing system architecture and agent sophistication. Simple, narrowly scoped agents are usually straightforward to connect to existing APIs, while sophisticated agents may require custom integration layers and specialized data management solutions.

Organizations implementing AI agents should consider starting with simpler systems for well-defined tasks while exploring more sophisticated approaches for complex, adaptive requirements. AI consulting services can help evaluate which agent types align best with specific business objectives and technical constraints.

Frequently Asked Questions

What’s the difference between reactive and deliberative AI agents?

Reactive agents respond immediately to environmental stimuli using predefined rules, like a thermostat that turns on heating when temperature drops below a set point. These systems process inputs quickly but can’t handle unexpected situations or learn from experience.

Deliberative agents analyze situations and plan responses before acting. They consider multiple factors and future consequences, which takes more processing time but produces better-informed decisions. Self-driving cars use deliberative planning to navigate complex traffic situations safely.

How do learning agents improve their performance over time?

Learning agents modify their behavior based on feedback from previous actions and outcomes. They track which decisions led to successful results and adjust their approach for similar future situations.

For example, a recommendation system observes which movies users actually watch after suggestions, how long they watch, and their ratings. The agent identifies patterns like “users who enjoy science fiction often like space documentaries” and incorporates these insights into future recommendations.

Which AI agent type works best for customer service automation?

Customer service typically benefits from a combination of agent types working together. Simple reflex agents handle frequently asked questions using predefined responses, while model-based agents maintain conversation context across multiple interactions.

Learning agents improve response accuracy by analyzing customer interactions and satisfaction scores over time. Multi-agent systems coordinate between different support channels like email, chat, and phone to provide consistent service experiences.

What computational resources do different agent types require?

Simple reflex agents can run on basic microcontrollers with kilobytes of memory since they execute straightforward condition-action rules. Model-based agents need additional memory to maintain their internal state representations, typically hundreds of kilobytes to several megabytes.

Learning agents have the highest requirements, potentially needing gigabytes of memory for complex models and substantial processing power for training. Foundation model-based agents may require specialized hardware like GPUs and significant cloud computing resources for optimal performance.

How do multi-agent systems coordinate activities between different agents?

Multi-agent coordination happens through communication protocols that establish rules for information exchange and task allocation strategies that distribute work based on agent capabilities and system objectives.

Centralized coordination uses a master agent that receives information from all agents and directs their activities, providing clear authority but creating potential bottlenecks. Decentralized approaches allow agents to negotiate directly with each other, offering greater flexibility but requiring more sophisticated coordination mechanisms.