AI compliance refers to the systematic approach organizations use to ensure their artificial intelligence systems operate within established legal, ethical, and regulatory boundaries.

Organizations across industries now face mounting pressure to implement AI systems responsibly. Recent high-profile incidents involving biased hiring algorithms and discriminatory loan approval systems have highlighted the real-world consequences of unchecked AI deployment. These failures have cost companies millions in legal settlements and damaged public trust.

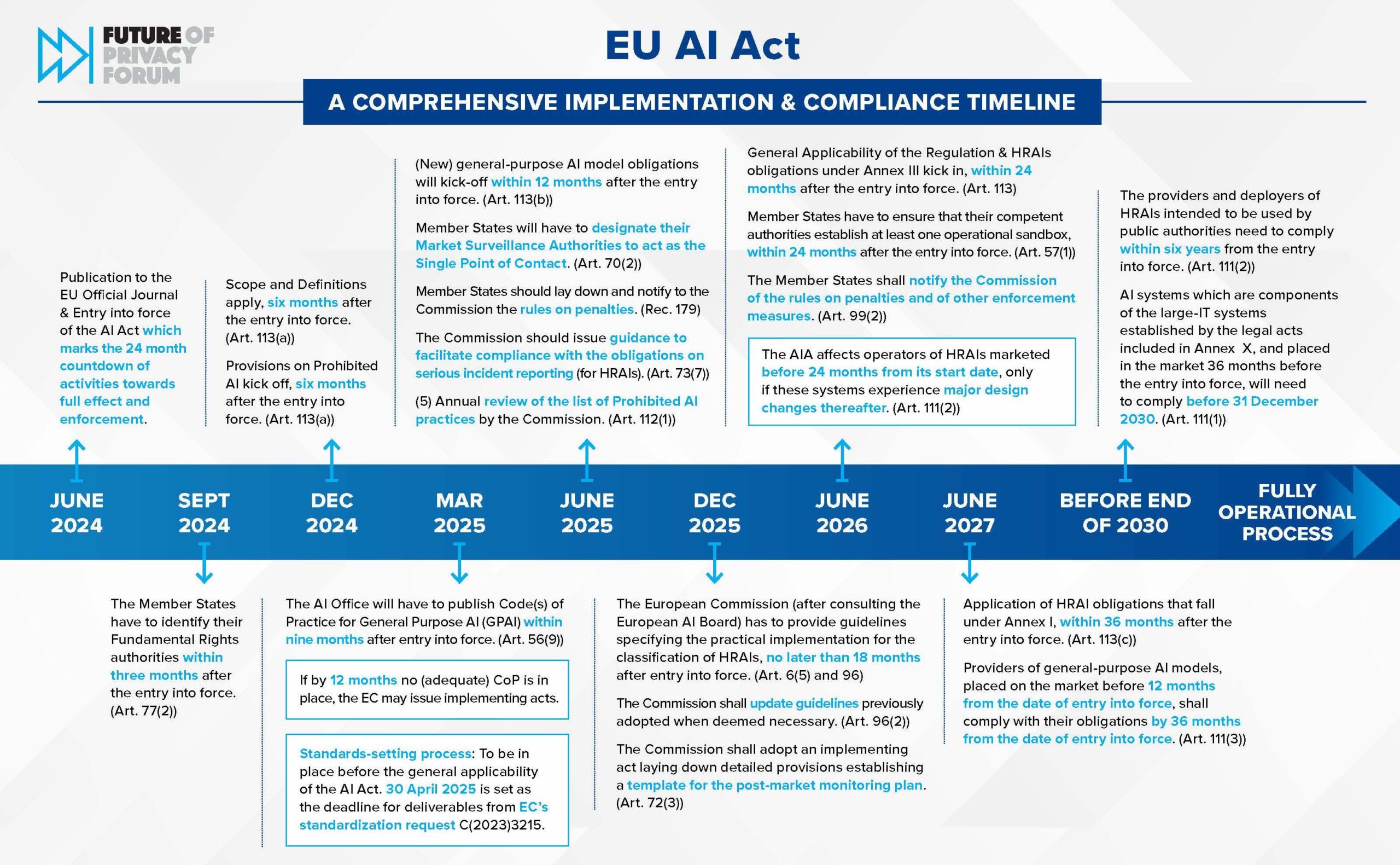

Regulatory frameworks are rapidly emerging worldwide to address AI-related risks. The European Union’s AI Act, which entered into force in August 2024 and applies in phases, imposes substantial penalties for non-compliance: up to €35 million or 7 percent of global annual revenue, whichever is higher, for the most serious violations. Similar regulations are developing across other major markets, creating a complex web of requirements that organizations must navigate.

What AI Compliance Covers

AI compliance encompasses multiple interconnected areas that organizations must address throughout the AI system lifecycle. Legal compliance forms the foundation, requiring adherence to existing laws around discrimination, privacy, and consumer protection that apply to AI-driven decisions.

Ethical considerations represent another critical dimension of AI compliance. These standards address fairness, transparency, and accountability in AI system behavior. Organizations establish ethics policies that guide AI development and deployment while preventing harmful outcomes for affected individuals and communities.

Technical requirements focus on the implementation of safeguards within AI systems themselves. These include bias detection mechanisms, explainability features, and monitoring systems that track AI performance over time. Technical compliance ensures that AI systems operate as intended while providing the transparency needed for regulatory oversight.

Major Regulatory Frameworks

The European Union AI Act is the most comprehensive AI regulation adopted to date. The Act categorizes AI systems by risk level. Prohibited applications include social scoring and, subject to narrow exceptions, real-time remote biometric identification (such as facial recognition) by law enforcement in publicly accessible spaces. High-risk AI systems used in employment, credit decisions, and law enforcement face strict requirements for testing, documentation, and human oversight.
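
The risk-based structure described above can be sketched as a simple lookup. This is a minimal illustration only: the tier names, the `classify` function, and the two sets are hypothetical and cover just the examples mentioned in this article. An actual classification under the Act depends on its annexes and on legal review.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high"
    OTHER = "other"


# Hypothetical, simplified sets built only from the examples named in the text.
PROHIBITED_USES = {"social_scoring", "real_time_public_facial_recognition"}
HIGH_RISK_DOMAINS = {"employment", "credit", "law_enforcement"}


def classify(use_case: str, domain: str) -> RiskTier:
    """Return an indicative risk tier for an AI system (not a legal determination)."""
    if use_case in PROHIBITED_USES:
        return RiskTier.PROHIBITED
    if domain in HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    return RiskTier.OTHER


if __name__ == "__main__":
    print(classify("resume_screening", "employment"))
    print(classify("social_scoring", "public_services"))
```

Checking prohibited uses first reflects the idea that a banned application stays banned regardless of the sector it is deployed in.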

The United States approaches AI regulation through multiple channels rather than a single comprehensive law. The NIST AI Risk Management Framework provides voluntary guidance that many organizations adopt. Federal agencies must comply with specific AI requirements under executive orders, while states like California are implementing their own AI transparency laws.

Industry-specific regulations add another layer of requirements. Financial services firms must comply with fair lending laws when using AI for credit decisions. Healthcare organizations face medical device regulations for AI diagnostic tools. Employment-related AI systems must meet anti-discrimination requirements that vary by jurisdiction.

Key Compliance Requirements

Documentation requirements form a central component of AI compliance across most regulatory frameworks. Organizations maintain detailed records of AI system development, including training data sources, model architectures, testing results, and performance metrics.

- Training data documentation — Complete records of data sources, collection methods, and quality validation procedures

- Model development records — Version control histories tracking every change made to AI models with business justification

- Decision audit trails — Comprehensive logs of inputs, processing steps, and outputs for each AI decision
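
A decision audit trail of the kind listed above might be sketched as follows. The field names and the hash-chaining scheme are assumptions made for illustration and are not prescribed by any regulation. Chaining each record to the previous one is one way to make later tampering detectable.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_audit_record(model_version, inputs, steps, output, prev_hash=""):
    """Build one audit record and link it to the previous record's hash."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "processing_steps": steps,
        "output": output,
        "prev_hash": prev_hash,
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["hash"] = hashlib.sha256(payload).hexdigest()
    return record


def verify_chain(records):
    """Return True if every record is unmodified and correctly linked."""
    prev = ""
    for rec in records:
        body = {k: v for k, v in rec.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        digest = hashlib.sha256(payload).hexdigest()
        if rec["prev_hash"] != prev or digest != rec["hash"]:
            return False
        prev = rec["hash"]
    return True


if __name__ == "__main__":
    first = make_audit_record("v1", {"income": 50000}, ["score"], "approve")
    second = make_audit_record("v1", {"income": 20000}, ["score"], "refer", first["hash"])
    print(verify_chain([first, second]))
```

In practice the records would be written to append-only storage; the in-memory list here only keeps the sketch short.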

Risk assessment processes require organizations to systematically evaluate potential harms from AI deployment. These assessments examine technical risks like model accuracy and bias, as well as broader societal impacts on affected populations.

Human oversight mechanisms ensure that people maintain meaningful control over AI-driven decisions. Regulatory frameworks typically require human review for high-stakes decisions like loan approvals or hiring choices. The level of required oversight varies based on the AI system’s risk classification and potential impact on individuals.
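
One way to express risk-dependent oversight is a routing rule that decides whether a decision goes to a person. The sketch below is an assumption-laden example: the `Decision` fields, the tier labels, and the 0.9 confidence floor are hypothetical, and real thresholds would come from the organization's own risk assessment.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    use_case: str      # e.g. "loan_approval"
    risk_tier: str     # "high" or "limited" (hypothetical labels)
    confidence: float  # model confidence in [0, 1]


def review_route(decision: Decision, confidence_floor: float = 0.9) -> str:
    """Route a decision to human review or to automated handling."""
    # High-stakes decisions always get a human reviewer.
    if decision.risk_tier == "high":
        return "human_review"
    # Lower-risk decisions are escalated only when the model is unsure.
    if decision.confidence < confidence_floor:
        return "human_review"
    return "automated"


if __name__ == "__main__":
    print(review_route(Decision("loan_approval", "high", 0.99)))
    print(review_route(Decision("faq_chatbot", "limited", 0.95)))
```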

Implementation Strategies

Organizations typically begin AI compliance implementation by conducting comprehensive assessments of their current AI landscape. These assessments inventory existing AI systems, evaluate current governance practices, and identify gaps between current practices and regulatory requirements.

Governance structure development establishes the organizational framework needed to support ongoing AI compliance. Many organizations create AI governance committees that include representatives from legal, technical, and business functions. These committees oversee AI risk management, approve new AI deployments, and ensure compliance with established policies.

Policy development translates regulatory requirements into specific organizational standards and procedures. AI governance policies typically address acceptable use cases, risk assessment procedures, testing requirements, and approval processes for AI deployment.

- Acceptable use policies — Define appropriate AI applications and prohibited use cases

- Risk assessment protocols — Establish systematic evaluation procedures for AI deployments

- Testing standards — Specify validation requirements before AI system deployment

- Approval workflows — Create clear processes for AI project authorization

Getting Professional Support

Organizations frequently engage external expertise to supplement internal capabilities for AI compliance implementation. Law firms specializing in AI regulation provide guidance on interpreting complex regulatory requirements and developing compliant policies.

Technology consultants help organizations select and implement technical solutions for AI governance and monitoring. Compliance auditors conduct independent assessments of AI systems and governance practices to identify potential issues before regulatory reviews.

Industry associations and standards organizations offer resources for AI compliance including best practice guidance, training programs, and peer networking opportunities. Organizations can participate in working groups that develop industry-specific compliance approaches while staying current on regulatory developments.

Understanding AI Compliance Fundamentals

AI compliance refers to ensuring that artificial intelligence systems operate within established legal frameworks, industry standards, and ethical principles. Organizations developing or deploying AI technologies work to align their systems with regulations, professional guidelines, and moral standards that govern how AI affects people and society.

The concept encompasses the processes, controls, and oversight mechanisms that organizations implement to meet requirements set by governments, industry bodies, and internal policies. AI compliance addresses both mandatory legal obligations and voluntary ethical commitments that guide responsible AI development and deployment.

Core Components

AI compliance includes several fundamental areas that organizations address when developing and operating AI systems.

Legal adherence involves following laws and regulations that apply to AI systems. Organizations comply with data protection laws, anti-discrimination statutes, and sector-specific regulations that govern how AI systems operate in different industries like healthcare, finance, or employment.

Data privacy protects personal information used in AI systems. Organizations implement safeguards for collecting, storing, processing, and sharing data while ensuring individuals maintain control over their personal information according to privacy regulations and organizational policies.

Transparency provides visibility into how AI systems operate and make decisions. Organizations document AI system capabilities, limitations, and decision-making processes to enable users, regulators, and affected individuals to understand how the systems function.

AI Compliance vs Traditional IT Compliance

AI compliance differs from traditional IT compliance in several ways that reflect the unique characteristics of AI systems.

Bias and discrimination represent distinct challenges for AI systems. Traditional IT systems process data according to predetermined rules, while AI systems learn patterns from data that may contain historical biases or demographic disparities. Organizations monitor AI outputs for discriminatory patterns and implement fairness measures that traditional IT compliance frameworks don’t address.

Algorithmic decision-making creates new accountability challenges. Traditional IT systems execute programmed instructions with predictable outcomes, while AI systems make decisions through complex algorithms that even their creators may not fully understand.

Dynamic behavior complicates AI compliance monitoring. Traditional IT systems maintain consistent behavior once deployed, while AI systems may change their behavior as they process new data or adapt to different environments.

Why AI Compliance Matters for Your Business

AI compliance affects businesses through risk mitigation, trust building, and responsible innovation. Organizations face increasing regulatory scrutiny as AI systems become more prevalent in business operations.

Regulatory penalties and legal risks represent significant concerns. The EU AI Act imposes fines of up to €35 million or 7 percent of global annual revenue, whichever is higher, for the most serious violations. These penalties can apply to organizations whose AI systems are placed on the EU market or whose outputs are used within the EU, regardless of where the systems are developed or operated.
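
As a worked illustration of the penalty ceiling, the snippet below computes the upper bound for two hypothetical companies. It assumes the "whichever is higher" rule that the Act applies to undertakings for its most serious violation category; the function name and the turnover figures are invented for the example, and actual fines are set case by case below this ceiling.

```python
def max_fine_eur(global_annual_turnover_eur: int) -> float:
    """Upper bound of the fine: the greater of EUR 35 million or 7% of turnover."""
    return max(35_000_000, global_annual_turnover_eur * 7 / 100)


if __name__ == "__main__":
    # Hypothetical company with EUR 100 million turnover: the flat amount dominates.
    print(max_fine_eur(100_000_000))
    # Hypothetical company with EUR 1 billion turnover: the percentage dominates.
    print(max_fine_eur(1_000_000_000))
```

The crossover sits at €500 million in turnover, since 7 percent of that amount equals €35 million.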

Legal action from affected individuals represents another significant risk. AI systems that produce discriminatory outcomes in hiring, lending, or other decisions can trigger lawsuits under existing civil rights laws. Class action lawsuits involving algorithmic bias have already resulted in multimillion-dollar settlements in the financial services and employment sectors.

Market access increasingly depends on demonstrating AI compliance capabilities. The EU AI Act and similar regulations create mandatory compliance requirements for organizations operating in regulated markets. Companies that develop comprehensive compliance capabilities gain access to markets where competitors may be excluded due to non-compliance.

Essential AI Risk Management Strategies

AI risk management involves systematic processes to identify, assess, and control potential problems that can arise from artificial intelligence systems. These strategies help organizations prevent harm while maintaining the benefits of AI technology.

Data Governance and Privacy Protection

Data governance establishes rules and processes for how organizations collect, store, use, and dispose of data in AI systems. Privacy protection ensures that personal information remains secure and that individuals maintain control over their data.

Organizations implement data governance through clear policies that specify who can access data, how long data is retained, and what purposes data can serve. Data lineage tracking records where data comes from and how it moves through AI systems, creating an audit trail for compliance and troubleshooting.

Privacy-preserving techniques allow AI systems to learn from data while protecting individual privacy. Differential privacy adds calibrated random noise to query results or training computations so that the presence or absence of any single individual’s record has only a small, bounded effect on the output, while overall statistical patterns are preserved. Federated learning trains AI models across multiple locations without moving raw data to a central server.
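
A minimal sketch of differential privacy is the Laplace mechanism applied to a counting query. The function names and the choice of `epsilon` below are assumptions for illustration; production systems typically rely on a vetted library and track a privacy budget across queries.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng=None) -> float:
    """Return a noisy count of the values that satisfy the predicate."""
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for v in values if predicate(v))
    # A counting query has sensitivity 1: one person changes it by at most 1,
    # so the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    ages = [23, 35, 41, 52, 67, 29, 38]
    print(private_count(ages, lambda a: a >= 40, epsilon=1.0))
```

Smaller values of `epsilon` add more noise and give stronger privacy, at the cost of less accurate answers.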

Bias Detection and Mitigation

Bias in AI systems occurs when algorithms produce unfair or discriminatory outcomes for different groups of people. Bias detection involves testing AI systems to identify when they treat similar situations differently based on protected characteristics like race, gender, or age.

Statistical testing measures how AI systems perform across different demographic groups. Common metrics include demographic parity, which checks whether positive outcomes occur at equal rates across groups, and equalized odds, which checks whether true positive and false positive rates are consistent across groups.
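
The two metrics can be computed from labels, predictions, and group membership. The sketch below uses plain Python and hypothetical helper names (`group_metrics`, `gap`); it assumes binary labels and predictions encoded as 0 and 1.

```python
def rate(flags):
    """Share of 1s in a list, or 0.0 for an empty list."""
    return sum(flags) / len(flags) if flags else 0.0


def group_metrics(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate, and false positive rate."""
    out = {}
    for g in sorted(set(groups)):
        idx = [i for i, x in enumerate(groups) if x == g]
        out[g] = {
            "selection_rate": rate([y_pred[i] for i in idx]),
            "tpr": rate([y_pred[i] for i in idx if y_true[i] == 1]),
            "fpr": rate([y_pred[i] for i in idx if y_true[i] == 0]),
        }
    return out


def gap(metrics, key):
    """Largest difference between any two groups for one metric."""
    values = [m[key] for m in metrics.values()]
    return max(values) - min(values)


if __name__ == "__main__":
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["a"] * 4 + ["b"] * 4
    m = group_metrics(y_true, y_pred, groups)
    print("demographic parity gap:", gap(m, "selection_rate"))
    print("equalized odds gap:", max(gap(m, "tpr"), gap(m, "fpr")))
```

A gap of zero means the groups are treated identically on that metric; what counts as an acceptable nonzero gap is a policy decision rather than a purely technical one.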

- Pre-processing methods — Modify training data to remove bias patterns or improve representation of underserved groups

- In-processing techniques — Incorporate fairness constraints directly into machine learning algorithms during training

- Post-processing approaches — Adjust AI system outputs to achieve more equitable outcomes while maintaining overall system performance
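
As an example of a pre-processing method, the sketch below implements reweighing, a well-known technique that this article does not name and that is used here purely for illustration. Each training example receives the weight P(group) × P(label) / P(group, label), so that group membership and label become statistically independent in the weighted data. The function name is hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Return one sample weight per training example."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


if __name__ == "__main__":
    groups = ["a", "a", "a", "b"]
    labels = [1, 1, 0, 0]
    print(reweighing_weights(groups, labels))
```

The resulting weights can be passed to any training routine that accepts per-sample weights. Combinations that are over-represented relative to independence get weights below 1, and under-represented ones get weights above 1.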

Transparency and Explainability

Transparency in AI systems means providing clear information about how the system works, what data it uses, and how it makes decisions. Explainability refers to the ability to understand and interpret specific AI decisions in terms that humans can comprehend.

Model documentation creates comprehensive records of AI system design, training procedures, performance characteristics, and limitations. This documentation includes information about training data sources, algorithm choices, hyperparameter settings, and validation results.

Explanation generation systems produce human-readable descriptions of how AI systems reach specific decisions. Local explanations focus on individual predictions, showing which input features most influenced a particular outcome. Global explanations describe overall system behavior patterns and decision-making tendencies.
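
For a linear scoring model, local and global explanations can be sketched directly from the coefficients. The example assumes a hypothetical linear model whose weights are known; for non-linear models, attribution methods such as SHAP or LIME play a similar role. All names and numbers below are invented for illustration.

```python
def local_contributions(weights, baseline, x):
    """Per-feature contribution to one prediction, relative to a baseline input."""
    return {name: weights[name] * (x[name] - baseline[name]) for name in weights}


def global_importance(weights, baseline, rows):
    """Mean absolute contribution of each feature across many inputs."""
    totals = {name: 0.0 for name in weights}
    for row in rows:
        for name, value in local_contributions(weights, baseline, row).items():
            totals[name] += abs(value)
    return {name: total / len(rows) for name, total in totals.items()}


if __name__ == "__main__":
    weights = {"income": 0.5, "debt": -2.0}
    baseline = {"income": 4.0, "debt": 1.0}
    applicant = {"income": 6.0, "debt": 2.0}
    # Local view: which features moved this applicant's score, and by how much.
    print(local_contributions(weights, baseline, applicant))
    # Global view: which features matter most on average.
    print(global_importance(weights, baseline, [applicant, baseline]))
```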

Technical Requirements for AI Compliance

AI compliance requires specific technical implementations that go beyond writing policies or forming committees. Organizations implement technical systems to track, validate, and monitor their AI systems throughout their entire lifecycle.

Documentation and Audit Trails

AI compliance documentation creates comprehensive records of every aspect of AI system development and operation. This documentation serves as evidence that organizations followed proper procedures and made responsible decisions throughout the AI lifecycle.

Organizations maintain detailed records of model architecture decisions, algorithm selection rationale, and development methodology choices. These records include version control histories that track every change made to AI models, along with the business justification for each modification.

Complete training data documentation includes data source identification, collection methodologies, preprocessing steps, and quality validation procedures. Organizations record data lineage information that traces each data element from its original source through all transformations and modifications.
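
Data lineage can be represented as a chain of records, each pointing to the step it was derived from. The `LineageNode` class and the dataset names below are hypothetical; dedicated metadata or catalog tools would normally store this information.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class LineageNode:
    name: str                               # dataset or artifact name
    operation: str                          # how it was produced
    parent: Optional["LineageNode"] = None  # the step it was derived from

    def trace(self) -> List[str]:
        """Return the chain of steps from the original source to this node."""
        steps = []
        node = self
        while node is not None:
            steps.append(f"{node.name} ({node.operation})")
            node = node.parent
        return list(reversed(steps))


if __name__ == "__main__":
    raw = LineageNode("crm_export.csv", "source")
    deduped = LineageNode("customers_dedup", "drop duplicate rows", raw)
    training = LineageNode("training_set_v1", "impute missing values", deduped)
    for step in training.trace():
        print(step)
```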

Model Validation and Testing

AI model validation establishes that systems perform as intended while meeting accuracy, fairness, and safety standards. Validation protocols create repeatable processes for evaluating AI systems before deployment and throughout their operational life.

Accuracy validation measures how well AI systems perform their intended functions across different scenarios and data conditions. Testing protocols establish baseline performance metrics during development and create benchmarks for ongoing evaluation.

Fairness testing evaluates whether AI systems produce equitable outcomes across different demographic groups and use cases. These procedures implement statistical tests that measure disparate impact and identify potential discriminatory effects.
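
A disparate impact test can be sketched as a ratio of selection rates. The 0.8 threshold below follows the "four-fifths" rule of thumb used in US employment contexts; it is an assumption added for illustration, not a requirement stated in this article, and the function names are hypothetical.

```python
def disparate_impact_ratio(rate_a: float, rate_b: float) -> float:
    """Ratio of the lower selection rate to the higher one (1.0 means parity)."""
    high = max(rate_a, rate_b)
    if high == 0:
        return 1.0  # nobody selected in either group
    return min(rate_a, rate_b) / high


def flags_disparate_impact(rate_a: float, rate_b: float, threshold: float = 0.8) -> bool:
    """Return True when the ratio falls below the chosen threshold."""
    return disparate_impact_ratio(rate_a, rate_b) < threshold


if __name__ == "__main__":
    # Hypothetical selection rates: 30% for one group, 50% for another.
    print(disparate_impact_ratio(0.30, 0.50))
    print(flags_disparate_impact(0.30, 0.50))
```

A flagged result is a prompt for investigation rather than proof of discrimination, since small samples and legitimate factors can also produce low ratios.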

Performance Monitoring

Continuous monitoring systems track AI performance in real time and alert organizations when systems drift from expected behavior patterns. These monitoring capabilities enable rapid detection of compliance issues before they impact users or violate regulatory requirements.

Monitoring systems collect performance metrics continuously during AI system operation and compare current performance against established baselines. These systems track accuracy rates, response times, error frequencies, and user interaction patterns to identify performance trends and anomalies.
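
The baseline comparison described above can be sketched as a tolerance check per metric. The metric names, baseline values, and tolerances are hypothetical; a real deployment would also consider statistical significance and time windows.

```python
def find_drift(baseline, current, tolerance):
    """Compare current metrics with a baseline and list those that moved too far."""
    alerts = []
    for metric, expected in baseline.items():
        observed = current.get(metric)
        if observed is None:
            # A metric that stopped reporting is itself worth an alert.
            alerts.append((metric, "missing"))
        elif abs(observed - expected) > tolerance[metric]:
            alerts.append((metric, "out_of_range"))
    return alerts


if __name__ == "__main__":
    baseline = {"accuracy": 0.92, "error_rate": 0.03}
    current = {"accuracy": 0.85, "error_rate": 0.035}
    tolerance = {"accuracy": 0.02, "error_rate": 0.01}
    print(find_drift(baseline, current, tolerance))
```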

Ongoing bias monitoring evaluates AI system outputs across different population groups to detect emerging fairness issues. Monitoring systems implement statistical tests that run automatically on system outputs and flag potential discriminatory patterns.

Building Your AI Governance Framework

Creating an effective AI governance framework requires deliberate organizational design and clear processes that span multiple departments and expertise areas. A robust framework brings together technical teams, legal professionals, business stakeholders, and compliance specialists.

Organizational Structure and Roles

The AI ethics officer serves as the central point of accountability for AI governance decisions within an organization. This role typically reports to executive leadership and coordinates AI governance activities across different departments.

Cross-functional governance committees provide oversight and decision-making authority for AI-related policies and high-risk deployments. These committees typically include representatives from legal, compliance, technology, business operations, and external subject matter experts.

Specialized technical teams conduct detailed assessments of AI systems including bias testing, performance evaluation, and security reviews. These teams typically include data scientists, machine learning engineers, and software architects who possess deep technical knowledge of AI systems.

Policy Development and Implementation

Organizations develop comprehensive policy frameworks that address AI ethics principles, risk management procedures, technical standards, and operational requirements. Policy frameworks typically start with high-level principles that reflect organizational values and regulatory requirements.

Systematic monitoring of regulatory developments enables organizations to identify changes that affect existing AI systems and compliance requirements. Teams track federal, state, and international regulatory developments while assessing their potential impact on current AI deployments and planned projects.

Detailed implementation guidelines translate high-level policies into specific procedures that technical teams and business users can follow in their daily work. Guidelines address topics such as risk assessment procedures, testing requirements, documentation standards, and approval workflows for AI system deployment.

Frequently Asked Questions About AI Compliance

What timeline do companies typically follow when implementing AI compliance programs?

Most organizations require 6 to 18 months to implement comprehensive AI compliance programs. Companies with existing governance structures and mature AI operations typically complete implementation faster, while organizations starting from minimal governance foundations need longer timelines.

The implementation phases include initial risk assessment (2 to 3 months), policy development and governance structure establishment (3 to 6 months), technical implementation of monitoring systems (4 to 8 months), and staff training and testing (2 to 4 months). Run strictly one after another, these phases would total 11 to 21 months, so the 6-to-18-month range assumes that some phases overlap. Organizations deploying high-risk AI systems face longer timelines due to more stringent documentation and testing requirements.

How do small businesses manage AI compliance with limited resources?

Small businesses using AI systems face compliance obligations that depend on AI risk levels, industry sectors, and jurisdictions where they operate. Businesses using minimal-risk AI applications like basic chatbots or recommendation systems typically have limited compliance requirements, while those deploying high-risk AI systems face the same regulatory obligations as larger organizations.

The EU AI Act and similar regulations apply compliance requirements based on AI system risk levels rather than organization size. Small businesses can start with basic compliance frameworks, leverage cloud provider compliance tools, and engage external consultants for specialized expertise rather than hiring full-time compliance staff.

Which third-party AI compliance tools provide the best value for organizations?

Popular AI compliance platforms include comprehensive solutions like Dataiku’s AI governance features, H2O.ai’s MLOps platform, and specialized vendors like Credo AI and Arthur. These systems typically connect to model registries, data pipelines, and deployment infrastructure to collect compliance-relevant information automatically.

Organizations should evaluate tools based on their specific compliance requirements, existing technology infrastructure, and budget constraints. Many cloud providers offer integrated compliance features within their AI platforms, while independent vendors focus on specific compliance challenges like bias detection or explainability.

How often do organizations need to update their AI compliance programs?

Organizations typically review and update AI compliance programs quarterly to address regulatory changes, new AI system deployments, and evolving risk profiles. The dynamic nature of AI regulations requires more frequent monitoring than traditional compliance programs, with many companies conducting monthly regulatory updates and annual comprehensive program reviews.

Major updates occur when new regulations take effect, organizations deploy new AI technologies, or significant incidents reveal gaps in existing compliance frameworks. Organizations also update compliance programs following external audits, regulatory guidance changes, or shifts in business operations that affect AI system usage and risk profiles.