AI ethics in healthcare refers to the moral principles and guidelines that govern how artificial intelligence systems are developed, deployed, and used in medical settings to ensure patient safety, fairness, and respect for human dignity.

Healthcare organizations worldwide are rapidly adopting AI technologies to improve patient diagnosis, treatment planning, and care delivery. These systems analyze medical images, predict patient outcomes, and assist doctors in making complex clinical decisions. The power of AI to transform medicine brings tremendous potential benefits but also creates significant ethical challenges.

AI systems in healthcare make decisions that directly impact patient lives, from determining treatment recommendations to allocating scarce medical resources. When these systems contain biases or fail to protect patient privacy, the consequences can be severe. Research has documented cases where AI algorithms performed less accurately for certain racial groups or failed to protect sensitive health information.

The complexity of AI ethics in healthcare stems from the intersection of rapidly advancing technology with fundamental medical principles like “do no harm” and patient autonomy. Healthcare leaders, technology developers, and policymakers work together to ensure AI serves all patients fairly while maintaining the trust that forms the foundation of medical care.

Understanding AI Ethics in Healthcare

AI ethics in healthcare addresses the moral challenges that arise when artificial intelligence systems help make medical decisions. These systems process patient data to diagnose diseases, recommend treatments, and predict health outcomes. Unlike AI in many other industries, healthcare AI directly affects human lives and safety.

The core challenge is balancing AI’s potential benefits with protecting patients from harm. Healthcare AI can improve diagnostic accuracy and reduce medical errors. However, these same systems can perpetuate biases against certain patient groups or expose sensitive medical information if not properly designed and monitored.

Healthcare AI applications include diagnostic imaging systems that detect cancer in X-rays and MRIs, treatment recommendation engines that suggest optimal therapies based on patient characteristics, and predictive models that identify patients at risk for complications. Each application presents unique ethical considerations.

Medical ethics provides the foundation for healthcare AI governance. The principle of beneficence requires AI systems to actively improve patient care. Non-maleficence means avoiding harm through biased algorithms or privacy breaches. Patient autonomy ensures people maintain control over their medical decisions even when AI assists providers. Justice demands fair access to AI benefits across all patient populations.

Core Ethical Principles for Healthcare AI

Healthcare AI operates under established medical ethics principles adapted for technological contexts. These principles guide how organizations develop, evaluate, and deploy AI systems in clinical settings.

Beneficence requires AI systems to actively promote patient well-being. This means AI tools should improve diagnostic accuracy, enhance treatment effectiveness, or increase access to quality care. For example, AI systems that detect early-stage diseases missed by human doctors demonstrate clear beneficence.

Non-maleficence focuses on preventing harm from AI systems. Direct harm occurs when AI provides incorrect diagnoses or inappropriate treatment recommendations. Indirect harm results from privacy breaches, over-reliance on AI that reduces clinical skills, or biased algorithms that disadvantage certain patient groups.

Patient autonomy becomes complex with AI systems because patients may not understand how algorithms influence their care. Healthcare providers must explain AI’s role in medical decisions using clear, accessible language. Patients retain the right to refuse AI-assisted care when alternatives exist.

Justice in healthcare AI addresses fair distribution of benefits and risks across patient populations. AI systems trained primarily on data from certain demographic groups may perform poorly for underrepresented populations, creating health disparities.

Patient Autonomy and AI Transparency

Patients have the right to understand when AI systems influence their medical care and to maintain control over treatment decisions. Honoring that right is difficult because many AI systems operate as “black boxes” whose reasoning doctors cannot readily explain.

Healthcare providers face the challenge of explaining complex AI algorithms to patients in understandable terms. When an AI system recommends a specific treatment or identifies a potential health risk, patients deserve to know how the technology reached that conclusion.

Informed consent processes require updates to address AI involvement in healthcare decisions. Patients need clear explanations about which aspects of their care involve AI assistance, how accurate these systems are, and what alternatives exist without AI support.

- Explanation requirements — Healthcare providers explain AI’s role using simple language that avoids technical jargon

- Alternative options — Patients learn about non-AI care alternatives when available

- Accuracy information — Providers share AI system performance data relevant to the patient’s situation

- Decision control — Patients maintain final authority over accepting or rejecting AI recommendations

Communication strategies help patients understand AI contributions to their care without overwhelming them with technical details. Visual aids can show how AI analyzes medical images or highlight risk factors in patient data. Layered explanations provide basic information initially with additional detail available for interested patients.

AI Bias and Healthcare Disparities

AI bias in healthcare occurs when algorithms make unfair or inaccurate decisions that systematically disadvantage certain patient groups. This bias can perpetuate existing health disparities or create new forms of discrimination in medical care.

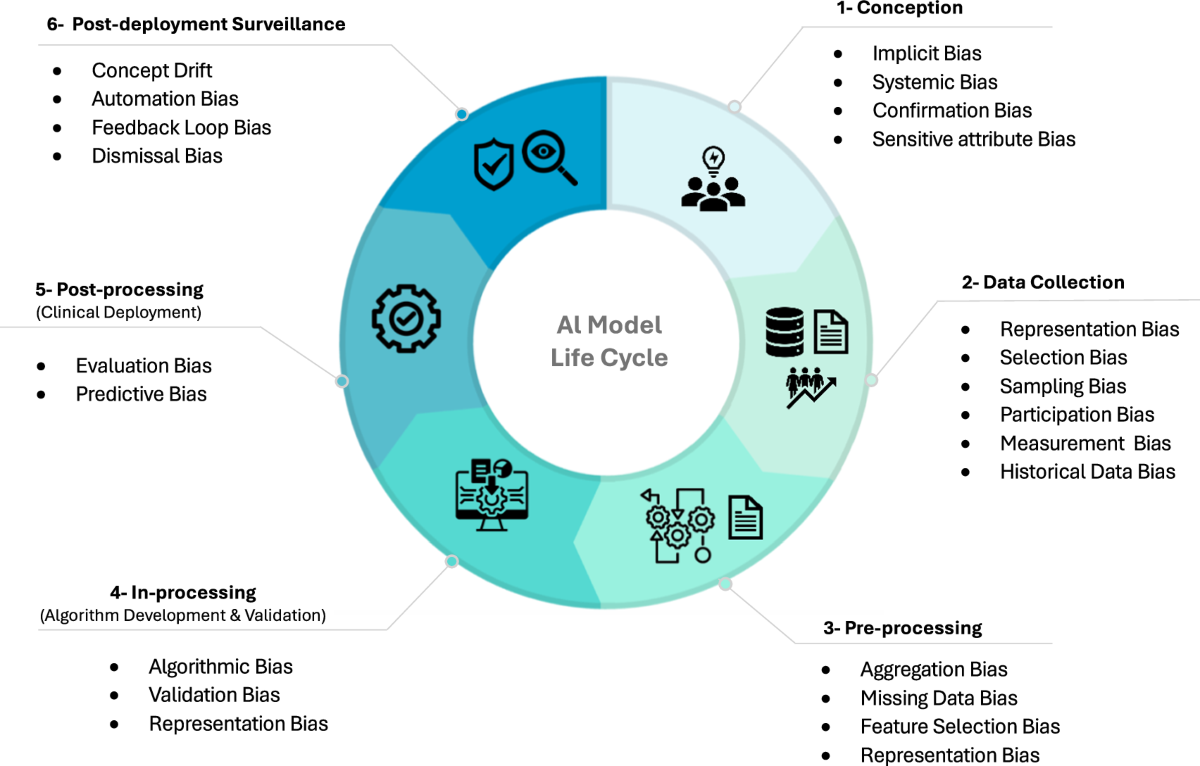

Source: Nature

Healthcare AI bias emerges from several sources. Training data may underrepresent certain populations, leading to algorithms that perform poorly for those groups. Historical medical data can contain embedded prejudices from past healthcare practices. Algorithm design choices may inadvertently favor certain patient characteristics over others.

Research has documented concerning examples of bias in healthcare AI systems. Cardiovascular risk assessment tools show reduced accuracy for African American patients compared to white patients. Skin cancer detection algorithms perform poorly on darker skin tones because training data included fewer images of dark-skinned patients. Kidney function calculators historically used race-based adjustments that potentially delayed appropriate care for Black patients.

Gender bias affects AI diagnostic accuracy in multiple medical specialties. Chest X-ray analysis algorithms trained predominantly on male patient data exhibit lower accuracy for female patients. Heart disease prediction models developed using mostly male subjects demonstrate reduced reliability for women.

Healthcare organizations can implement strategies to detect and reduce AI bias throughout the development and deployment process:

- Data auditing — Review training datasets to identify underrepresented populations and collect additional diverse data

- Algorithm testing — Evaluate AI performance across different demographic groups during development

- Performance monitoring — Track clinical outcomes and accuracy metrics by patient population after deployment

- Bias correction — Apply mathematical techniques to reduce disparate impacts on different groups
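The algorithm testing and performance monitoring steps above can be sketched in a few lines. This is a minimal illustration, not a validated audit tool: the group labels, the records, and the `MAX_GAP` tolerance are all made up for the example, and sensitivity is only one of several metrics an organization might compare across groups.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """Return {group: sensitivity} for records of (group, y_true, y_pred).

    Sensitivity = true positives / all actual positives, computed per group.
    Groups with no actual positives are omitted.
    """
    true_pos = defaultdict(int)
    actual_pos = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            actual_pos[group] += 1
            if y_pred == 1:
                true_pos[group] += 1
    return {g: true_pos[g] / actual_pos[g] for g in actual_pos}

def largest_gap(per_group):
    """Difference between the best-served and worst-served groups."""
    values = list(per_group.values())
    return max(values) - min(values)

# Synthetic records: (group label, actual condition, model prediction).
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_a", 0, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 1, 0),
    ("group_b", 0, 0),
]

per_group = sensitivity_by_group(records)
gap = largest_gap(per_group)

MAX_GAP = 0.10  # hypothetical tolerance; a real threshold is a policy decision
needs_review = gap > MAX_GAP
```

In this synthetic data the model finds three of four true cases in one group but only one of four in the other, so the gap exceeds the tolerance and the system would be flagged for review. The same function can be rerun on post-deployment data to support ongoing monitoring.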

Privacy Protection in Healthcare AI

Healthcare AI systems handle extremely sensitive personal information including medical records, diagnostic images, treatment histories, and genetic data. Protecting this information requires comprehensive privacy safeguards that address the unique challenges of AI processing.

Source: The HIPAA Journal

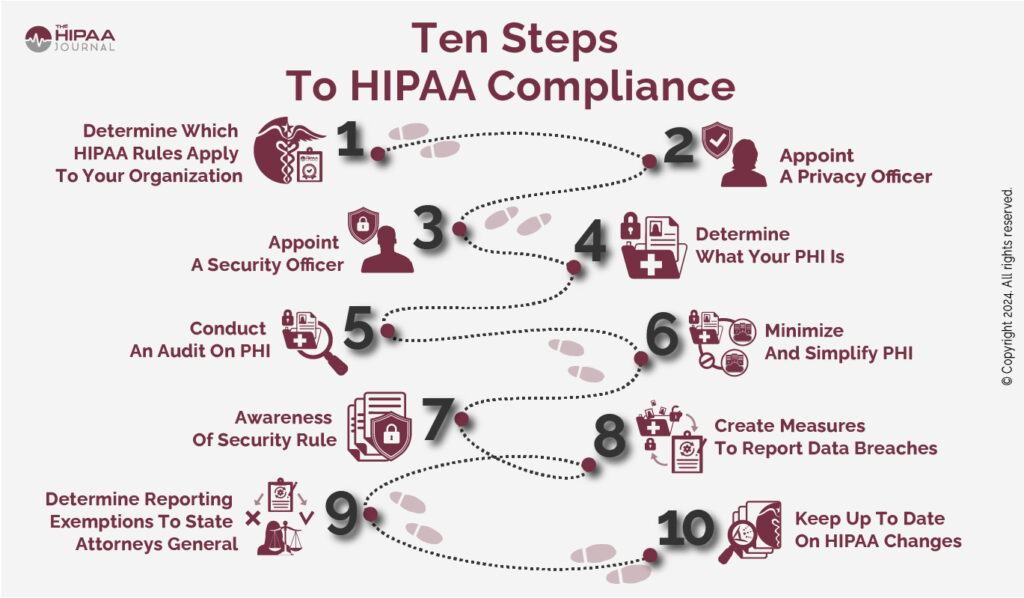

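The Health Insurance Portability and Accountability Act (HIPAA) establishes strict rules for protecting patient health information. Those rules bind covered entities, such as providers and health plans, and their business associates, so they govern any AI system these organizations use to handle patient data. HIPAA covers “protected health information” (PHI): individually identifiable health information that a covered entity or business associate holds or transmits.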

AI systems that access, process, or store PHI must comply with HIPAA’s Privacy Rule, Security Rule, and Breach Notification Rule. Healthcare organizations remain ultimately responsible for HIPAA compliance even when using third-party AI vendors.

Technical safeguards protect patient data throughout the AI lifecycle. Data encryption secures information both at rest and during transmission. Role-based access controls ensure only authorized personnel can access PHI through AI systems. Comprehensive audit trails document every interaction with patient data.

- Encryption protocols — Protect data using advanced encryption standards during storage and transmission

- Access controls — Limit data access based on job responsibilities and clinical roles

- Audit logging — Document who accessed what data and when for compliance monitoring

- Data minimization — AI systems access only the health information necessary for their intended function
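As a rough sketch of how access controls, audit logging, and data minimization fit together, the example below filters a record down to the fields a role may see and logs each access. The role names, field names, and the `ROLE_FIELDS` mapping are hypothetical. Encryption is deliberately left out, since it should come from a vetted library rather than hand-written code, and dropping identifier fields as shown here is not by itself HIPAA de-identification.

```python
from datetime import datetime, timezone

# Hypothetical mapping from role to the record fields that role may read.
ROLE_FIELDS = {
    "radiologist": {"patient_id", "imaging", "diagnosis"},
    "billing": {"patient_id", "insurance"},
    "risk_model": {"age", "lab_results"},  # an AI service; no direct identifiers
}

audit_log = []

def read_record(user, role, record):
    """Return only the fields the role is allowed to see, and log the access."""
    allowed = ROLE_FIELDS.get(role, set())  # unknown roles see nothing
    visible = {k: v for k, v in record.items() if k in allowed}
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "fields": sorted(visible),
    })
    return visible

record = {
    "patient_id": "P-001",
    "age": 57,
    "imaging": "chest_xray_0001",
    "diagnosis": "pending",
    "insurance": "plan-x",
    "lab_results": {"creatinine": 1.1},
}

model_view = read_record("svc-risk-model", "risk_model", record)
unknown_view = read_record("jdoe", "visitor", record)
```

The AI service receives only the two fields its function needs, the unrecognized role receives nothing, and both attempts leave an entry in the audit log.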

Business Associate Agreements with AI vendors establish specific responsibilities for protecting PHI. These agreements address how vendors can use patient data for model training, what security measures they implement, and how they handle data breaches or system failures.

Explainable AI in Medical Decision-Making

Healthcare artificial intelligence systems often operate as “black boxes” that make medical recommendations without revealing their reasoning process. This lack of transparency creates challenges for patient safety, professional accountability, and informed consent.

Explainable AI refers to systems that provide clear, understandable explanations for their decisions and recommendations. In healthcare settings, explainable AI reveals which patient symptoms, test results, or medical history factors most strongly influenced a diagnostic or treatment recommendation.

Medical decisions carry life-or-death consequences, making transparency essential for patient safety. When an AI system recommends a specific cancer treatment, doctors must understand the reasoning to evaluate whether the recommendation aligns with their clinical judgment.

Several technical approaches make AI decision-making more transparent for healthcare professionals. Feature importance methods highlight which patient data points most strongly influenced an AI recommendation. Attention mechanisms show which parts of medical images an AI system focused on during analysis.
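To make the idea of feature importance concrete, here is a minimal sketch for the simplest case, a logistic regression model, where each feature's contribution to the prediction can be read off directly. The coefficients, feature names, and baseline patient are invented for illustration. For nonlinear models this exact decomposition does not hold, and approximate methods such as permutation importance or SHAP values are commonly used instead.

```python
import math

# Hypothetical coefficients of an already-trained logistic regression model.
WEIGHTS = {"age_decades": 0.30, "systolic_bp_per_10": 0.10, "smoker": 0.80}
INTERCEPT = -4.0

def predict_risk(patient):
    """Logistic model: risk = 1 / (1 + exp(-(intercept + sum(w * x))))."""
    z = INTERCEPT + sum(WEIGHTS[f] * patient[f] for f in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))

def contributions(patient, baseline):
    """Each feature's share of the log-odds difference from a baseline patient."""
    return {f: WEIGHTS[f] * (patient[f] - baseline[f]) for f in WEIGHTS}

baseline = {"age_decades": 5.0, "systolic_bp_per_10": 12.0, "smoker": 0}
patient = {"age_decades": 7.0, "systolic_bp_per_10": 15.0, "smoker": 1}

contrib = contributions(patient, baseline)

# Rank features by how strongly they moved this patient's prediction.
ranked = sorted(contrib.items(), key=lambda kv: abs(kv[1]), reverse=True)
```

For this made-up patient, smoking status moves the prediction most, followed by age and then blood pressure. A ranked list of this kind is what a clinician would check against their own judgment before acting on the recommendation.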

Healthcare providers face the challenge of explaining AI-assisted medical decisions to patients in understandable language. Patients need to know when AI systems contribute to their diagnosis or treatment recommendations without requiring technical details about algorithms.

Simple visual aids help patients understand AI contributions to their care. Doctors can show patients highlighted areas on medical images where AI detected abnormalities. Charts and graphs illustrate how different risk factors contribute to disease predictions.

Healthcare AI Governance Frameworks

Healthcare organizations require structured governance frameworks to ensure AI systems operate ethically and safely. These frameworks establish clear roles, responsibilities, and processes for overseeing AI implementation across all deployment stages.

Effective governance includes organizational oversight through dedicated committees, systematic risk assessment processes, and comprehensive vendor evaluation protocols. Each component addresses different aspects of AI deployment while working together to maintain ethical standards and regulatory compliance.

AI ethics committees serve as the central decision-making body for healthcare AI governance. These committees provide oversight, guidance, and approval for AI systems throughout their lifecycle in healthcare settings.

Committee composition includes diverse expertise to address complex ethical challenges:

- Clinical leaders — Medical professionals with expertise in affected specialties

- Technical experts — Data scientists and AI specialists who understand system capabilities

- Legal counsel — Attorneys familiar with healthcare regulations and liability issues

- Patient advocates — Representatives who can speak to patient concerns and community needs

The committee’s primary responsibilities center on evaluating AI proposals and monitoring deployed systems. Key activities include reviewing AI system proposals for ethical compliance, establishing organizational policies for AI use, and investigating incidents involving AI systems.

Risk assessment processes identify potential harms associated with AI systems and establish mitigation strategies. Healthcare organizations conduct systematic evaluations to understand AI risks and implement appropriate safeguards.

Regulatory Compliance for Healthcare AI

Healthcare AI systems operate within a complex regulatory environment designed to protect patient safety while allowing medical innovation. Multiple government agencies have established rules that healthcare organizations must follow when implementing AI technologies.

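The Food and Drug Administration (FDA) regulates AI-enabled software that meets the definition of a medical device. These products go through the agency's existing premarket pathways (510(k) clearance, De Novo classification, or premarket approval). The FDA has also published guidance specific to AI and machine learning, including on predetermined change control plans that let manufacturers describe planned model updates in advance.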

The FDA’s Software as a Medical Device framework categorizes AI tools based on their clinical risk and intended use. High-risk AI applications that influence critical medical decisions require extensive clinical validation and premarket approval. Lower-risk applications may qualify for streamlined review processes.

HIPAA requirements apply to AI systems that access, process, or store protected health information. Healthcare organizations must ensure their AI implementations comply with HIPAA’s Privacy Rule, Security Rule, and Breach Notification Rule.

Data minimization principles require AI systems to access only the health information necessary for their intended function. However, many AI applications perform better with comprehensive patient data, creating tension between privacy protection and system performance.

State governments have begun implementing AI-specific healthcare regulations that supplement federal requirements. California, for example, requires healthcare providers to disclose when generative AI is used to produce patient communications that contain clinical information. Professional licensing boards in some states have issued guidance about physician responsibilities when using AI diagnostic tools.

Implementation Strategies for Ethical Healthcare AI

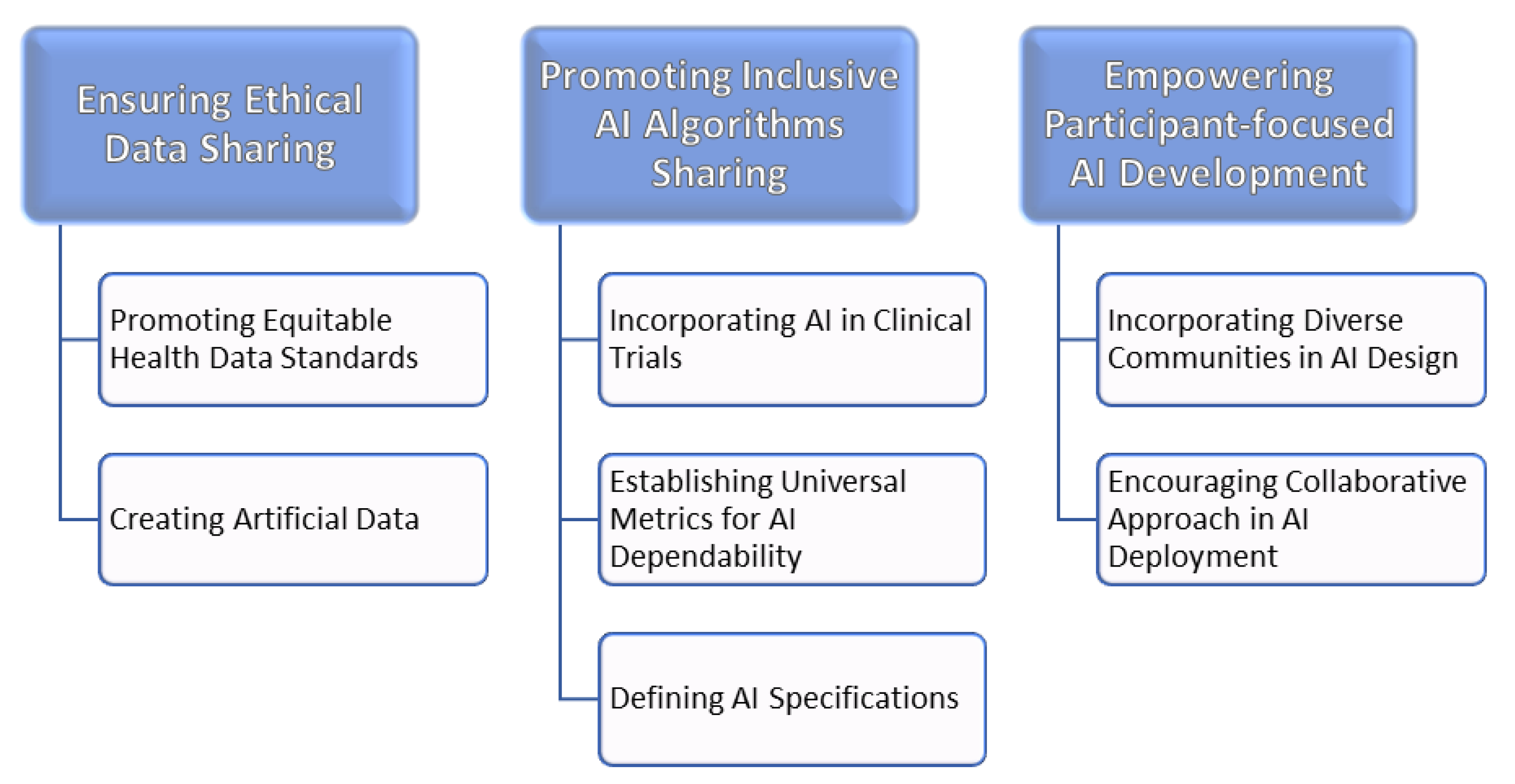

Healthcare organizations can follow structured approaches to implement AI systems while maintaining ethical standards and protecting patients. These strategies address technical, educational, and governance aspects of responsible AI deployment.

Source: Porton Health

Establishing multidisciplinary governance committees represents the first step in ethical AI implementation. These committees include healthcare providers, AI experts, ethicists, legal advisors, patient representatives, and data scientists to oversee AI decisions and policies.

Comprehensive risk assessments evaluate potential harms before deploying AI systems. Organizations examine data security vulnerabilities, privacy concerns, bias risks, and clinical safety considerations. This assessment informs decisions about whether to proceed with AI implementation and what safeguards to establish.
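One way to structure such an assessment is a simple risk register. The sketch below assumes a likelihood-times-severity scoring convention, which is common in risk management but is not prescribed by this article. The 1 to 5 scales, the `block_at` threshold, and the example entries are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Risk:
    category: str     # e.g. "bias", "privacy", "clinical safety", "security"
    description: str
    likelihood: int   # 1 (rare) to 5 (almost certain); hypothetical scale
    severity: int     # 1 (negligible) to 5 (catastrophic); hypothetical scale

    @property
    def score(self):
        return self.likelihood * self.severity

def triage(risks, block_at=15):
    """Sort risks by score, then split them into those that block deployment
    and those that can proceed with safeguards."""
    ordered = sorted(risks, key=lambda r: r.score, reverse=True)
    blocking = [r for r in ordered if r.score >= block_at]
    manageable = [r for r in ordered if r.score < block_at]
    return blocking, manageable

risks = [
    Risk("bias", "Lower accuracy for underrepresented groups", 4, 4),
    Risk("privacy", "Vendor reuses PHI for model training", 2, 5),
    Risk("clinical safety", "Missed diagnosis accepted without review", 2, 5),
    Risk("security", "Unencrypted data in transit", 1, 4),
]

blocking, manageable = triage(risks)
```

Here only the bias risk reaches the blocking threshold, so it would need mitigation before deployment, while the remaining risks would proceed with documented safeguards. In practice the governance committee sets the scoring and thresholds.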

Staff training programs ensure healthcare professionals understand AI capabilities, limitations, and ethical considerations. Training covers AI system functionality, potential biases and risks, and best practices for integrating AI tools into clinical workflows.

Education programs address the importance of data quality and diversity in AI systems. Healthcare workers learn why representative datasets matter for equitable patient care and how to recognize potential bias in AI recommendations.

Technical safeguards protect patient data and maintain AI system integrity through multiple layers of security and monitoring. Data encryption protocols secure information, while role-based access controls limit data access to authorized personnel only.

Performance measurement systems track AI effectiveness and ethical compliance through specific metrics. These systems evaluate clinical outcomes, equity impacts, and adherence to organizational policies and regulatory requirements.

Common Challenges in Healthcare AI Ethics Implementation

Healthcare organizations encounter several recurring challenges when implementing ethical AI systems. Understanding these challenges helps organizations prepare for common obstacles and develop effective solutions.

Staff resistance to AI adoption often stems from concerns about job displacement, increased workload, or skepticism about AI accuracy. Healthcare professionals may worry that AI systems will replace clinical judgment or create additional documentation requirements without clear benefits.

Technical integration challenges arise when AI systems don’t work well with existing electronic health records or clinical workflows. Poor integration can disrupt patient care processes and reduce AI effectiveness. Organizations address these challenges through careful system selection and pilot testing programs.

Cost considerations affect AI ethics implementation because comprehensive governance requires significant investment in staff training, monitoring systems, and ongoing compliance activities. Smaller healthcare organizations may struggle to afford robust AI ethics programs.

Vendor management complexities emerge when working with AI companies that may not fully understand healthcare regulations or ethical requirements. Organizations must carefully evaluate vendors and establish clear contractual obligations for ethical AI development and deployment.

Patient communication challenges occur when explaining AI involvement in medical care. Healthcare providers need training to communicate AI concepts clearly without causing confusion or anxiety among patients.

Frequently Asked Questions About AI Ethics in Healthcare

How do healthcare organizations detect bias in their AI diagnostic systems?

Healthcare organizations detect bias through systematic testing across different patient populations and continuous monitoring of clinical outcomes. Organizations compare AI performance metrics between demographic groups, analyze diagnostic accuracy rates for different patient populations, and track whether certain groups receive lower-quality recommendations from AI systems.

Testing protocols evaluate AI systems using diverse datasets that represent the organization’s patient population. Organizations examine whether AI accuracy remains consistent across racial, ethnic, gender, and age groups. Performance monitoring continues after deployment to identify bias that may emerge over time as patient populations or AI systems change.
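Before comparing accuracy across groups, it helps to confirm that the test set actually resembles the patient population. The following sketch flags groups that are underrepresented in a test set. The age bands, population shares, and 5-point tolerance are assumptions made up for the example.

```python
from collections import Counter

def representation_gaps(test_groups, population_share, tolerance=0.05):
    """Return groups whose share of the test set falls short of their
    share of the patient population by more than `tolerance`."""
    total = len(test_groups)
    if total == 0:
        raise ValueError("test set is empty")
    counts = Counter(test_groups)
    gaps = {}
    for group, expected in population_share.items():
        observed = counts.get(group, 0) / total
        if expected - observed > tolerance:
            gaps[group] = round(expected - observed, 3)
    return gaps

# Synthetic example: 100 test cases compared with hypothetical population shares.
test_groups = ["18-39"] * 50 + ["40-64"] * 40 + ["65+"] * 10
population_share = {"18-39": 0.30, "40-64": 0.40, "65+": 0.30}

gaps = representation_gaps(test_groups, population_share)
```

In this example patients aged 65 and over make up 30% of the population but only 10% of the test set, so accuracy figures for that group would rest on too few cases to be trusted.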

What specific HIPAA requirements apply when AI vendors process patient data?

AI vendors that handle protected health information must sign Business Associate Agreements that establish specific data protection obligations. These agreements require vendors to implement appropriate technical safeguards including data encryption, access controls, and audit logging capabilities.

Vendors must comply with the minimum necessary standard, accessing only the patient information required for their AI system’s intended function. They must also follow breach notification requirements, reporting any breach of unsecured PHI to the healthcare organization without unreasonable delay and no later than 60 days after discovering it. Organizations retain ultimate responsibility for HIPAA compliance even when using third-party AI vendors.

How long does it typically take to establish AI ethics governance in a healthcare organization?

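Most healthcare organizations require six to twelve months to establish comprehensive AI ethics frameworks. Run back to back, the phases described here add up to eleven or twelve months, so shorter timelines imply overlapping or compressing them. The first phase is about two months of stakeholder engagement and committee formation, followed by two months of policy development and risk assessment activities.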

Staff training and system implementation typically take four months, encompassing education about AI ethics principles and installation of monitoring systems. The final phase involves three to four months of pilot testing and refinement based on practical experience with AI governance processes.

What happens when an AI system makes a medical error that harms a patient?

Healthcare organizations activate incident response protocols that include immediate patient safety measures, thorough investigation of the AI system’s role in the error, and reporting to relevant regulatory authorities when required. The investigation examines whether the error resulted from AI system malfunction, inappropriate use by healthcare providers, or inadequate oversight processes.

Legal responsibility can involve multiple parties, including the healthcare provider who made the final clinical decision, the healthcare organization that deployed the AI system, and potentially the AI vendor if system defects contributed to the error. Malpractice claims are generally assessed against the existing standard of care, which continues to apply when a clinician relies on AI assistance.

Which types of healthcare AI applications require the most extensive ethical oversight?

High-risk AI applications that directly influence life-or-death medical decisions require the most comprehensive ethical oversight. These include AI systems that diagnose serious conditions like cancer, recommend surgical procedures, or determine medication dosages for critical care patients.

AI systems that affect vulnerable patient populations or address conditions with known health disparities also require enhanced oversight. Examples include mental health diagnostic tools, pediatric care applications, and AI systems used in emergency departments where rapid decisions are necessary. The level of oversight scales with the potential for patient harm and the difficulty of correcting AI errors.