Artificial intelligence in software development refers to using machine learning algorithms and automated tools to assist programmers in writing, testing, and maintaining computer applications.

Software development teams increasingly rely on AI tools to accelerate coding tasks and improve application quality. These intelligent systems can generate code from natural language descriptions, automatically detect bugs, and suggest optimizations throughout the development process. According to IBM’s analysis, AI-powered tools help developers write code faster while reducing human error through intelligent suggestions and automated completion features.

The technology changes traditional programming workflows by introducing automated assistance at every stage. Development teams can use AI for requirements analysis, code generation, testing, and deployment monitoring. NIST has extended its Secure Software Development Framework (SSDF) with a community profile for generative AI (SP 800-218A), recognizing the growing importance of these tools in modern development practices.

Organizations adopting AI development tools report significant productivity gains but also face new challenges around governance and security. NIST emphasizes balancing these benefits with proper risk management to ensure reliable and secure software delivery.

Source: AIM Research

What Is AI in Software Development

AI in software development uses computer systems that perform tasks typically requiring human intelligence to create, test, and maintain applications. These systems analyze patterns in existing code, model the syntax and conventions of programming languages, and generate solutions based on patterns learned from millions of software projects.

Machine learning algorithms power many AI development tools by recognizing patterns in existing codebases and learning from developer behaviors. Natural language processing enables AI systems to understand human language and convert written requirements into technical specifications. Generative AI creates new code, documentation, and test cases by combining learned patterns with specific project requirements.

How AI Transforms Development Workflows

AI automates repetitive coding tasks that traditionally consume significant developer time. Code completion tools predict and generate entire functions from natural language descriptions, while automated documentation systems update project information without manual intervention.

Intelligent code review systems scan code for bugs, security vulnerabilities, and violations of coding standards automatically. The technology identifies subtle problems that human reviewers might miss, like memory leaks or inefficient database queries. Some AI systems can automatically fix simple issues and suggest improvements for complex problems.

Testing automation represents another major transformation area. AI analyzes application logic and automatically generates test cases that cover edge cases, error conditions, and normal usage scenarios. The technology creates unit tests, integration tests, and user interface tests without manual intervention.
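The sketch below shows, under stated assumptions, the shape of test suite such a tool might generate for a small function, with tests grouped into normal usage, edge cases, and error conditions. `parse_percentage` is a hypothetical function invented for this example, and the tests were written by hand in the style of Python's standard `unittest` module rather than produced by a specific product.

```python
import unittest


def parse_percentage(text: str) -> float:
    """Hypothetical function under test: converts '45%' to 0.45."""
    cleaned = text.strip()
    if not cleaned.endswith("%"):
        raise ValueError(f"missing '%' in {text!r}")
    value = float(cleaned[:-1])  # raises ValueError for non-numeric input
    if not 0 <= value <= 100:
        raise ValueError(f"out of range: {value}")
    return value / 100


class ParsePercentageTests(unittest.TestCase):
    # Normal usage
    def test_typical_value(self):
        self.assertAlmostEqual(parse_percentage("45%"), 0.45)

    # Edge cases: range boundaries and surrounding whitespace
    def test_boundaries(self):
        self.assertEqual(parse_percentage("0%"), 0.0)
        self.assertEqual(parse_percentage("100%"), 1.0)

    def test_whitespace(self):
        self.assertAlmostEqual(parse_percentage("  12.5% "), 0.125)

    # Error conditions
    def test_missing_percent_sign(self):
        with self.assertRaises(ValueError):
            parse_percentage("45")

    def test_out_of_range(self):
        with self.assertRaises(ValueError):
            parse_percentage("150%")

    def test_not_a_number(self):
        with self.assertRaises(ValueError):
            parse_percentage("abc%")
```

The tests can be run with `python -m unittest <module>`. Generated suites still need human review to confirm that each assertion reflects intended behavior rather than whatever the code currently does.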

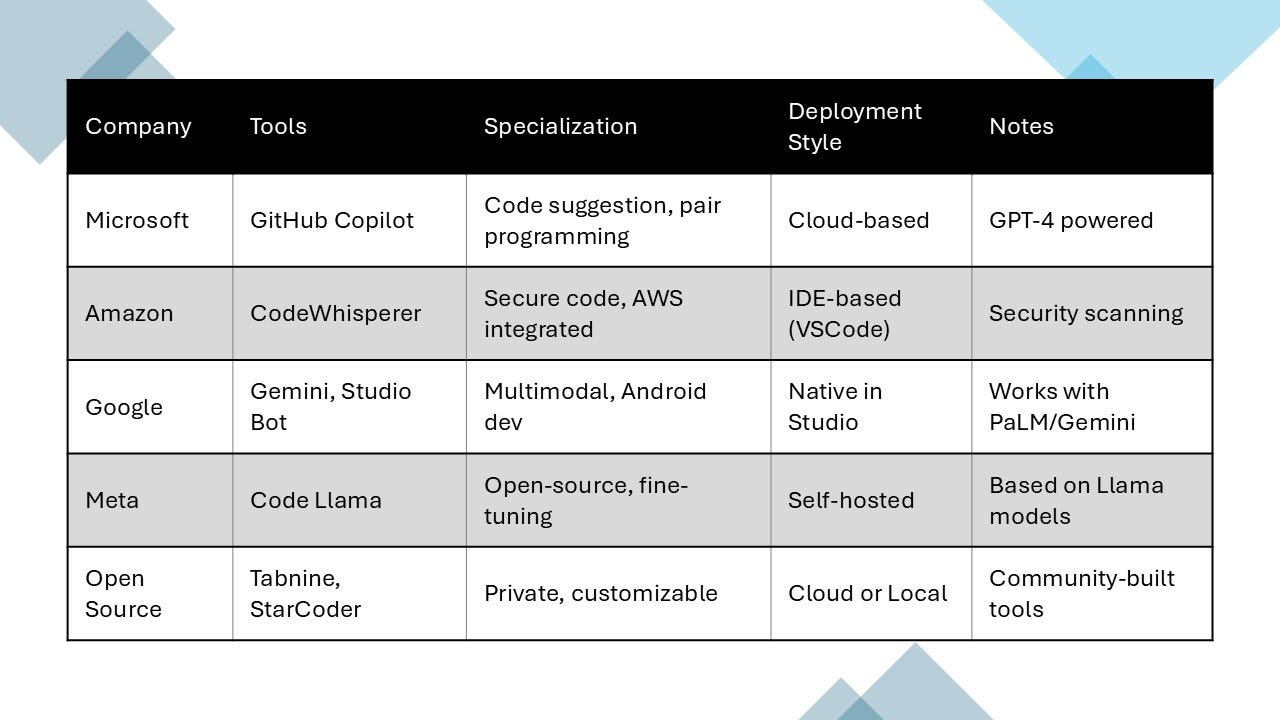

Key AI Development Tools and Platforms

Development teams have access to various AI-powered tools that enhance productivity across the software development lifecycle. These tools range from code completion assistants to comprehensive testing platforms.

Code Generation and Completion Tools

GitHub Copilot operates as an AI pair programmer that suggests code as developers type. The platform integrates with popular editors and supports dozens of programming languages with strong performance in Python, JavaScript, TypeScript, and Go.

Tabnine focuses on code completion using machine learning models trained on publicly available code. The platform offers both cloud-based and on-premises deployment options for organizations with strict data security requirements.

Amazon CodeWhisperer, whose capabilities are now part of Amazon Q Developer, provides code recommendations based on developer comments and existing code patterns. The platform integrates with AWS services and includes built-in security scanning that identifies potential vulnerabilities in generated code.

Source: Medium

AI-Powered Testing Platforms

Testim uses machine learning to create automated tests that adapt to application changes. The platform records user interactions and generates test scripts that remain functional despite UI modifications through smart locators that automatically update when page elements change.
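Vendors do not publish the internals of their smart locators, so the following is only a hypothetical illustration of the general self-healing idea: keep several ways to identify an element and, when the preferred one stops matching, fall back to another and promote it. The page is modelled as plain Python dicts rather than a real browser DOM, and `SelfHealingLocator` is an invented name.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SelfHealingLocator:
    """Tries attribute-based strategies in order and promotes whichever one
    last found the element. A hypothetical sketch, not any vendor's algorithm."""

    strategies: list[tuple[str, str]]  # (attribute name, expected value)
    healed_from: list[tuple[str, str]] = field(default_factory=list)

    def find(self, page: list[dict[str, str]]) -> dict[str, str] | None:
        for index, (attr, value) in enumerate(self.strategies):
            matches = [el for el in page if el.get(attr) == value]
            if len(matches) == 1:  # accept only an unambiguous match
                if index > 0:
                    # Record the previously preferred strategy, then move the
                    # working one to the front so it is tried first next time.
                    self.healed_from.append(self.strategies[0])
                    self.strategies.insert(0, self.strategies.pop(index))
                return matches[0]
        return None


# The element's id changed between releases; its data-test attribute did not.
old_page = [{"id": "submit-btn", "data-test": "submit", "text": "Submit"}]
new_page = [{"id": "btn-7f3a", "data-test": "submit", "text": "Submit"}]

locator = SelfHealingLocator(
    [("id", "submit-btn"), ("data-test", "submit"), ("text", "Submit")]
)
assert locator.find(old_page) is old_page[0]
assert locator.find(new_page) is new_page[0]
assert locator.strategies[0] == ("data-test", "submit")
```

Requiring exactly one match is a deliberate choice in this sketch: silently picking the first of several candidates is how a "healed" test starts clicking the wrong element.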

Applitools provides visual testing capabilities using AI to detect visual differences across browsers, devices, and screen resolutions. The platform captures screenshots during test execution and compares them against baseline images to identify visual regressions.
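Baseline comparison can be sketched, in its simplest form, as a per-pixel diff with a noise tolerance. This naive approach is swapped in here purely for illustration; AI-based visual testing tools aim to go beyond raw pixel differences by ignoring rendering noise a person would not notice. Images are assumed to be equally sized grayscale grids (values 0-255), and both threshold values are arbitrary assumptions.

```python
from __future__ import annotations


def diff_ratio(
    baseline: list[list[int]], candidate: list[list[int]], tolerance: int = 10
) -> float:
    """Fraction of pixels whose grayscale values differ by more than `tolerance`."""
    if len(baseline) != len(candidate) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(baseline, candidate)
    ):
        raise ValueError("images must have identical dimensions")
    total = changed = 0
    for row_a, row_b in zip(baseline, candidate):
        for a, b in zip(row_a, row_b):
            total += 1
            if abs(a - b) > tolerance:
                changed += 1
    return changed / total if total else 0.0


def is_visual_regression(
    baseline: list[list[int]],
    candidate: list[list[int]],
    tolerance: int = 10,
    max_changed: float = 0.01,
) -> bool:
    # `tolerance` absorbs small per-pixel noise (anti-aliasing, compression);
    # `max_changed` caps the share of the screen allowed to differ.
    return diff_ratio(baseline, candidate, tolerance) > max_changed
```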

Functionize combines natural language processing with machine learning to generate test cases from requirements documentation. The platform automatically maintains tests when applications change and provides intelligent test execution focused on high-risk areas.
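One simple way to focus execution on high-risk areas is to rank tests by whether they cover recently changed files and how often they have failed lately. The sketch below assumes per-test coverage and failure history are already available; the `CaseRecord` structure and the 0.7/0.3 weights are hypothetical, and commercial tools may use learned models instead of a fixed formula.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CaseRecord:
    name: str
    covered_files: set[str]  # files this test exercises (from coverage data)
    recent_runs: int
    recent_failures: int


def risk_score(test: CaseRecord, changed_files: set[str],
               w_change: float = 0.7, w_fail: float = 0.3) -> float:
    """Weighted mix of 'touches changed code' (0 or 1) and recent failure rate."""
    touches_change = 1.0 if test.covered_files & changed_files else 0.0
    failure_rate = test.recent_failures / test.recent_runs if test.recent_runs else 0.0
    return w_change * touches_change + w_fail * failure_rate


def prioritize(tests: list[CaseRecord], changed_files: set[str]) -> list[CaseRecord]:
    # Highest risk first; the name is a tiebreaker so ordering is deterministic.
    return sorted(tests, key=lambda t: (-risk_score(t, changed_files), t.name))
```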

Code Review and Security Systems

SonarQube applies rule-based static analysis to detect code smells, security vulnerabilities, and maintainability issues across multiple programming languages, and recent versions add AI-assisted features such as suggested fixes. The platform provides detailed remediation guidance and tracks code quality metrics over time.
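Many code-smell rules are simple structural checks. As a minimal, hypothetical sketch (not SonarQube's actual rule set), the snippet below uses Python's standard `ast` module to flag functions with too many parameters or too many lines; both thresholds are assumptions.

```python
from __future__ import annotations

import ast


def find_smells(source: str, max_params: int = 5,
                max_lines: int = 40) -> list[tuple[str, int, str]]:
    """Flag functions that exceed parameter-count or length thresholds."""
    smells = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            args = node.args
            # Count named parameters; *args and **kwargs are not counted here.
            n_params = len(args.posonlyargs) + len(args.args) + len(args.kwonlyargs)
            if n_params > max_params:
                smells.append((node.name, node.lineno, f"{n_params} parameters"))
            # end_lineno is available on AST nodes from Python 3.8 onward.
            length = node.end_lineno - node.lineno + 1
            if length > max_lines:
                smells.append((node.name, node.lineno, f"{length} lines long"))
    return smells
```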

Veracode offers static application security testing with AI-powered vulnerability detection that reduces false positives. The platform integrates with CI/CD pipelines to provide continuous security scanning throughout development.

Snyk focuses on open source security by scanning dependencies and container images for known vulnerabilities. The platform uses machine learning to prioritize security issues and provides automated fix suggestions through pull requests.
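At its core, dependency scanning compares the versions a project pins against a database of advisories. The sketch below assumes a tiny in-memory advisory table with made-up identifiers (`EXAMPLE-...`), only understands exact `name==version` pins, and uses a naive numeric version parser; real scanners rely on curated vulnerability databases and full version semantics such as PEP 440 (for example via `packaging.version`).

```python
from __future__ import annotations

# Hypothetical advisory table: package -> [(advisory ID, first fixed version)].
ADVISORIES = {
    "examplelib": [("EXAMPLE-2024-001", "2.4.1")],
    "otherpkg": [("EXAMPLE-2023-017", "1.0.3")],
}


def parse_version(text: str) -> tuple[int, ...]:
    """Naive parser for purely numeric versions such as '2.4.1'."""
    return tuple(int(part) for part in text.split("."))


def scan(requirements: str) -> list[tuple[str, str, str, str]]:
    """Return (package, pinned version, advisory ID, fixed version) findings."""
    findings = []
    for raw_line in requirements.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" not in line:
            continue  # this sketch only understands exact pins
        name, version = (part.strip() for part in line.split("==", 1))
        for advisory_id, fixed in ADVISORIES.get(name.lower(), []):
            if parse_version(version) < parse_version(fixed):
                findings.append((name, version, advisory_id, fixed))
    return findings
```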

Benefits of AI Development Tools

AI-powered development tools can deliver measurable improvements for software teams and organizations. Research published by major technology providers reports that AI integration reshapes traditional workflows through automation and quality checks.

Increased Developer Productivity

AI automates routine coding tasks, allowing developers to spend more of their time on complex problem-solving.

- Faster Code Generation: Some reports claim developers produce 40-60 percent more code per day with AI assistance

- Reduced Manual Work: Automated boilerplate generation cuts repetitive typing

- Improved Focus Time: Less time on routine tasks leaves more attention for architectural decisions

Enhanced Code Quality

AI systems analyze code patterns to detect bugs and security vulnerabilities before production deployment, including issues that traditional analysis might miss. The technology also enforces coding standards consistently across development teams.

Pattern-recognition algorithms compare code against established best practices and historical defect data. Automated security testing monitors code for threats and suggests mitigations in real time.

Faster Time-to-Market

AI assistance can shorten project timelines across the development lifecycle, from planning through deployment. Automated test case generation builds testing suites from user requirements, while intelligent optimization tools streamline deployment processes.

Development teams complete projects faster when AI handles routine validation and optimization tasks. Progressive delivery strategies enabled by AI allow organizations to deploy updates more frequently with reduced risk.
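Progressive delivery typically exposes a change to a small slice of traffic (a canary) and compares its health with the current version before widening the rollout. The decision step can be sketched as follows, assuming error counts are already collected for both groups; the thresholds are hypothetical, and an AI-driven system might replace the fixed rule with anomaly detection across many metrics.

```python
from dataclasses import dataclass


@dataclass
class Window:
    """Request and error counts observed for one version over a time window."""
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_decision(baseline: Window, canary: Window,
                    max_increase: float = 0.005, min_requests: int = 500) -> str:
    """Return 'wait', 'rollback', or 'promote' for the canary version."""
    if canary.requests < min_requests:
        return "wait"  # not enough canary traffic to judge yet
    if canary.error_rate > baseline.error_rate + max_increase:
        return "rollback"  # canary is measurably worse than the baseline
    return "promote"
```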

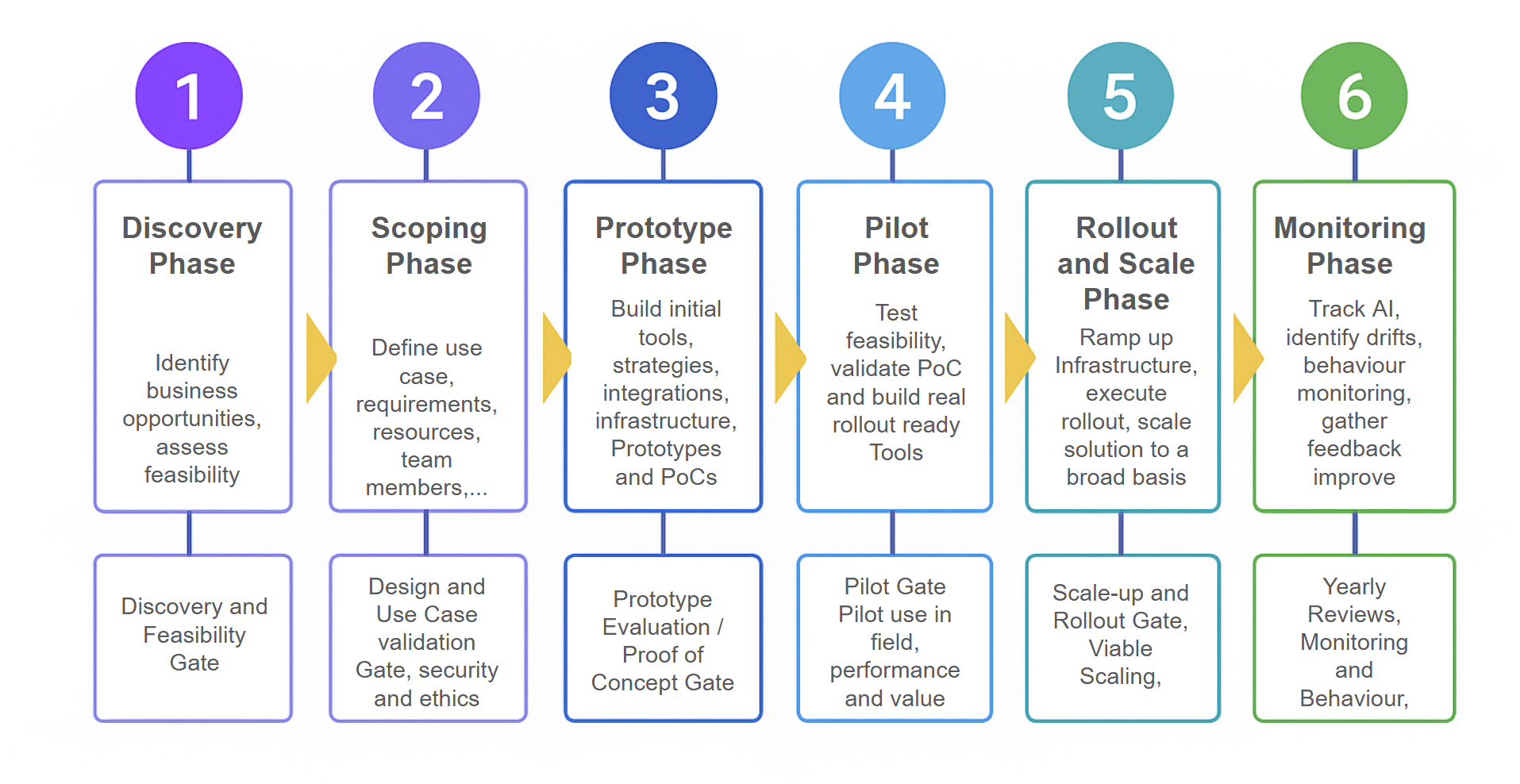

Implementation Strategy for Enterprise Teams

Organizations implementing AI development tools follow a structured process that reduces risks and increases success rates. Each phase builds on the previous one, creating a foundation for sustainable AI adoption.

Source: David Theil – Medium

Assessment and Planning Phase

The assessment begins with mapping existing development workflows and identifying bottlenecks where AI tools could provide assistance. Development teams document current processes for coding, testing, code review, and deployment to understand automation opportunities.

Organizations examine their development infrastructure to determine compatibility with AI tools. This includes evaluating code editors, version control systems, testing frameworks, and deployment pipelines to identify integration points.

Teams define specific, measurable goals for AI implementation such as reducing code review time by 30 percent or increasing test coverage by 25 percent. These metrics provide benchmarks for measuring success throughout implementation.
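Goals such as "increase test coverage by 25 percent" are easy to misread: a relative increase from 60 percent coverage reaches only 75 percent, while a 25-percentage-point increase would require 85 percent. The small sketch below, using hypothetical baseline numbers, makes the distinction explicit so that targets can be stated unambiguously up front.

```python
def relative_change(before: float, after: float) -> float:
    """Fractional change relative to the baseline, e.g. -0.35 for a 35% drop."""
    return (after - before) / before


def goal_met_relative(before: float, after: float, target: float) -> bool:
    """Relative goal: target=-0.30 means 'reduce by at least 30 percent'."""
    change = relative_change(before, after)
    return change <= target if target < 0 else change >= target


def goal_met_points(before_pct: float, after_pct: float, target_points: float) -> bool:
    """Absolute goal in percentage points, e.g. coverage 60% -> 85% is +25 points."""
    return after_pct - before_pct >= target_points


# Hypothetical baselines and pilot results.
review_hours_before, review_hours_after = 10.0, 6.5
coverage_before, coverage_after = 60.0, 75.0

print(goal_met_relative(review_hours_before, review_hours_after, -0.30))  # True: a 35% reduction
print(goal_met_relative(coverage_before, coverage_after, 0.25))  # True: 75 is 25% above 60
print(goal_met_points(coverage_before, coverage_after, 25))  # False: only +15 points
```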

Tool Evaluation and Selection

Organizations establish evaluation criteria based on development needs and constraints. Common criteria include integration capabilities, security features, cost structure, vendor support, and alignment with existing workflows.
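A common way to apply such criteria is a weighted scoring matrix. In the sketch below the weights, the 1-5 ratings, and the tool names "Tool A" and "Tool B" are all hypothetical placeholders; each organization would set its own weights to reflect its constraints.

```python
from __future__ import annotations

# Hypothetical weights (summing to 100) for the criteria listed above.
WEIGHTS = {
    "integration": 25,
    "security": 30,
    "cost": 15,
    "vendor_support": 10,
    "workflow_fit": 20,
}


def weighted_score(scores: dict[str, int], weights: dict[str, int] = WEIGHTS) -> float:
    """Combine 1-5 ratings per criterion into a single weighted average."""
    if set(scores) != set(weights):
        raise ValueError("provide exactly one score per criterion")
    return sum(weights[c] * scores[c] for c in weights) / sum(weights.values())


def rank(candidates: dict[str, dict[str, int]]) -> list[tuple[str, float]]:
    """Sort tools by weighted score, best first."""
    scored = [(name, round(weighted_score(s), 2)) for name, s in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical ratings gathered during a hands-on evaluation.
candidates = {
    "Tool A": {"integration": 5, "security": 3, "cost": 4, "vendor_support": 4, "workflow_fit": 4},
    "Tool B": {"integration": 3, "security": 5, "cost": 3, "vendor_support": 3, "workflow_fit": 4},
}
```

With these made-up ratings Tool A ranks first (3.95 vs. 3.8), but shifting enough weight toward security would reverse the ordering, which is why agreeing on weights before the evaluation starts matters.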

Teams create a shortlist of AI tools matching technical requirements and conduct hands-on evaluations with small developer groups. These evaluations typically last 2-4 weeks and focus on real development tasks rather than demonstrations.

Technical evaluation examines how AI tools integrate with existing development environments including IDEs, version control systems, and CI/CD pipelines. Teams test data handling, security controls, and performance impacts on development systems.

Pilot Program Execution

Pilot programs typically involve 5-10 developers working on representative projects over 6-12 weeks. Organizations select pilot participants who are open to new technologies but realistic about potential challenges.

Teams establish baseline measurements for key metrics before pilots begin, including development velocity, code quality scores, testing coverage, and developer satisfaction. These baselines enable accurate measurement of AI tool impact.

Regular check-ins address technical issues, gather feedback, and adjust usage patterns based on early experiences. Organizations document both positive outcomes and challenges to inform broader rollout planning.

Full Rollout and Optimization

Full rollout occurs in phases, gradually expanding AI tool access to additional teams and projects. Organizations maintain support resources during rollout to address questions and technical issues as usage scales.
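Phased expansion can be implemented with deterministic bucketing: hash each team's identifier into one of 100 buckets and grant access when the bucket falls below the current rollout percentage. The sketch assumes teams have stable string IDs; the salt value is arbitrary. Because buckets never change, raising the percentage only ever adds teams, so nobody loses access mid-rollout.

```python
import hashlib


def rollout_bucket(team_id: str, salt: str = "ai-assistant-rollout") -> int:
    """Map a team ID to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(f"{salt}:{team_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % 100


def has_access(team_id: str, rollout_percent: int,
               salt: str = "ai-assistant-rollout") -> bool:
    """True when the team's bucket falls inside the current rollout percentage."""
    return rollout_bucket(team_id, salt) < rollout_percent
```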

Teams implement monitoring systems to track AI tool performance, usage patterns, and impact on development metrics. Regular analysis identifies optimization opportunities and helps refine usage guidelines.
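A frequently tracked usage signal for coding assistants is the suggestion acceptance rate: accepted suggestions divided by suggestions shown. The event format below is a hypothetical simplification of whatever telemetry a given tool exports. Acceptance rate measures adoption rather than code quality, so it is best read alongside quality metrics such as defect rates.

```python
from __future__ import annotations

from collections import defaultdict


def acceptance_rates(events: list[tuple[str, str]]) -> dict[str, float]:
    """Per-team acceptance rate from (team, event_kind) pairs.

    Hypothetical event kinds: "shown" when a suggestion is displayed,
    "accepted" when the developer keeps it.
    """
    shown: dict[str, int] = defaultdict(int)
    accepted: dict[str, int] = defaultdict(int)
    for team, kind in events:
        if kind == "shown":
            shown[team] += 1
        elif kind == "accepted":
            accepted[team] += 1
    # Teams with no displayed suggestions are omitted to avoid dividing by zero.
    return {team: accepted[team] / count for team, count in shown.items() if count}
```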

Organizations establish feedback loops for continuous improvement, collecting input from developers about tool effectiveness and desired enhancements. This feedback informs decisions about configuration changes or tool selection adjustments.

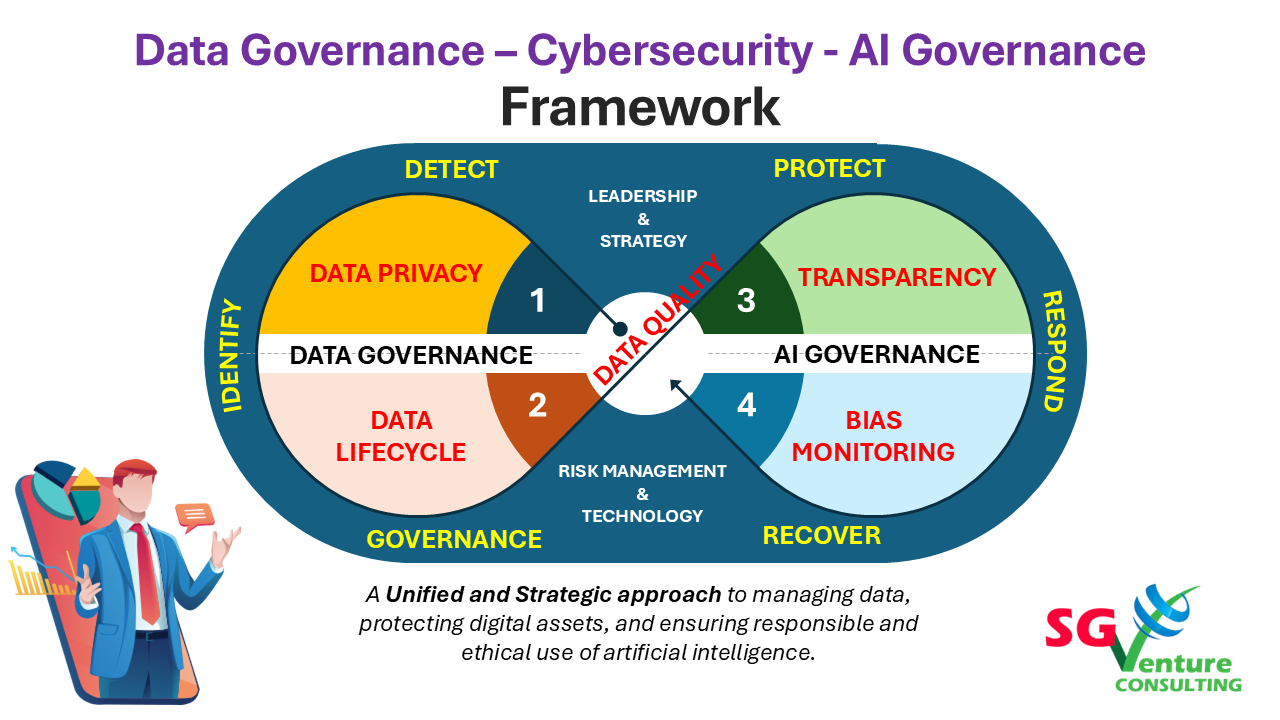

Security and Governance Framework

Enterprise organizations face complex challenges when implementing AI in development environments. A comprehensive security and governance framework addresses data protection, intellectual property safeguards, and compliance requirements.

Source: Medium

Data Privacy and IP Protection

Proprietary code protection requires specific policies for AI tool usage in development environments. Organizations establish clear boundaries around which code repositories and projects AI tools can access during development activities.
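Such boundaries can be expressed as an allow/deny list of repository patterns. The sketch below assumes repositories are named `org/repo` and uses the standard `fnmatch` module; the policy contents are hypothetical. Deny rules take precedence and anything not explicitly allowed is excluded, which is a conservative default for proprietary code.

```python
from __future__ import annotations

from fnmatch import fnmatchcase

# Hypothetical policy; patterns use shell-style wildcards.
POLICY = {
    "allow": ["org/web-*", "org/internal-tools"],
    "deny": ["org/web-payments", "*/secrets-*"],
}


def ai_access_allowed(repo: str, policy: dict[str, list[str]] = POLICY) -> bool:
    """Deny rules win; repositories matching no allow rule are excluded by default."""
    if any(fnmatchcase(repo, pattern) for pattern in policy["deny"]):
        return False
    return any(fnmatchcase(repo, pattern) for pattern in policy["allow"])
```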

Data handling policies define how AI systems process, store, and transmit development-related information. These policies specify data retention periods, access permissions, and deletion procedures for information processed by AI tools.
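A retention rule can be enforced by a periodic job that removes records older than the configured window. The sketch assumes each stored record (for example, a logged prompt or completion) carries a timezone-aware `created_at` timestamp; the record format and the 30-day window used in testing are assumptions, not requirements from any standard.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone


def purge_expired(records: list[dict], retention_days: int,
                  now: datetime | None = None) -> tuple[list[dict], list[dict]]:
    """Split records into (kept, purged) using a retention window in days.

    A real job would then delete the purged records from storage and log the deletion.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    kept = [r for r in records if r["created_at"] >= cutoff]
    purged = [r for r in records if r["created_at"] < cutoff]
    return kept, purged
```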

Code review processes verify that AI-generated code doesn’t include copyrighted material or proprietary algorithms from other sources. Development teams implement scanning tools that detect potential intellectual property violations before code reaches production.
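One basic technique behind such scanners is fingerprinting: break code into overlapping token sequences (shingles) and measure how much of a generated snippet also appears in a corpus of known third-party code. The sketch below is a naive, in-memory version of that idea with a hypothetical snippet corpus and threshold; production tools index far larger corpora and may also check license metadata.

```python
from __future__ import annotations

import re


def shingles(code: str, k: int = 5) -> set[tuple[str, ...]]:
    """Overlapping k-token windows; tokenizing makes the check whitespace-insensitive."""
    tokens = re.findall(r"[A-Za-z_]\w*|\d+|\S", code)
    return {tuple(tokens[i:i + k]) for i in range(len(tokens) - k + 1)}


def overlap_ratio(candidate: str, reference: str, k: int = 5) -> float:
    """Share of the candidate's shingles that also occur in the reference."""
    cand = shingles(candidate, k)
    if not cand:
        return 0.0
    return len(cand & shingles(reference, k)) / len(cand)


def flag_for_review(candidate: str, known_snippets: dict[str, str],
                    threshold: float = 0.5, k: int = 5) -> list[str]:
    """Names of known snippets that the candidate overlaps with heavily."""
    return [name for name, ref in known_snippets.items()
            if overlap_ratio(candidate, ref, k) >= threshold]
```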

Governance Policies and Risk Management

AI usage policies establish clear guidelines for how development teams can incorporate AI tools into workflows. Policies specify approved AI tools, acceptable use cases, and prohibited activities to maintain security and compliance.

Risk assessment frameworks identify potential risks associated with AI implementation including technical failures, security breaches, and compliance violations. Assessments consider likelihood and impact of various scenarios to prioritize mitigation efforts. Responsible AI practices help organizations balance innovation with risk management.
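The likelihood-and-impact assessment described above is often captured as a risk matrix. This sketch assumes a three-level scale for each dimension and multiplies them into a 1-9 score; the level names, rating thresholds, and example risks are hypothetical.

```python
from __future__ import annotations

# Hypothetical three-level scale shared by likelihood and impact.
LEVELS = {"low": 1, "medium": 2, "high": 3}


def risk_rating(likelihood: str, impact: str) -> tuple[int, str]:
    """Score = likelihood x impact (1-9), then bucket the score into a rating."""
    score = LEVELS[likelihood] * LEVELS[impact]
    if score >= 6:
        return score, "high"
    if score >= 3:
        return score, "medium"
    return score, "low"


def prioritize_risks(risks: list[tuple[str, str, str]]) -> list[tuple[str, int, str]]:
    """Sort (name, likelihood, impact) triples by score, highest first."""
    rated = [(name, *risk_rating(likelihood, impact)) for name, likelihood, impact in risks]
    return sorted(rated, key=lambda item: item[1], reverse=True)
```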

Accountability measures assign specific responsibilities for AI governance, security monitoring, and compliance oversight. Organizations designate individuals or teams responsible for policy enforcement, incident response, and ongoing risk management.

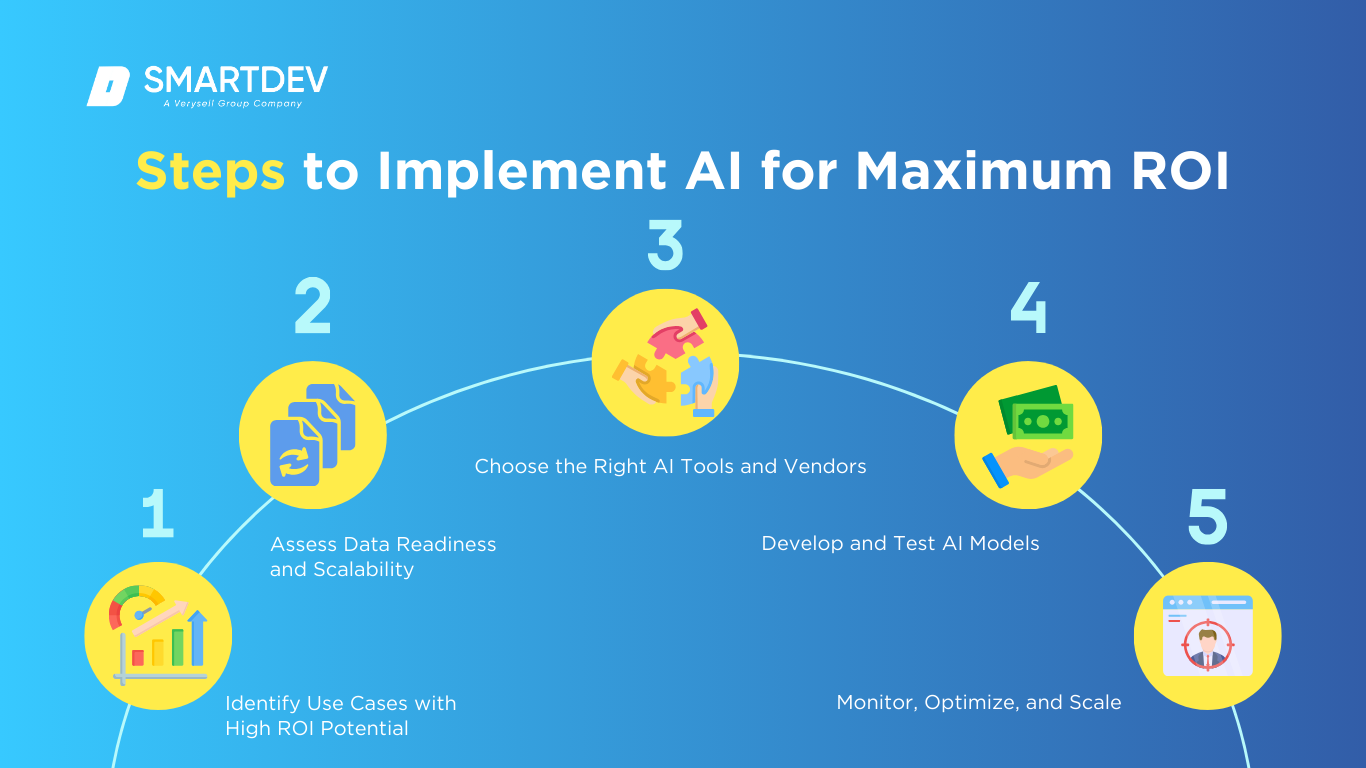

Measuring ROI and Business Impact

Organizations implementing AI development tools require systematic approaches to measure return on investment and business impact. Accurate measurement demonstrates value to stakeholders while identifying optimization areas.

Developer Productivity Metrics

Developer productivity measurement focuses on quantifiable outputs and efficiency indicators that demonstrate AI tool effectiveness. These metrics provide concrete data about individual and team performance improvements.

Code output metrics include lines of code written per day, functions completed per week, and features delivered per sprint cycle. Development velocity indicators track story points completed per sprint and average time to complete development tasks.

Developer satisfaction is measured through survey scores on tool usability, self-reported productivity improvements, and tool adoption rates across teams. Some reports claim AI development tools increase individual developer output by 40-60 percent while reducing time spent on routine tasks.

Code Quality and Security Improvements

Code quality measurement evaluates AI tool impact on software reliability, maintainability, and security. These metrics demonstrate long-term value through reduced technical debt and improved system stability.

Bug reduction metrics track defects per thousand lines of code, production bug rates before and after implementation, and time from bug introduction to detection. Security enhancement indicators measure vulnerabilities detected during development and compliance violations caught before production.
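Two of these metrics reduce to simple arithmetic. Defect density normalizes bug counts by code size (defects per KLOC = defects / (lines of code / 1000)), and detection lag is the time between when a bug was introduced and when it was found. The sketch assumes introduction dates are known, for instance from the commit that introduced each bug; the sample numbers are hypothetical.

```python
from __future__ import annotations

from datetime import date
from statistics import median


def defects_per_kloc(defects: int, lines_of_code: int) -> float:
    """Defect density: defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


def median_detection_days(bugs: list[tuple[date, date]]) -> float:
    """Median days between each bug's introduction and its detection."""
    return median((found - introduced).days for introduced, found in bugs)
```

For example, 18 defects in 45,000 lines gives 0.4 defects per KLOC. Comparing density rather than raw counts keeps before-and-after numbers meaningful when the codebase grows.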

Organizations have reported 50-70 percent reductions in security vulnerabilities and 30-50 percent decreases in production bugs after implementing AI development tools, along with 25-40 percent improvements in code maintainability scores within six months. These figures come from industry reports and vary by team, codebase, and measurement method.

Cost Reduction Analysis

Cost measurement calculates direct financial benefits from AI tool implementation across personnel, infrastructure, and operational expenses. These calculations provide clear financial justification for AI investments.

Labor cost savings include reduced hours for manual coding tasks, decreased debugging time, and lower code review resource requirements. Infrastructure savings come from optimized code reducing cloud computing costs and decreased third-party tool licensing needs.

Source: SmartDev

Some financial analyses report average cost reductions of 20-35 percent in development operations within the first year, with labor cost savings ranging from $50,000 to $150,000 per developer annually depending on salary levels and tool effectiveness.
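The underlying calculation is straightforward once the inputs are explicit. The sketch below estimates first-year ROI and payback time from hours saved per developer; every input in the example (team size, hours saved, hourly rate, license price, one-time costs) is a hypothetical placeholder, and the hours-saved figure in particular should come from measured pilot data rather than published averages.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RoiInputs:
    developers: int
    hours_saved_per_dev_per_week: float
    working_weeks_per_year: int
    loaded_hourly_rate: float  # salary plus overhead, per hour
    license_per_dev_per_month: float
    one_time_costs: float  # training, integration, admin setup


def annual_benefit(x: RoiInputs) -> float:
    """Value of developer time saved per year."""
    return (x.developers * x.hours_saved_per_dev_per_week
            * x.working_weeks_per_year * x.loaded_hourly_rate)


def annual_license_cost(x: RoiInputs) -> float:
    return x.developers * x.license_per_dev_per_month * 12


def first_year_roi(x: RoiInputs) -> float:
    """(benefit - cost) / cost, counting one-time costs in year one."""
    cost = annual_license_cost(x) + x.one_time_costs
    return (annual_benefit(x) - cost) / cost


def payback_months(x: RoiInputs) -> float | None:
    """Months until net monthly savings cover the one-time costs."""
    monthly_net = (annual_benefit(x) - annual_license_cost(x)) / 12
    if monthly_net <= 0:
        return None  # never pays back under these assumptions
    return x.one_time_costs / monthly_net


# Hypothetical example inputs.
inputs = RoiInputs(developers=50, hours_saved_per_dev_per_week=1.0,
                   working_weeks_per_year=46, loaded_hourly_rate=75.0,
                   license_per_dev_per_month=20.0, one_time_costs=30_000.0)
```

With these example inputs the estimated first-year ROI is roughly 3.1 and payback takes a little over two months. Halving the hours saved changes both results substantially, so the estimate is only as good as its inputs.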

Frequently Asked Questions About AI in Software Development

Will AI coding assistants replace software developers completely?

AI enhances developer capabilities rather than replacing them, automating routine tasks while humans focus on creative problem-solving and strategic thinking. AI tools excel at generating boilerplate code and suggesting completions, but software development requires complex decision-making and architectural planning that AI cannot fully replicate.

Human developers remain essential for designing system architecture, making strategic technical decisions, and solving unique problems requiring creativity and context. AI functions as an intelligent assistant that accelerates coding tasks while developers handle conceptual and strategic aspects.

How much do AI development tools typically cost for enterprise teams?

Costs vary based on team size and tool selection, ranging from free open-source options to enterprise subscriptions costing hundreds to thousands of dollars monthly. Individual developer subscriptions for commercial AI coding assistants typically cost $10-30 per month per user.

Enterprise costs depend on team size and feature requirements. Many organizations report seeing a return on investment through reduced development time and improved code quality within 3-6 months of implementation.

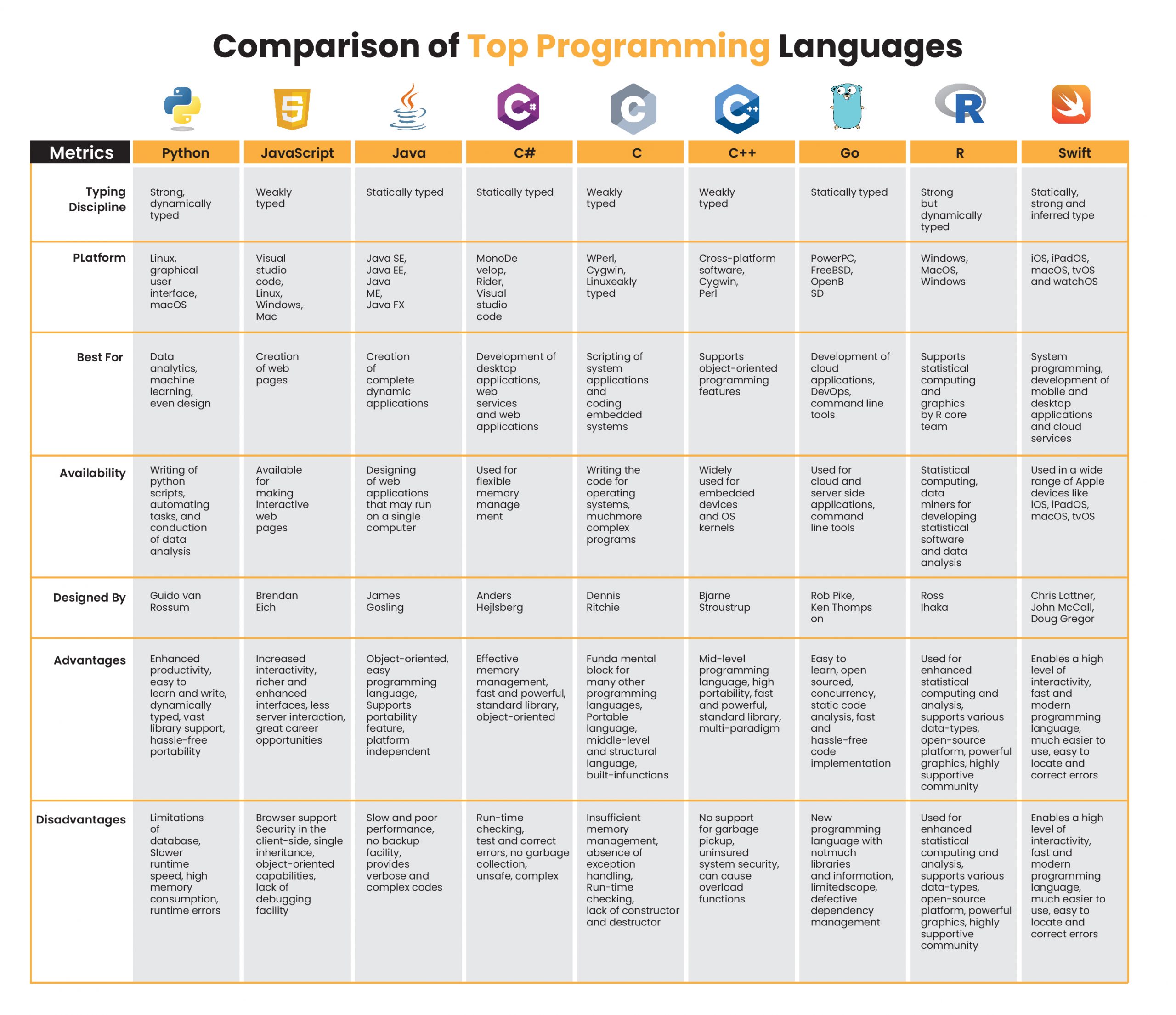

Which programming languages work best with current AI development tools?

Most AI development tools support popular languages like Python, JavaScript, Java, and C++, with expanding support for newer languages. Python is often cited as receiving the strongest support, reflecting its prevalence in public code as well as in AI research and data science.

Source: ISHIR

JavaScript and TypeScript benefit from extensive AI assistance across frontend and backend development. Java and C# have robust AI tool integration through major development environments, while newer languages like Rust and Go are gaining support as adoption increases.

How can organizations protect proprietary code when using AI platforms?

Organizations can choose tools with strong privacy policies, use on-premises solutions when necessary, and implement code review processes to prevent accidental exposure. Some platforms process code locally without sending it to external servers, while others may use submitted code for model training.

On-premises AI solutions keep code within organizational boundaries but require additional infrastructure investment. Code review processes help identify potential security issues before they reach production systems, and regular audits of AI tool usage help maintain security standards.

Do AI development tools integrate with existing legacy development systems?

Modern AI tools offer APIs and plugins for integration with legacy systems, though some customization may be required for seamless workflow adoption. Popular development environments like Visual Studio and IntelliJ support AI plugins that work alongside existing workflows.

Legacy system integration may require middleware or custom adapters to connect AI tools with older development environments. Organizations often implement gradual integration strategies, starting with newer projects before expanding to legacy systems. AI consulting services can help organizations navigate complex integration challenges and develop effective implementation strategies.