AI safety involves protecting artificial intelligence systems from technical failures, security breaches, and harmful behaviors that could damage organizations or people. Modern AI systems process sensitive data, make automated decisions, and control critical business operations. These capabilities create new types of risks that traditional cybersecurity measures cannot fully address.

Organizations face mounting pressure to implement AI systems while managing unprecedented safety challenges. Data poisoning attacks can corrupt AI training processes, while adversarial examples trick AI systems into making dangerous mistakes. Privacy breaches through model inversion attacks expose confidential information used in AI training datasets.

The rapid adoption of AI across healthcare, finance, and other critical sectors has amplified potential consequences of AI failures. A compromised medical AI system could provide incorrect diagnoses, while manipulated financial AI could approve fraudulent transactions. The ECRI Institute identified AI applications as the top technology hazard for healthcare organizations in 2025.

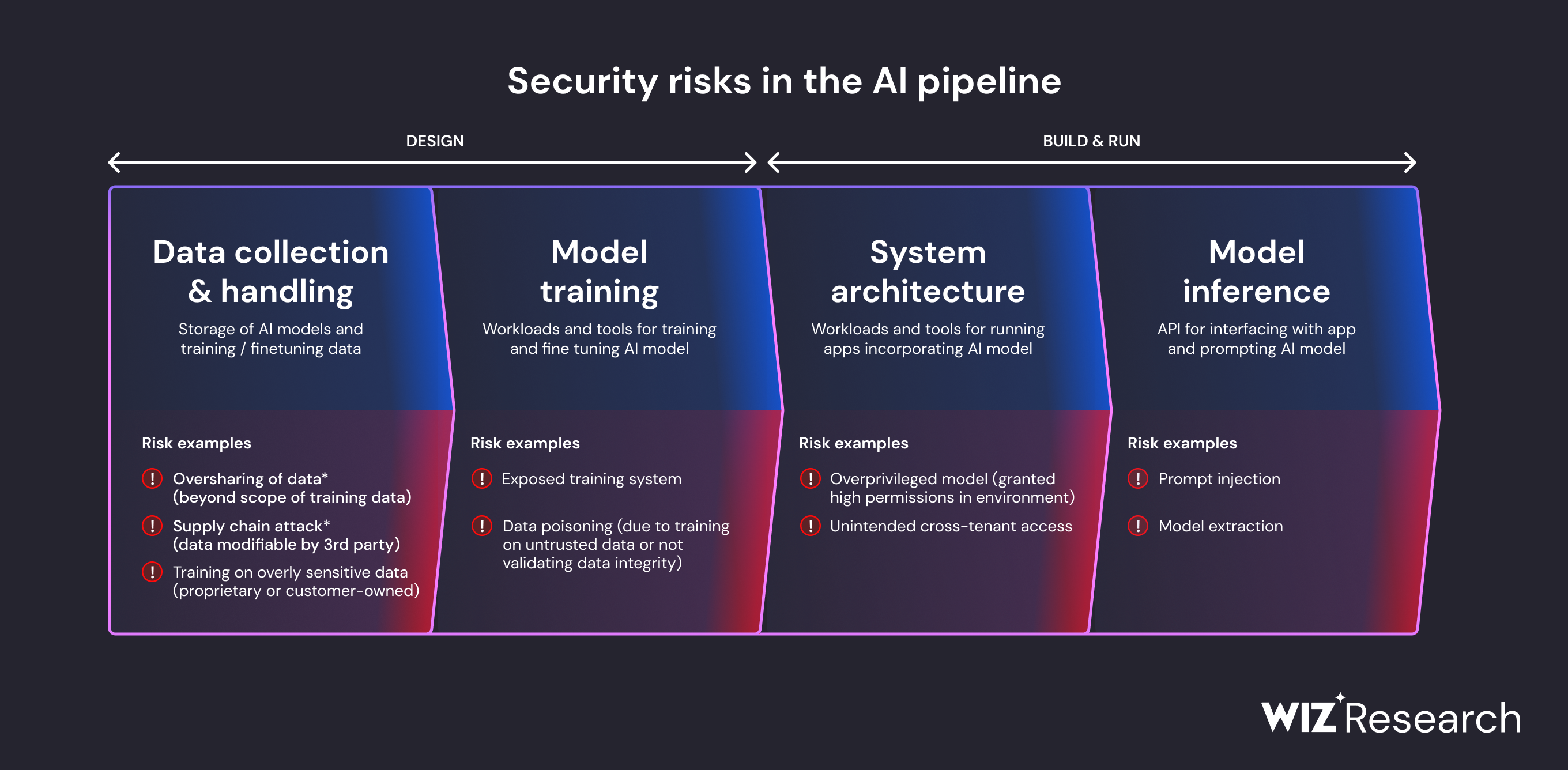

Major categories of AI safety risks across the pipeline. Source: Wiz

Regulators and standards bodies increasingly expect comprehensive AI risk management. The National Institute of Standards and Technology released the voluntary AI Risk Management Framework in January 2023 to help organizations identify and mitigate AI-specific threats. Companies lacking proper AI governance face significantly higher security breach costs and regulatory penalties than organizations with established AI safety programs.

What Is AI Safety for Business

AI safety refers to preventing AI accidents, misuse, and harmful consequences while ensuring artificial intelligence technologies benefit humanity rather than cause damage. For enterprise organizations, AI safety encompasses the practices, frameworks, and controls that minimize risks while maintaining the operational benefits of AI systems.

Definition and Core Principles

AI safety represents a comprehensive approach to managing artificial intelligence risks throughout the entire system lifecycle, from initial development through deployment and ongoing operations. The field combines technical safeguards, governance frameworks, and operational procedures to address the unique challenges posed by systems that can make autonomous decisions and exhibit behaviors that may not become apparent until after deployment.

The core principles of responsible AI development include transparency, fairness, accountability, and robustness. Transparency means AI models operate in ways that can be understood and explained to relevant stakeholders. Fairness addresses bias mitigation and promotes equitable outcomes across diverse populations, while accountability establishes clear roles and responsibilities for AI-related decisions and outcomes.

Robustness focuses on building secure, reliable systems that can withstand potential failures or adversarial attacks. These principles guide organizations in developing AI systems that align with human values and organizational objectives while maintaining operational effectiveness and competitive advantage.

Why AI Safety Matters for Organizations

AI safety failures can result in severe business consequences that extend far beyond immediate technical problems. Organizations lacking proper AI governance policies face significantly higher breach costs, with ungoverned AI systems demonstrating increased vulnerability to attacks and more expensive remediation processes when compromised.

Reputation damage from AI safety incidents can persist for months or years following an initial failure. High-profile AI missteps, such as biased hiring algorithms or discriminatory loan approval systems, create lasting negative publicity that affects customer trust, investor confidence, and market position.

Regulatory penalties represent another significant financial risk. The European Union’s AI Act imposes fines of up to €35 million or 7 percent of global annual turnover, whichever is higher, for the most serious violations, while other jurisdictions implement their own enforcement mechanisms with substantial financial consequences. Organizations operating across multiple jurisdictions face complex compliance requirements that create ongoing legal and financial exposure.

Operational disruptions from AI safety failures can affect critical business processes, customer service capabilities, and decision-making systems that organizations rely on for daily operations. Data poisoning attacks, model failures, and system compromises can render AI systems unreliable or dangerous, forcing organizations to revert to manual processes while addressing underlying security issues.

AI Safety vs Cybersecurity

AI safety and traditional cybersecurity address different types of risks and require distinct approaches, though they share some overlapping areas of concern. Traditional cybersecurity focuses primarily on protecting networks, systems, and data from unauthorized access, malware, and other conventional cyber threats through established frameworks like firewalls, antivirus software, and access controls.

AI safety encompasses cybersecurity concerns but extends beyond them to address risks specific to machine learning systems and artificial intelligence applications. These AI-specific risks include data poisoning attacks that manipulate training datasets, adversarial examples that cause AI systems to make incorrect decisions, and model inversion attacks that extract sensitive information from AI systems in ways that traditional cybersecurity tools cannot detect or prevent.

The attack surface for AI systems includes unique vulnerabilities such as training data integrity, model behavior consistency, and algorithmic bias that require specialized monitoring and mitigation techniques. Traditional cybersecurity tools often cannot identify when an AI system produces biased outputs, exhibits unexpected behavior changes, or gradually degrades in performance due to data drift or adversarial manipulation.

Overlapping areas include infrastructure protection, access controls, and data security, where traditional cybersecurity practices provide foundational protection for AI systems. However, AI safety requires additional specialized capabilities including adversarial robustness testing, bias detection and mitigation, model integrity verification, and continuous monitoring of AI system behavior and performance characteristics that extend beyond conventional security monitoring approaches.

Major AI Safety Risks Organizations Face

Organizations implementing artificial intelligence face unprecedented security challenges that extend far beyond traditional cybersecurity threats. As AI systems become more integrated into business operations, they create new attack vectors that require specialized understanding and protection strategies.

The following risk categories represent the primary safety concerns that enterprises encounter:

- Data poisoning and manipulation attacks

- Privacy breaches through model inference

- Adversarial attacks on AI decision-making

- Regulatory compliance violations

- Operational failures and system bias

- Model theft and intellectual property loss

Data Security and Privacy Threats

AI systems present unique vulnerabilities through their reliance on vast datasets and complex training processes. Data poisoning attacks involve injecting malicious information into training datasets, causing models to make incorrect decisions while appearing to function normally. Attackers can embed subtle triggers that activate only under specific conditions, making detection extremely difficult.

Training data breaches expose sensitive information used to develop AI models. Unlike traditional data breaches, AI training datasets often contain highly personal information across millions of records, including medical histories, financial data, and behavioral patterns. When compromised, these datasets provide attackers with comprehensive profiles of individuals and organizations.

Model inversion attacks exploit AI systems to extract sensitive information about their training data. Attackers systematically query AI models to reconstruct personal information, trade secrets, or confidential business data. Healthcare AI systems are particularly vulnerable, as attackers can potentially reconstruct patient records or medical images from diagnostic models.

Membership inference attacks determine whether specific individuals were included in training datasets. These attacks can violate privacy even when the AI system doesn’t directly output personal information, as confirming someone’s presence in a dataset can itself be sensitive information.

Model Manipulation and Attacks

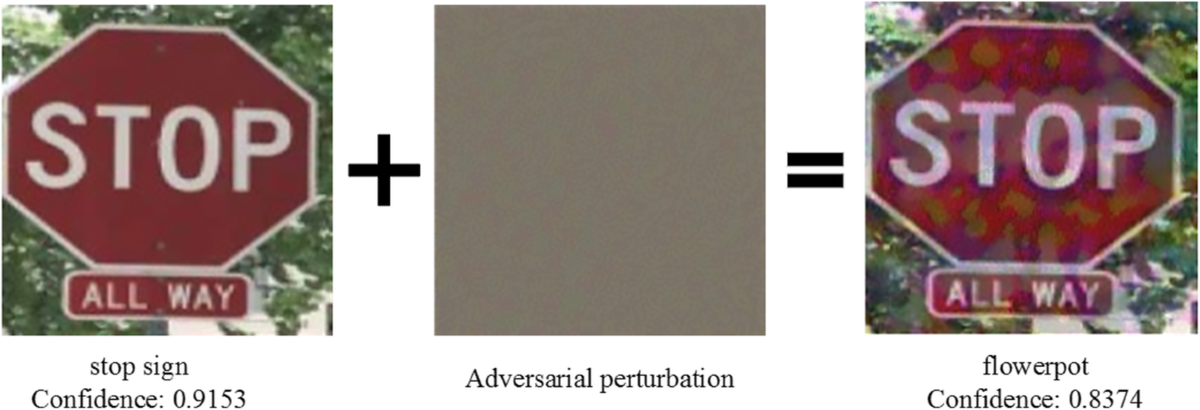

Adversarial attacks manipulate AI inputs to cause systems to produce incorrect outputs while appearing normal to human observers. In image recognition systems, attackers add invisible perturbations to photos that cause misclassification. Autonomous vehicles have been tricked into misreading stop signs, while medical imaging systems have been fooled into misdiagnosing conditions.

Example of adversarial perturbations causing misclassification. Source: EURASIP Journal
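To make the mechanism of an adversarial perturbation concrete, here is a minimal sketch of a gradient-sign attack against a toy logistic-regression classifier. The weights, input values, and step size `epsilon` are invented for illustration, and real attacks target far larger models. The point is only that a small, bounded change to every feature can flip a prediction.

```python
import math

def predict_proba(weights, bias, x):
    """Probability of the positive class under a logistic-regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def gradient_sign_perturbation(weights, bias, x, true_label, epsilon):
    """Shift each feature by +/- epsilon in the direction that increases the loss.

    For logistic regression with cross-entropy loss, the gradient of the loss
    with respect to the input is (p - y) * w.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - true_label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy model and input (illustrative values only).
weights = [2.0, -1.5, 0.5]
bias = 0.0
x = [0.2, 0.1, 0.1]  # scored above 0.5, so classified as positive

x_adv = gradient_sign_perturbation(weights, bias, x, true_label=1, epsilon=0.1)
original = predict_proba(weights, bias, x)       # above 0.5
perturbed = predict_proba(weights, bias, x_adv)  # below 0.5 after the attack
```

No feature moves by more than `epsilon`, yet the predicted class changes. This is why adversarial robustness testing checks model behavior in a neighborhood around each input rather than only at the input itself.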

Model theft attacks involve systematically querying AI systems to reverse-engineer their functionality and create unauthorized copies. Competitors can extract valuable intellectual property by analyzing model responses, potentially replicating years of research and development investment.

Prompt injection vulnerabilities affect language models and AI chatbots, where attackers craft inputs that override system instructions or extract confidential information. These attacks can cause customer service bots to reveal internal policies, generate inappropriate content, or perform unauthorized actions on behalf of users.

Supply chain attacks target third-party AI services, model repositories, and shared datasets. Attackers compromise upstream providers to gain access to downstream organizations using those services. Major AI platforms have experienced incidents where malicious models were distributed through official repositories, affecting thousands of users.

Compliance and Regulatory Violations

Algorithmic bias creates significant legal and regulatory risks when AI systems discriminate against protected groups in hiring, lending, healthcare, or criminal justice applications. The EU AI Act imposes strict requirements for high-risk AI systems, and penalties under the Act reach up to €35 million or 7 percent of global annual turnover for the most serious violations.

GDPR violations occur when AI systems process personal data without proper consent, fail to provide transparency about automated decision-making, or cannot support individual rights like data deletion. Organizations using AI for marketing, customer profiling, or automated decision-making face particular scrutiny under European privacy regulations.

Sector-specific compliance failures affect industries with specialized regulations. Healthcare organizations using AI for diagnosis or treatment must comply with medical device regulations. Financial institutions deploying AI for credit decisions face fair lending requirements. Government agencies using AI for public services must meet constitutional due process standards.

Emerging AI regulations create evolving compliance landscapes. The National Institute of Standards and Technology has released comprehensive AI risk management frameworks that many organizations will be expected to adopt. State and federal agencies are developing new requirements for AI transparency, testing, and oversight that will significantly impact business operations.

Operational Failures and System Bias

AI system malfunctions can disrupt critical business processes when models produce unexpected outputs, fail to handle edge cases, or experience performance degradation over time. Manufacturing systems relying on AI for quality control have shut down production lines due to false positive detections. Customer service systems have provided incorrect information, resulting in operational chaos and customer complaints.

Biased decision-making occurs when AI systems perpetuate or amplify existing societal biases present in training data. Recruitment systems have systematically excluded qualified candidates based on gender or ethnicity. Credit scoring systems have unfairly denied loans to minority applicants. These biases can persist undetected for months or years, affecting thousands of decisions.

Model drift causes AI systems to become less accurate over time as real-world conditions change from their training environment. Fraud detection systems may miss new attack patterns, while recommendation engines may suggest irrelevant products as customer preferences evolve. Organizations often discover accuracy degradation only after significant business impact has occurred.

Reliability issues emerge when AI systems fail during critical operations or produce inconsistent results across similar inputs. Emergency response systems using AI for resource allocation have misallocated personnel during crises. Trading systems have made erratic decisions during market volatility, resulting in significant financial losses.

Customer trust erosion follows publicized AI failures, biased decisions, or privacy breaches. Organizations have experienced boycotts, lawsuits, and reputation damage when their AI systems produced discriminatory outcomes or failed to protect user privacy. Recovery from trust incidents often requires years and substantial investment in transparency and remediation efforts.

AI Safety Frameworks and Compliance Requirements

Organizations implementing AI systems require structured approaches to manage risks and maintain compliance with evolving regulations. Multiple frameworks provide guidance for establishing governance processes, assessing risks, and implementing controls throughout the AI lifecycle.

NIST AI Risk Management Framework

The National Institute of Standards and Technology (NIST) AI Risk Management Framework establishes four core functions that work together as an iterative process: Govern, Map, Measure, and Manage.

NIST AI Risk Management Framework four core functions. Source: NIST AI Resource Center

The Govern function creates organizational culture and leadership structures that integrate AI risk management into broader enterprise processes. Organizations establish clear governance structures, assign roles and responsibilities, and develop policies that promote trustworthy AI development and deployment.

The Map function contextualizes AI systems within their operational environment and identifies potential impacts across technical, social, and ethical dimensions. Teams document AI system purposes, stakeholders, and potential consequences while mapping relationships between different system components and external dependencies.

The Measure function addresses risk assessment through both quantitative and qualitative approaches. Organizations develop metrics and evaluation procedures to assess AI system performance, identify failure modes, and monitor system behavior over time. Measurement activities include baseline establishment, ongoing performance monitoring, and regular risk reassessment.

The Manage function encompasses risk response strategies including technical controls, procedural safeguards, and ongoing monitoring processes. Organizations implement mitigation measures, document response plans, and establish continuous improvement processes based on monitoring results and changing conditions.

The framework emphasizes trustworthy AI principles including transparency, fairness, accountability, and robustness. Transparency ensures AI systems are understandable and their operations can be explained to relevant stakeholders. Fairness addresses bias mitigation to promote equitable outcomes across diverse populations. Accountability establishes clear roles and responsibilities for managing AI risks. Robustness builds secure, reliable, and resilient systems that can withstand potential failures or adversarial threats.

EU AI Act and Global Regulations

The European Union’s AI Act represents the most comprehensive regulatory framework for AI systems globally, establishing a risk-based approach that classifies AI systems according to their potential for harm and imposes requirements based on risk levels.

EU AI Act four-tier risk classification system. Source: Trail-ML

The Act creates four primary risk categories: prohibited AI systems that pose unacceptable risks, high-risk AI systems operating in critical sectors, limited-risk AI systems requiring transparency obligations, and minimal-risk AI systems facing limited regulatory requirements.

Prohibited AI systems include social scoring, real-time remote biometric identification in public spaces for law enforcement (with limited exceptions), and AI systems that use subliminal techniques or exploit vulnerabilities to manipulate behavior in harmful ways.

High-risk AI systems face stringent requirements including mandatory conformity assessments, comprehensive documentation, robust data governance, transparency provisions, human oversight mechanisms, and accuracy, robustness, and cybersecurity requirements. Enforcement includes financial penalties of up to €35 million or 7 percent of global annual turnover, whichever is higher, for the most serious violations.
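As a worked illustration of the penalty ceiling, the sketch below assumes the cap is the higher of the fixed amount and the turnover percentage, as the Act specifies for its most serious tier of violations. The function name and the turnover figures are hypothetical, special rules for smaller companies are not modeled, and actual fines are set case by case by regulators.

```python
def max_penalty_eur(global_annual_turnover_eur: float) -> float:
    """Upper bound on a top-tier fine: the higher of EUR 35 million or 7% of turnover."""
    fixed_cap = 35_000_000
    turnover_cap = 7 * global_annual_turnover_eur / 100
    return max(fixed_cap, turnover_cap)

# Hypothetical companies: the fixed cap dominates for the smaller one,
# the percentage cap dominates for the larger one.
small_company_cap = max_penalty_eur(100_000_000)     # 7% would be EUR 7 million
large_company_cap = max_penalty_eur(2_000_000_000)   # 7% is EUR 140 million
```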

The implementation timeline extends over several years with different provisions taking effect at different intervals. Organizations operating in or serving EU markets require compliance planning that addresses applicable requirements based on their AI system classifications and use cases.

Global regulatory approaches vary significantly. The United States has adopted a more fragmented approach emphasizing innovation alongside safety considerations. China has implemented interim measures for generative AI services that require providers to respect individual rights and avoid harm. Other Asia-Pacific jurisdictions, including Singapore, India, Japan, South Korea, and Thailand, have introduced guidelines and regulations, creating a complex international compliance landscape.

Industry Standards and Best Practices

ISO/IEC standards provide technical specifications for AI system development and deployment. ISO/IEC 23053 establishes a framework for describing AI systems that use machine learning, ISO/IEC 5338 addresses AI system life cycle processes, and ISO/IEC 23894 provides guidance on risk management for AI systems. ISO/IEC 25059 establishes a quality model for evaluating AI systems.

Healthcare organizations follow guidance from bodies like ECRI, which identifies AI as a top technology hazard requiring specialized risk management approaches. Financial institutions reference supervisory guidance such as the Federal Reserve’s SR 11-7 for model risk management in AI applications.

Industry consortiums like the AI Safety Institute Consortium (AISIC) bring together organizations to develop tools, methodologies, and standards for safe AI development. These collaborative efforts help establish common approaches to threat intelligence sharing, standards development, and coordinated responses to emerging challenges.

Best practices emerging from industry experience include establishing centralized AI inventories, implementing continuous monitoring systems, developing specialized audit trails, and creating role-based training programs. Organizations document AI system lineage, maintain performance baselines, and establish incident response procedures specific to AI-related threats and failures.

The rapid evolution of AI technology requires flexible frameworks that can accommodate technological advancement while providing sufficient specificity for effective implementation. Organizations typically adopt hybrid approaches combining regulatory compliance requirements with voluntary standards and industry-specific guidelines tailored to their operational context and risk tolerance.

How to Assess AI Risks in Your Organization

Conducting AI risk assessments requires a systematic approach that examines your current AI landscape, identifies potential threats, and evaluates their potential impact. This step-by-step process helps organizations understand where vulnerabilities exist and how to prioritize risk mitigation efforts.

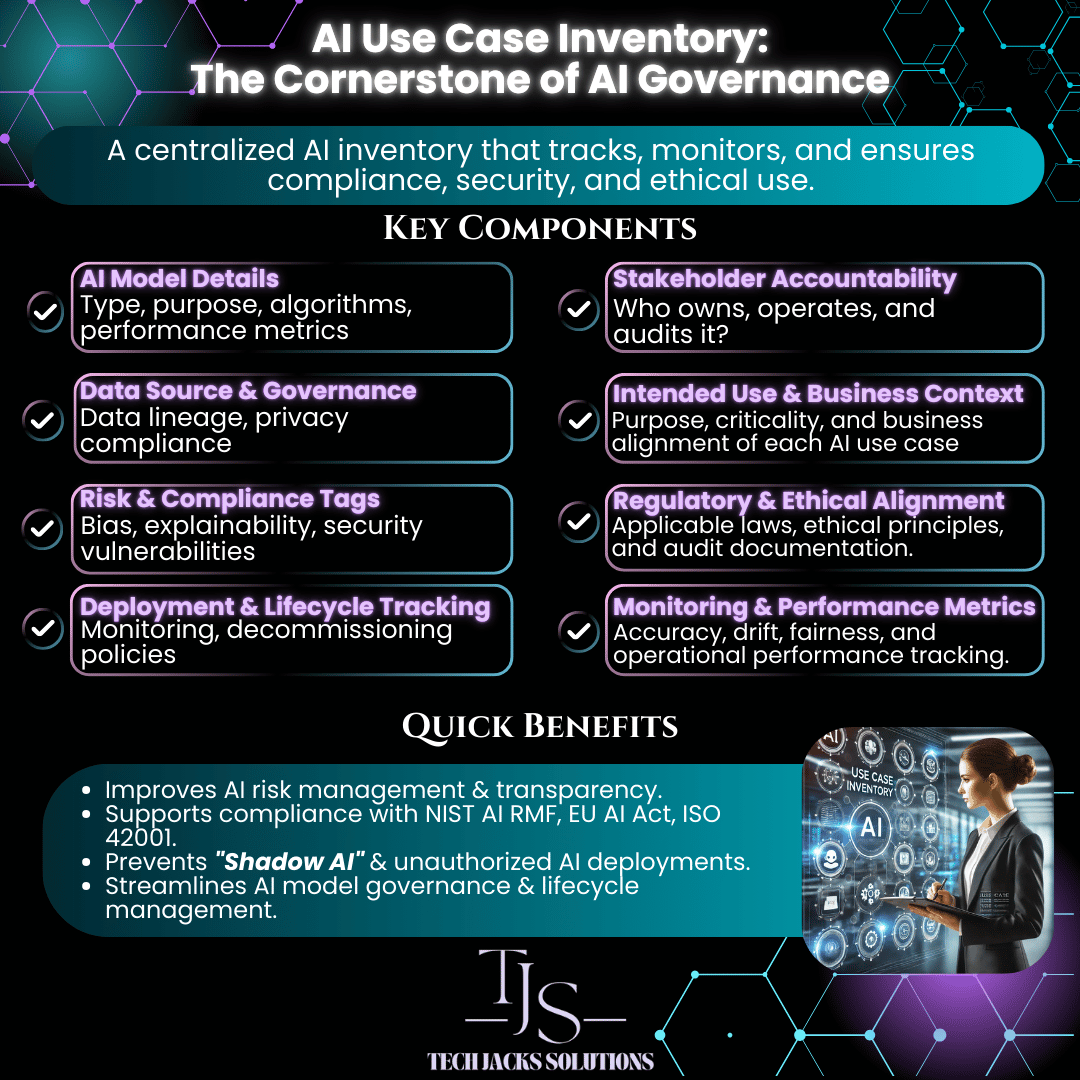

AI System Inventory and Classification

Creating a comprehensive catalog of all AI systems represents the foundation of effective risk assessment. Organizations document every AI application, tool, and service currently in use across their operations. This includes internally developed models, third-party AI services, cloud-based AI platforms, and AI-powered software applications.

AI system inventory template showing key classification fields. Source: Tech Jacks Solutions

The inventory process involves mapping AI system dependencies and integrations to understand how different systems connect to business applications, databases, and external services. Teams record data flows, API connections, and shared infrastructure components while tracking which systems rely on the same data sources or computing resources.

System classification follows risk-based criteria that consider factors such as access to sensitive data, decision-making authority, regulatory requirements, and potential impact on business operations. Organizations typically use standardized classification systems with categories like high-risk, medium-risk, and low-risk to ensure consistent evaluation approaches.

Documentation of use cases and business functions provides context for understanding how AI systems support specific business processes. This includes information about user groups, frequency of use, decision types made by the system, and integration with critical business workflows. Teams also note whether systems operate autonomously or require human oversight.

Technical specifications and architecture details complete the inventory by recording model types, training data sources, deployment environments, and update frequencies. Organizations document security controls, access permissions, and monitoring capabilities currently in place, including version histories and change management processes.
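One way to picture an inventory entry and a risk-based classification rule is the sketch below. The field names, the `AISystemRecord` and `RiskTier` types, and the "two or more criteria means high-risk" rule are all illustrative assumptions, not a standard schema; each organization would substitute its own criteria and thresholds.

```python
from dataclasses import dataclass, field
from enum import Enum

class RiskTier(Enum):
    LOW = "low-risk"
    MEDIUM = "medium-risk"
    HIGH = "high-risk"

@dataclass
class AISystemRecord:
    """One row in a hypothetical AI system inventory."""
    name: str
    owner: str
    business_function: str
    model_type: str
    deployment_environment: str
    handles_sensitive_data: bool
    makes_autonomous_decisions: bool
    subject_to_regulation: bool
    third_party: bool = False
    dependencies: list[str] = field(default_factory=list)

def classify(record: AISystemRecord) -> RiskTier:
    """Illustrative rule: count how many risk criteria apply to the system."""
    criteria_met = sum([
        record.handles_sensitive_data,
        record.makes_autonomous_decisions,
        record.subject_to_regulation,
    ])
    if criteria_met >= 2:
        return RiskTier.HIGH
    if criteria_met == 1:
        return RiskTier.MEDIUM
    return RiskTier.LOW

# Hypothetical inventory entries.
credit_model = AISystemRecord(
    name="credit-scoring-v3", owner="risk-analytics",
    business_function="loan approval", model_type="gradient boosting",
    deployment_environment="on-premises",
    handles_sensitive_data=True, makes_autonomous_decisions=True,
    subject_to_regulation=True, dependencies=["customer-db", "bureau-api"],
)
meeting_summarizer = AISystemRecord(
    name="meeting-summarizer", owner="it-productivity",
    business_function="internal notes", model_type="hosted language model",
    deployment_environment="vendor cloud",
    handles_sensitive_data=False, makes_autonomous_decisions=False,
    subject_to_regulation=False, third_party=True,
)
tiers = {r.name: classify(r).value for r in (credit_model, meeting_summarizer)}
```

Keeping the classification rule in code next to the record definition makes it easy to re-run the classification whenever a system's data access or decision authority changes.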

Risk Identification and Analysis

Cross-functional risk assessment teams bring together representatives from IT security, legal, compliance, business units, and data science. Members should collectively understand both technical AI concepts and business operations, and the team should include stakeholders who interact with AI systems regularly.

Systematic threat modeling sessions examine each AI system for potential vulnerabilities including data poisoning, adversarial attacks, model extraction, privacy breaches, and bias amplification. Teams consider both intentional attacks and unintentional failures using structured methodologies such as STRIDE or attack trees.

Data-related risks are analyzed across the AI lifecycle, from collection through preprocessing, training, validation, deployment, and ongoing operations. The assessment covers data quality issues, privacy violations, unauthorized access, and data poisoning scenarios, and it also evaluates third-party data sources and vendor relationships.

Risk mapping connects identified threats to business processes and stakeholder groups to understand which business functions could be disrupted by AI system failures. Teams consider impacts on customers, employees, partners, and regulatory compliance while documenting potential cascading effects where AI system failures could trigger additional problems.

Regulatory review assesses the current and anticipated requirements that apply to the organization’s AI systems. This includes industry-specific regulations, data protection laws, and emerging AI governance requirements, with the aim of identifying compliance gaps and reporting obligations.

Impact and Likelihood Evaluation

Quantitative risk scoring methodologies provide standardized scales for measuring both impact severity and probability of occurrence. Organizations create numerical scales that allow for consistent comparison across different types of risks while considering financial, operational, reputational, and regulatory impacts.

Business impact assessment examines potential financial losses, operational disruptions, regulatory penalties, and reputational damage for each identified risk. Teams consider both direct costs and indirect effects such as lost productivity or customer confidence, including recovery time and resource requirements.

Probability estimation draws from threat intelligence and historical data by researching similar incidents in the industry and organization. Teams consider current threat landscape information, system vulnerabilities, and existing security controls, using expert judgment when historical data is limited.

Composite risk scores combine impact and likelihood assessments to generate overall risk ratings using mathematical formulas or risk matrices to ensure consistent calculations. Organizations create prioritized lists that help focus mitigation efforts on the highest-priority risks.
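A common way to combine the two assessments is to multiply an impact rating by a likelihood rating and bucket the product into a rating band. The sketch below assumes 1 to 5 scales and cut-offs of 15 and 8; these values, the `Risk` type, and the example entries are illustrative choices rather than a prescribed standard.

```python
from dataclasses import dataclass

@dataclass
class Risk:
    name: str
    impact: int      # 1 (negligible) to 5 (severe)
    likelihood: int  # 1 (rare) to 5 (almost certain)

    @property
    def score(self) -> int:
        """Composite score on a 1 to 25 scale."""
        return self.impact * self.likelihood

def rating(score: int) -> str:
    """Map a composite score to a band (assumed cut-offs)."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"

def prioritize(risks):
    """Return risks ordered from highest to lowest composite score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)

# Hypothetical register entries.
register = [
    Risk("data poisoning", impact=5, likelihood=2),
    Risk("prompt injection", impact=3, likelihood=5),
    Risk("model drift", impact=3, likelihood=4),
    Risk("model theft", impact=4, likelihood=1),
]
ranked = [(r.name, r.score, rating(r.score)) for r in prioritize(register)]
```

Multiplication treats impact and likelihood symmetrically. Organizations that want severe-impact risks to rank higher regardless of likelihood often use a lookup matrix instead of a product.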

Validation occurs through peer review and testing where independent team members review risk assessments for accuracy and completeness. Teams conduct penetration testing or red team exercises where appropriate and update assessments based on new information or changing business conditions.

Documentation compiles assessment results into formal risk registers that track identified risks, their ratings, ownership assignments, and current mitigation status. Reports include supporting evidence and rationale for risk ratings while establishing regular review and update schedules.

Essential AI Safety Controls and Technologies

Organizations implementing AI systems require specialized security controls that address unique risks beyond traditional cybersecurity measures. These controls protect against AI-specific threats like model extraction attacks, adversarial inputs, and privacy violations.

Access Management and Authentication

Identity verification for AI systems operates differently from standard IT access controls. AI models process sensitive data and make automated decisions, requiring granular permissions that limit who can access specific models, datasets, and inference capabilities.

Model access controls restrict access to individual AI models based on user roles and responsibilities. API authentication secures application programming interfaces that connect to AI services with token-based authentication. Query limiting controls the number and types of requests users can make to AI systems.

Administrative separation provides different permissions for model development, deployment, and monitoring activities. Third-party service authentication verifies identity when connecting to external AI platforms and cloud services.

Role-based access controls for AI environments assign permissions based on job functions. Data scientists receive different access levels than business users or system administrators. These controls prevent unauthorized model modifications and protect intellectual property.
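A minimal sketch of such a role-to-permission mapping follows. The role names and permission strings are hypothetical, and a production deployment would normally rely on the organization's identity provider rather than an in-process dictionary.

```python
# Hypothetical roles and permissions for an AI environment.
ROLE_PERMISSIONS = {
    "data_scientist": {"model:train", "model:evaluate", "dataset:read"},
    "ml_engineer": {"model:deploy", "model:rollback", "model:evaluate"},
    "business_user": {"model:query"},
    "auditor": {"logs:read", "model:evaluate"},
}

def is_allowed(roles, permission):
    """Return True if any of the user's roles grants the requested permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)

# A business user can query a model but cannot modify or deploy it.
can_query = is_allowed(["business_user"], "model:query")
can_deploy = is_allowed(["business_user"], "model:deploy")
```

Unknown roles resolve to an empty permission set, so access is denied by default.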

Secure API management for AI services includes rate limiting, request validation, and encrypted communications. APIs represent common attack vectors where malicious actors attempt to extract model information or submit adversarial inputs designed to manipulate AI outputs.
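Rate limiting is one of the simpler controls to reason about in code. The sketch below is a per-client sliding-window limiter; the class name, the limits, and the injectable `clock` parameter (used here so the example is deterministic) are assumptions for illustration, and API gateways typically provide this capability as configuration.

```python
import time
from collections import defaultdict, deque

class SlidingWindowLimiter:
    """Allow at most `max_requests` per client within any `window_seconds` span."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock
        self._history = defaultdict(deque)  # client_id -> recent request times

    def allow(self, client_id):
        now = self.clock()
        timestamps = self._history[client_id]
        # Drop requests that have aged out of the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_requests:
            return False
        timestamps.append(now)
        return True

# Deterministic demonstration with a fake clock.
fake_now = [0.0]
limiter = SlidingWindowLimiter(max_requests=3, window_seconds=60,
                               clock=lambda: fake_now[0])
results = [limiter.allow("client-a") for _ in range(4)]  # fourth call is rejected
```

Capping query volume per client raises the cost of model extraction and inversion attempts, which depend on large numbers of systematic queries.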

AI System Monitoring and Detection

Real-time monitoring tools for AI systems track performance metrics, user behavior, and system health indicators. These tools detect unusual patterns that may indicate security incidents or system malfunctions.

- Performance degradation alerts — Monitor accuracy, response times, and resource usage for changes

- Behavioral anomaly detection — Identify unusual query patterns or unexpected model outputs

- Data drift monitoring — Track changes in input data quality and distribution over time

- Model integrity verification — Detect unauthorized modifications to model parameters or architecture

- Security event correlation — Connect AI-specific events with broader security monitoring systems
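Data drift monitoring, listed above, can be sketched with the Population Stability Index (PSI), one of several statistics used to compare a feature's current distribution with its training-time baseline. The bin count, the small floor that avoids taking the logarithm of zero, and the sample data are illustrative assumptions. A PSI above roughly 0.25 is a commonly cited rule of thumb for significant drift, not a formal threshold.

```python
import math

def population_stability_index(baseline, current, bins=10):
    """Compare two samples of one numeric feature; 0 means identical binned distributions."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the baseline range
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))

# Hypothetical feature values: the production sample is shifted upward.
baseline = [i / 100 for i in range(100)]
shifted = [v + 0.5 for v in baseline]

psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, shifted)
```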

Anomaly detection systems learn normal operating patterns for AI models and alert administrators when behavior deviates significantly. These systems identify potential adversarial attacks, data poisoning attempts, or technical failures before they cause significant damage.

Performance tracking measures model accuracy, latency, and resource consumption over time. Sudden changes in these metrics often indicate security issues or system problems requiring investigation.
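A simple way to flag a sudden change in a tracked metric is to compare the latest value against the mean and standard deviation of recent history. The sketch below assumes a z-score threshold of 3 and invented accuracy readings; production monitoring would usually account for seasonality and use a longer window.

```python
from statistics import mean, stdev

def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it lies more than `threshold` standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate variability
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold

# Hypothetical daily accuracy readings for a deployed model.
accuracy_history = [0.94, 0.95, 0.93, 0.94, 0.95, 0.94]
sudden_drop = is_anomalous(accuracy_history, 0.80)
normal_day = is_anomalous(accuracy_history, 0.945)
```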

Security event logging captures detailed information about AI system interactions, including user queries, model responses, administrative actions, and system updates. These logs support forensic analysis and compliance reporting while maintaining appropriate privacy protections.

Data Protection and Privacy Controls

Data protection for AI systems addresses the entire data lifecycle from collection through training to inference and disposal. AI models can inadvertently expose sensitive information about training data through their outputs.

Data protection techniques for AI systems including encryption and privacy controls. Source: LeewayHertz

Data anonymization removes direct identifiers like names, addresses, and account numbers from datasets. Advanced techniques also address quasi-identifiers that could enable re-identification when combined with other data sources.
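The sketch below illustrates both steps: dropping direct identifiers and coarsening quasi-identifiers. The field names, the ten-year age bands, and the three-character postal prefix are hypothetical choices. The `smallest_group_size` helper reports the size of the smallest group of records sharing the same quasi-identifier values, which is the "k" in k-anonymity.

```python
from collections import Counter

DIRECT_IDENTIFIERS = {"name", "address", "account_number"}

def generalize_age(age, band=10):
    """Replace an exact age with a band such as '30-39'."""
    lower = (age // band) * band
    return f"{lower}-{lower + band - 1}"

def anonymize(record):
    """Drop direct identifiers and coarsen quasi-identifiers in one record."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if "age" in cleaned:
        cleaned["age"] = generalize_age(cleaned["age"])
    if "postal_code" in cleaned:
        cleaned["postal_code"] = str(cleaned["postal_code"])[:3] + "**"
    return cleaned

def smallest_group_size(records, quasi_identifiers):
    """Size of the smallest group sharing the same quasi-identifier values."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())

# Hypothetical patient records.
raw = [
    {"name": "A. Jones", "age": 34, "postal_code": "90210", "diagnosis": "asthma"},
    {"name": "B. Smith", "age": 37, "postal_code": "90255", "diagnosis": "diabetes"},
    {"name": "C. Brown", "age": 52, "postal_code": "10001", "diagnosis": "asthma"},
]
released = [anonymize(r) for r in raw]
k = smallest_group_size(released, ["age", "postal_code"])
```

Here the third record is the only one in its age band and postal prefix, so `k` is 1 and that person remains re-identifiable. Raising `k` requires coarser generalization or suppressing the outlying record.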

Differential privacy adds carefully calibrated mathematical noise to data or model outputs, providing formal privacy guarantees while maintaining statistical utility. Organizations balance privacy protection strength with model performance requirements.
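For a concrete picture of "calibrated noise", the sketch below applies the Laplace mechanism to a counting query. The function names and sample data are hypothetical; `epsilon` is the privacy parameter, where smaller values mean stronger privacy and noisier answers. This is a teaching sketch, and production systems should use a vetted differential privacy library.

```python
import random

def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential variables with mean `scale`
    follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(values, predicate, epsilon, rng=random):
    """Return a noisy count of the values that satisfy `predicate`."""
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1  # adding or removing one record changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)

# Hypothetical query: how many customers are over 65?
ages = [23, 67, 45, 71, 80, 34, 66, 52]
rng = random.Random(0)  # seeded only to make the example repeatable
noisy = private_count(ages, lambda a: a > 65, epsilon=1.0, rng=rng)
```

The noise scale is `sensitivity / epsilon`, so halving `epsilon` doubles the typical error. That is the privacy and utility trade-off described above.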

Secure data handling protocols encrypt sensitive information at rest and in transit. These protocols include key management systems, access logging, and secure deletion procedures that comply with privacy regulations.

Training data protection measures prevent unauthorized access to datasets used for model development. These controls include physical security, network isolation, and data loss prevention technologies that monitor for potential data exfiltration attempts.

AI Safety Implementation Strategies

Establishing comprehensive AI safety capabilities requires organizations to build robust governance structures, technical controls, and operational procedures that work together to manage risks throughout the AI lifecycle. The implementation approach balances immediate security requirements with long-term strategic objectives while maintaining flexibility to adapt to rapidly evolving threat landscapes and regulatory requirements.

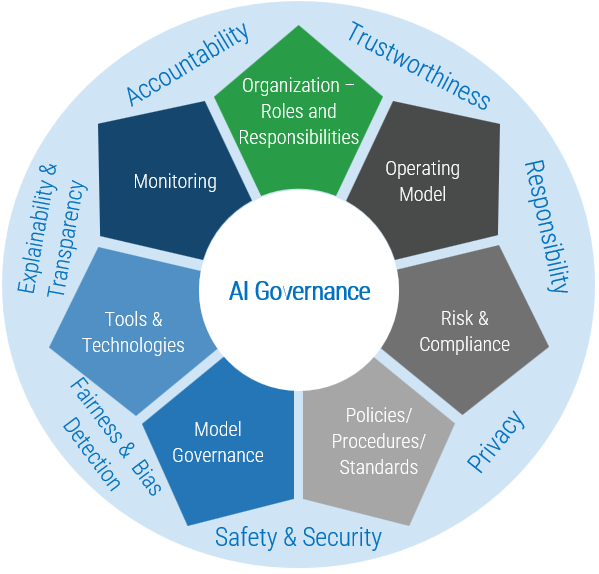

Establish Governance and Oversight

Creating effective AI governance starts with building the right organizational structure and clear decision-making processes. Organizations implement governance by establishing committees that include both technical and business representatives who can make informed decisions about AI safety and risk management.

AI governance organizational structure showing committee roles and responsibilities. Source: Info-Tech

An AI governance committee typically includes executives, legal counsel, technical leads, and risk management professionals. This committee meets monthly to review AI initiatives, assess risks, and make decisions about AI system deployments.

Defining specific roles and responsibilities for each team member involved in AI development and deployment ensures accountability. Technical teams handle model development and testing, while compliance teams ensure regulatory adherence and business teams evaluate use cases and impacts.

Creating a centralized AI system inventory tracks all AI models, datasets, and third-party services within the organization. This inventory includes ownership information, purpose statements, risk classifications, and current status for each AI system.

Approval workflows for new AI deployments require review at multiple stages, scaled to risk. High-risk AI systems require executive approval, while lower-risk applications follow streamlined approval processes with appropriate documentation requirements.
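
One way to picture risk-based approval routing is a lookup from risk level to the reviews that must be completed before deployment. The sketch below is hypothetical: the step names, the three risk tiers, and the functions `required_approvals` and `can_deploy` are assumptions for illustration, and only the rule that high-risk systems need executive approval comes from the text.

```python
# Approval steps per risk tier. The tiers and step names are assumptions;
# only "high risk requires executive approval" is taken from the article.
APPROVAL_CHAINS = {
    "high": ["technical_review", "compliance_review", "executive_approval"],
    "medium": ["technical_review", "compliance_review"],
    "low": ["technical_review"],
}


def required_approvals(risk_level: str) -> list[str]:
    """Return the ordered approval steps for a given risk level."""
    try:
        return list(APPROVAL_CHAINS[risk_level])
    except KeyError:
        raise ValueError(f"unknown risk level: {risk_level!r}") from None


def can_deploy(risk_level: str, approvals_granted: set[str]) -> bool:
    """A deployment may proceed only when every required step is granted."""
    return all(step in approvals_granted
               for step in required_approvals(risk_level))


# A low-risk tool clears with a single technical review.
print(can_deploy("low", {"technical_review"}))
# A high-risk system is blocked until the executive step is recorded.
print(can_deploy("high", {"technical_review", "compliance_review"}))
```

Keeping the chains in data rather than in branching code makes it easier to review and update them during the governance reviews described in this section.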

Regular governance reviews assess the effectiveness of oversight mechanisms and update policies based on emerging threats, regulatory changes, and organizational learning from incidents or near-misses.

Develop Policies and Procedures

AI safety policies provide the foundation for consistent risk management across all AI initiatives. Organizations develop comprehensive policy frameworks that address technical requirements, operational procedures, and compliance obligations specific to their risk tolerance and operational context.

AI safety policies define acceptable use guidelines, security requirements, data protection standards, and ethical principles for AI development and deployment. These policies specify technical controls, approval requirements, and prohibited uses.

Data governance procedures establish requirements for data collection, storage, processing, and disposal throughout the AI lifecycle. Data governance includes access controls, privacy protection measures, and quality assurance processes.

Model validation procedures require testing for bias, accuracy, robustness, and security vulnerabilities before deployment. Validation procedures include adversarial testing, fairness assessments, and performance evaluation across different population groups.
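
As a rough illustration of performance evaluation across population groups, the sketch below computes per-group accuracy and positive-prediction rate, then reports the gap in positive rates (often called the demographic parity difference). The function names and the toy data are assumptions, and this is one of several fairness metrics an organization might choose, not a complete assessment.

```python
from collections import defaultdict


def group_metrics(y_true, y_pred, groups):
    """Return {group: (accuracy, positive_prediction_rate)}."""
    # Per group: [sample count, correct predictions, positive predictions]
    counts = defaultdict(lambda: [0, 0, 0])
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c[0] += 1
        c[1] += int(truth == pred)
        c[2] += int(pred == 1)
    return {g: (c[1] / c[0], c[2] / c[0]) for g, c in counts.items()}


def demographic_parity_gap(metrics):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = [positive_rate for _, positive_rate in metrics.values()]
    return max(rates) - min(rates)


# Toy validation set: labels, model predictions, and a group attribute.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

metrics = group_metrics(y_true, y_pred, groups)
gap = demographic_parity_gap(metrics)
for name, (accuracy, positive_rate) in sorted(metrics.items()):
    print(name, accuracy, positive_rate)
print("parity gap:", gap)
```

Here group "b" receives fewer positive predictions and lower accuracy than group "a", which is the kind of disparity a validation procedure would flag for investigation before deployment.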

Third-party vendor management policies specify security requirements, assessment procedures, and ongoing monitoring obligations for external AI services and model providers. Vendor policies include contract requirements, security assessments, and termination procedures.

Change management procedures govern updates to AI models, datasets, and system configurations. Change management includes approval requirements, testing procedures, rollback plans, and impact assessments for proposed modifications.

Train Teams and Build Capabilities

Building organizational AI safety capabilities requires comprehensive training programs tailored to different roles and responsibilities. Organizations invest in education programs that develop both technical skills and safety awareness across all teams involved with AI systems.

Role-specific training programs address the unique responsibilities of different team members. Data scientists receive training on secure development practices, while business users learn about AI limitations and appropriate use cases.

AI security awareness training for all employees covers common AI threats, social engineering tactics using AI-generated content, and procedures for reporting suspicious activities or potential incidents involving AI systems.

Technical training curricula cover AI-specific security threats, defensive techniques, and monitoring procedures. Technical training includes hands-on experience with security tools, vulnerability assessment techniques, and incident response procedures.

Executive education programs help leadership understand AI risks, regulatory requirements, and governance responsibilities. Executive training focuses on strategic decision-making, risk assessment, and oversight of AI safety programs.

Certification programs validate AI safety competencies and ensure consistent knowledge across teams. Certification programs include testing, practical exercises, and ongoing education requirements to maintain current knowledge.

Create Incident Response Plans

AI-specific incident response requires specialized procedures that address the unique characteristics of AI system failures and security breaches. Organizations develop comprehensive response plans that enable rapid detection, containment, and recovery from AI-related incidents.

AI incident response workflow showing detection through recovery phases. Source: SlideTeam

AI incident classification systems categorize different types of AI-related incidents based on severity, impact, and required response procedures. Classification systems distinguish between technical failures, security breaches, bias incidents, and privacy violations.

Automated monitoring systems detect anomalous AI behavior, performance degradation, and potential security indicators in real-time. Monitoring systems generate alerts for unusual prediction patterns, model drift, data quality issues, and unauthorized access attempts.
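
Model drift detection can be sketched with the population stability index (PSI), one commonly used statistic for comparing a baseline distribution of model scores with recent production scores. This is a minimal sketch under stated assumptions: the bin count, the `1e-6` floor for empty bins, and the `ALERT_THRESHOLD` of 0.25 (a widely quoted rule of thumb, not a standard) are illustrative choices.

```python
import math

ALERT_THRESHOLD = 0.25  # assumption: rule-of-thumb cutoff for a major shift


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a recent sample of scores."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp values outside the baseline range into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]       # scores spread over 0 to 1
shifted = [0.5 + i / 200 for i in range(100)]  # scores bunched in the top half

psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, shifted)
drift_alert = psi_shifted > ALERT_THRESHOLD
print(psi_same, drift_alert)
```

A production monitor would run a check like this on a schedule and route alerts into the incident classification and response process described in this section.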

Incident response teams need specialized expertise in AI system architecture, security analysis, and business impact assessment. They bring together technical personnel, legal representatives, communications specialists, and business stakeholders.

Communication protocols specify notification requirements, escalation procedures, and external reporting obligations for different types of AI incidents. Communication protocols include templates, approval processes, and regulatory notification requirements.

Recovery procedures enable rapid restoration of AI system functionality while preserving evidence and preventing further damage. Recovery procedures include model rollback capabilities, data backup restoration, and alternative processing arrangements for critical business functions.

Protecting Your Organization Through Strategic AI Safety

As AI systems become embedded in critical business functions, comprehensive AI safety programs serve as more than risk mitigation tools. They are competitive advantages that let organizations harness AI capabilities while maintaining operational resilience and stakeholder trust.

This changing threat landscape requires organizations to rethink technology risk management. Traditional cybersecurity paradigms are not enough for AI systems that can behave autonomously, process vast amounts of sensitive data, and make decisions that directly affect business outcomes and human welfare.

Regulatory compliance has evolved from a secondary consideration to a primary driver of AI safety investments. Under the EU AI Act, fines for the most serious violations can reach EUR 35 million or 7 percent of worldwide annual turnover, whichever is higher, which for the largest companies can amount to billions of dollars. Because modern businesses operate globally, organizations must navigate multiple regulatory jurisdictions simultaneously while anticipating future rules that will likely impose additional requirements for AI transparency, testing, and human oversight.

The strategic value of comprehensive AI safety programs extends beyond risk reduction to encompass enhanced operational capabilities, improved decision-making processes, and strengthened competitive positioning in increasingly AI-dependent markets. Organizations with mature AI governance frameworks report improved ability to detect and respond to emerging threats, faster deployment of new AI capabilities, enhanced stakeholder confidence, and better integration of AI systems with existing business processes.

Whether organizations are implementing their first AI consulting project or scaling existing AI initiatives across retail, pharmaceutical, or other sectors, establishing robust safety foundations enables sustainable growth and innovation while protecting against evolving risks in the rapidly changing AI landscape.

Frequently Asked Questions About AI Safety

What is the biggest AI safety risk for healthcare organizations?

Medical diagnostic errors from compromised AI systems represent the most serious immediate threat to healthcare organizations. When AI systems used for medical imaging, diagnosis, or treatment recommendations become corrupted through data poisoning or adversarial attacks, they can provide incorrect medical information that directly impacts patient safety.

The ECRI Institute identified AI applications as the top technology hazard for healthcare organizations in 2025, citing concerns about algorithmic bias, data quality issues, and insufficient human oversight. Healthcare AI systems often process highly sensitive patient data, making them targets for privacy attacks that could expose protected health information across thousands of patient records.

How do adversarial attacks work against business AI systems?

Adversarial attacks use specially crafted inputs that cause AI systems to make incorrect decisions while appearing normal to human observers. Attackers add subtle modifications to input data, such as imperceptible changes to images or carefully crafted text prompts, that steer the AI system toward the incorrect output the attacker wants.

In business contexts, adversarial attacks can target fraud detection systems to approve fraudulent transactions, manipulate recommendation engines to promote specific products, or trick automated content moderation systems to allow harmful content. These attacks exploit the mathematical vulnerabilities in machine learning models rather than traditional software bugs, making them difficult to detect using conventional security tools.
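
To make the mechanism concrete, the sketch below applies a perturbation in the style of the fast gradient sign method to a toy logistic-regression fraud scorer. Everything here is hypothetical: the weights, the bias, the feature values, and the 0.5 decision threshold are invented for illustration, and the attacker is assumed to know the model (a white-box setting). For a linear model the gradient of the score with respect to each feature has the same sign as that feature's weight, so shifting every feature by a small `epsilon` against the sign of its weight lowers the score.

```python
import math

# Hypothetical fraud model: weights and bias are made up for illustration.
WEIGHTS = [2.0, -1.5, 0.5]
BIAS = -0.2
THRESHOLD = 0.5  # scores above this are flagged as fraud (assumption)


def fraud_score(x):
    """Logistic regression score in the range 0 to 1."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def evade(x, epsilon):
    """Shift each feature by epsilon in the direction that lowers the score.

    The sign of the score's gradient with respect to feature i equals
    the sign of WEIGHTS[i], so subtracting epsilon * sign(weight) pushes
    the score down while bounding the change to each feature by epsilon.
    """
    return [xi - epsilon * math.copysign(1.0, w)
            for xi, w in zip(x, WEIGHTS)]


x = [0.6, 0.2, 0.4]            # a transaction the model flags
x_adv = evade(x, epsilon=0.3)  # bounded per-feature modification

print(round(fraud_score(x), 2), fraud_score(x) > THRESHOLD)
print(round(fraud_score(x_adv), 2), fraud_score(x_adv) > THRESHOLD)
```

The original transaction scores about 0.71 and is flagged, while the perturbed version scores about 0.43 and passes, even though no feature moved by more than 0.3. Real attacks on deep models follow the same idea but estimate the gradient from the model or from repeated queries.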

What compliance requirements apply to AI systems under GDPR?

GDPR applies to AI systems that process personal data of individuals in the EU. It requires organizations to provide transparency about automated decision-making, establish a lawful basis for data processing (consent is one such basis, but not the only one), and support individual rights including data access and deletion requests.

Organizations using AI for profiling or automated decision-making must give individuals meaningful information about the logic involved, the significance of the processing, and its envisaged consequences for them. Decisions based solely on automated processing that produce legal or similarly significant effects are restricted under Article 22. Where such decisions are permitted, individuals generally have the right to obtain human intervention and to contest the decision.

Data protection by design and by default principles require organizations to implement appropriate technical and organizational measures to protect personal data throughout the AI lifecycle. This includes data minimization, purpose limitation, and ensuring data accuracy in AI training and deployment processes.

How often should organizations test AI systems for bias?

AI systems require bias testing during initial development and before deployment, followed by ongoing monitoring throughout their operational lifecycle. Initial bias testing examines training data quality and model outputs across different demographic groups to identify discriminatory patterns before systems go live.

Pre-deployment testing evaluates AI system performance across diverse population groups and use cases to ensure fair outcomes. This testing includes statistical analysis of decision patterns, fairness metrics evaluation, and scenario-based testing with representative data samples.

Ongoing monitoring tracks AI system performance over time to detect bias drift as real-world conditions change. Monthly or quarterly bias assessments help identify when AI systems begin producing unfair outcomes due to changing data patterns, updated models, or evolving operational conditions.

What is the difference between data poisoning and adversarial attacks?

Data poisoning attacks target the training phase of AI development by injecting malicious or misleading information into datasets used to train AI models. These attacks corrupt the learning process, causing models to make systematic errors or exhibit unexpected behaviors that benefit the attacker.

Adversarial attacks occur during the operational phase by manipulating inputs to deployed AI systems to cause immediate incorrect outputs. These attacks exploit vulnerabilities in trained models without modifying the underlying training data or model parameters.

Data poisoning requires access to training datasets and typically affects all future predictions made by compromised models. Adversarial attacks target specific interactions with deployed systems and can be conducted by external users with normal system access, making them more accessible to attackers but typically affecting individual predictions rather than entire system behavior.
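
The training-time nature of data poisoning can be illustrated with a deliberately tiny model. The sketch below assumes a one-feature fraud detector whose decision threshold is the midpoint between the mean of legitimate samples and the mean of fraudulent samples; the data, the labels, and the function `train_threshold` are invented for illustration. An attacker who can inject mislabeled records into the training set moves that threshold, which changes every later prediction.

```python
def train_threshold(samples):
    """Toy nearest-centroid model on one feature.

    samples: list of (feature_value, label) with label 0 = legitimate,
    1 = fraud. Returns the midpoint between the two class means; values
    above the threshold are treated as fraud.
    """
    legit = [x for x, label in samples if label == 0]
    fraud = [x for x, label in samples if label == 1]
    return (sum(legit) / len(legit) + sum(fraud) / len(fraud)) / 2


clean = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]

# Poisoning: the attacker injects fraud-like records labeled as legitimate.
poisoned = clean + [(9.0, 0), (9.5, 0), (10.0, 0)]

clean_threshold = train_threshold(clean)
poisoned_threshold = train_threshold(poisoned)

suspicious_value = 6.5
print(clean_threshold, suspicious_value > clean_threshold)
print(poisoned_threshold, suspicious_value > poisoned_threshold)
```

With clean data the threshold is 5.0 and a value of 6.5 is flagged. After three poisoned records are added the threshold rises to 6.875 and the same value passes unflagged, without the attacker ever touching the deployed system's inputs.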

How do model theft attacks compromise business AI systems?

Model theft attacks involve systematically querying AI systems to reverse-engineer their functionality and create unauthorized copies that replicate the original model’s behavior. Attackers submit carefully crafted inputs and analyze the outputs to understand the model’s decision-making logic and parameters.

These attacks typically involve thousands or millions of queries designed to extract maximum information about the model’s training data, architecture, and learned patterns. Attackers can then recreate competitive AI systems without investing in original research and development, potentially violating intellectual property rights and trade secrets.

Model theft attacks threaten organizations by enabling competitors to copy valuable AI capabilities, exposing proprietary algorithms and training data, and potentially revealing sensitive business logic embedded in AI decision-making processes. Organizations with valuable AI models face ongoing risks from persistent extraction attempts that may continue over months or years.
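
The query-and-analyze pattern is easiest to see on the simplest possible target. The sketch below assumes a hypothetical prediction endpoint, `victim_predict`, that wraps a secret linear model and returns raw scores. Under that assumption an attacker can recover the bias with one query and each weight with one more, so a model with n features leaks completely after n + 1 queries. Real models are nonlinear and often return only labels or rounded scores, which is why practical extraction needs the thousands or millions of queries described above.

```python
def victim_predict(x):
    """Stand-in for a remote prediction API (hypothetical).

    The attacker can call this function but cannot read the secret
    parameters inside it.
    """
    secret_weights = [0.8, -0.3, 1.5]
    secret_bias = 0.25
    return sum(w * xi for w, xi in zip(secret_weights, x)) + secret_bias


def extract_linear_model(query, n_features):
    """Recover a linear model's parameters using n_features + 1 queries."""
    zero = [0.0] * n_features
    bias = query(zero)  # an all-zero input reveals the bias directly
    weights = []
    for i in range(n_features):
        probe = zero.copy()
        probe[i] = 1.0  # a unit input isolates the i-th weight
        weights.append(query(probe) - bias)
    return weights, bias


stolen_weights, stolen_bias = extract_linear_model(victim_predict, 3)


def stolen_predict(x):
    """The attacker's copy, built only from observed outputs."""
    return sum(w * xi for w, xi in zip(stolen_weights, x)) + stolen_bias


test_input = [2.0, 4.0, -1.0]
print(victim_predict(test_input), stolen_predict(test_input))
```

The copy reproduces the victim's outputs on inputs the attacker never queried. Defenses commonly discussed for this class of attack include rate limiting, returning less precise outputs, and monitoring for systematic probing patterns.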