Artificial intelligence systems now make decisions that affect millions of people daily, from loan approvals to medical diagnoses. As these systems become more powerful and widespread, organizations face new challenges in ensuring their AI operates safely, fairly, and transparently. The stakes have never been higher for getting AI governance right.

AI TRiSM (Trust, Risk, and Security Management) provides a comprehensive framework for managing these challenges. The approach helps organizations build trustworthy AI systems while meeting growing regulatory requirements. Major technology companies and enterprises across industries are implementing AI TRiSM frameworks to maintain competitive advantage while minimizing risks.

The framework has gained significant attention as new regulations like the EU AI Act take effect and organizations realize the potential consequences of poorly managed AI systems. Understanding AI TRiSM concepts has become essential for leaders responsible for AI strategy and implementation.

What Is AI TRiSM?

AI TRiSM stands for Artificial Intelligence Trust, Risk, and Security Management. Gartner developed this framework to help organizations govern AI systems safely and ethically throughout their entire lifecycle.

The framework addresses the unique challenges that AI systems create compared to traditional software. AI systems can make decisions, learn from data, and change their behavior over time, which requires specialized approaches to management and oversight.

AI TRiSM framework showing the four interconnected components. Source: Medium

The Four Components of AI TRiSM

AI TRiSM breaks down into four interconnected components that work together to ensure responsible AI deployment:

- Trust — reliability in AI system performance and explainability of decisions

- Risk — operational risks, compliance violations, and reputational damage

- Security — protection of data, models, and system integrity

- Management — organizational structure for coordinating oversight and governance

Trust encompasses how well AI systems perform consistently and whether users can understand how these systems make decisions. When a loan application gets denied, trust means the AI system provides accurate decisions and clear explanations for its reasoning.

Risk covers three main areas that can impact organizations. Operational risk includes AI system failures or performance issues. Compliance risk involves violations of laws or regulations. Reputational risk means damage to an organization’s credibility from AI mistakes or unethical behavior.

Security protects AI systems from various threats. Data protection safeguards training information and user data. Model integrity prevents unauthorized changes to AI algorithms. These security measures help ensure AI systems work as intended without interference.

Management provides the organizational structure that coordinates the other three components. It assigns ownership for oversight and governance so that trust, risk, and security activities are carried out consistently rather than in isolation.

Why AI TRiSM Matters for Organizations

Organizations have rapidly adopted artificial intelligence without establishing proper oversight frameworks. This creates significant vulnerabilities that can compromise business operations, regulatory compliance, and stakeholder trust.

New Risks From AI Systems

AI systems introduce risks that traditional IT governance can’t address. AI hallucinations occur when systems generate false information that appears credible. Algorithmic bias emerges when training data contains historical prejudices, causing unfair decisions across different populations.

AI model outputs can also change over time through model drift: performance degrades as real-world conditions shift away from the original training environment. These systems can produce results that seem reasonable but contain subtle errors that are difficult to detect.

Regulatory Requirements Drive Governance

Government agencies worldwide have introduced regulations and guidance specifically targeting AI systems. The European Union’s AI Act establishes comprehensive requirements for high-risk AI applications. In the United States, the National Institute of Standards and Technology (NIST) has released the AI Risk Management Framework.

These regulations require organizations to demonstrate systematic oversight of AI systems. Documentation of development processes, ongoing monitoring of performance, and evidence of risk mitigation measures become mandatory compliance requirements.

Business Impact of AI Failures

AI system failures can rapidly damage customer relationships through visible mistakes or biased decisions. When AI systems make errors in customer-facing applications, incidents often receive significant public attention and social media amplification.

Customer trust in AI-powered services depends on consistent, fair, and reliable performance. Organizations that experience high-profile AI failures may lose competitive advantages as customers migrate to competitors with more trustworthy implementations.

The Four Pillars of AI TRiSM

Gartner’s AI TRiSM framework rests on four fundamental pillars that organizations use to manage artificial intelligence systems responsibly. Each pillar addresses specific aspects of AI governance.

Explainability and Transparency

AI explainability refers to the ability to understand how artificial intelligence systems make decisions. When an AI model recommends a loan approval or suggests a medical treatment, stakeholders should be able to examine the reasoning behind that decision.

Model interpretability involves techniques that reveal which data inputs influenced specific AI outputs. If an AI system denies a credit application, interpretability tools can show whether income level, credit history, or employment status carried the most weight in that decision.
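
As a minimal sketch of this idea, assume a hypothetical linear credit-scoring model. The weights, baseline applicant, and feature names below are illustrative only. For a linear model, each feature's contribution is its weight multiplied by how far the applicant deviates from the baseline; for non-linear models, techniques such as SHAP or LIME approximate the same kind of attribution.

```python
# Hypothetical linear scoring model; weights and baseline are illustrative, not real.
WEIGHTS = {"income": 0.4, "credit_history": 0.5, "employment_years": 0.1}
BASELINE = {"income": 0.5, "credit_history": 0.5, "employment_years": 0.5}


def feature_contributions(applicant):
    """Return each feature's contribution to the score relative to the baseline."""
    return {
        name: WEIGHTS[name] * (applicant[name] - BASELINE[name])
        for name in WEIGHTS
    }


def top_factor(applicant):
    """Name the feature whose contribution has the largest absolute size."""
    contributions = feature_contributions(applicant)
    return max(contributions, key=lambda name: abs(contributions[name]))


# Features are assumed to be pre-scaled to the range 0..1.
applicant = {"income": 0.6, "credit_history": 0.1, "employment_years": 0.9}
contribs = feature_contributions(applicant)
print(top_factor(applicant), contribs)
```

Here the weak credit history pulls the score down more than the other two features push it up, so it is reported as the deciding factor.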

Audit trails document every step in an AI system’s decision-making process. These records track data inputs, model versions, processing steps, and final outputs. Audit trails enable organizations to recreate and examine past decisions, supporting accountability and improvement efforts.
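
One possible way to make such records tamper-evident is to chain them with hashes, so that altering an old entry invalidates everything after it. The sketch below is an assumption about how this could be done, not a prescribed AI TRiSM mechanism; the `AuditTrail` class and its field names are hypothetical, and timestamps are omitted to keep the example deterministic.

```python
import hashlib
import json


def _digest(record):
    """Hash a record deterministically by serializing it with sorted keys."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class AuditTrail:
    """Append-only decision log in which each entry stores the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def record(self, model_version, inputs, output):
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        body = {
            "model_version": model_version,
            "inputs": inputs,
            "output": output,
            "prev_hash": prev_hash,
        }
        entry = dict(body, hash=_digest(body))
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True only if no entry was altered, removed, or reordered."""
        prev_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("v1.2", {"income": 52000}, "approved")
trail.record("v1.2", {"income": 18000}, "denied")
print(trail.verify())
```

Because every entry includes the model version and inputs, a past decision can be replayed against the same model to check that it still produces the logged output.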

ModelOps and Lifecycle Management

ModelOps represents the operational framework for managing AI models throughout their entire lifecycle. This approach adapts principles from software development operations to address the unique challenges of AI systems in production environments.

Complete AI model lifecycle management process. Source: NexaStack

The AI model lifecycle begins with development and training, progresses through testing and validation, continues with deployment and monitoring, and concludes with retirement or replacement. ModelOps ensures each phase receives appropriate oversight and documentation.

Version control for AI models tracks changes to algorithms, training data, and system configurations. Unlike traditional software, AI models can behave differently even with identical code if training data changes. Version control systems maintain detailed records of model iterations and performance characteristics.
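
To illustrate why code versioning alone is not enough, the following sketch derives a model identifier from the code version, the training data, and the configuration together. The `model_fingerprint` helper, the `git:abc123` label, and the sample rows are all hypothetical; real model registries typically hash dataset files rather than in-memory rows.

```python
import hashlib
import json


def model_fingerprint(code_version, training_rows, config):
    """Hash everything that determines model behavior into one short identifier."""
    payload = json.dumps(
        {"code": code_version, "data": training_rows, "config": config},
        sort_keys=True,
    ).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


rows_v1 = [{"income": 52000, "approved": 1}, {"income": 18000, "approved": 0}]
rows_v2 = rows_v1 + [{"income": 31000, "approved": 1}]
config = {"learning_rate": 0.1, "max_depth": 3}

# Same code and configuration, different training data.
fingerprint_v1 = model_fingerprint("git:abc123", rows_v1, config)
fingerprint_v2 = model_fingerprint("git:abc123", rows_v2, config)
print(fingerprint_v1, fingerprint_v2)
```

The two fingerprints differ even though the code label is identical, which is the situation a code-only version history would miss.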

Performance monitoring involves continuous assessment of AI model accuracy, speed, and reliability in production environments. Models can experience drift where their performance degrades over time due to changing data patterns or environmental conditions.
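
One common way to quantify this kind of input drift is the Population Stability Index (PSI), which compares how a feature is distributed in production against a training-time baseline. The sketch below is a simplified version: the bin count, the small floor used to avoid taking the logarithm of zero, and the 0.25 alert level (a widely used rule of thumb, not a standard) are all assumptions.

```python
import math


def psi(expected, actual, bins=5):
    """Population Stability Index between a baseline sample and a current sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Floor each share so empty bins do not produce log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]        # feature values seen at training time
shifted = [0.5 + i / 200 for i in range(100)]   # production values skewed upward

drift_score = psi(baseline, shifted)
print(round(drift_score, 3), drift_score > 0.25)
```

A score near zero means the two samples are distributed alike; larger values indicate that production data has moved away from what the model was trained on.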

Security and Resilience

Comparison between traditional IT security and AI-specific security challenges. Source: ResearchGate

AI systems face unique security threats that differ from traditional cybersecurity challenges. Adversarial attacks involve deliberately crafted inputs designed to fool AI systems into making incorrect decisions. Attackers might add imperceptible modifications to images that cause AI vision systems to misclassify objects.
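
The mechanics can be shown on a toy model. The sketch below assumes a hypothetical linear classifier and nudges every input feature by a small amount in the direction that lowers its score, in the style of the fast gradient sign method. The weights and input are invented for illustration; attacks on real vision models follow the same principle using the model's gradients.

```python
WEIGHTS = [1.0, -2.0, 0.5]  # hypothetical classifier weights


def score(x):
    return sum(w * xi for w, xi in zip(WEIGHTS, x))


def classify(x):
    return 1 if score(x) > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def adversarial_example(x, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score."""
    # For a linear model the gradient of the score with respect to x is WEIGHTS.
    return [xi - epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]


original = [0.3, 0.1, 0.2]
perturbed = adversarial_example(original, epsilon=0.1)
print(classify(original), classify(perturbed))
```

No feature moves by more than 0.1, yet the predicted class flips, which is why small, hard-to-notice changes can be enough to mislead a model.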

Model poisoning occurs when attackers corrupt training data to influence AI system behavior. By introducing biased or malicious examples during the training process, attackers can create models that appear to function normally but fail in specific circumstances.

Data breaches in AI contexts can expose personal information and reveal sensitive details about model architecture and training processes. Attackers who access AI training data might reconstruct private information about individuals or reverse-engineer proprietary algorithms.

AI-specific security controls include input validation systems that detect suspicious data submissions. Differential privacy techniques add mathematical noise to datasets, protecting individual privacy while preserving overall data utility for AI training.

Privacy and Data Protection

Privacy and data protection in AI systems involve multiple layers of safeguards for personal information throughout the AI lifecycle. Data governance establishes policies for collecting, storing, processing, and sharing information used to train and operate AI models.

Consent management ensures individuals understand how their data will be used in AI systems. Privacy regulations like GDPR require explicit consent for certain types of data processing and grant individuals rights to access, correct, or delete their information.

Privacy-preserving techniques enable AI development while protecting individual privacy. Differential privacy adds calibrated statistical noise to data, query results, or the training process, which mathematically limits how much any output can reveal about a specific individual while preserving the overall patterns needed for AI training.
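
A small example of differential privacy is the Laplace mechanism applied to a counting query. The sketch below assumes a privacy budget `epsilon` of 0.5 and a made-up list of ages; the helper names are hypothetical. A count changes by at most 1 when one person is added or removed, so the noise scale is 1 / epsilon.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng):
    """Return a noisy count; a counting query has sensitivity 1."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(42)  # seeded only to make the example repeatable
ages = [23, 35, 41, 29, 62, 57, 33, 48]
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(noisy)
```

A smaller `epsilon` gives stronger privacy but noisier answers, so the value is a policy decision as much as a technical one.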

Federated learning allows multiple organizations to collaboratively train AI models without sharing raw data. Each participant trains models on their local data, then shares only the resulting algorithm updates rather than the underlying information.
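
The aggregation step can be sketched as a weighted average of the participants' model parameters, in the spirit of federated averaging. The participant data below is invented, and a production system would add secure aggregation and many training rounds; this only shows that raw data never needs to leave each participant.

```python
def federated_average(updates):
    """Combine local model weights, weighting each by its number of training samples.

    updates: list of (weights, n_samples) pairs, one per participant.
    """
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


# Each participant shares only its locally trained weights and a sample count.
hospital_a = ([1.0, 2.0], 100)
hospital_b = ([3.0, 4.0], 300)

global_weights = federated_average([hospital_a, hospital_b])
print(global_weights)
```

The participant with more data has proportionally more influence on the shared model, which is the usual default but can be changed if smaller participants need more weight.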

Key Benefits of AI TRiSM Implementation

Business value and ROI metrics from AI TRiSM implementation. Source: Techstack

AI TRiSM implementation delivers measurable business outcomes that directly impact organizational performance. Organizations implementing comprehensive frameworks report improvements across multiple operational dimensions.

Enhanced Model Performance

AI TRiSM frameworks establish systematic monitoring and maintenance processes that preserve model accuracy over extended periods. Model drift detection systems continuously monitor AI performance against established baselines, identifying performance degradation before it impacts business operations.

Version control and change management processes ensure that model updates undergo rigorous testing before deployment. Organizations maintain comprehensive model catalogs that track performance metrics, dependencies, and deployment configurations.

Streamlined Regulatory Compliance

AI TRiSM frameworks align organizational practices with regulatory requirements including the EU AI Act and NIST frameworks. Automated documentation systems generate compliance reports and audit trails that demonstrate adherence to regulatory requirements.

Cross-jurisdictional compliance becomes manageable through standardized governance processes that accommodate multiple regulatory frameworks simultaneously. Organizations operating globally benefit from consistent approaches that address diverse regional requirements.

Reduced Operational Risk

AI TRiSM implementation mitigates technical risks including security vulnerabilities, adversarial attacks, and system failures. Systematic risk assessment processes identify potential issues before they escalate into costly operational problems.

Financial risk reduction occurs through improved resource allocation, reduced incident response costs, and decreased regulatory penalties. Reputational risk management improves through transparent AI practices and proactive stakeholder communication.

AI TRiSM and Regulatory Compliance

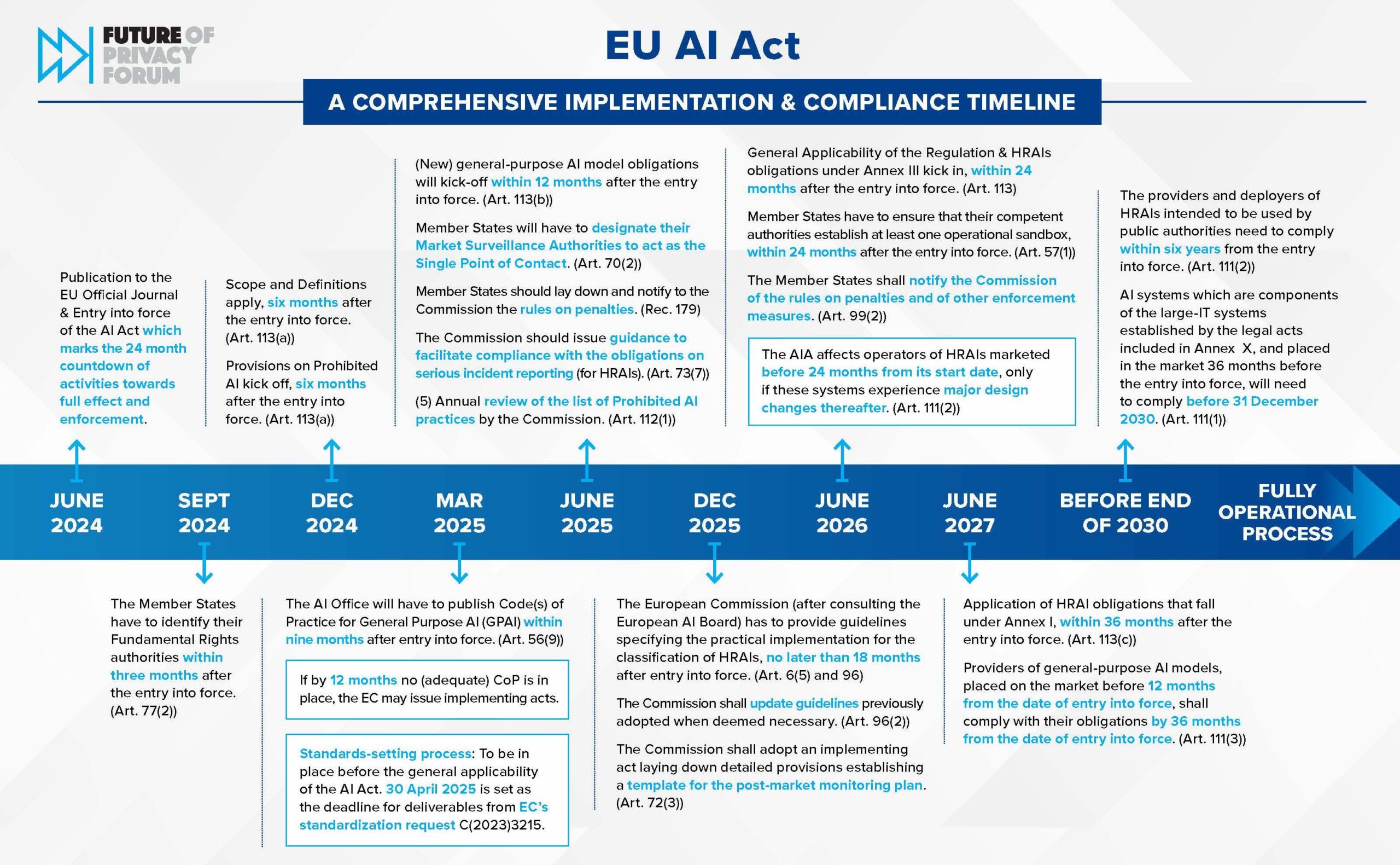

EU AI Act implementation timeline and compliance requirements. Source: The Future of Privacy Forum

The regulatory landscape around artificial intelligence has transformed dramatically, creating strong drivers for AI TRiSM adoption. Governments recognize that AI systems can cause significant harm without proper oversight, leading to comprehensive frameworks that require trustworthy AI practices.

NIST AI Risk Management Framework

The National Institute of Standards and Technology (NIST) developed the AI Risk Management Framework as voluntary guidance to help organizations build trustworthy AI systems. The framework establishes four core functions that guide systematic risk management throughout the AI lifecycle.

- Govern — establishes organizational policies and oversight mechanisms for AI risk management

- Map — provides context for understanding AI system risks by identifying stakeholders and potential impacts

- Measure — employs various methods to analyze and monitor AI risks, including tracking metrics for trustworthy characteristics

- Manage — implements risk controls and mitigation strategies with continuous monitoring processes

NIST emphasizes that trustworthy AI systems demonstrate specific characteristics: validity and reliability, safety, security and resilience, accountability and transparency, explainability and interpretability, privacy enhancement, and fairness with managed bias.

EU AI Act Compliance Requirements

The European Union AI Act represents the world’s first comprehensive legal framework for AI regulation, establishing mandatory requirements based on risk categorization. The Act creates a risk-based approach where higher-risk AI systems face more stringent regulatory requirements.

High-risk AI systems under the EU AI Act face extensive compliance requirements:

- Comprehensive risk management systems throughout the AI lifecycle

- Data governance and quality management processes

- Detailed technical documentation maintenance

- Record-keeping systems for AI operations

- Transparency and information provision to users

- Human oversight of AI decisions

- Accuracy, robustness, and cybersecurity measures

The Act prohibits certain AI practices entirely, including AI systems that use subliminal or manipulative techniques to materially distort behavior, social scoring that leads to unjustified or detrimental treatment, and real-time remote biometric identification in publicly accessible spaces for law enforcement, subject to limited exceptions.

Industry-Specific Standards

Healthcare organizations face unique AI governance requirements due to the life-critical nature of medical applications. The FDA provides specific guidance for AI and machine learning in medical devices, requiring organizations to demonstrate safety and effectiveness through clinical validation.

Financial services organizations operate under extensive regulatory frameworks that include model-specific guidance from banking regulators. The Federal Reserve’s SR 11-7 supervisory guidance on model risk management sets expectations for how banks develop, validate, and govern models, which extends to AI systems used in decision-making processes.

Government agencies deploying AI systems face unique transparency and accountability requirements, often including public disclosure obligations and citizen rights to explanation for AI-driven decisions. Canada’s Directive on Automated Decision-Making requires risk assessments and mitigation measures based on system impact scores.

How to Implement AI TRiSM Successfully

Organizations can implement AI Trust, Risk, and Security Management through a structured approach. Each step builds on the previous one to create a comprehensive governance framework.

Assess Current AI Governance Maturity

Start by conducting a thorough inventory of all AI systems within your organization. Document every AI model, algorithm, and automated decision-making tool currently in use across all departments, including development tools, third-party AI services, and experimental projects.

AI risk assessment matrix for categorizing systems by risk level. Source: AWS

Evaluate your current governance practices against the four AI TRiSM pillars. Review existing policies for explainability requirements, model lifecycle management, security controls, and privacy protections. Identify gaps between current practices and AI TRiSM standards.

Map your regulatory obligations based on your industry and operating locations. Financial services organizations face different requirements than healthcare companies. Organizations operating in the European Union must consider the AI Act requirements.

Develop Comprehensive Risk Management Policies

Create written policies that address each stage of the AI lifecycle from development through decommissioning. These policies cover data collection and quality standards, model development and testing procedures, deployment approval processes, and ongoing monitoring requirements.

Establish clear criteria for different risk levels of AI applications. High-risk systems that affect individual rights or safety require stricter controls than low-risk internal automation tools. Document the specific requirements for each risk category.
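
Written criteria like these can also be captured in a form that tools can check. The sketch below is one hypothetical encoding: the three tiers, the attributes used to assign them, and the control lists are assumptions for illustration and would need to reflect your own policies and regulatory obligations.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    name: str
    affects_individual_rights: bool
    safety_critical: bool
    customer_facing: bool


# Hypothetical control requirements per tier.
REQUIRED_CONTROLS = {
    "high": ["human oversight", "bias testing", "audit trail", "pre-deployment approval"],
    "medium": ["bias testing", "audit trail"],
    "low": ["inventory entry"],
}


def risk_tier(system):
    """Assign a tier using the strictest criterion the system meets."""
    if system.affects_individual_rights or system.safety_critical:
        return "high"
    if system.customer_facing:
        return "medium"
    return "low"


loan_model = AISystem("loan-approval", True, False, True)
log_sorter = AISystem("internal-log-triage", False, False, False)

for system in (loan_model, log_sorter):
    tier = risk_tier(system)
    print(system.name, tier, REQUIRED_CONTROLS[tier])
```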

Define data governance standards for AI systems. Specify how training data is collected, validated, and stored. Include requirements for data quality, bias testing, and documentation, and address retention periods and deletion procedures.

Deploy Monitoring and Control Systems

Implement centralized inventory management systems that track all AI assets across your organization. These systems maintain records of model versions, performance metrics, compliance status, and ownership information.

Deploy automated monitoring tools that can detect model drift, performance changes, and potential bias issues. Set up alerting systems that notify relevant teams when metrics fall outside acceptable ranges, configuring different alert thresholds based on each AI system’s risk level.
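
Risk-based alerting can be as simple as a lookup of tolerances per tier. In the sketch below, the tolerated accuracy drops and the `check_metric` helper are hypothetical; the point is only that the same measured change can trigger an alert for a high-risk system and pass for a low-risk one.

```python
# Hypothetical limits: maximum tolerated accuracy drop from baseline, per risk tier.
MAX_ACCURACY_DROP = {"high": 0.01, "medium": 0.03, "low": 0.05}


def check_metric(system_name, tier, baseline_accuracy, current_accuracy):
    """Return an alert message if accuracy fell more than the tier allows, else None."""
    drop = baseline_accuracy - current_accuracy
    limit = MAX_ACCURACY_DROP[tier]
    if drop > limit:
        return (
            f"ALERT {system_name}: accuracy dropped {drop:.3f} "
            f"(limit {limit:.2f} for {tier}-risk systems)"
        )
    return None


high_risk_alert = check_metric("loan-approval", "high", 0.92, 0.90)
low_risk_alert = check_metric("internal-log-triage", "low", 0.92, 0.90)
print(high_risk_alert)
print(low_risk_alert)
```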

Establish continuous testing frameworks for AI models in production. Regular validation ensures models continue to perform as expected and comply with governance requirements, including both technical performance tests and fairness assessments.
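
A fairness assessment can start with a simple comparison of outcomes across groups. The sketch below computes a disparate impact ratio on invented decisions for two groups labeled A and B. The 0.8 cutoff follows the four-fifths rule of thumb used in US employment contexts and is an assumption here, not a universal legal standard; the code also assumes at least one group has a non-zero approval rate.

```python
def approval_rates(decisions):
    """decisions: list of (group, approved) pairs; returns approval rate per group."""
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + (1 if ok else 0)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(decisions)
    return min(rates.values()) / max(rates.values())


# Invented sample: group A approved 8 of 10, group B approved 5 of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

ratio = disparate_impact_ratio(decisions)
passes_check = ratio >= 0.8
print(round(ratio, 3), passes_check)
```

A failing ratio does not prove the model is unfair, but it is a signal that the decisions deserve closer review before the model stays in production.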

Train Teams and Establish Clear Accountability

Organizational chart showing AI TRiSM roles and responsibilities. Source: ResearchGate

Assign specific roles and responsibilities for AI governance across your organization. Technical teams handle model development and monitoring, while business teams manage risk assessment and stakeholder communication. Legal and compliance teams oversee regulatory requirements.

Provide training programs tailored to different roles within your organization. Data scientists receive training on bias detection and explainability techniques. Business users learn about AI risk assessment and compliance requirements. Executive teams learn governance oversight and decision-making frameworks.

Establish regular review cycles for AI governance practices. Monthly technical reviews assess model performance and compliance status. Quarterly business reviews evaluate risk management effectiveness and policy updates. Annual strategic reviews assess overall governance maturity.

Frequently Asked Questions About AI TRiSM

How much does implementing an AI TRiSM framework typically cost for mid-sized companies?

AI TRiSM implementation costs vary significantly based on organization size, existing AI infrastructure, and desired maturity level. Mid-sized companies might spend tens of thousands to hundreds of thousands of dollars on comprehensive TRiSM platforms and processes. The investment typically includes software tools, training programs, and consulting services to establish governance frameworks.

What specific technical skills does my team need to manage AI explainability requirements?

Technical teams implementing AI explainability need skills in interpretable machine learning techniques, model visualization tools, and audit trail systems. Data scientists require knowledge of techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) values. Software engineers need experience with documentation systems and automated reporting tools that generate model cards and decision explanations.

How long does it take to achieve full AI TRiSM compliance with the EU AI Act?

AI TRiSM implementation timelines for EU AI Act compliance range from several months to multiple years depending on the organization’s current AI maturity and the risk level of their AI systems. Organizations with basic AI governance can establish core compliance capabilities within 6-12 months. Companies building both AI capabilities and governance structures simultaneously typically require 18-36 months for comprehensive implementation.

Can organizations use existing IT governance frameworks as a foundation for AI TRiSM?

Organizations can successfully build AI TRiSM on existing IT governance frameworks by extending current risk management, security, and compliance programs to address AI-specific requirements. Existing change management processes, incident response procedures, and audit frameworks provide foundations for AI governance while requiring additional controls for algorithmic bias, model drift, and AI-specific security threats.

Which AI systems require the most stringent TRiSM controls under current regulations?

High-risk AI systems under regulations like the EU AI Act require the most stringent TRiSM controls. These include AI systems used in biometric identification, critical infrastructure management, educational or vocational training access, employment decisions, essential private and public services access, law enforcement applications, migration and asylum processes, and administration of justice and democratic processes.