The European Union’s Artificial Intelligence Act is the world’s first comprehensive legal framework governing AI systems, establishing rules for how artificial intelligence can be developed and used across Europe. This groundbreaking regulation officially entered into force on August 1, 2024, creating binding obligations for companies worldwide that provide AI services to European users.

The legislation introduces a risk-based approach that sorts AI systems into levels according to their potential for harm. AI practices that pose an unacceptable risk are banned outright, while high-risk applications must meet strict safety and transparency requirements. The Act's scope extends beyond EU borders. It affects any organization that places AI systems on the EU market or whose AI output is used in the EU, wherever that organization is based.

The regulatory framework establishes substantial financial penalties reaching up to €35 million or 7 percent of global annual revenue for serious violations. Organizations across all industries must now assess their AI systems and implement compliance measures according to a phased timeline that extends through 2027. The Act represents a fundamental shift in AI governance, setting standards that many expect will influence regulatory approaches worldwide.

What Is the EU AI Act

The EU AI Act stands as the world’s first comprehensive artificial intelligence regulation, marking a historic milestone in technology governance. Formally known as Regulation (EU) 2024/1689, this legislation establishes harmonized rules for artificial intelligence systems across all 27 European Union member states.

Legal Framework and Core Objectives

The regulation was published in the Official Journal of the European Union on July 12, 2024, and entered into force twenty days later, on August 1, 2024. Because it is a regulation rather than a directive, the AI Act applies directly in every EU country without requiring national implementing legislation.

The regulation pursues four core objectives. The first is safety: AI systems should operate reliably without harming individuals or society. The second is protection of fundamental rights, safeguarding privacy, non-discrimination, and human dignity in AI applications.

The third is support for innovation, balancing protective measures with the regulatory certainty that lets AI development continue within clear boundaries. The fourth is a functioning single market: one set of rules replaces a patchwork of national approaches, which makes cross-border AI deployment and trade easier.

Global Reach and Application

The EU AI Act applies to any entity that provides AI systems on the EU market, regardless of where it is located, giving the Act an extraterritorial reach similar to the GDPR's. Organizations based outside Europe must comply if they offer AI systems or services to users in the European Union, or if the output of their AI systems is used there.

Source: European Court of Auditors – European Union

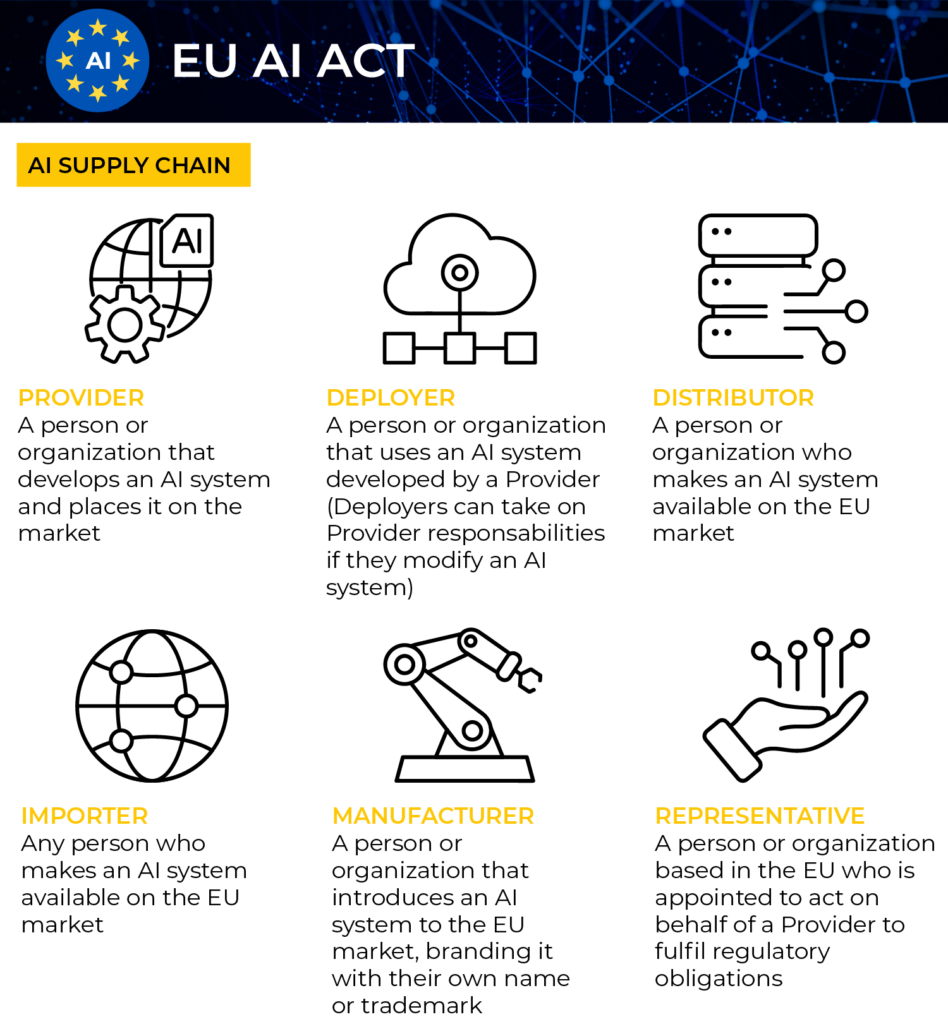

The regulation covers providers who develop AI systems, deployers who use AI systems professionally, importers who bring AI systems into the EU market, and distributors who make AI systems available in the EU, as well as product manufacturers that integrate AI into their products and the authorized representatives of non-EU providers. This broad scope means every participant in the AI value chain carries some responsibility for compliance when serving European users.

How AI Systems Are Classified

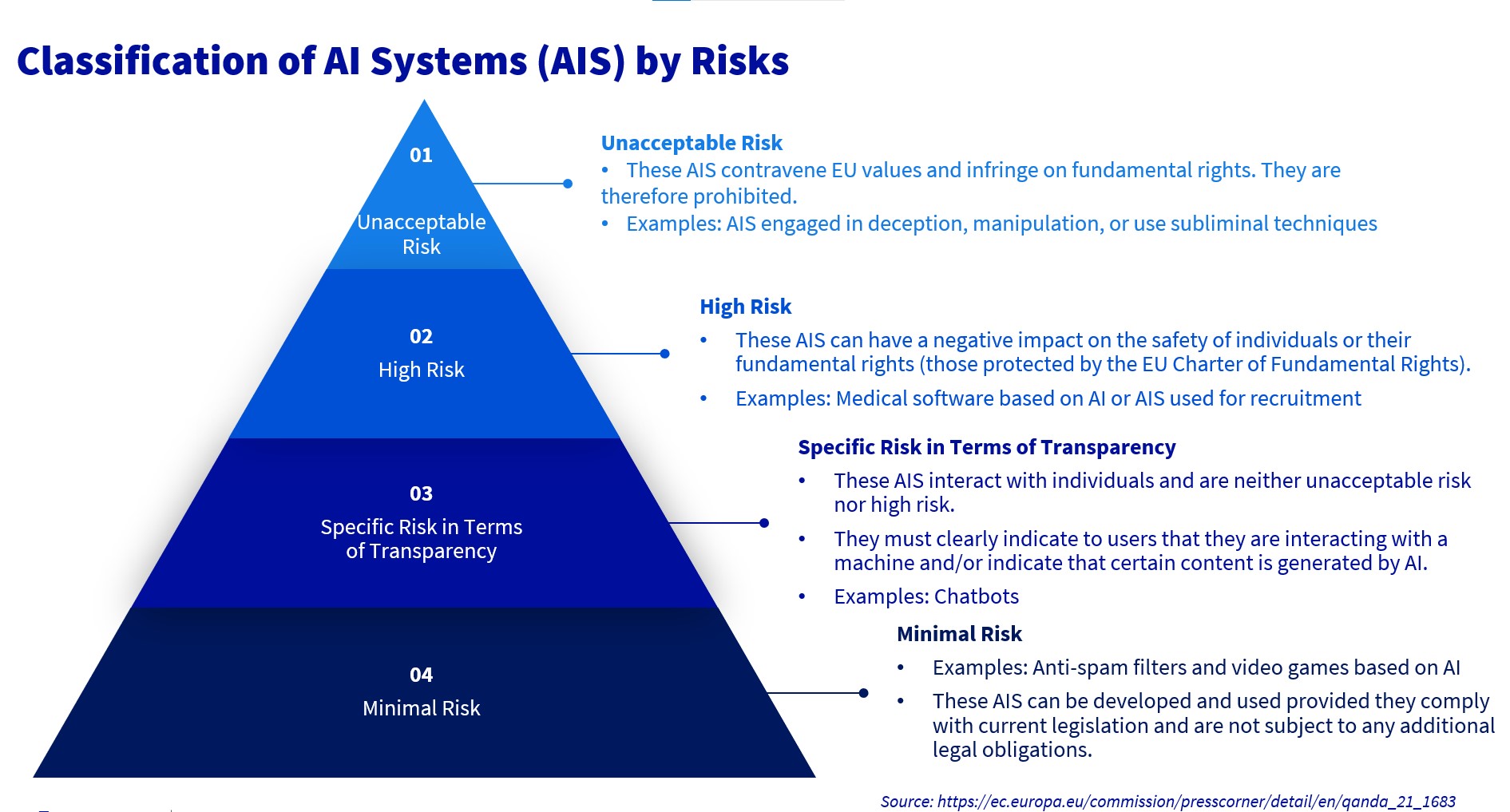

The EU AI Act organizes AI systems into four distinct risk categories that determine compliance requirements. Each category reflects the potential harm the AI system could cause to people’s safety, rights, or democratic processes.

Source: Trail-ML

Prohibited AI Systems

The EU completely prohibits AI systems that pose unacceptable risks to fundamental human rights and democratic values. These banned AI practices became illegal on February 2, 2025, across all EU member states.

The prohibited applications include:

- Subliminal or manipulative techniques: AI that distorts people's behavior through techniques they cannot consciously detect, or through deliberately manipulative or deceptive means, in ways that cause or are likely to cause significant harm

- Social scoring: AI that evaluates or classifies people based on their social behavior or personal characteristics, where the resulting score leads to detrimental or unfavorable treatment

- Real-time remote biometric identification in publicly accessible spaces for law enforcement: systems that identify people at a distance, for example through live facial recognition (with narrow exceptions, such as searching for victims, preventing imminent threats, or locating suspects of serious crimes)

- Emotion recognition in workplaces and schools: AI that infers the emotional states of workers or students (except for medical or safety purposes)

- Exploitation of vulnerabilities: systems that exploit people's age, disability, or specific social or economic situation to distort their behavior

High-Risk AI Applications

High-risk AI systems face the strictest compliance requirements because they operate in areas where errors or bias could significantly harm individuals or society. Organizations using these systems must implement comprehensive oversight, documentation, and safety measures.

Source: Forvis Mazars

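High-risk categories include AI used as a safety component in managing critical infrastructure such as electricity supply or road traffic, systems that evaluate student performance or decide on admissions, AI used for hiring, promotion, or firing decisions, and systems that evaluate eligibility for public benefits and services, including healthcare, or assess creditworthiness. Law enforcement AI for risk assessment, systems used in migration, asylum, and border control (such as examining visa applications), AI used in the administration of justice, and remote biometric identification systems also qualify as high-risk. AI systems that are safety components of products already regulated under EU law, such as medical devices, are high-risk as well.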

Limited Risk and Minimal Risk Systems

Limited risk AI systems trigger transparency requirements but face fewer regulatory restrictions. People must be told clearly, no later than their first interaction, that they are dealing with an AI system rather than a human, unless this is obvious from the context.

Limited risk transparency requirements apply to chatbots, AI-generated content, deepfakes, and emotion recognition systems used outside prohibited contexts. Minimal risk AI systems, which include most other AI applications such as spam filters, video games with AI characters, or entertainment recommendation systems, carry no risk-specific obligations under the Act.
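For a chatbot, the disclosure duty can be handled in the conversation layer. The sketch below is a minimal illustration under stated assumptions: `DisclosingChat` and `AI_DISCLOSURE` are hypothetical names, the wrapper accepts any function that turns a message into a reply, and the disclosure wording is invented, since the Act requires clear disclosure but does not prescribe specific text.

```python
from typing import Callable

# Assumed wording; the Act does not prescribe a specific disclosure text.
AI_DISCLOSURE = "You are chatting with an AI system, not a human."


class DisclosingChat:
    """Hypothetical wrapper: the first reply in each session carries an AI disclosure."""

    def __init__(self, generate_reply: Callable[[str], str]) -> None:
        self._generate_reply = generate_reply  # any function mapping a message to a reply
        self._disclosed = False

    def reply(self, user_message: str) -> str:
        answer = self._generate_reply(user_message)
        if not self._disclosed:
            # Disclose at the latest on the first interaction of the session.
            self._disclosed = True
            return f"{AI_DISCLOSURE}\n\n{answer}"
        return answer


chat = DisclosingChat(lambda msg: f"Echo: {msg}")
print(chat.reply("Hello"))
print(chat.reply("Still there?"))
```

Putting the disclosure in a wrapper keeps it independent of the underlying model, so swapping vendors does not silently drop it.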

Who Must Comply With the EU AI Act

The EU AI Act applies to organizations according to their role in the AI value chain and their connection to the EU market. Compliance obligations depend on whether you develop AI systems, deploy them for business purposes, or import or distribute them.

Source: DPO Centre

Geographic Scope

The EU AI Act applies to any organization that places AI systems on the European market, regardless of where the organization is located. A company based in Silicon Valley that offers AI services to European customers must comply with the Act’s requirements.

The Act covers AI systems placed on the market or used within EU borders, AI systems whose output is used in the EU, and AI services provided to EU users. The provider's geographic location does not determine compliance obligations; the connection to the EU market does.
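The scope triggers above can be turned into a first-pass screening question for each system in a portfolio. This is a rough sketch, not a legal test: `AIActivity` and `may_be_in_scope` are hypothetical names, and the only exclusion modelled is systems used exclusively for military, defence, or national security purposes. Other exclusions and edge cases in Article 2 still need legal review.

```python
from dataclasses import dataclass


@dataclass
class AIActivity:
    """Facts about one AI system and how an organization supplies or uses it (hypothetical model)."""
    placed_on_eu_market: bool           # placed on the EU market or put into service in the EU
    deployer_in_eu: bool                # the deploying organization is established or located in the EU
    output_used_in_eu: bool             # the system's output is used in the EU
    exclusively_military: bool = False  # exclusively military, defence, or national security use


def may_be_in_scope(activity: AIActivity) -> bool:
    """Rough first-pass screen; any True result should go to legal review."""
    if activity.exclusively_military:
        return False
    return (
        activity.placed_on_eu_market
        or activity.deployer_in_eu
        or activity.output_used_in_eu
    )


# A US provider with no EU sales whose system's output is used by an EU client:
print(may_be_in_scope(AIActivity(False, False, True)))  # True
```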

Provider vs Deployer Roles

Providers develop an AI system or general-purpose AI model, or have one developed, and place it on the market or put it into service under their own name or trademark, whether for payment or free of charge. Software companies that create AI applications, technology firms that build machine learning models, and startups that develop AI-powered products typically act as providers.

Deployers use AI systems under their authority for professional purposes rather than personal use. A hospital using AI for medical diagnosis, a bank implementing AI for loan decisions, or a retailer deploying AI for inventory management operates as a deployer.

Provider obligations focus on technical development standards, safety requirements, and documentation. Deployers concentrate on operational implementation, monitoring system performance, protecting individual rights, and maintaining appropriate human control over AI-driven decisions.

Third-Party AI Services

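Organizations using AI services from vendors like OpenAI, Google Cloud AI, or Microsoft Azure AI typically act as deployers rather than providers. When a marketing agency uses ChatGPT to draft content or a consulting firm employs AI transcription services, these organizations are deployers under the Act. The roles can shift, however. An organization that puts its own name or trademark on a high-risk AI system, makes a substantial modification to one, or changes a system's intended purpose so that it becomes high-risk is treated as the provider of that system.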

In practice, responsibility is shared between AI vendors and their customers. Vendors, as providers, typically handle technical compliance requirements such as safety testing and documentation. Customers remain responsible for appropriate deployment, human oversight, and transparency measures within their specific use contexts.
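The provider/deployer distinction, including the cases in which a deployer takes on provider obligations, can be expressed as a small decision function. This is a simplified sketch: `Role` and `determine_role` are hypothetical names, and the function assumes the organization uses the system for professional purposes. It does not cover importers, distributors, or authorized representatives.

```python
from enum import Enum


class Role(Enum):
    PROVIDER = "provider"
    DEPLOYER = "deployer"


def determine_role(
    develops_and_markets_it: bool,
    rebrands_high_risk_system: bool = False,
    substantially_modifies_high_risk_system: bool = False,
    repurposes_into_high_risk: bool = False,
) -> Role:
    """Simplified role check for an organization using an AI system professionally."""
    # Developing a system (or having it developed) and offering it under your own
    # name or trademark makes you its provider.
    if develops_and_markets_it:
        return Role.PROVIDER
    # Rebranding, substantially modifying, or repurposing a system into a
    # high-risk use shifts provider obligations onto the organization.
    if (rebrands_high_risk_system or substantially_modifies_high_risk_system
            or repurposes_into_high_risk):
        return Role.PROVIDER
    return Role.DEPLOYER


# A marketing agency drafting copy with a vendor's chatbot:
print(determine_role(develops_and_markets_it=False))  # Role.DEPLOYER
```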

High-Risk AI System Requirements

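High-risk AI systems face the most stringent compliance requirements under the EU AI Act. Providers carry most of the obligations described below, while deployers must use the systems according to their instructions, assign human oversight to competent staff, and monitor operation, so safeguards apply throughout the entire system lifecycle.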

Risk Management and Documentation

Risk management systems operate throughout the complete AI system lifecycle, from initial development through deployment and ongoing operation. These systems identify foreseeable risks to health, safety, and fundamental rights when systems function according to their intended purpose and under conditions of reasonably foreseeable misuse.
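One common way to keep such a risk management process auditable is a risk register that scores and ranks identified risks. The Act does not prescribe any scoring method; the 1 to 5 likelihood and severity scales, the multiplication, and the action threshold of 10 below are all assumptions, and `Risk` and `RiskRegister` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Risk:
    description: str
    affects: str      # e.g. "health", "safety", "fundamental rights"
    likelihood: int   # 1 (rare) to 5 (almost certain); hypothetical scale
    severity: int     # 1 (negligible) to 5 (critical); hypothetical scale
    mitigation: str = ""

    @property
    def score(self) -> int:
        return self.likelihood * self.severity


@dataclass
class RiskRegister:
    risks: list[Risk] = field(default_factory=list)

    def add(self, risk: Risk) -> None:
        if not (1 <= risk.likelihood <= 5 and 1 <= risk.severity <= 5):
            raise ValueError("likelihood and severity must be between 1 and 5")
        self.risks.append(risk)

    def needing_action(self, threshold: int = 10) -> list[Risk]:
        """Risks scoring at or above the threshold, highest first."""
        flagged = [r for r in self.risks if r.score >= threshold]
        return sorted(flagged, key=lambda r: r.score, reverse=True)


register = RiskRegister()
register.add(Risk("Lower accuracy for older applicants", "fundamental rights", 3, 4))
register.add(Risk("Use outside the intended purpose", "safety", 2, 3))
print([r.description for r in register.needing_action()])
```

Re-running `needing_action` after each review cycle gives a simple record of which risks still require mitigation.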

Technical documentation requirements are extensive. Providers must draw up documentation before the system is placed on the market, covering system design, development processes, performance characteristics, and risk management measures, and must keep it up to date throughout the system lifecycle.

Data Governance and Quality

Training, validation, and testing datasets must meet strict quality standards. Data must be relevant, sufficiently representative, and, to the best extent possible, free of errors and complete in view of the system's intended purpose, with particular attention to biases that could lead to discrimination.

Providers must apply appropriate data governance practices covering collection, annotation, labeling, cleaning, and updating. They must also examine datasets for biases likely to affect health, safety, or fundamental rights, and take measures to detect, prevent, and mitigate them.
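A basic representativeness check compares how often each group appears in a dataset with its share of the population the system will serve. The sketch below assumes a hypothetical `representation_gaps` helper, made-up reference shares, and an arbitrary tolerance of 0.8, meaning a group is flagged when its share falls below 80 percent of the expected share. Real bias examinations go well beyond group counts.

```python
from collections import Counter
from collections.abc import Iterable


def representation_gaps(
    groups: Iterable[str],
    reference_shares: dict[str, float],
    tolerance: float = 0.8,
) -> dict[str, tuple[float, float]]:
    """Return groups whose share of the dataset falls below tolerance * reference share."""
    counts = Counter(groups)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("dataset is empty")
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        if observed < tolerance * expected:
            gaps[group] = (round(observed, 3), expected)  # (observed share, expected share)
    return gaps


training_age_bands = ["18-34"] * 60 + ["35-54"] * 35 + ["55+"] * 5
population = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}  # made-up reference shares
print(representation_gaps(training_age_bands, population))  # {'55+': (0.05, 0.35)}
```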

Human Oversight and Transparency

Human oversight mechanisms ensure meaningful human control over automated decision-making. Systems must be designed so that the people supervising them can understand their capabilities and limitations, monitor their operation, override or disregard their output, and intervene or halt the system when necessary to prevent or minimize risks.

Providers must supply clear instructions for use that explain system capabilities, limitations, and appropriate deployment contexts to deployers. Deployers that use high-risk systems to make, or assist in making, decisions about people must in turn inform those people that they are subject to such a system.
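A common pattern for human oversight is to let the system decide routine cases automatically while routing adverse or low-confidence outcomes to a person who can confirm or override them. The sketch below is illustrative only: `Decision`, `needs_human_review`, and `finalize` are hypothetical names, and the rule "every rejection or any confidence below 0.9 goes to a human" is an assumed policy, not a threshold taken from the Act.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    subject_id: str
    model_outcome: str          # e.g. "approve" or "reject"
    confidence: float           # model score in [0, 1]
    final_outcome: Optional[str] = None
    reviewed_by: Optional[str] = None


def needs_human_review(decision: Decision, min_confidence: float = 0.9) -> bool:
    # Assumed policy: adverse or low-confidence outcomes always go to a person.
    return decision.model_outcome == "reject" or decision.confidence < min_confidence


def finalize(decision: Decision, reviewer: Optional[str] = None,
             override: Optional[str] = None) -> Decision:
    """Record the final outcome; on reviewed cases the reviewer may override the model."""
    if needs_human_review(decision):
        if reviewer is None:
            raise RuntimeError(f"{decision.subject_id}: human review required")
        decision.reviewed_by = reviewer
        decision.final_outcome = override or decision.model_outcome
    else:
        # Routine case: the model outcome stands and any override argument is ignored.
        decision.final_outcome = decision.model_outcome
    return decision


auto = finalize(Decision("app-001", "approve", 0.96))
manual = finalize(Decision("app-002", "reject", 0.97), reviewer="j.smith", override="approve")
print(auto.final_outcome, manual.final_outcome, manual.reviewed_by)  # approve approve j.smith
```

Raising an error instead of silently falling back to the model output makes it impossible to finalize a flagged case without a named reviewer.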

Implementation Timeline and Key Deadlines

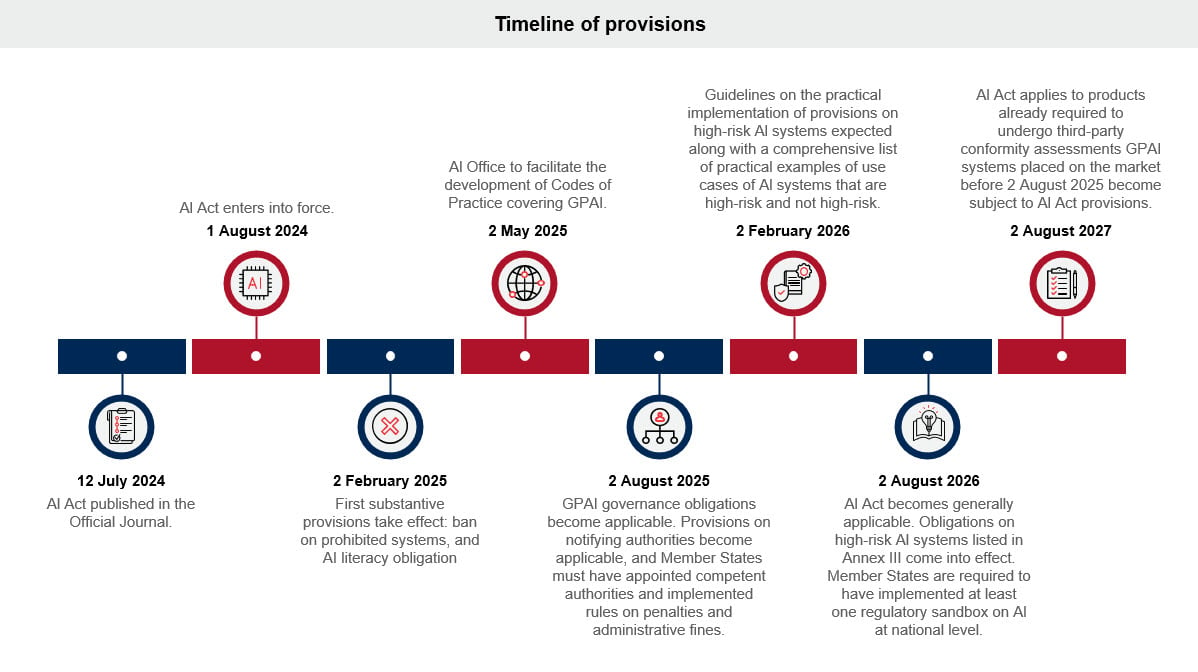

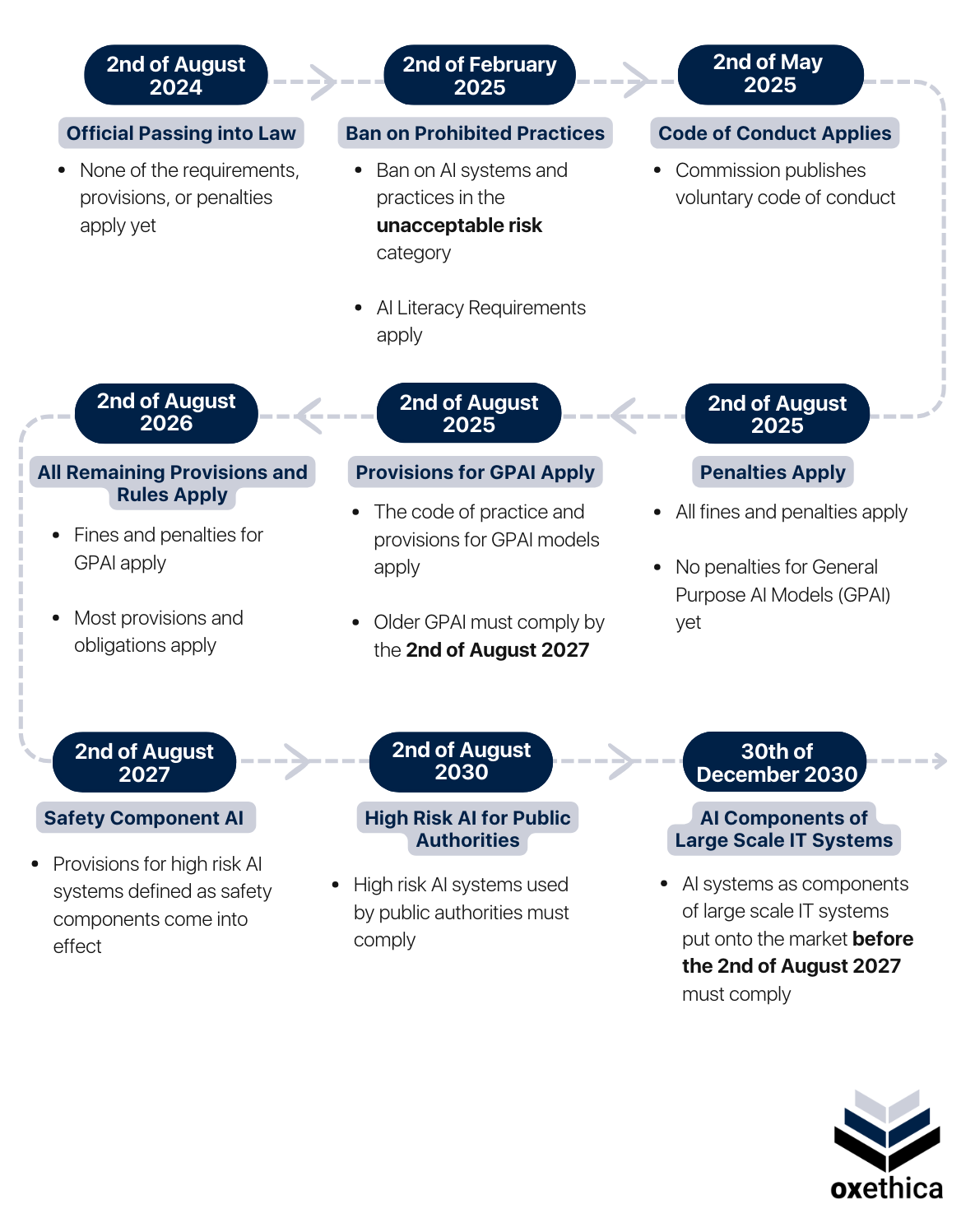

The EU AI Act follows a phased rollout spanning three years, with critical requirements already in place and others coming online progressively through 2027.

Source: Connect On Tech – Baker McKenzie

Current Requirements

The prohibition on unacceptable risk AI practices became effective on February 2, 2025, meaning organizations can no longer deploy systems that use subliminal manipulation, exploit vulnerabilities, or implement social scoring mechanisms.

General-purpose AI (GPAI) model obligations took effect on August 2, 2025. Providers of these models must meet documentation, transparency, and copyright-policy requirements, with additional risk assessment and mitigation duties for models that pose systemic risk. The general penalty provisions also became applicable on that date, with fines ranging from €7.5 million to €35 million or from 1 to 7 percent of global annual turnover. The Commission's power to fine GPAI model providers applies from August 2, 2026.

Upcoming Milestones

High-risk AI system requirements become applicable on August 2, 2026, the largest compliance milestone for most organizations. Systems used in areas such as biometrics, critical infrastructure, education, employment, essential services, law enforcement, migration, and the administration of justice will need comprehensive risk management systems, data governance frameworks, and human oversight mechanisms.

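Conformity assessment procedures and CE marking requirements also take effect on August 2, 2026, for these high-risk systems. On the same date, Fundamental Rights Impact Assessments become mandatory for certain deployers of high-risk AI systems: public bodies, private entities providing public services, and organizations that use AI for credit scoring or for life and health insurance pricing.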

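The remaining provisions apply from August 2, 2027, completing the three-year implementation timeline. They cover high-risk AI systems that are safety components of products already regulated under EU product legislation, such as medical devices and machinery. Transitional provisions give some systems already on the market more time. For example, providers of general-purpose AI models placed on the market before August 2, 2025, have until August 2, 2027, to comply.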
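Compliance teams often keep these dates in a small lookup so dashboards can show which obligations already apply and what comes next. The sketch below hard-codes the milestones described in this section; `MILESTONES`, `milestones_in_effect`, and `next_milestone` are hypothetical names. The list is simplified, leaves out transitional provisions, and would need updating if the EU amends the timeline.

```python
from datetime import date
from typing import Optional

# Application dates described in this section, kept in chronological order.
MILESTONES: list[tuple[date, str]] = [
    (date(2024, 8, 1), "Regulation enters into force"),
    (date(2025, 2, 2), "Prohibited practices banned"),
    (date(2025, 8, 2), "General-purpose AI model obligations and penalty provisions apply"),
    (date(2026, 8, 2), "High-risk requirements, conformity assessment, and FRIAs apply"),
    (date(2027, 8, 2), "High-risk rules for AI in regulated products apply"),
]


def milestones_in_effect(on: date) -> list[str]:
    """Labels of all milestones whose start date is on or before the given date."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date) -> Optional[tuple[date, str]]:
    # MILESTONES is sorted, so the first later date is the next one.
    return next(((start, label) for start, label in MILESTONES if start > on), None)


today = date(2025, 9, 1)
print(milestones_in_effect(today))
print(next_milestone(today))
```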

Penalties and Enforcement

The EU AI Act establishes substantial financial penalties to ensure organizations comply with AI governance requirements. Organizations that violate AI regulations face fines that can reach tens of millions of euros or significant percentages of their global revenue.

Source: OVHcloud Blog

Financial Penalty Structure

The penalty structure follows a tiered approach based on violation severity:

- Prohibited AI practices: up to €35 million or 7 percent of global annual turnover

- Breaches of other obligations, including high-risk and transparency requirements: up to €15 million or 3 percent of global annual turnover

- GPAI model non-compliance (fines imposed by the European Commission): up to €15 million or 3 percent of global annual turnover

- Incorrect, incomplete, or misleading information supplied to authorities: up to €7.5 million or 1 percent of global annual turnover

These figures are maximum amounts. For most organizations, the cap is whichever figure is higher, the fixed euro amount or the percentage of global turnover, which keeps penalties meaningful for large multinational corporations. For small and medium-sized enterprises, including start-ups, the cap is whichever figure is lower.
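The "higher of the two, or lower for SMEs" rule is simple arithmetic, sketched below for the general penalty tiers. `FINE_CAPS` and `max_fine_eur` are hypothetical names, the category keys are invented labels, and the GPAI tier is left out because the Commission imposes those fines under a separate provision. The result is only the ceiling; authorities set the actual fine case by case below it.

```python
# General penalty caps: (fixed amount in EUR, percent of worldwide annual turnover).
FINE_CAPS: dict[str, tuple[int, int]] = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine_eur(violation: str, worldwide_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper bound of the fine for one violation category."""
    fixed, percent = FINE_CAPS[violation]
    turnover_based = worldwide_turnover_eur * percent / 100
    # Most undertakings: the higher of the two amounts. SMEs and start-ups: the lower.
    return min(fixed, turnover_based) if is_sme else max(fixed, turnover_based)


print(max_fine_eur("prohibited_practice", 2_000_000_000))            # 140000000.0
print(max_fine_eur("prohibited_practice", 10_000_000, is_sme=True))  # 700000.0
```

For a company with €2 billion in turnover, 7 percent (€140 million) exceeds the €35 million fixed amount, so the percentage sets the cap. For an SME with €10 million in turnover, the lower figure (€700,000) applies instead.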

Enforcement Structure

Each EU member state designates national market surveillance authorities as the primary enforcement bodies for AI Act violations. The AI Office, established within the European Commission, supports consistent enforcement across member states and directly supervises general-purpose AI models, including those with systemic risk.

National authorities have broad investigative powers. These include access to technical documentation and to training, validation, and testing datasets, and, upon a reasoned request when other means prove insufficient, access to source code. Authorities can require organizations to provide information, take corrective measures, or withdraw non-compliant AI systems from the market.

Steps to Achieve Compliance

Organizations can break down EU AI Act compliance into three main phases that address governance, documentation, and ongoing monitoring.

Establish Governance Framework

Companies begin compliance by identifying who will oversee AI governance within their organization. Organizations typically designate an AI governance committee that includes representatives from legal, technical, risk management, and business units.

The governance framework includes written policies that define how the organization develops, procures, and deploys AI systems. Companies conduct AI system inventories to catalog all existing AI tools and classify each system according to EU AI Act risk categories.
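An AI inventory can start as a simple list of systems, each tagged with an owner and a use case, mapped to a risk tier. In the sketch below, `RiskTier`, `USE_CASE_TIERS`, `InventoryEntry`, `classify`, and `summarize` are hypothetical names, and the use-case labels and their tier assignments are simplified assumptions. Anything not in the mapping is flagged for manual review rather than silently treated as minimal risk.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, simplified use-case labels mapped to tiers.
USE_CASE_TIERS: dict[str, RiskTier] = {
    "social_scoring": RiskTier.PROHIBITED,
    "workplace_emotion_recognition": RiskTier.PROHIBITED,
    "cv_screening": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "exam_grading": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "marketing_image_generation": RiskTier.LIMITED,
    "spam_filtering": RiskTier.MINIMAL,
}


@dataclass
class InventoryEntry:
    name: str
    owner: str       # accountable business unit
    use_case: str    # one of the labels above, or anything else


def classify(entry: InventoryEntry) -> Optional[RiskTier]:
    """Return the mapped tier, or None when the use case needs manual legal review."""
    return USE_CASE_TIERS.get(entry.use_case)


def summarize(inventory: list[InventoryEntry]) -> Counter:
    counts: Counter = Counter()
    for entry in inventory:
        tier = classify(entry)
        counts[tier.value if tier is not None else "needs_review"] += 1
    return counts


inventory = [
    InventoryEntry("Resume ranker", "HR", "cv_screening"),
    InventoryEntry("Support bot", "Customer Service", "customer_chatbot"),
    InventoryEntry("Mail filter", "IT", "spam_filtering"),
    InventoryEntry("Churn model", "Marketing", "churn_prediction"),
]
print(dict(summarize(inventory)))  # {'high': 1, 'limited': 1, 'minimal': 1, 'needs_review': 1}
```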

Implement Documentation Requirements

Organizations create comprehensive documentation systems for AI systems that fall under regulatory requirements. High-risk AI systems require detailed technical documentation that describes system design, development processes, and performance characteristics.

Risk management procedures operate throughout the AI system lifecycle, identifying potential risks to health, safety, and fundamental rights, then implementing appropriate mitigation measures. Data governance protocols ensure AI systems use high-quality, representative datasets that minimize bias and discrimination.

Deploy Monitoring Systems

Continuous monitoring systems track AI performance, accuracy, and potential bias throughout system operation. Organizations establish metrics and thresholds that trigger investigation when systems perform outside expected parameters.

Regular compliance reviews evaluate whether AI systems continue meeting regulatory requirements as technology and deployment contexts evolve. Incident response procedures define how organizations detect, investigate, and respond to AI system malfunctions or compliance violations. Providers of high-risk systems must also report serious incidents to the relevant market surveillance authorities.
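The metric-and-threshold idea can be sketched as a sliding-window accuracy monitor that raises a flag for investigation when performance drops. `AccuracyMonitor` is a hypothetical class, and the window size and minimum accuracy are assumed values that each organization would set for its own system. The monitor only alerts once the window is full, to avoid noise from very small samples.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over the most recent labelled outcomes and flags drops."""

    def __init__(self, window: int = 500, min_accuracy: float = 0.9) -> None:
        self.results = deque(maxlen=window)  # True where prediction matched the outcome
        self.min_accuracy = min_accuracy

    def record(self, prediction, actual) -> None:
        self.results.append(prediction == actual)

    def accuracy(self) -> float:
        return sum(self.results) / len(self.results) if self.results else float("nan")

    def needs_investigation(self) -> bool:
        # Alert only once the window is full, then compare against the threshold.
        window_full = len(self.results) == self.results.maxlen
        return window_full and self.accuracy() < self.min_accuracy


monitor = AccuracyMonitor(window=10, min_accuracy=0.8)
for predicted, actual in [(1, 1)] * 7 + [(1, 0)] * 3:
    monitor.record(predicted, actual)
print(monitor.accuracy(), monitor.needs_investigation())  # 0.7 True
```

The same structure works for other metrics, such as per-group error rates, by keeping one window per group.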

Source: oxethica

Frequently Asked Questions

Does the EU AI Act apply to my company if we only use ChatGPT for business?

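Yes. Organizations that use third-party AI tools like ChatGPT for business purposes in the EU are deployers under the Act. Even though you do not develop the AI systems yourself, you have obligations. Since February 2, 2025, you must take measures to ensure adequate AI literacy among staff who use the tools, and from August 2, 2026, you must disclose deepfakes you create with them. A fundamental rights impact assessment may also be required if you use AI in a high-risk context and belong to one of the categories of deployers that must carry one out.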

How can I verify my AI vendor complies with EU AI Act requirements?

Request specific compliance documentation from vendors, including the EU declaration of conformity and CE marking for high-risk systems, instructions for use, relevant technical documentation, and a description of their risk management procedures. Include contractual clauses that specify AI Act compliance requirements and clarify who bears responsibility for different types of violations.

What happens if my vendor violates AI Act requirements while I’m using their system?

Deployers maintain independent compliance obligations and can face penalties even when using third-party AI systems. The Act creates separate responsibilities for providers and deployers, meaning both parties can face enforcement action for violations within their respective roles, regardless of the other party’s compliance status.

When do I need to conduct a fundamental rights impact assessment?

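Fundamental rights impact assessments become mandatory on August 2, 2026, and must be completed before a high-risk AI system is first put into use. The duty applies to deployers that are public bodies or private entities providing public services, and to deployers that use AI for credit scoring or for life and health insurance pricing; AI used in critical infrastructure is excluded. The assessment describes how and how often the system will be used, which groups of people it may affect, and the specific risks to their fundamental rights, such as privacy or non-discrimination, along with the human oversight and mitigation measures that will apply if those risks materialize.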
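The applicability rule for these assessments reduces to a few conditions. The sketch below is a simplified reading with hypothetical names (`fria_required`, the area labels such as `"credit_scoring"`), where passing `None` means the system is not a high-risk use listed in the Act's annex of high-risk areas. It is a triage aid only and does not replace a legal assessment.

```python
from typing import Optional

# Hypothetical labels for the high-risk areas relevant to the FRIA rule.
CRITICAL_INFRASTRUCTURE = "critical_infrastructure"
FINANCIAL_AREAS = {"credit_scoring", "life_health_insurance_pricing"}


def fria_required(high_risk_area: Optional[str], public_body: bool,
                  provides_public_service: bool) -> bool:
    """Simplified FRIA triage; None means the system is not a listed high-risk use."""
    if high_risk_area is None or high_risk_area == CRITICAL_INFRASTRUCTURE:
        return False
    if public_body or provides_public_service:
        return True
    # Private deployers are covered only for credit scoring and insurance pricing.
    return high_risk_area in FINANCIAL_AREAS


print(fria_required("employment", public_body=False, provides_public_service=False))  # False
print(fria_required("credit_scoring", public_body=False, provides_public_service=False))  # True
```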

How often must I update my AI Act compliance documentation?

Documentation needs updating whenever an AI system changes significantly, monitoring identifies new risks, or the deployment context changes. Providers of high-risk systems must keep technical documentation up to date throughout the system lifecycle, and a substantial modification requires a new conformity assessment.

Ready to navigate EU AI Act compliance requirements for your organization? The regulatory landscape continues evolving, and early preparation positions companies for successful compliance while maintaining competitive advantages in responsible AI deployment. Contact our AI consulting team to develop your compliance strategy today.