ISO 42001 addresses algorithmic bias through systematic governance requirements, data quality controls, and continuous monitoring standards that organizations must implement throughout their AI system lifecycles.

Algorithmic bias has become a critical business risk as AI systems make decisions that affect hiring, lending, healthcare, and criminal justice. When these systems produce unfair outcomes for certain groups of people, organizations face regulatory penalties, lawsuits, and reputational damage. The European Union’s AI Act entered into force in August 2024. Its prohibitions have applied since February 2025, and its requirements for high-risk AI systems, including the examination of training data for bias, are scheduled to apply from August 2026.

ISO 42001, published in December 2023, is the first international standard for AI management systems. It covers AI-related risks, including algorithmic discrimination. The standard requires organizations to identify bias risks, implement controls to treat them, and maintain documented evidence of their mitigation efforts. Implementing ISO 42001 turns bias prevention from informal practice into an auditable management system.

Source: Northwest AI Consulting

What Is Algorithmic Bias and Why Organizations Need to Address It

Algorithmic bias refers to systematic unfairness in artificial intelligence systems that produces prejudiced or discriminatory results for certain groups of people. When AI systems make decisions that consistently favor one group over another without justifiable reasons, algorithmic bias occurs.

Bias enters AI systems through three primary pathways. Training data bias emerges when the data used to teach AI models contains historical prejudices, incomplete representation of certain groups, or skewed information that reflects past discrimination. For example, a hiring algorithm trained on historical employment data might perpetuate past discrimination against women or minorities by learning patterns from biased hiring decisions. Model design bias occurs when the structure, parameters, or mathematical choices within algorithms inadvertently create unfair outcomes, even when training data appears balanced.

Implementation bias happens when AI systems are deployed in contexts or environments different from those they were designed for, causing unexpected discriminatory effects. Organizations that use AI in their operations need to address all three pathways.

Common Types of Bias in AI Systems

Several distinct types of bias affect AI systems across different industries and applications:

- Selection bias — occurs when training data excludes certain groups or perspectives, leading to AI systems that perform poorly for underrepresented populations

- Confirmation bias — happens when AI systems reinforce existing beliefs or assumptions rather than making objective evaluations

- Representation bias — emerges when certain groups are inadequately represented in training data, causing AI systems to perform differently across demographic groups

- Measurement bias — occurs when data collection methods, definitions, or measurement tools systematically disadvantage certain groups
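Representation bias is easy to miss because a single aggregate metric averages over groups. The sketch below computes accuracy separately for each group. It uses hypothetical toy data in which one group supplies most of the examples, and it is an illustration rather than a prescribed test.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return overall accuracy plus accuracy computed separately for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    overall = sum(correct.values()) / sum(total.values())
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group


# Hypothetical toy data: group "A" supplies 10 of 12 examples, group "B" only 2.
y_true = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 0, 1]
groups = ["A"] * 10 + ["B"] * 2

overall, per_group = accuracy_by_group(y_true, y_pred, groups)
print(f"overall={overall:.2f}", per_group)
```

Here the model reports 83% overall accuracy even though every example from group B is misclassified. The disparity becomes visible only when results are broken out by group.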

Source: Analytics Vidhya

Organizations face significant operational and financial consequences when algorithmic bias goes unaddressed. Reputational damage is one of the most immediate risks, because public exposure of a discriminatory AI system can cause lasting harm to brand trust and customer confidence.

Lost customers and market share can follow reputational damage, as consumers and business partners increasingly weigh how organizations use AI. Organizations with documented bias problems may find themselves excluded from partnerships, contracts, and market opportunities as partners seek to avoid association with discriminatory practices.

How ISO 42001 Creates Systematic Framework for Bias Governance

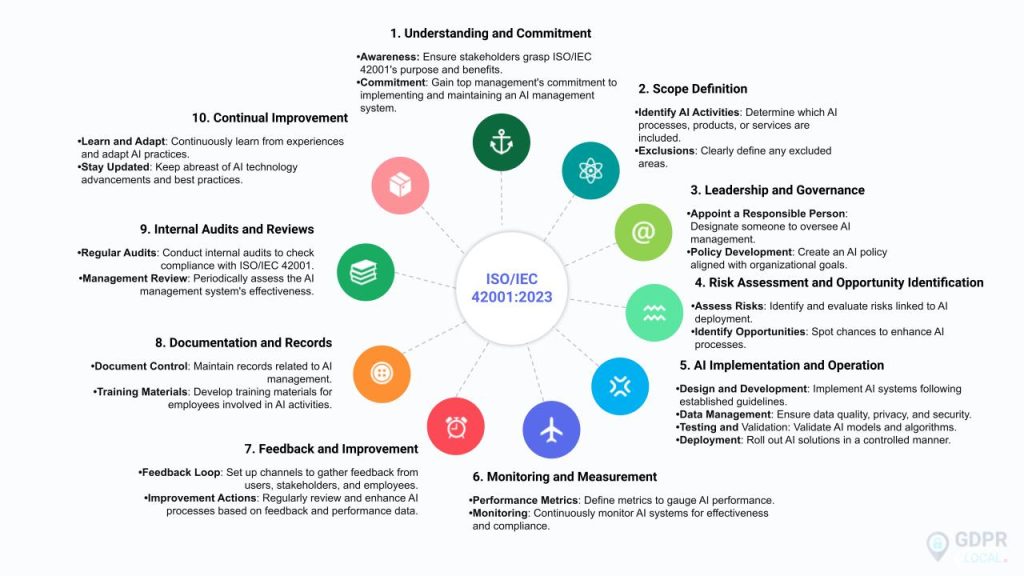

ISO/IEC 42001:2023 represents the world’s first international standard specifically designed for artificial intelligence management systems. Published in December 2023, this framework provides organizations with structured methods to establish, implement, maintain, and continuously improve their AI governance practices.

The framework operates through ten clauses that cover everything from establishing organizational context to continuous improvement processes. Unlike traditional information security standards that focus primarily on data protection, ISO 42001 explicitly recognizes that AI systems generate distinct risk categories including model drift, adversarial attacks, and algorithmic bias.

Organizations implementing ISO 42001 evaluate 38 controls organized under nine control objectives in Annex A. No single control is dedicated to bias. Fairness concerns run through several controls, most directly:

- the AI system impact assessment controls (A.5)
- life cycle controls such as verification and validation (A.6.2.4)
- the data controls (A.7)

Annex C also lists fairness among the potential AI-related organizational objectives.

Risk-Based Approach to Algorithmic Fairness

ISO 42001 establishes a systematic process for assessing bias risks before AI systems reach production and throughout their operational lifecycles. Clause 6.1.2 requires organizations to conduct AI risk assessments that identify risks, analyze their potential consequences to the organization, individuals, and societies, and evaluate them against established risk criteria. For AI systems that make or inform decisions about people, algorithmic bias and discrimination belong among the risks identified.

Source: ISO Docs

The standard introduces the AI system impact assessment (AIIA) in Clause 6.1.4, supported by the A.5 controls, as a process distinct from risk assessment. The AIIA evaluates potential consequences of AI systems for individuals, groups of individuals, and society. This gives organizations a defined place to assess whether a system could cause discrimination or exclusion.

During the assessment phase, organizations identify bias risks across multiple dimensions. Data bias occurs when training datasets are unrepresentative or reflect historical inequities. Algorithmic bias emerges from design choices that inadvertently introduce discrimination. Human bias infiltrates systems through subjective decisions made during development and deployment phases.

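Risk treatment plans address identified bias risks with specific controls. Under Clause 6.1.3, organizations determine the controls they need, drawing on Annex A or other sources. They then compare their selection against Annex A to confirm that no necessary controls have been omitted. Control A.7.3, for example, requires organizations to determine and document how data for AI systems is acquired and selected. That documentation is where representativeness across demographic groups should be considered.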

Integration With Enterprise Quality Management Systems

ISO 42001 follows the same harmonized high-level structure as other ISO management system standards, which makes integration with existing quality management frameworks straightforward. Organizations already certified to ISO 9001 (Quality Management Systems) can leverage their established governance structures, documentation processes, and audit procedures when implementing AI governance requirements.

The integration extends to specific operational processes where AI governance intersects with quality management. Organizations implementing both standards can align their document control procedures, ensuring that AI system documentation follows the same version control, approval, and distribution processes established for quality management.

Risk management processes established under ISO 9001 provide a foundation for the AI risk assessment requirements in ISO 42001. Organizations can extend their existing risk registers to include AI-specific risks such as algorithmic bias, model drift, and insufficient transparency.
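A minimal sketch of an extended register entry follows. The field names, the 1-to-5 likelihood and impact scales, and the treatment threshold of 12 are all illustrative assumptions, not values taken from ISO 42001 or ISO 9001.

```python
from dataclasses import dataclass


@dataclass
class RiskEntry:
    """One risk register row extended with AI-specific fields (hypothetical schema)."""
    risk_id: str
    description: str
    category: str      # e.g. "algorithmic bias", "model drift"
    ai_system: str
    likelihood: int    # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int        # assumed scale: 1 (negligible) to 5 (severe)
    owner: str

    def __post_init__(self):
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

    def needs_treatment(self, threshold: int = 12) -> bool:
        # The threshold is an assumed organizational risk criterion.
        return self.score >= threshold


register = [
    RiskEntry("R-101", "Screening model under-ranks older applicants",
              "algorithmic bias", "resume-screener", 3, 5, "AI Risk Manager"),
    RiskEntry("R-102", "Input distribution shifts after new job board launch",
              "model drift", "resume-screener", 2, 3, "ML Engineering Lead"),
]

for entry in register:
    print(entry.risk_id, entry.score, entry.needs_treatment())
```

Scoring likelihood times impact is one common convention. Organizations that already use a different scoring method in their ISO 9001 risk process can keep it and simply add the AI-specific categories.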

Key ISO 42001 Controls That Directly Address Algorithmic Bias

ISO 42001’s Annex A contains 38 specific controls organized under nine control objectives, with several controls directly targeting algorithmic bias prevention, detection, and remediation. Organizations implementing ISO 42001 evaluate these controls during risk assessment and implement those relevant to their AI systems’ bias risks.

Bias Prevention and Detection Controls

The impact assessment controls under A.5 are where bias identification begins. Control A.5.4 requires organizations to assess and document the potential impacts of AI systems on individuals or groups of individuals throughout the system’s life cycle. Unfair or discriminatory treatment is one of the impacts this assessment should cover. The AI policy established under control A.2.2 can state the organization’s commitment to fairness, which gives these assessments a documented basis.

Control A.6.2.4 (AI system verification and validation) requires organizations to define and document verification and validation measures and the criteria for using them. For systems with bias risk, this is where organizations set the thresholds for accuracy, drift, and bias metrics that must be met before deployment. They establish baseline fairness measurements during development and validation, then test the system across demographic groups before release.
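The following sketch shows a pre-deployment gate that compares measured validation metrics against documented criteria. The metric names and thresholds are hypothetical examples, not values the standard specifies.

```python
def validation_gate(metrics: dict, criteria: dict) -> list:
    """Compare measured validation metrics against documented criteria.

    `criteria` maps a metric name to ("min", bound) or ("max", bound).
    Returns a list of failures; an empty list means the gate passes.
    """
    failures = []
    for name, (kind, bound) in criteria.items():
        if kind not in ("min", "max"):
            raise ValueError(f"{name}: unknown bound type {kind!r}")
        if name not in metrics:
            failures.append(f"{name}: not measured")
            continue
        value = metrics[name]
        if kind == "min" and value < bound:
            failures.append(f"{name}: {value:.3f} < required {bound}")
        elif kind == "max" and value > bound:
            failures.append(f"{name}: {value:.3f} > allowed {bound}")
    return failures


# Hypothetical criteria an organization might document for one AI system.
criteria = {
    "accuracy": ("min", 0.85),
    "demographic_parity_difference": ("max", 0.10),
    "equal_opportunity_difference": ("max", 0.05),
}
measured = {"accuracy": 0.91, "demographic_parity_difference": 0.14,
            "equal_opportunity_difference": 0.03}

failures = validation_gate(measured, criteria)
print("PASS" if not failures else failures)
```

Treating a missing measurement as a failure is a deliberate design choice. It keeps a system from passing the gate simply because a fairness metric was never computed.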

Control A.7.2 requires organizations to define, document, and implement data management processes for AI system development, including how data is selected and whether it is representative. Control A.7.3 requires that data acquisition and selection be documented, including an assessment of potential bias sources in data collection methods.

Data Quality and Fairness Requirements

Control A.7.4 requires organizations to define and document data quality requirements and to ensure that data used to develop and operate AI systems meets them. For bias prevention, these requirements should include verification that datasets adequately represent relevant demographic groups and minimize embedded biases. Organizations set measurable criteria for demographic representation and document how they assess whether training data meets them.

Control A.7.5 requires a documented process for recording the provenance of data used in AI systems over the life cycles of both the data and the system. This lineage tracking lets organizations trace bias issues to their root causes in data collection or processing, including places where historical inequities shape AI outputs.
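Provenance records make that root-cause tracing mechanical. The sketch below stores each transformation as a lineage step and walks backwards from a training set to its raw sources. The record structure and all dataset names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LineageStep:
    """One recorded transformation: the dataset it produced and the datasets it read."""
    output_id: str
    input_ids: tuple
    operation: str
    note: str = ""


def trace_to_sources(dataset_id, steps):
    """Walk lineage records backwards from `dataset_id`.

    Returns (trail, sources): every upstream step, nearest first, and the ids
    of raw source datasets that no recorded step produced.
    """
    by_output = {s.output_id: s for s in steps}
    trail, sources, seen = [], [], set()
    frontier = [dataset_id]
    while frontier:
        current = frontier.pop(0)
        if current in seen:
            continue
        seen.add(current)
        step = by_output.get(current)
        if step is None:
            sources.append(current)  # nothing produced it, so it is a raw source
            continue
        trail.append(step)
        frontier.extend(step.input_ids)
    return trail, sources


# Hypothetical lineage for a hiring model's training set.
steps = [
    LineageStep("applicants_clean", ("hr_exports_2015_2022",),
                "drop incomplete records", "rows missing graduation year removed"),
    LineageStep("applicants_labeled", ("applicants_clean", "hiring_outcomes"),
                "join historical hiring decisions", "labels reflect past recruiter choices"),
    LineageStep("train_v3", ("applicants_labeled",), "stratified train/test split"),
]

trail, sources = trace_to_sources("train_v3", steps)
for step in trail:
    print(f"{step.output_id} <- {step.operation}: {step.note}")
print("raw sources:", sources)
```

The notes are where bias-relevant decisions become visible to an auditor. In this example, dropping rows with a missing graduation year could skew the age distribution, and the hiring labels inherit past recruiter decisions.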

Control A.7.6 requires organizations to define and document their criteria for selecting data preparation methods. Those criteria should ensure that processing steps do not introduce or amplify algorithmic bias. Organizations document how they handle demographic imbalances, address underrepresentation of certain groups, and manage historical biases embedded in legacy datasets.
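One documented way to handle imbalances between groups and labels is reweighing, a preprocessing technique that weights each example by P(group) × P(label) / P(group, label). ISO 42001 does not prescribe this technique; it is one option an organization might choose. The sketch below uses hypothetical data.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label).

    In the weighted data, group membership and label are statistically
    independent, so every group ends up with the same weighted positive rate.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    weights = []
    for g, y in zip(groups, labels):
        expected = (group_counts[g] / n) * (label_counts[y] / n)
        observed = joint_counts[(g, y)] / n
        weights.append(expected / observed)
    return weights


def weighted_positive_rate(weights, groups, labels, group):
    idx = [i for i, g in enumerate(groups) if g == group]
    return sum(weights[i] * labels[i] for i in idx) / sum(weights[i] for i in idx)


# Hypothetical historical data: group A has a 67% positive rate, group B 25%.
groups = ["A"] * 6 + ["B"] * 4
labels = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
for g in ("A", "B"):
    print(g, round(weighted_positive_rate(weights, groups, labels, g), 3))
```

The weights can be passed to many learners, for example through the `sample_weight` argument that many scikit-learn estimators accept in `fit`. Reweighing treats the historical difference in positive rates as bias to be removed. That judgment belongs in the documented preparation criteria, because it is not appropriate for every use case.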

Data Governance Standards That Prevent Algorithmic Bias

ISO 42001 treats data governance as the foundation for preventing algorithmic bias. The standard recognizes that biased training data creates biased AI systems, making data quality controls essential for fair outcomes. Organizations implementing ISO 42001 establish systematic processes to identify, document, and mitigate bias sources in their training datasets.

Source: Digital Regulation Platform

The connection between data quality and bias prevention appears throughout ISO 42001’s control framework. Poor data quality, unrepresentative samples, and historical inequities embedded in datasets all contribute to algorithmic discrimination.

Data Quality Requirements for AI Training Sets

Control A.7.4 requires organizations to define data quality requirements and ensure that training data meets them. The standard leaves the choice of quality dimensions to the organization; commonly used ones include accuracy, completeness, consistency, and relevance. Accuracy requirements focus on the correctness of data labels and measurements. Completeness requirements address missing data points, incomplete records, or coverage gaps that could create blind spots for certain demographic groups.

Relevance requirements ensure that training data aligns with the AI system’s intended use case and operating environment. Organizations evaluate whether historical training data remains relevant for current applications, particularly when social conditions, demographics, or business contexts have changed since data collection.

These requirements are most useful when each quality dimension has a measurable criterion. For example, an accuracy threshold might specify the maximum acceptable error rate for data labels, while a completeness threshold might require a minimum sample size for each demographic group represented in the training data.
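The following sketch checks a dataset against criteria of that kind. It assumes that records are dictionaries and that label accuracy is estimated from a hand-audited sample. All thresholds (2% label error, 5% missing fields, 200 records per group) are illustrative defaults, not values from ISO 42001.

```python
from collections import Counter


def check_quality(records, audit_pairs, group_field, required_fields,
                  expected_groups=(), max_label_error=0.02,
                  max_missing_rate=0.05, min_per_group=200):
    """Check a dataset against documented, measurable quality criteria.

    `audit_pairs` holds (recorded_label, verified_label) for a sample of
    records whose labels were re-checked by hand.
    """
    findings = []

    # Accuracy: label error rate measured on the audited sample.
    errors = sum(1 for recorded, verified in audit_pairs if recorded != verified)
    label_error = errors / len(audit_pairs)
    if label_error > max_label_error:
        findings.append(f"label error rate {label_error:.1%} exceeds {max_label_error:.1%}")

    # Completeness: share of records missing any required field.
    missing = sum(1 for r in records if any(r.get(f) is None for f in required_fields))
    missing_rate = missing / len(records)
    if missing_rate > max_missing_rate:
        findings.append(f"missing-field rate {missing_rate:.1%} exceeds {max_missing_rate:.1%}")

    # Coverage: minimum sample size per group, including expected groups with no records.
    counts = Counter(r.get(group_field) for r in records)
    for group in sorted(set(counts) | set(expected_groups), key=str):
        if counts[group] < min_per_group:
            findings.append(f"group {group!r} has {counts[group]} records, below {min_per_group}")
    return findings


# Hypothetical dataset: group B is small and often missing "age"; group C is absent.
records = ([{"group": "A", "age": 30} for _ in range(300)]
           + [{"group": "B", "age": None} for _ in range(40)]
           + [{"group": "B", "age": 45} for _ in range(60)])
audit_pairs = [(1, 1)] * 98 + [(1, 0)] * 2

findings = check_quality(records, audit_pairs, "group", ("age",),
                         expected_groups=("A", "B", "C"))
for finding in findings:
    print(finding)
```

Passing `expected_groups` matters. Without it, a group that is entirely absent from the data would never be flagged, and that is the most severe coverage gap.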

Representative Dataset Standards and Documentation

Organizations identify the demographic characteristics relevant to their AI system’s fairness goals. For hiring applications, relevant characteristics might include race, gender, age, and educational background. For healthcare applications, relevant characteristics might include age, gender, race, socioeconomic status, and geographic location.

Documentation supporting these data controls typically records the demographic composition of training datasets, including:

- Sample sizes for each demographic group

- Methods used to collect demographic information

- Any gaps or imbalances in representation

- Approaches to managing situations where perfect demographic balance isn’t achievable
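The quantitative parts of this documentation can be generated directly from the data. These are the sample sizes per group and the gaps in representation. The sketch below compares each group's share with a reference population, such as the applicant pool the system will serve. The age bands, reference shares, and the 5-percentage-point tolerance are hypothetical.

```python
from collections import Counter


def composition_report(groups, reference_shares, tolerance=0.05):
    """Summarize sample size and share for each group and compare the share
    with a reference population. `tolerance` is an illustrative assumption."""
    n = len(groups)
    counts = Counter(groups)
    rows = []
    for group, ref in sorted(reference_shares.items()):
        share = counts[group] / n
        gap = share - ref
        if gap < -tolerance:
            flag = "under-represented"
        elif gap > tolerance:
            flag = "over-represented"
        else:
            flag = ""
        rows.append({"group": group, "n": counts[group], "share": round(share, 3),
                     "reference": ref, "gap": round(gap, 3), "flag": flag})
    # Groups present in the data but missing from the reference definition.
    unexpected = sorted(set(counts) - set(reference_shares))
    return rows, unexpected


# Hypothetical age bands in a training set versus the population it will serve.
groups = ["18-29"] * 400 + ["30-49"] * 520 + ["50+"] * 80
reference = {"18-29": 0.30, "30-49": 0.45, "50+": 0.25}

rows, unexpected = composition_report(groups, reference)
for row in rows:
    print(row)
print("not in reference:", unexpected)
```

The collection methods and the approach taken when balance isn’t achievable remain narrative documentation. A report like this supplies the numbers those narratives refer to.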

Organizations also document their criteria for determining adequate representation. These criteria consider factors such as statistical power requirements for subgroup analysis, regulatory requirements for demographic coverage, and the potential impact of underrepresentation on different groups.
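Statistical power requirements can be made concrete with the normal-approximation formula for the sample size needed to estimate a proportion within a margin of error $E$. The proportion might be a subgroup’s selection rate or error rate. This is a sketch under simplifying assumptions: simple random sampling, with one group estimated at a time. Detecting a difference between two groups’ rates needs a separate power calculation and usually larger samples.

$$
n \ge \frac{z_{1-\alpha/2}^{2}\, p\,(1-p)}{E^{2}}
$$

With the conservative choice $p = 0.5$, 95% confidence ($z \approx 1.96$), and $E = 0.05$:

$$
n \ge \frac{1.96^{2} \times 0.5 \times 0.5}{0.05^{2}} = \frac{0.9604}{0.0025} = 384.16 \;\Rightarrow\; n = 385 \text{ records per subgroup}
$$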

Bias Detection and Continuous Monitoring Requirements

ISO 42001 requires organizations to monitor AI systems throughout their operational lifecycle. For systems with bias risk, this means watching for bias drift and performance degradation. Under Clause 9’s performance evaluation requirements and control A.6.2.6 (AI system operation and monitoring), organizations implement systematic monitoring processes that track fairness metrics across demographic groups over time.

The monitoring obligations recognize that AI systems operate in dynamic environments where data distributions shift and user populations evolve. Organizations maintain continuous surveillance of model behavior to identify bias emergence that could result from concept drift, changing input patterns, or algorithmic degradation over time.

Fairness Metrics and Testing Protocols

Organizations implementing ISO 42001 establish documented fairness testing methodologies that define the metrics and evaluation criteria used for bias detection. The standard does not prescribe particular fairness metrics. Organizations choose them based on each system’s context and record them as part of their verification, validation, and monitoring criteria.

Source: Google for Developers

Demographic parity is a widely used fairness metric. It checks whether the rate of positive predictions is the same across sensitive demographic groups, so that predictions are independent of group membership. Equalized odds is stricter: it requires equal true positive rates and equal false positive rates across all demographic groups.
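The two criteria can be stated formally using notation introduced here for illustration. Let $\hat{Y}$ be the model’s binary prediction, $Y$ the true outcome, and $A$ the sensitive attribute.

$$
\text{Demographic parity:}\quad P(\hat{Y}=1 \mid A=a) = P(\hat{Y}=1 \mid A=b) \quad \text{for all groups } a, b
$$

$$
\text{Equalized odds:}\quad P(\hat{Y}=1 \mid Y=y, A=a) = P(\hat{Y}=1 \mid Y=y, A=b) \quad \text{for } y \in \{0, 1\}
$$

With $y = 1$, the equalized odds condition compares true positive rates. With $y = 0$, it compares false positive rates.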

Equal opportunity relaxes equalized odds to true positive rates only. Among individuals who actually qualify for the positive outcome, each group should have the same chance of being selected or approved. Organizations document testing protocols that specify how fairness metrics are calculated, what thresholds constitute acceptable performance, and how frequently testing occurs.
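The sketch below computes all three metrics as the largest gap between groups, where 0.0 means parity. It assumes binary labels and predictions, and the data is hypothetical. For production use, maintained libraries such as Fairlearn offer similar metrics.

```python
def group_rates(y_true, y_pred, groups, group):
    """Selection rate, true positive rate, and false positive rate for one group."""
    idx = [i for i, g in enumerate(groups) if g == group]
    positives = [i for i in idx if y_true[i] == 1]
    negatives = [i for i in idx if y_true[i] == 0]
    if not positives or not negatives:
        raise ValueError(f"group {group!r} needs both positive and negative examples")
    selection_rate = sum(y_pred[i] for i in idx) / len(idx)
    tpr = sum(y_pred[i] for i in positives) / len(positives)
    fpr = sum(y_pred[i] for i in negatives) / len(negatives)
    return selection_rate, tpr, fpr


def fairness_gaps(y_true, y_pred, groups):
    """Largest between-group difference for each metric; 0.0 means parity."""
    rates = [group_rates(y_true, y_pred, groups, g) for g in sorted(set(groups))]
    selection, tpr, fpr = zip(*rates)
    return {
        # Demographic parity: compare selection rates.
        "demographic_parity_difference": max(selection) - min(selection),
        # Equal opportunity: compare true positive rates only.
        "equal_opportunity_difference": max(tpr) - min(tpr),
        # Equalized odds: the worse of the TPR gap and the FPR gap.
        "equalized_odds_difference": max(max(tpr) - min(tpr), max(fpr) - min(fpr)),
    }


# Hypothetical binary predictions for two groups of eight people each.
y_true = [1, 1, 1, 1, 0, 0, 0, 0] * 2
y_pred = [1, 1, 1, 0, 1, 0, 0, 0] + [1, 1, 0, 0, 1, 0, 0, 0]
groups = ["A"] * 8 + ["B"] * 8

gaps = fairness_gaps(y_true, y_pred, groups)
print(gaps)
```

Here the selection-rate gap is 0.125, while the true positive rate gap is 0.25. A system can therefore look acceptable under one metric and not under another, which is why the testing protocol needs to name the metric it uses.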

Ongoing Performance Evaluation for Bias Drift

ISO 42001’s Clause 9.1 requires organizations to determine what needs to be monitored and measured and to evaluate the results. For AI systems with bias risk, that includes fairness characteristics on an ongoing basis. Organizations establish baseline fairness metrics during development, then use continuous monitoring to track model performance across demographic groups over time.

Organizations implement automated monitoring infrastructure that calculates fairness metrics at regular intervals and alerts responsible personnel when systems exceed predefined bias thresholds. The monitoring systems track multiple fairness dimensions simultaneously, recognizing that bias can manifest differently across various demographic characteristics and decision contexts.
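A minimal sketch of such a monitor follows. It tracks only the demographic parity gap over a sliding window of recent decisions, for brevity, and returns an alert message when the gap drifts past the validation baseline plus a tolerance. The window size, tolerance, and minimum-per-group values are assumptions. Routing alerts to personnel is left out.

```python
from collections import deque


class FairnessMonitor:
    """Track per-group selection rates over a sliding window of recent decisions
    and flag drift in the demographic parity gap relative to a baseline."""

    def __init__(self, baseline_gap, max_increase=0.05, window=1000, min_per_group=50):
        self.baseline_gap = baseline_gap        # gap measured at validation time
        self.max_increase = max_increase        # tolerated drift before alerting
        self.min_per_group = min_per_group      # skip groups with too few decisions
        self.decisions = deque(maxlen=window)   # oldest decisions drop out automatically

    def record(self, group, selected):
        self.decisions.append((group, int(selected)))

    def current_gap(self):
        totals, chosen = {}, {}
        for group, selected in self.decisions:
            totals[group] = totals.get(group, 0) + 1
            chosen[group] = chosen.get(group, 0) + selected
        rates = [chosen[g] / totals[g] for g in totals if totals[g] >= self.min_per_group]
        if len(rates) < 2:
            return None  # not enough data to compare groups yet
        return max(rates) - min(rates)

    def check(self):
        gap = self.current_gap()
        if gap is not None and gap > self.baseline_gap + self.max_increase:
            return (f"ALERT: parity gap {gap:.3f} exceeds baseline "
                    f"{self.baseline_gap:.3f} + tolerance {self.max_increase:.3f}")
        return None


# Hypothetical decision stream: group A selected 50% of the time, group B 30%.
monitor = FairnessMonitor(baseline_gap=0.02, window=400)
for i in range(200):
    monitor.record("A", i % 2 == 0)
    monitor.record("B", i % 10 < 3)
print(monitor.check())
```

Metrics based on true positive rates need ground-truth outcomes. Those outcomes often arrive weeks after each decision, so a real monitor would join outcomes to decisions before computing them.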

Organizations document monitoring frequency, alert thresholds, and escalation procedures for addressing detected bias drift. The continuous monitoring obligations extend beyond deployment through the entire operational lifecycle, ensuring that bias detection remains active even as systems age and operating conditions change.

Organizational Roles Essential for Effective Bias Management

ISO 42001 requires organizations to assign and communicate responsibilities for managing AI risks, including algorithmic bias, throughout the AI system lifecycle (Clause 5.3 and control A.3.2). These assignments create accountability structures in which named individuals are responsible for detecting, preventing, and fixing bias in AI systems.

Source: LinkedIn

The standard doesn’t dictate which job titles organizations create, but each bias management function needs an accountable owner. Organizations typically establish specialized roles or expand existing positions to include bias governance responsibilities.

Executive Leadership and Governance Responsibilities

Under Clause 5, top management holds ultimate accountability for the AI management system, including bias management. In most organizations, top management means the CEO and executive team. They establish the AI policy that commits the organization to fair and ethical AI use, then ensure adequate resources flow to bias detection and mitigation programs.

Chief Technology Officers oversee technical implementation of bias controls across AI development processes. CTOs verify that engineering teams implement fairness testing, maintain bias monitoring systems, and respond to detected bias issues according to documented procedures.

Chief Data Officers manage data governance strategies that prevent bias from entering AI systems through training data. CDOs establish data quality requirements, oversee data collection practices, and ensure training datasets represent diverse demographic groups appropriately.

Technical Team Accountability for Fairness Testing

Data scientists implement fairness metrics and conduct bias testing during AI model development. These professionals select appropriate fairness measures — such as demographic parity or equalized odds — based on the AI system’s intended use and potential for discrimination.

Machine learning engineers build bias detection capabilities into AI systems and establish monitoring infrastructure. Engineers implement automated fairness testing, create bias monitoring dashboards, and develop alert systems that notify teams when bias metrics exceed acceptable thresholds.

AI Model Validators conduct independent assessments of AI system fairness before deployment. Validators perform bias audits separate from development teams, testing AI systems across different demographic groups and documenting fairness performance.

How ISO 42001 Aligns With EU AI Act Bias Requirements

The European Union’s AI Act entered into force in August 2024, and its obligations for high-risk AI systems are scheduled to apply largely from August 2026. ISO 42001, the international standard for AI management systems, provides a framework that addresses many of the same bias and fairness requirements through voluntary controls and governance structures.

Source: LinkedIn

Both frameworks treat algorithmic bias as a critical risk that requires systematic identification, measurement, and mitigation throughout the AI system lifecycle. Separately from its high-risk requirements, the EU AI Act prohibits outright any AI systems that use subliminal or manipulative techniques, exploit vulnerabilities, or perform social scoring that leads to detrimental treatment. These prohibitions have applied since February 2025.

Key Requirements Comparison

The EU AI Act’s Article 10 requires that training, validation, and testing datasets be examined for possible biases, be sufficiently representative of relevant groups, and be subject to measures that detect, prevent, and mitigate bias. ISO 42001 addresses this through Control A.7.4, whose data quality requirements can include representativeness across demographic groups, and Control A.7.6, which requires documented data preparation methods that avoid introducing bias.

Article 9 of the EU AI Act requires a risk management system for high-risk AI, including testing to identify appropriate risk management measures. ISO 42001 covers similar ground through its AI risk assessment and impact assessment requirements (Clauses 6.1.2 and 6.1.4). Control A.6.2.4 adds verification and validation criteria, such as bias thresholds.

Article 11 requires technical documentation, detailed in Annex IV, covering AI system design, data sources, and system logic for regulatory review. ISO 42001’s Control A.6.2.7 requires AI system technical documentation, and Control A.8.2 requires documentation and information for users, including information about system limitations and bias risks.

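The frameworks differ in their enforcement mechanisms. The EU AI Act creates legal obligations backed by fines. For prohibited practices, fines reach up to €35 million or 7% of worldwide annual turnover, whichever is higher. For most other violations, including breaches of high-risk requirements, they reach up to €15 million or 3%. ISO 42001 is a voluntary certification that organizations pursue for governance maturity and competitive positioning.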

Building Your Algorithmic Bias Governance Program With ISO 42001

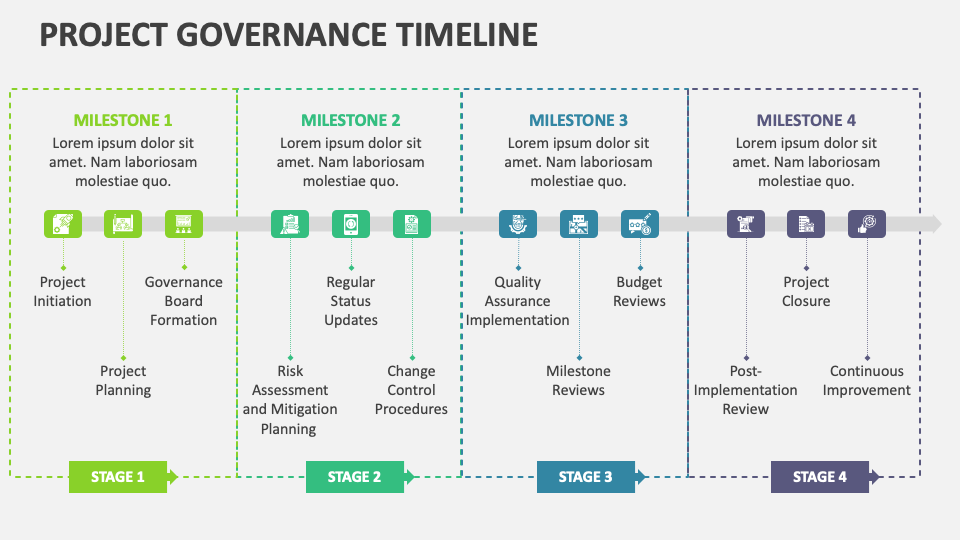

Creating a bias governance program requires systematic planning, clear responsibilities, and measurable controls. ISO 42001 provides the framework to transform bias mitigation from an abstract goal into concrete organizational practices.

Source: Collidu

Establish Leadership Commitment and Governance Structure

Begin with documented leadership commitment to ethical AI and bias mitigation. The CEO and senior leadership team create an AI policy that explicitly addresses bias prevention and fairness across all AI applications.

Assign specific roles for bias governance. Appoint a Chief Data Officer to oversee data quality and representativeness. Designate AI Risk Managers to identify and assess bias risks throughout your systems. Create AI Ethics and Bias Auditor positions to conduct regular fairness evaluations.

Document these role assignments with clear accountability structures. Each role requires written responsibilities and reporting relationships that connect bias governance to executive oversight.

Conduct Comprehensive AI Risk Assessment

Map all AI systems currently deployed or under development across your organization. Catalog each system’s purpose, data sources, affected stakeholder groups, and potential for discriminatory impact.

Assess bias risks using documented criteria that reflect your organizational context and regulatory environment. Evaluate how each AI system might affect different demographic groups, including protected classes under applicable anti-discrimination laws.

Document risk assessment findings with specific attention to systems that make or inform decisions about hiring, lending, healthcare, criminal justice, or other consequential domains where bias creates significant harm potential.
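An AI system inventory can capture the catalog fields listed above and rank systems for assessment. The sketch below uses a hypothetical record schema. Its priority rule treats systems that decide about people in the consequential domains named above as highest priority. Both the rule and the domain list are illustrative assumptions, not criteria from ISO 42001 or the EU AI Act.

```python
from dataclasses import dataclass, field

# Consequential domains named in the text; the list is illustrative, not exhaustive.
CONSEQUENTIAL_DOMAINS = {"hiring", "lending", "healthcare", "criminal justice"}
PRIORITY_ORDER = ["high", "medium", "low"]


@dataclass
class AISystemRecord:
    """One inventory entry (hypothetical schema)."""
    name: str
    purpose: str
    domain: str
    data_sources: list = field(default_factory=list)
    affected_groups: list = field(default_factory=list)
    decides_about_people: bool = False

    def priority(self) -> str:
        if self.decides_about_people and self.domain in CONSEQUENTIAL_DOMAINS:
            return "high"
        if self.decides_about_people:
            return "medium"
        return "low"


inventory = [
    AISystemRecord("resume-screener", "rank job applicants", "hiring",
                   ["ATS exports 2015-2022"], ["applicants"], True),
    AISystemRecord("chat-router", "route support tickets", "customer service",
                   ["ticket history"], ["customers"], True),
    AISystemRecord("demand-forecast", "forecast warehouse demand", "logistics",
                   ["sales history"], [], False),
]

for system in sorted(inventory, key=lambda s: PRIORITY_ORDER.index(s.priority())):
    print(f"{system.priority():<6} {system.name}")
```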

Implement Data Governance Controls

Establish data quality standards that explicitly address representativeness across demographic groups. Document data sources, collection methods, and any known limitations or gaps in demographic coverage.

Create data provenance tracking that enables you to trace bias issues to their origins in data collection, preparation, or historical sources. Maintain detailed records of data lineage from source through processing to model training.

Implement data preparation processes that include bias detection and mitigation steps. Document how you handle data imbalances, address underrepresentation of certain groups, and manage historical biases embedded in legacy datasets.

Frequently Asked Questions About ISO 42001 and Algorithmic Bias

How long does implementing ISO 42001 algorithmic bias controls typically take?

The implementation timeline depends on organization size and existing AI governance maturity. Many organizations can put initial bias controls in place within six to twelve months.

Organizations starting with robust data governance and existing AI oversight can implement bias controls faster than those building governance from scratch. Larger enterprises with multiple AI systems and complex data environments require additional time to establish consistent bias detection and monitoring across all systems.

What happens when algorithmic bias is discovered in a certified organization’s AI system?

Organizations follow their documented procedures to investigate, remediate, and prevent recurrence. Discovering bias does not by itself invalidate certification. Auditors look at whether the organization handled the issue through its own processes, including nonconformity and corrective action under Clause 10.2.

ISO 42001 expects organizations to plan for AI incidents, including how they are communicated to users (control A.8.4). When a bias incident occurs, organizations document it, investigate root causes, implement corrective actions, and update controls to prevent similar incidents.

Can ISO 42001 completely eliminate algorithmic bias from AI systems?

ISO 42001 provides a systematic approach to minimize bias but cannot eliminate it entirely. The standard focuses on continuous improvement and risk reduction rather than perfect bias elimination.

Algorithmic bias stems from multiple sources, including training data limitations, algorithmic design choices, and embedded historical inequities. Different fairness definitions also conflict mathematically. When base rates differ between groups, a classifier generally cannot satisfy all of them at once, so perfect fairness under every definition is not achievable.
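A small hypothetical example shows one such conflict. Suppose the two groups have different base rates. A perfectly accurate classifier then satisfies equal opportunity and equalized odds but violates demographic parity. The better-known formal impossibility results involve calibration and error-rate balance; this sketch shows only the simpler conflict.

```python
def selection_rate(y_pred, groups, group):
    idx = [i for i, g in enumerate(groups) if g == group]
    return sum(y_pred[i] for i in idx) / len(idx)


def true_positive_rate(y_true, y_pred, groups, group):
    idx = [i for i, g in enumerate(groups) if g == group and y_true[i] == 1]
    return sum(y_pred[i] for i in idx) / len(idx)


# Hypothetical base rates: 5 of 10 people in group A qualify, 2 of 10 in group B.
groups = ["A"] * 10 + ["B"] * 10
y_true = [1] * 5 + [0] * 5 + [1] * 2 + [0] * 8

# A perfectly accurate classifier: every prediction equals the true label.
y_pred = list(y_true)

for g in ("A", "B"):
    print(g, "TPR", true_positive_rate(y_true, y_pred, groups, g),
          "selection rate", selection_rate(y_pred, groups, g))
```

Both groups have a true positive rate of 1.0 and no false positives, but their selection rates are 0.5 and 0.2. Forcing equal selection rates would mean either approving unqualified members of group B or rejecting qualified members of group A. Either change breaks the error-rate criteria.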

Do smaller organizations using AI systems need ISO 42001 for managing algorithmic bias?

While not legally required for all organizations, ISO 42001 provides a valuable framework for any organization deploying AI systems that impact people’s lives or opportunities.

The EU AI Act requires bias governance for high-risk AI systems regardless of organization size. Smaller organizations deploying AI systems in hiring, credit scoring, healthcare, or other consequential domains face regulatory requirements and liability risks similar to larger enterprises.

ISO 42001 offers a structured approach to bias governance that scales for different organizational sizes. Smaller organizations can implement essential bias controls without full enterprise-scale governance infrastructure, focusing on core requirements for their specific AI systems and risk profiles.