An AI governance framework is a structured set of policies, processes, and oversight mechanisms that guide the responsible development and deployment of artificial intelligence systems within an organization.

Organizations across industries are rapidly adopting artificial intelligence technologies to improve operations, enhance customer experiences, and drive innovation. However, the increasing use of AI systems brings new risks including potential bias, privacy violations, security vulnerabilities, and unintended consequences that can harm individuals and damage organizational reputation.

The absence of proper governance creates significant exposure to regulatory penalties, legal liability, and loss of stakeholder trust. Recent high-profile AI failures have demonstrated the real-world consequences of inadequate oversight, from biased hiring algorithms to chatbots that provide harmful advice.

Developing a comprehensive AI governance framework enables organizations to harness AI benefits while managing risks systematically. A well-designed framework provides clear guidelines for AI development teams, establishes accountability structures, and creates processes for ongoing monitoring and improvement.

What Is an AI Governance Framework?

An AI governance framework is a comprehensive system that organizations use to manage their AI technologies responsibly. Think of it as a playbook that tells everyone in your organization how to develop, deploy, and monitor AI systems safely and ethically.

The framework answers a simple question: “How do we make sure our AI systems work properly and don’t cause harm?” It provides the structure and rules that teams follow when working with AI technologies.

Core elements include:

- Written policies: Guidelines that specify how teams collect data, test AI systems, and prevent bias

- Clear roles: Specific people responsible for reviewing AI projects and making governance decisions

- Risk management: Processes for identifying problems before they happen and fixing issues when they arise

These elements work together to make responsible AI practices part of regular business operations rather than optional add-ons.

Why Organizations Need AI Governance Frameworks

AI systems can create serious problems when they operate without proper oversight. Hiring algorithms that discriminate against certain groups can expose companies to expensive lawsuits. Chatbots can provide incorrect medical advice that harms customers. Trading algorithms can cause massive financial losses during sudden market changes.

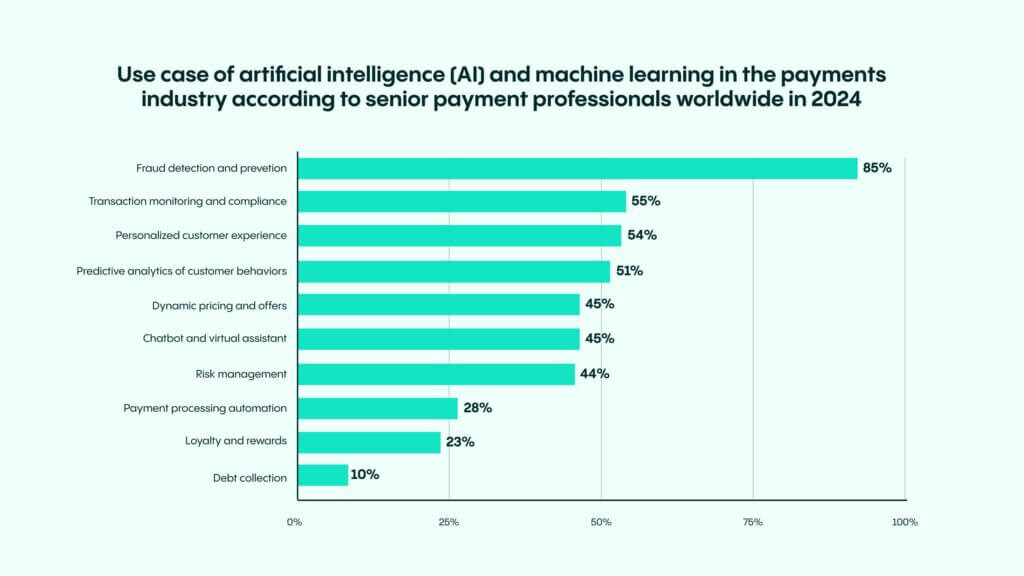

Image source: Veriff

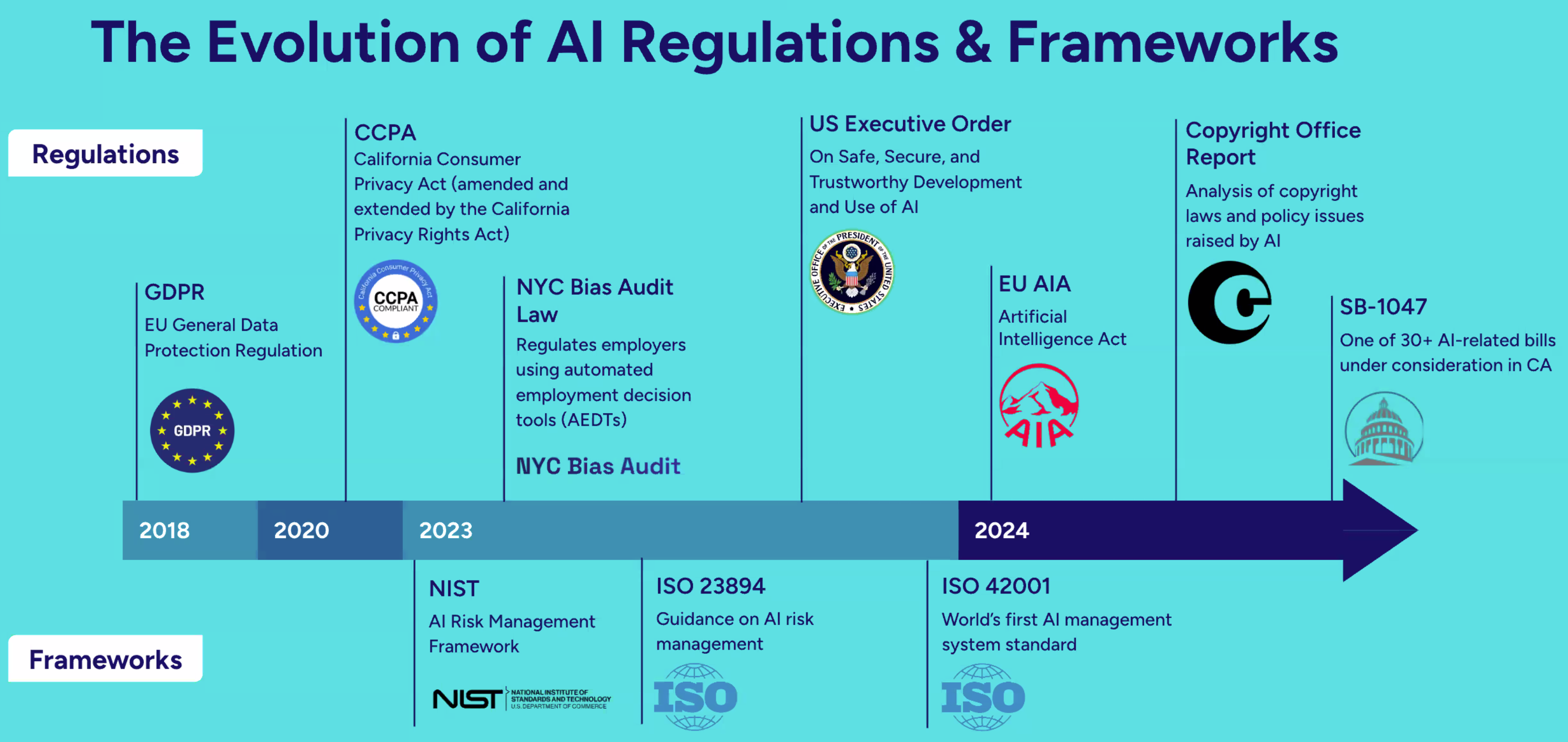

Regulatory compliance drives immediate action. The European Union AI Act entered into force in August 2024, with obligations phasing in over the following years, and it requires specific oversight for high-risk AI systems. Companies face fines of up to seven percent of global annual turnover for the most serious violations. Similar regulations are emerging worldwide, including sector-specific requirements in the United States.

Without governance frameworks, organizations lack systematic ways to identify and address these risks before they become costly incidents. Teams make decisions about AI systems without clear guidelines or accountability structures.

Stakeholder trust increasingly depends on transparent AI practices. Customers, employees, and investors evaluate companies based on their AI oversight capabilities when making decisions about purchases, employment, and investments.

Essential Components of AI Governance Frameworks

Every effective AI governance framework contains four key building blocks that work together to create structured oversight.

Governance Structure and Leadership

The governance structure establishes who makes decisions about AI and how those decisions flow through the organization. A senior executive, such as a Chief AI Officer, holds primary responsibility for AI governance outcomes across the entire company.

An AI governance committee serves as the main decision-making group. This committee includes people from technology, legal, risk management, and business operations departments. Committee members review AI projects, create policies, and resolve concerns about ethics or risks.

Governance champions work within individual departments as communication bridges between the central committee and day-to-day teams. These people conduct initial risk assessments and determine which projects need escalation to the main committee.

Policies and Standards Documentation

Written policies provide detailed guidance for AI development and deployment activities. Data governance policies specify how organizations collect, process, store, and protect information used in AI systems.

Model development standards establish technical requirements for building AI systems, including testing procedures and performance benchmarks. Deployment policies define requirements for moving AI systems from development into production environments.

Ethical guidelines establish principles for responsible AI development. These guidelines address bias prevention, fairness requirements, and transparency obligations that apply to all AI projects.

Risk Management Processes

Risk identification systematically catalogs potential problems that could arise from AI system operation. Organizations evaluate performance risks like model failures, data risks including quality issues, and security risks from cyberattacks.

Risk assessment procedures provide structured methods for evaluating how likely problems are and how severe their impact might be. Teams use scoring systems that consider both probability and consequences.

Risk mitigation strategies include:

- Technical controls — Automated monitoring systems and data validation procedures

- Human oversight — Requirements for people to review high-stakes AI decisions

- Response procedures — Clear steps for fixing problems when they occur

Stakeholder Engagement Mechanisms

Stakeholder engagement involves all parties who might affect or be affected by AI systems. Internal stakeholders include employees at all levels. External stakeholders include customers, suppliers, regulators, and community members.

Communication channels provide ways for stakeholders to raise concerns and participate in governance processes. Organizations establish surveys, focus groups, and digital platforms to accommodate different preferences.

Training programs build understanding of AI technologies and governance requirements across different audiences with appropriate levels of technical detail.

Building Your AI Governance Framework

Creating an AI governance framework follows seven systematic steps that balance innovation with responsible oversight.

Step 1: Assess Your Current AI Landscape

Start by cataloging all AI systems currently in use across your organization. Document customer service chatbots, recommendation engines, automated decision tools, and data analytics platforms. Identify which teams developed or purchased these systems and what data they access.

Evaluate existing oversight mechanisms. Examine current policies, approval processes, and monitoring procedures to identify gaps where AI activities operate without formal governance.
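As a rough illustration of this step, the inventory can be kept as structured records and scanned for systems that lack formal oversight. This is a minimal sketch: the `AISystemRecord` fields, the `find_governance_gaps` helper, and the sample systems are all hypothetical and would need to be adapted to your own organization.

```python
from dataclasses import dataclass, field


@dataclass
class AISystemRecord:
    """One entry in a hypothetical AI system inventory."""
    name: str
    owner_team: str                      # team that developed or purchased the system
    source: str                          # "built" or "purchased"
    data_accessed: list[str] = field(default_factory=list)
    has_approval_process: bool = False   # is there a formal approval step?
    has_monitoring: bool = False         # is the system monitored in production?


def find_governance_gaps(inventory: list[AISystemRecord]) -> dict[str, list[str]]:
    """Map each system name to the oversight mechanisms it is missing."""
    gaps: dict[str, list[str]] = {}
    for record in inventory:
        missing = []
        if not record.has_approval_process:
            missing.append("approval process")
        if not record.has_monitoring:
            missing.append("monitoring")
        if missing:
            gaps[record.name] = missing
    return gaps


# Illustrative sample data only
inventory = [
    AISystemRecord("support-chatbot", "Customer Service", "purchased",
                   ["chat transcripts"], has_approval_process=True, has_monitoring=True),
    AISystemRecord("resume-screener", "HR", "purchased",
                   ["applicant resumes"], has_approval_process=True),
    AISystemRecord("churn-model", "Analytics", "built", ["billing history"]),
]

for name, missing in find_governance_gaps(inventory).items():
    print(f"{name}: missing {', '.join(missing)}")
```

Even a spreadsheet can serve the same purpose. What matters is that every system has a named owner and a recorded oversight status.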

Step 2: Define Governance Objectives and Scope

Clear objectives provide direction for implementation efforts. Common goals include ensuring ethical AI use, maintaining regulatory compliance, managing operational risks, and building stakeholder trust.

Scope definition determines which AI activities fall under governance oversight. Some organizations begin with high-risk applications like hiring algorithms, while others implement comprehensive coverage from the start.

Step 3: Establish Governance Structure and Roles

Create an AI governance committee that includes representatives from technology, legal, risk management, and business operations. This committee makes policy decisions and provides strategic direction.

Designate governance champions within each business unit who serve as communication bridges between the central committee and operational teams. Assign a senior executive with authority to oversee governance implementation.

Step 4: Develop Comprehensive Policies and Procedures

Create policies that address data governance, model development standards, risk assessment procedures, and ethical guidelines. Include requirements for data quality validation, privacy protection, and documentation standards.

Specify when human oversight is required, what testing methods teams use, and how to document decisions for future review.

Step 5: Implement Risk Assessment Processes

Develop templates that evaluate technical performance risks, data-related issues, fairness concerns, security vulnerabilities, and stakeholder impacts. Create scoring systems that help teams quantify risk levels.

Establish review processes that match oversight intensity to risk levels. Low-risk applications might require basic documentation, while high-risk systems undergo comprehensive committee review.
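One way to make this step concrete is a weighted score that routes each project to a review tier. The sketch below is illustrative only: the weights, the 1 to 5 rating scale, the cut-off values, and the tier names (including the middle "champion review" tier) are assumptions, not prescribed values.

```python
# Hypothetical weights for the risk dimensions named in this step; they sum to 1.0.
RISK_WEIGHTS = {
    "technical": 0.20,
    "data": 0.20,
    "fairness": 0.25,
    "security": 0.20,
    "stakeholder_impact": 0.15,
}


def composite_risk_score(ratings: dict[str, int]) -> float:
    """Weighted average of 1-5 ratings across the risk dimensions."""
    missing = [d for d in RISK_WEIGHTS if d not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {', '.join(missing)}")
    for dimension in RISK_WEIGHTS:
        if not 1 <= ratings[dimension] <= 5:
            raise ValueError(f"{dimension} rating must be between 1 and 5")
    return sum(weight * ratings[dim] for dim, weight in RISK_WEIGHTS.items())


def review_tier(score: float) -> str:
    """Match oversight intensity to the risk score (assumed cut-offs)."""
    if score >= 3.5:
        return "committee review"
    if score >= 2.0:
        return "champion review"
    return "basic documentation"


hiring_tool = {"technical": 3, "data": 4, "fairness": 5,
               "security": 3, "stakeholder_impact": 5}
score = composite_risk_score(hiring_tool)
print(f"score={score:.2f}, tier={review_tier(score)}")
```

A weighted average is only one option. Some teams prefer to escalate whenever any single dimension is rated at the top of the scale, regardless of the average.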

Step 6: Deploy Monitoring and Auditing Systems

Set up systems that track model accuracy, detect data changes, identify performance problems, and monitor resource usage. Create automated alerts that notify teams when metrics exceed acceptable limits.

Implement compliance monitoring through regular audits and documentation reviews. Establish incident response procedures for rapid problem identification and resolution.
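The automated alerts described in this step can be sketched as a simple threshold check. The metric names, limits, and the `check_metrics` helper below are hypothetical; a production setup would usually sit on top of an existing monitoring stack rather than a standalone script.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Threshold:
    """Acceptable range for one monitored metric (either bound may be omitted)."""
    metric: str
    minimum: Optional[float] = None
    maximum: Optional[float] = None


def check_metrics(metrics: dict[str, float], thresholds: list[Threshold]) -> list[str]:
    """Return one alert message per metric that is missing or out of range."""
    alerts = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            alerts.append(f"{t.metric}: no value reported")
        elif t.minimum is not None and value < t.minimum:
            alerts.append(f"{t.metric}: {value} is below minimum {t.minimum}")
        elif t.maximum is not None and value > t.maximum:
            alerts.append(f"{t.metric}: {value} is above maximum {t.maximum}")
    return alerts


# Illustrative limits only
thresholds = [
    Threshold("accuracy", minimum=0.90),
    Threshold("latency_ms", maximum=200.0),
    Threshold("drift_score", maximum=0.2),
]
latest = {"accuracy": 0.88, "latency_ms": 120.0}

for alert in check_metrics(latest, thresholds):
    print("ALERT:", alert)
```

Treating a missing metric as an alert is a deliberate choice here: a monitor that silently stops reporting is itself a governance gap.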

Step 7: Launch Training and Change Management Programs

Provide technical teams with detailed guidance on implementing governance requirements in their workflows. Give business users sufficient understanding to participate effectively without becoming technical experts.

Address concerns about additional processes and provide clear guidance on new procedures. Celebrate early successes that demonstrate governance value.

AI Risk Assessment and Management

AI risk assessment involves systematically identifying and controlling potential problems that can arise from artificial intelligence systems. Organizations use structured approaches to understand what could go wrong and prevent these problems before they occur.

Risk Categories

AI risks fall into four main types:

- Technical risks — Model performance problems, data quality issues, system failures, and cybersecurity vulnerabilities

- Ethical risks — Algorithmic bias, lack of transparency, privacy violations, and discrimination against protected groups

- Legal risks — Regulatory violations, liability for AI decisions, data protection breaches, and employment law issues

- Operational risks — Business disruption, workforce impacts, vendor dependency, and reputation damage

Technical risks often show up as degraded system performance over time. Models become less accurate as real-world conditions change from training environments. Data quality problems emerge when input information contains errors or reflects sampling biases.
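One common way to quantify how far live inputs have moved from training conditions is the population stability index (PSI), which compares the binned distribution of a feature at training time with its distribution in production. The sketch below is a minimal pure-Python version under stated assumptions: equal-width bins taken from the training range, a small floor `eps` to avoid division by zero, and synthetic sample data.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i).

    expected: feature values seen at training time
    actual:   feature values seen in production
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all expected values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into the edge bins
            counts[idx] += 1
        # Floor each proportion at eps so empty bins do not break the logarithm
        return [max(c / len(values), eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic data: production values shifted toward the upper half of the training range
training = [i / 100 for i in range(100)]
production = [0.5 + i / 200 for i in range(100)]

print(f"PSI vs. itself:     {population_stability_index(training, training):.3f}")
print(f"PSI vs. production: {population_stability_index(training, production):.3f}")
```

A commonly cited rule of thumb treats a PSI above roughly 0.25 as a significant shift, but any alerting threshold should be validated against your own data.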

Ethical risks arise when AI systems produce unfair outcomes or violate social values. Bias can become embedded through training data that reflects historical discrimination or through design choices that favor certain groups.
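A simple first check for unfair outcomes compares selection rates across groups. The sketch below computes an impact ratio, each group's selection rate divided by the highest group's rate. The group names and counts are made up for illustration. The 0.8 cut-off reflects the "four-fifths" rule of thumb used in US employment contexts and is a screening heuristic, not a legal determination.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """outcomes maps group name -> (number selected, total number evaluated)."""
    return {group: selected / total for group, (selected, total) in outcomes.items()}


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selections; impact ratio is undefined")
    return {group: rate / best for group, rate in rates.items()}


# Illustrative counts only
outcomes = {"group_a": (50, 100), "group_b": (30, 100)}

for group, ratio in impact_ratios(outcomes).items():
    flag = "review" if ratio < 0.8 else "ok"
    print(f"{group}: impact ratio {ratio:.2f} ({flag})")
```

A low ratio does not prove discrimination and a high one does not rule it out. It is a signal that a system deserves closer review.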

Assessment Methods

Organizations use structured frameworks to evaluate AI risks consistently across different projects. The probability-impact matrix serves as a fundamental tool where teams assess how likely each risk is and estimate potential consequences.

Quantitative methods assign numerical values using statistical models and historical data. Qualitative approaches use expert judgment for risks that resist numerical measurement. Scenario-based assessment examines how AI systems might perform under various stress conditions.
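The probability-impact matrix described above can be sketched in a few lines. The 1 to 5 scales and the cut-offs separating low, medium, and high are assumptions for illustration; organizations typically calibrate both to their own risk appetite.

```python
def risk_level(probability: int, impact: int) -> str:
    """Classify a risk from 1-5 probability and impact ratings (assumed cut-offs)."""
    for name, value in (("probability", probability), ("impact", impact)):
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5")
    score = probability * impact
    if score >= 15:
        return "high"
    if score >= 5:
        return "medium"
    return "low"


# Print the full matrix: rows are probability (5 at the top), columns are impact (1 to 5)
for probability in range(5, 0, -1):
    row = [risk_level(probability, impact) for impact in range(1, 6)]
    print(probability, " ".join(f"{cell:<6}" for cell in row))
```

Multiplying the two ratings is the simplest convention. With these cut-offs, a rare but severe risk (probability 1, impact 5) lands in "medium" rather than "low", which keeps it visible to reviewers.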

Mitigation Strategies

Risk mitigation combines multiple approaches:

- Technical controls — Automated monitoring, data validation, and model testing procedures

- Procedural safeguards — Human oversight requirements and approval workflows

- Organizational oversight — Training programs and incident response procedures

Technical controls embed risk management directly into AI system operations. Automated monitoring tracks performance metrics in real time and flags anomalies, such as a sudden drop in accuracy, that may indicate emerging issues.

Human oversight ensures qualified personnel review high-stakes decisions before implementation. Approval workflows create checkpoints where stakeholders can evaluate recommendations and intervene when necessary.
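An approval checkpoint of this kind can be expressed as a small gate in code. The sketch below is hypothetical: the `Decision` fields and the `approve` and `execute` helpers are illustrative names, and a real workflow would also record timestamps, reviewer qualifications, and the reasons for each decision.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    """An AI recommendation awaiting action."""
    subject: str
    recommendation: str
    high_stakes: bool
    approved_by: Optional[str] = None  # reviewer who signed off, if any


def approve(decision: Decision, reviewer: str) -> None:
    """Record a human reviewer's sign-off."""
    decision.approved_by = reviewer


def can_execute(decision: Decision) -> bool:
    """Low-stakes decisions proceed automatically; high-stakes ones need sign-off."""
    return not decision.high_stakes or decision.approved_by is not None


def execute(decision: Decision) -> str:
    if not can_execute(decision):
        raise PermissionError(f"{decision.subject}: human review required before action")
    return f"{decision.subject}: {decision.recommendation}"


loan = Decision("application-1042", "decline", high_stakes=True)
print(can_execute(loan))   # blocked until a reviewer signs off
approve(loan, "credit-review-team")
print(execute(loan))
```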

AI Compliance and Regulatory Requirements

Organizations implementing AI systems face complex regulatory requirements that vary by location, industry, and application type. Understanding these requirements helps build governance frameworks that satisfy legal obligations while maintaining operational flexibility.

Current Regulations

The European Union AI Act is the most comprehensive AI regulation adopted to date. It sorts AI systems into four risk tiers (prohibited, high-risk, limited-risk, and minimal-risk), each with corresponding compliance requirements.

High-risk AI systems include applications in employment, education, healthcare, and law enforcement. These systems require risk management documentation, data governance procedures, and human oversight protocols.

The United States takes a sector-specific approach. The Federal Trade Commission provides guidance on AI bias and consumer protection. The FDA regulates AI medical devices, while financial regulators address algorithmic lending decisions.

State and local regulations add complexity:

- California’s SB 1001 requires disclosure when bots are used to communicate with people online to incentivize a sale or influence a vote

- New York City Local Law 144 mandates bias audits for employment tools

- Illinois BIPA affects AI systems processing biometric data

Compliance Integration

Effective compliance begins with mapping regulatory requirements to organizational AI activities. Legal teams collaborate with technical groups to translate requirements into operational procedures.

Risk-based approaches align compliance efforts with regulatory expectations while managing resources efficiently. High-risk systems receive enhanced oversight, while lower-risk applications follow streamlined processes.

Cross-functional compliance teams ensure comprehensive coverage. Legal counsel provides regulatory interpretation, technical teams implement required controls, and business units contribute operational context.

Documentation Requirements

Comprehensive documentation captures information required by regulatory frameworks. AI system documentation includes technical specifications, process documentation, and risk assessments.

Required documentation typically covers:

- Technical specifications describing model architecture and performance metrics

- Risk assessments evaluating potential harms and mitigation measures

- Data governance tracking sources, processing activities, and retention periods

- Monitoring records demonstrating ongoing compliance verification
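A documentation checklist like the one above lends itself to an automated completeness check. In this sketch the document type identifiers, the `missing_documents` helper, and the sample systems are hypothetical; the four required types simply mirror the list above.

```python
# Hypothetical identifiers for the four document types listed above
REQUIRED_DOCUMENTS = (
    "technical_specification",
    "risk_assessment",
    "data_governance_record",
    "monitoring_record",
)


def missing_documents(system_docs: dict[str, set[str]]) -> dict[str, list[str]]:
    """Map each AI system to the required document types it does not yet have."""
    report = {}
    for system, docs in system_docs.items():
        missing = [doc for doc in REQUIRED_DOCUMENTS if doc not in docs]
        if missing:
            report[system] = missing
    return report


# Illustrative sample data only
system_docs = {
    "resume-screener": {"technical_specification", "risk_assessment",
                        "data_governance_record", "monitoring_record"},
    "churn-model": {"technical_specification"},
}

for system, missing in missing_documents(system_docs).items():
    print(f"{system}: missing {', '.join(missing)}")
```

Running a check like this before each audit cycle makes gaps visible early, while they are still cheap to fix.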

Record retention policies align with regulatory requirements while supporting operational needs. Organizations establish systematic approaches to document lifecycle management, including creation standards and disposal protocols.

Common Implementation Challenges and Solutions

Organizations face predictable obstacles when implementing AI governance frameworks. Understanding these challenges helps teams prepare appropriate strategies and set realistic expectations.

Typical Challenges

Resistance to change represents the most frequent barrier. Technical teams may view governance as bureaucratic overhead that slows innovation. Business units often resist new approval processes that delay project launches.

Resource constraints create implementation difficulties across multiple dimensions. Many organizations underestimate the human resources required, particularly time investment for training and ongoing monitoring activities.

Technical complexity introduces challenges that existing IT governance frameworks may not adequately address. AI systems operate differently from traditional software, requiring specialized expertise for effective oversight.

Success Factors

Executive sponsorship provides organizational authority and resource allocation necessary for implementation. Senior leaders demonstrate visible commitment through policy endorsement and consistent messaging about responsible AI development.

Cross-functional collaboration ensures frameworks address the full range of organizational perspectives. Effective committees include representatives from technology, legal, risk management, and business operations.

Key success elements include:

- Clear communication strategies that build understanding across stakeholder groups

- Pilot project approaches that test procedures with limited scope

- Training programs that provide appropriate knowledge for different roles

- Metrics that track implementation progress and demonstrate value

Frequently Asked Questions

How long does AI governance framework implementation typically take?

Implementation timelines vary based on organizational size and complexity. Small organizations with limited AI deployments can establish basic frameworks in three to six months. Medium-sized companies typically require six to twelve months for comprehensive structures. Large enterprises often need twelve to eighteen months for full implementation across multiple business units.

What specific roles handle day-to-day AI governance activities?

Data scientists implement bias detection and conduct fairness assessments in their models. Software engineers integrate governance controls into system architecture and maintain monitoring capabilities. Product managers ensure AI features align with governance policies and coordinate stakeholder communication. IT operations teams monitor system performance and maintain security controls.

How do organizations govern third-party AI tools and vendor solutions?

Third-party AI governance extends policies to cover vendor selection and ongoing oversight. Due diligence evaluates vendor governance practices and compliance capabilities before procurement. Contract negotiations establish specific requirements, including data handling standards and incident reporting obligations. Organizations remain responsible for oversight even when using external services, and they exercise it through regular vendor assessments and performance reviews.

What documentation do auditors typically review during AI governance assessments?

Auditors examine risk assessment records, policy compliance documentation, training completion records, and incident response logs. They review technical specifications, data governance procedures, monitoring system outputs, and stakeholder engagement records. Documentation verification includes checking approval workflows, escalation procedures, and corrective action tracking.

How often do AI governance policies require updates due to regulatory changes?

Governance frameworks typically call for a comprehensive review each year and a quarterly assessment of emerging risks. Trigger events such as new regulations, major AI deployments, or significant incidents require immediate updates regardless of scheduled cycles. Because AI regulation is still evolving, particularly around generative AI, policies may need more frequent updates than traditional IT governance frameworks.

Can organizations with limited budgets implement effective AI governance?

Smaller organizations can implement effective governance by focusing on essential elements and scaling approaches to match resources. Risk-based approaches prioritize activities based on AI system criticality. Simplified policies address core requirements without excessive complexity. Phased implementation allows gradual capacity building, while external resources like industry frameworks and consulting support can supplement internal capabilities.