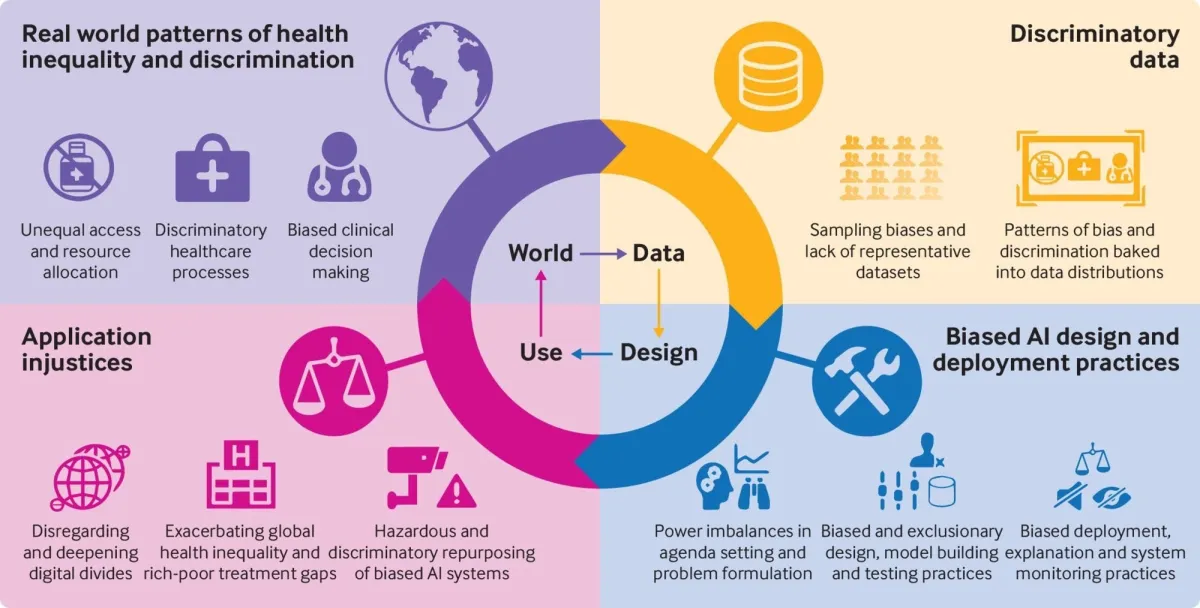

Artificial intelligence bias occurs when AI systems produce unfair or discriminatory outcomes that reflect societal inequalities or technical flaws in data and algorithms. Organizations deploy AI systems in critical areas like healthcare, hiring, and lending, where biased decisions can harm individuals and communities. The consequences of biased AI range from denied job opportunities to inadequate medical care for certain groups.

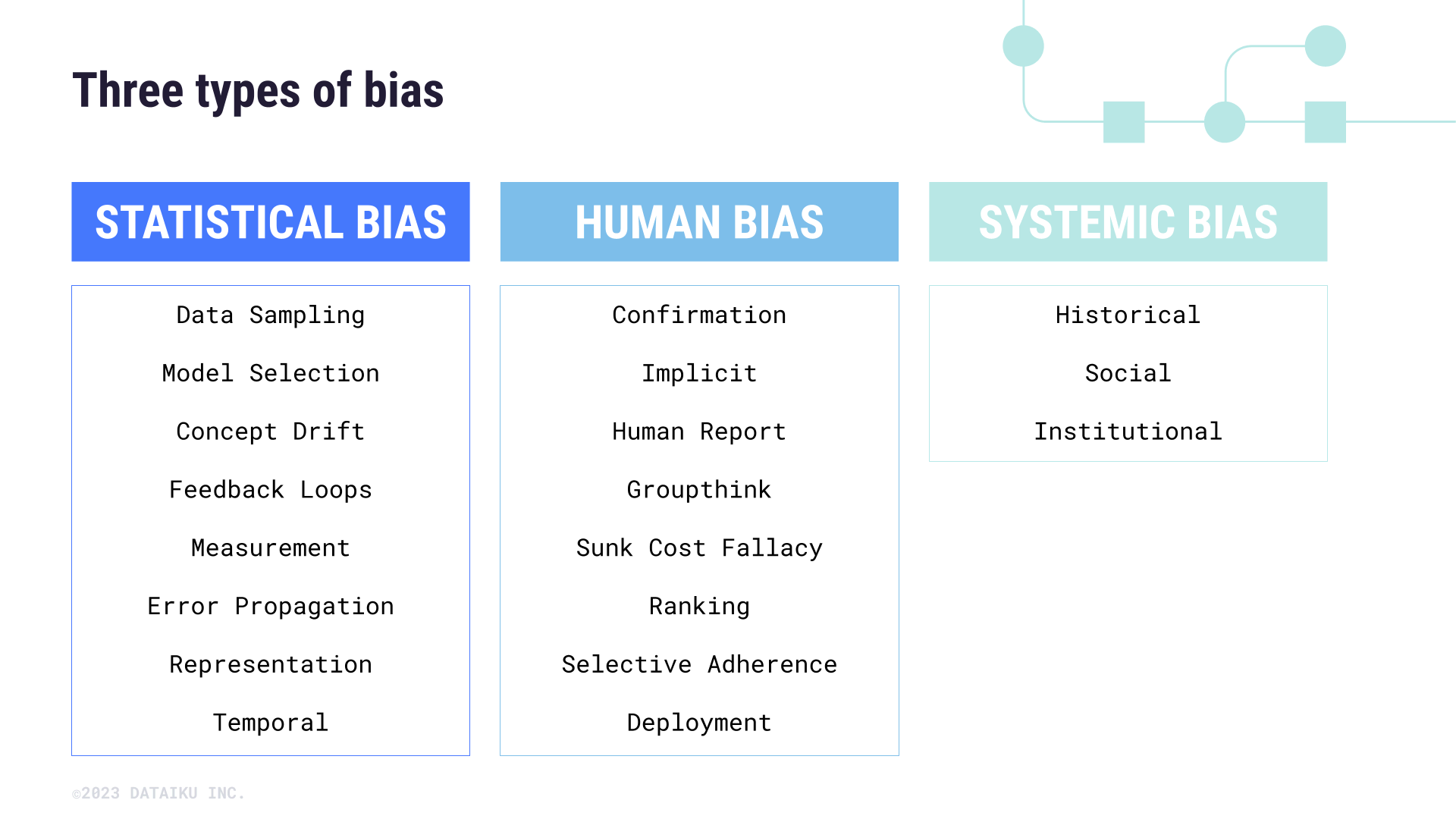

The National Institute of Standards and Technology (NIST) identifies three main categories of AI bias: systemic bias reflecting societal inequalities, statistical and computational bias from flawed data or algorithms, and human bias introduced during development. These bias types often interact throughout the AI development process, creating complex challenges for organizations. Modern AI systems require comprehensive approaches that address technical, organizational, and social factors contributing to unfair outcomes.

Source: Dataiku Knowledge Base

Prevention strategies span the entire AI lifecycle, from initial data collection through ongoing monitoring of deployed systems. Technical solutions alone can’t eliminate bias. Organizations use governance frameworks, diverse teams, and continuous oversight to build fair AI systems. Implementing proper AI maturity assessments helps companies understand their current capabilities and develop appropriate bias prevention strategies.

Source: ResearchGate

The stakes for bias prevention continue rising as AI adoption accelerates across industries. Regulatory frameworks like the EU AI Act now mandate bias assessments for high-risk AI applications. Organizations that fail to address bias face legal liability, reputational damage, and harm to the communities they serve.

Understanding AI Bias and Its Real-World Impact

AI bias emerges when artificial intelligence systems produce unfair or discriminatory outcomes that systematically favor or disadvantage certain groups of people. These biased results happen when AI models make decisions based on patterns in training data that reflect historical discrimination or when algorithms are designed in ways that inadvertently treat different groups unequally.

Biased AI systems create significant risks for organizations across multiple areas. Legal risks emerge when AI decisions violate anti-discrimination laws, leading to costly lawsuits and regulatory penalties. Reputational damage occurs when biased AI systems generate negative publicity and erode public trust in the organization.

Real-world examples demonstrate how AI bias manifests in practice. An experimental hiring algorithm at Amazon showed bias against women by penalizing resumes that included words like “women’s” because the system learned from historical hiring data that predominantly featured male employees. Healthcare algorithms have exhibited racial bias by using healthcare spending as a proxy for medical need. Because Black patients historically received less healthcare due to systemic barriers, less was spent on them at the same level of illness, so these algorithms underestimated their medical need.

Source: Research AIMultiple

AI bias is also commonly grouped by where it enters a system, in three primary categories:

- Algorithmic bias — unfairness that emerges from the design and structure of machine learning algorithms themselves, such as optimization functions that prioritize overall accuracy while ignoring performance disparities across demographic groups

- Data bias — discrimination that results from training datasets that are unrepresentative, incomplete, or contain historical patterns of discrimination, such as hiring data that reflects past exclusion of certain groups

- Cognitive bias — human prejudices and assumptions that influence AI development decisions, from problem definition through data collection to model interpretation, often reflecting unconscious biases of development teams

Understanding these bias types becomes crucial when implementing AI agents in sales or other customer-facing applications where unfair treatment could significantly impact business relationships and customer experience.

Identifying Bias Before It Causes Harm

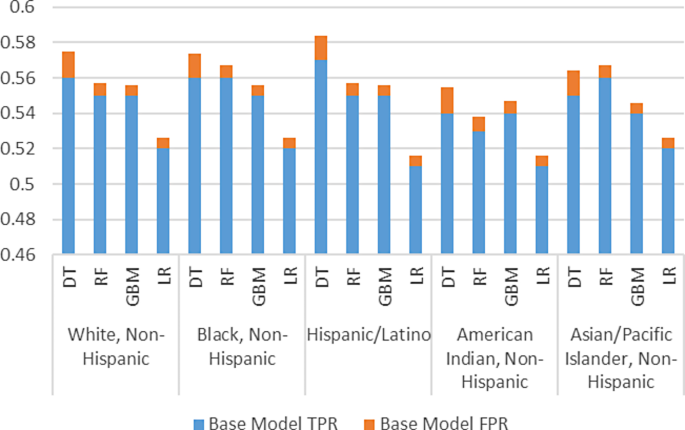

Identifying bias in AI systems requires understanding performance patterns and recognizing when algorithms produce unfair results for different groups of people. Performance disparities across demographic groups serve as the primary indicator of biased AI systems. When an AI model achieves 95 percent accuracy for one racial group but only 75 percent accuracy for another, this disparity signals potential bias that requires investigation.

Source: BMC Medical Informatics and Decision Making
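The accuracy comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not a full audit: the function names, group labels, and toy data are all hypothetical, chosen so the numbers mirror the 95 percent versus 75 percent example.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy}, computed separately for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}

def accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    return max(per_group.values()) - min(per_group.values())

# Hypothetical toy data: 20 records per group, all with true label 1.
y_true = [1] * 40
y_pred = [1] * 19 + [0] * 1 + [1] * 15 + [0] * 5
groups = ["group_a"] * 20 + ["group_b"] * 20

per_group = accuracy_by_group(y_true, y_pred, groups)
gap = accuracy_gap(per_group)
print(per_group, round(gap, 2))
```

A gap this large does not prove discrimination on its own, but it is the kind of signal that should trigger a closer look at the data and the model.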

Unexpected patterns in AI outputs often reveal underlying bias issues that aren’t immediately obvious from aggregate performance metrics. An AI system might produce results that correlate strongly with protected characteristics even when those characteristics weren’t directly included in the training data. Geographic patterns can also indicate bias — if a credit scoring algorithm consistently assigns lower scores to applicants from certain ZIP codes that correspond to minority communities, this pattern suggests the system may be using location as a proxy for race.
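One simple way to look for the proxy effect described above is to correlate model outputs with a protected attribute that is held out for auditing only. The sketch below uses a plain Pearson correlation. The scores, the `is_protected` flags, and the function name are hypothetical, and a real audit would likely use larger samples and more than one statistic.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric sequences.

    Assumes both sequences vary; a constant sequence would divide by zero.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical audit sample: credit scores produced by a model that never
# saw the protected attribute, plus that attribute collected for auditing.
scores = [720, 705, 690, 640, 630, 615]
is_protected = [0, 0, 0, 1, 1, 1]

r = pearson(scores, is_protected)
print(f"correlation between score and protected attribute: {r:.2f}")
```

A strongly negative or positive value suggests that some input, such as ZIP code, may be standing in for the protected characteristic.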

Training data is often the most significant source of bias in AI systems because algorithms learn patterns from historical examples. When training datasets don’t accurately represent the diversity of populations the AI system will serve, the resulting models perform poorly for underrepresented groups. Historical data often reflects past discrimination and societal inequalities that become embedded in AI systems.

Organizations can identify potentially biased AI systems by monitoring specific indicators that suggest unequal treatment across demographic groups:

- Unequal error rates across groups — A loan approval algorithm that falsely rejects qualified minority applicants at twice the rate of qualified white applicants

- Disparate impact in outcomes — A hiring AI that recommends male candidates for technical positions 80 percent of the time despite receiving resumes from equally qualified men and women

- Performance degradation for specific populations — A voice recognition system that works accurately for native speakers but struggles with accented English

- Unexpected correlations with protected characteristics — An insurance pricing algorithm whose outputs correlate strongly with applicant race despite not directly using racial information
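The first two indicators in the list above can be turned into simple ratios. The following sketch assumes binary "qualified" and "approved" flags per applicant. The helper names and the toy data are hypothetical and were chosen so that one group is falsely rejected at twice the rate of the other.

```python
def false_rejection_rate(qualified, approved):
    """Share of qualified applicants who were rejected."""
    rejected_qualified = sum(1 for q, a in zip(qualified, approved) if q and not a)
    total_qualified = sum(1 for q in qualified if q)
    return rejected_qualified / total_qualified

def selection_rate(approved):
    """Share of all applicants who were approved."""
    return sum(approved) / len(approved)

# Hypothetical data: ten qualified applicants in each group.
group_a = {"qualified": [1] * 10, "approved": [1] * 9 + [0] * 1}
group_b = {"qualified": [1] * 10, "approved": [1] * 8 + [0] * 2}

frr_a = false_rejection_rate(group_a["qualified"], group_a["approved"])
frr_b = false_rejection_rate(group_b["qualified"], group_b["approved"])
error_rate_ratio = frr_b / frr_a

# Ratio of selection rates, a common way to express disparate impact.
impact_ratio = selection_rate(group_b["approved"]) / selection_rate(group_a["approved"])

print(f"false rejection ratio: {error_rate_ratio:.2f}, impact ratio: {impact_ratio:.2f}")
```

Which ratio counts as unacceptable is a policy and legal question, so the sketch deliberately reports the numbers without applying a cutoff.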

These identification challenges become particularly important when implementing AI for sales prospecting where biased algorithms could unfairly exclude qualified prospects or misrepresent market opportunities.

Technical Strategies to Prevent Bias

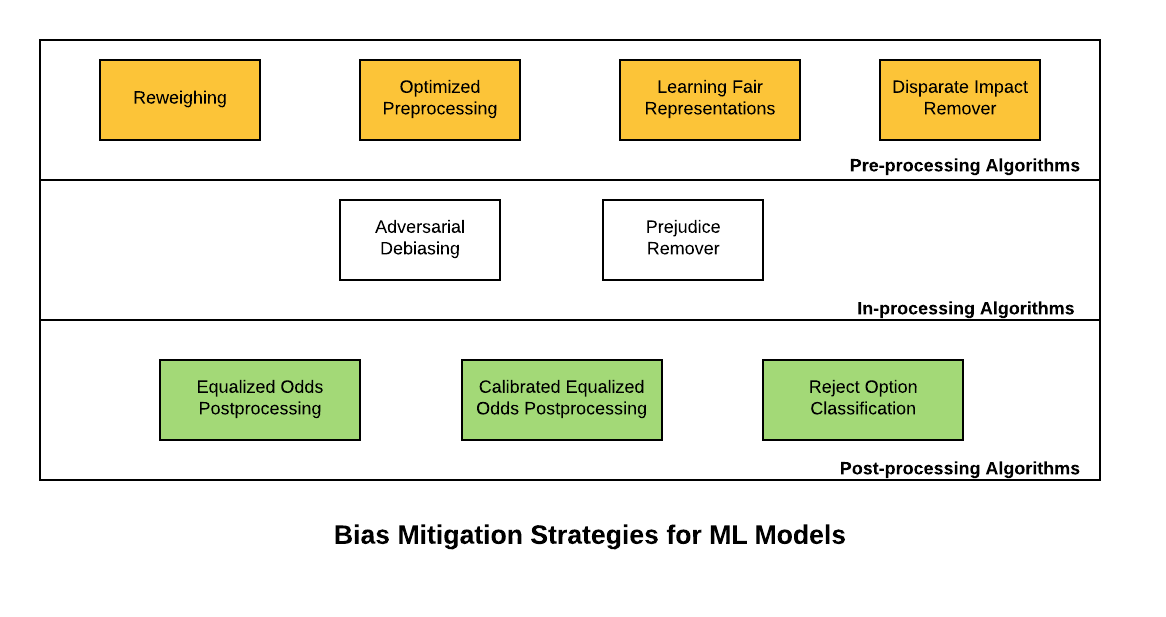

Organizations can implement three main technical approaches to prevent AI bias at different stages of the machine learning process. Each method addresses bias at a specific point in the AI development lifecycle, and teams often combine multiple techniques to achieve the best results.

Source: Analytics Yogi

Pre-processing methods fix bias problems in training data before the AI model begins learning. These techniques recognize that biased training data creates biased AI systems regardless of how sophisticated the algorithms are. With reweighting, organizations assign higher importance to underrepresented groups in their datasets by giving these samples more weight during training. With oversampling or data augmentation, teams expand their datasets by creating additional examples of underrepresented groups.
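As a rough illustration of giving underrepresented samples more weight, the sketch below computes inverse-frequency weights so that every group contributes the same total weight. The function name and the toy group labels are hypothetical. Many training APIs accept per-sample weights, but how they are passed depends on the library in use.

```python
from collections import Counter

def balanced_sample_weights(groups):
    """Weight each sample by n / (k * group_count).

    n is the number of samples and k the number of groups, so every
    group ends up with the same total weight (n / k).
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]

# Hypothetical dataset: group "b" is underrepresented (2 of 10 samples).
groups = ["a"] * 8 + ["b"] * 2
weights = balanced_sample_weights(groups)

total_a = sum(w for w, g in zip(weights, groups) if g == "a")
total_b = sum(w for w, g in zip(weights, groups) if g == "b")
print(weights[0], weights[-1], total_a, total_b)
```

The weights sum to the original sample count, so the overall scale of the training loss stays roughly the same while the balance between groups changes.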

In-processing techniques modify the learning algorithms themselves to build fairness directly into the model during training. These methods balance accuracy and fairness from the beginning rather than trying to fix bias after training is complete. Adversarial debiasing uses two competing neural networks during training: the main model learns to make accurate predictions while a secondary “adversary” network tries to guess protected attributes from the main model’s internal representations. The main model is penalized whenever the adversary succeeds, which pushes it toward representations that carry little information about protected attributes.

Post-processing methods adjust AI outputs after the model makes its initial decisions to ensure fair results across different groups. These techniques work with existing trained models without requiring retraining. Organizations apply different decision thresholds to different demographic groups to equalize specific fairness metrics.
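A minimal sketch of group-specific thresholds follows. It equalizes selection rates by picking, for each group, the score cutoff that selects roughly the same share of that group. The function names, the target rate of 40 percent, and the scores are hypothetical, and other fairness metrics would call for a different way of choosing the cutoffs.

```python
def threshold_for_rate(scores, target_rate):
    """Return the cutoff that selects about target_rate of the scores.

    A score is selected when it is >= the cutoff. Tied scores at the
    cutoff can push the realized rate slightly above the target.
    """
    ranked = sorted(scores, reverse=True)
    k = max(1, round(target_rate * len(ranked)))
    return ranked[k - 1]

def apply_group_thresholds(scores, groups, thresholds):
    """Return 1/0 decisions using each record's group-specific cutoff."""
    return [int(s >= thresholds[g]) for s, g in zip(scores, groups)]

# Hypothetical model scores; group "b" is scored lower overall.
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.6, 0.5, 0.4, 0.3, 0.2]
groups = ["a"] * 5 + ["b"] * 5

by_group = {}
for s, g in zip(scores, groups):
    by_group.setdefault(g, []).append(s)

thresholds = {g: threshold_for_rate(v, 0.4) for g, v in by_group.items()}
decisions = apply_group_thresholds(scores, groups, thresholds)
print(thresholds, decisions)
```

Because the trained model is untouched, this kind of adjustment can be added or removed without retraining.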

The choice between pre-processing, in-processing, and post-processing depends on several factors including data availability, model architecture constraints, and business requirements. Organizations with full control over their training data collection often benefit most from pre-processing techniques. Teams that work with fixed datasets or third-party data sources may find post-processing methods more practical.

These technical strategies become essential when developing AI agents that interact directly with customers, where bias could significantly impact user experiences and business outcomes.

Building Governance Frameworks for Fair AI

Organizations prevent AI bias through systematic governance structures that embed fairness into every stage of AI development and deployment. Strong governance creates accountability, establishes clear standards, and ensures consistent approaches across all AI initiatives.

Source: ResearchGate

AI ethics committees provide dedicated oversight for fairness decisions in artificial intelligence projects. These committees review AI initiatives, assess bias risks, and ensure alignment with organizational values and legal requirements. Effective committees include representatives from diverse functions and backgrounds — technical members bring expertise in machine learning and bias detection methods, while legal representatives ensure compliance with anti-discrimination laws.

Organizations assign specific bias prevention responsibilities across different levels and functions. Senior leadership sets the overall tone and culture around responsible AI use. Data science and engineering teams implement technical bias mitigation measures during model development, testing, and deployment. Product managers and business owners define fairness requirements for AI systems they sponsor.

Comprehensive policies provide written standards and procedures for preventing AI bias across the organization. These policies specify what constitutes acceptable levels of bias for different applications and establish consistent approaches across projects. Bias prevention policies define when bias assessments are required, typically for AI systems that make decisions affecting people in areas like employment, lending, healthcare, or criminal justice.

Companies implementing AI consulting services often find that establishing robust governance frameworks early in their AI journey prevents costly bias issues later and builds stakeholder confidence in their responsible AI practices.

Testing AI Systems for Bias

Organizations test AI models for bias through systematic evaluation processes that examine how algorithms perform across different groups of people. Bias testing involves running AI systems through scenarios that reveal whether the technology treats people fairly regardless of characteristics like race, gender, age, or income level.

Source: Nature

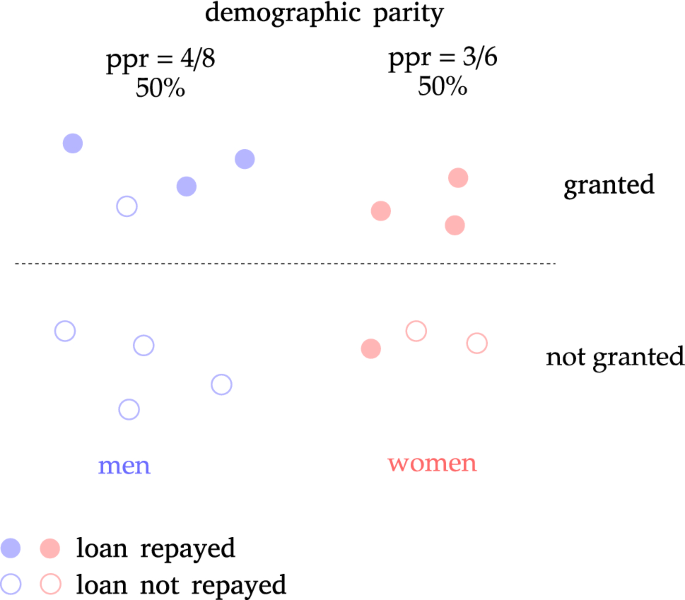

Fairness metrics provide mathematical ways to measure whether AI systems treat different groups equitably. Organizations use multiple metrics because fairness can be defined in various ways, and different metrics may reveal different types of bias problems. Demographic parity measures whether positive outcomes occur at equal rates across different groups. Equalized odds compares error rates rather than overall outcome rates: it asks whether true positive rates and false positive rates are equal across groups.
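The two metrics named above can be computed from per-group rates, as in the sketch below. The helpers are local, hypothetical functions written for this example rather than calls into any particular fairness library. The toy data is chosen so that demographic parity holds while equalized odds does not, which is one reason to report more than one metric.

```python
def rate(numerator, denominator):
    """Safe division that returns 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0

def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate, and false positive rate."""
    stats = {}
    for g in set(groups):
        rows = [(t, p) for t, p, gg in zip(y_true, y_pred, groups) if gg == g]
        tp = sum(1 for t, p in rows if t == 1 and p == 1)
        fp = sum(1 for t, p in rows if t == 0 and p == 1)
        pos = sum(1 for t, _ in rows if t == 1)
        neg = len(rows) - pos
        stats[g] = {
            "selection_rate": rate(tp + fp, len(rows)),
            "tpr": rate(tp, pos),
            "fpr": rate(fp, neg),
        }
    return stats

def spread(stats, key):
    """Difference between the highest and lowest value of one rate."""
    values = [s[key] for s in stats.values()]
    return max(values) - min(values)

def demographic_parity_difference(stats):
    return spread(stats, "selection_rate")

def equalized_odds_difference(stats):
    # Report the larger of the TPR gap and the FPR gap.
    return max(spread(stats, "tpr"), spread(stats, "fpr"))

# Hypothetical labels (1 = qualified) and predictions (1 = approved).
y_true = [1, 1, 0, 0, 1, 1, 1, 0]
y_pred = [1, 1, 0, 0, 1, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

stats = group_rates(y_true, y_pred, groups)
dp_diff = demographic_parity_difference(stats)
eo_diff = equalized_odds_difference(stats)
print(dp_diff, round(eo_diff, 3))
```

Here both groups are approved at the same overall rate, yet qualified members of group "b" are approved less often than qualified members of group "a".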

Cross-group performance analysis involves calculating AI system accuracy and error rates separately for each demographic group to identify performance disparities. Organizations create testing frameworks that systematically evaluate how well AI models work for different populations, revealing whether certain groups experience higher rates of incorrect predictions.

Stakeholder testing involves engaging people who will be affected by AI systems in evaluation processes to identify bias issues that technical analysis might miss. Organizations invite representatives from different demographic groups to interact with AI systems and provide feedback about their experiences, particularly focusing on whether systems seem to treat them fairly.

This testing becomes critical when deploying AI in call centers where biased systems could provide unequal service quality to different customer segments, potentially damaging relationships and creating legal liability.

Monitoring Deployed AI Systems

AI systems can develop bias problems after deployment, even when they performed fairly during initial testing. Real-world environments change constantly, and these changes can cause previously fair systems to produce discriminatory outcomes over time. Data drift occurs when the characteristics of incoming data change from what the model learned during training.

Source: ResearchGate
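One common way to quantify the data drift described above is the population stability index (PSI), which compares how a feature is distributed across bins in training data versus live data. PSI is not named in this article. It is used here only as an illustration, and the bin edges, the age values, and the function name are hypothetical.

```python
import math

def population_stability_index(expected, actual, bin_edges):
    """PSI between a baseline sample and a live sample of one feature.

    Values are bucketed by bin_edges (ascending). A result of 0 means
    the binned distributions are identical; larger values mean more drift.
    """
    def shares(values):
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            idx = sum(1 for edge in bin_edges if v >= edge)
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical applicant ages at training time and after deployment.
training_ages = [25, 30, 35, 40, 45, 50, 55, 60]
live_ages = [45, 50, 55, 60, 62, 65, 68, 70]
edges = [35, 50]

psi_same = population_stability_index(training_ages, training_ages, edges)
psi_shifted = population_stability_index(training_ages, live_ages, edges)
print(psi_same, round(psi_shifted, 2))
```

Running the same check separately for each demographic group can show whether drift is concentrated in one population.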

Automated monitoring systems track AI performance across different demographic groups in real-time. These systems calculate fairness metrics continuously as the AI system makes decisions, comparing current performance to baseline measurements established during deployment. Performance monitoring systems collect data on key metrics including accuracy rates, approval rates, error types, and response times for each demographic group.
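A continuous fairness calculation of the kind described above might look like the following sketch, which keeps a rolling window of recent decisions per group and reports the gap in approval rates. The class name, the window size, and the recorded decisions are hypothetical. A production system would also need persistence, time-based windows, and handling for very small groups.

```python
from collections import defaultdict, deque

class GroupRateMonitor:
    """Tracks approval rates per group over the most recent decisions."""

    def __init__(self, window):
        # Each group keeps only its last `window` decisions.
        self.decisions = defaultdict(lambda: deque(maxlen=window))

    def record(self, group, approved):
        self.decisions[group].append(int(approved))

    def approval_rates(self):
        return {g: sum(d) / len(d) for g, d in self.decisions.items() if d}

    def parity_gap(self):
        """Gap between the highest and lowest group approval rate."""
        rates = list(self.approval_rates().values())
        if len(rates) < 2:
            return 0.0
        return max(rates) - min(rates)

# Hypothetical stream of decisions.
monitor = GroupRateMonitor(window=4)
for approved in [1, 1, 1, 1]:
    monitor.record("a", approved)
for approved in [1, 0, 0, 0, 1, 1]:
    monitor.record("b", approved)

print(monitor.approval_rates(), monitor.parity_gap())
```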

Early warning systems notify teams immediately when bias indicators appear in system performance. These alert systems use predefined thresholds to trigger notifications when fairness metrics deteriorate beyond acceptable levels. Teams can respond quickly to investigate and address emerging bias before it affects large numbers of people.
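The threshold logic described above can be sketched as a comparison between current fairness metrics and their baselines. The metric names, baseline values, and allowed increases below are hypothetical placeholders. Real thresholds would come from an organization's own policy.

```python
def check_fairness_alerts(current, baseline, max_increase):
    """Return the metrics that worsened by more than their allowed increase.

    All three arguments are dicts keyed by metric name, where a larger
    value means a larger disparity between groups.
    """
    alerts = []
    for name, limit in max_increase.items():
        if current[name] - baseline[name] > limit:
            alerts.append(name)
    return alerts

# Hypothetical values: baseline from initial testing, current from live traffic.
baseline = {"demographic_parity_difference": 0.03, "equalized_odds_difference": 0.05}
current = {"demographic_parity_difference": 0.04, "equalized_odds_difference": 0.12}
max_increase = {"demographic_parity_difference": 0.05, "equalized_odds_difference": 0.05}

alerts = check_fairness_alerts(current, baseline, max_increase)
for name in alerts:
    print(f"ALERT: {name} rose from {baseline[name]} to {current[name]}")
```

In practice the returned list would feed a notification channel so that the responsible team can investigate.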

Scheduled review cycles ensure systematic evaluation of AI system fairness at regular intervals. These reviews complement automated monitoring by providing opportunities for deeper analysis of system performance and consideration of broader contextual factors that automated systems might miss.

Continuous monitoring becomes especially important as organizations scale their AI implementations across various industry applications where changing conditions could introduce new bias patterns.

Building Diverse Development Teams

Building fairer AI systems requires intentional team composition and processes that account for human factors in AI development. Research consistently shows that homogeneous teams overlook bias issues that diverse groups readily identify. When teams lack representation from groups who may be harmed by biased AI systems, they often fail to recognize fairness problems during design, testing, and deployment.

Team diversity helps identify blind spots and prevents bias from entering AI systems by bringing multiple perspectives to problem-solving. Diverse teams catch potential issues that homogeneous groups miss because different life experiences reveal different potential failure modes. Effective diversity extends beyond demographic characteristics to include educational backgrounds, career paths, and domain expertise.

Creating inclusive team cultures where diverse perspectives are genuinely valued matters as much as achieving numerical diversity. Teams with diverse composition but dominant cultures that marginalize minority voices fail to realize bias prevention benefits. Organizations can build inclusive cultures through specific practices like establishing team norms that encourage dissenting opinions and questions about fairness.

Involving affected communities and end users throughout development helps prevent bias by ensuring AI systems address real needs rather than developers’ assumptions. Effective stakeholder engagement starts during problem formulation, when communities can help identify appropriate use cases, define relevant fairness metrics, and establish boundaries that guide development.

Frequently Asked Questions About AI Bias Prevention

What’s the difference between demographic parity and equalized odds in fairness metrics?

Demographic parity measures whether positive outcomes (like loan approvals) occur at equal rates across different groups, for example a 60 percent approval rate for every racial group. Equalized odds requires that both true positive rates and false positive rates are equal across groups: qualified applicants are approved at the same rate in every group, and unqualified applicants are mistakenly approved at the same rate in every group.

How can organizations detect bias in third-party AI systems they purchase?

Organizations can request bias testing documentation from vendors, including performance metrics across demographic groups and information about training data composition. Contract negotiations can include bias-related requirements such as access to model testing results and regular bias audits. Post-deployment monitoring involves tracking system outputs across different user populations and comparing outcomes to internal fairness standards.

What causes AI systems to develop new bias after deployment?

Data drift occurs when the characteristics of incoming data change from what the model learned during training. Concept drift happens when the relationships between inputs and outputs shift over time. User behavior patterns evolve, societal conditions change, and new populations may start using the system, all of which can introduce bias that wasn’t present when the system first launched.

How much accuracy do organizations typically sacrifice to achieve fairness?

There is no single typical figure. Fairness-accuracy trade-offs vary significantly based on the application domain and potential for harm. In applications where discrimination could cause severe harm, organizations may accept substantial accuracy reductions to achieve fairness. Organizations make explicit decisions about acceptable trade-offs based on ethical considerations, legal requirements, and stakeholder input rather than purely technical criteria.