An AI maturity assessment is a systematic evaluation process that measures an organization’s readiness to develop, deploy, and manage artificial intelligence technologies effectively across multiple dimensions including strategy, governance, data, and technology infrastructure.

Many organizations rush into AI implementation without understanding their current capabilities or readiness levels. This approach often leads to failed projects, wasted resources, and unrealized business value. A structured assessment reveals where an organization stands today and identifies specific areas that need improvement before scaling AI initiatives.

The assessment process has evolved significantly as AI adoption has matured across industries. Modern frameworks now emphasize governance, risk management, and ethical considerations alongside technical capabilities. Organizations that conduct thorough assessments before major AI investments tend to achieve better outcomes than those that skip the evaluation phase.

What Is an AI Maturity Assessment

An AI maturity assessment evaluates your organization’s current capabilities across the areas that determine success with artificial intelligence. Think of it as a comprehensive health check for your AI readiness — examining everything from your data quality to your team’s skills to your leadership’s commitment.

The assessment looks at both technical factors (like your data infrastructure and computing power) and organizational factors (like your company culture and change management processes). Most organizations discover they’re somewhere in the middle — they have some AI capabilities but significant gaps that prevent effective scaling.

The National Institute of Standards and Technology (NIST) AI Risk Management Framework provides foundational guidance for incorporating trustworthiness considerations into these assessments. This framework emphasizes that successful AI adoption requires more than just good technology.

Why Organizations Need AI Maturity Assessment

Organizations typically run these assessments before developing comprehensive AI strategies. The evaluation provides baseline data that helps leaders set realistic goals and timelines for AI adoption. Understanding current capabilities helps determine whether to maximize AI ROI through strategic investments or focus on foundational improvements first.

Failed or struggling AI projects often trigger the need for an assessment. When AI initiatives don’t deliver expected results or face repeated roadblocks, an assessment can diagnose the underlying problems. Common issues include poor data quality, inadequate governance, or cultural resistance to change.

Competitive pressure also drives assessment needs. When competitors start gaining advantages through AI, organizations want to understand their own position and identify the fastest path to catch up.

Key Frameworks for AI Maturity Assessment

Several established frameworks guide AI maturity assessments, each with different strengths and focus areas.

The IBM AI Governance Framework addresses seven key dimensions: strategy, governance, data, technology, organization, ethics, and performance measurement. Organizations progress through five phases from initial experimentation to optimized operations with sophisticated governance.

The NIST AI Risk Management Framework is a voluntary framework from the U.S. National Institute of Standards and Technology for managing AI risk. Released in January 2023, it emphasizes trustworthiness throughout AI design, development, and deployment.

The MITRE AI Maturity Model provides six pillars: ethical use, strategy, organization, technology enablers, data, and performance measurement. Each pillar includes structured assessment tools with questionnaires that evaluate maturity across five levels.

Industry-specific frameworks exist for healthcare, financial services, and manufacturing. These specialized approaches address unique regulatory requirements and operational constraints in each sector.

Essential Dimensions to Evaluate

Strategy and Leadership Alignment

Your assessment starts with examining how well AI aligns with your business strategy. This includes whether leadership has clearly defined AI goals, allocated adequate resources, and established governance structures like ethics committees or review boards. Organizations often benefit from hiring a Chief AI Officer to provide strategic direction and oversight.

Strong alignment shows up in several ways:

- Clear AI vision — Leadership can articulate how AI supports business objectives

- Dedicated resources — Budget and staff are specifically allocated to AI initiatives

- Governance structures — Committees or boards oversee AI decisions and ethics

Data Infrastructure and Governance

Data quality directly impacts AI success, making this assessment area critical. The evaluation examines your data governance frameworks, quality management processes, and privacy protection measures.

Key indicators of data readiness include:

- Quality management — Systematic processes ensure data accuracy and completeness

- Integration capabilities — Systems can efficiently combine data from multiple sources

- Privacy controls — Policies and technologies protect sensitive information throughout the AI lifecycle

Technology Platforms and Integration

Your technology infrastructure determines whether you can actually build and deploy AI applications at scale. The assessment examines computing resources, software platforms, and integration capabilities.

Technology maturity indicators include:

- Scalable infrastructure — Systems can handle increased AI workloads

- Development platforms — Teams have access to machine learning tools and frameworks

- Integration readiness — AI applications can connect with existing business systems

Organizational Culture and Workforce

Culture often determines whether technical capabilities translate into business results. The assessment examines AI literacy levels, change management capabilities, and employee attitudes toward AI adoption. Understanding how AI transforms work helps organizations prepare their workforce for technological changes.

Cultural readiness appears through:

- Leadership modeling — Executives demonstrate data-driven decision making

- Employee engagement — Staff willingly participate in AI-enabled processes

- Training programs — Established education builds AI-related skills across the organization

How to Prepare for Your Assessment

Define Clear Objectives and Scope

Start by establishing what you want to achieve with the assessment. Common objectives include strategic planning, resource allocation, risk management, or performance benchmarking. Your objectives influence both the methodology and scope of the evaluation.

Assessment scope determines which parts of your organization the evaluation examines. Some focus on specific departments, while others evaluate enterprise-wide capabilities. The scope depends on your organization’s size, current AI usage, and available resources.

Consider starting with pilot areas or specific use cases rather than trying to assess everything at once. This approach provides manageable scope while generating actionable insights.

Assemble Your Assessment Team

Effective assessments combine internal knowledge with external perspective. Your team composition influences both the quality of insights and organizational acceptance of recommendations.

Internal champions provide organizational knowledge and help build support for the process. Include representatives from:

- Executive leadership for strategic perspective

- IT teams for technical infrastructure insights

- Business units for operational requirements

- Legal and compliance for regulatory considerations

External expertise brings objectivity and specialized experience. Third-party facilitators can identify blind spots that internal teams might miss and provide credibility when presenting sensitive findings.

Gather Documentation and Data

Documentation collection creates the foundation for accurate assessment. Gather existing materials that support evaluation activities:

- Current AI strategy documents and project descriptions

- Technology architecture diagrams and data governance policies

- Existing AI project performance data and lessons learned

- Organizational charts showing AI-related roles and responsibilities

Previous assessment results from IT audits or digital transformation studies offer baseline information and trend data. Missing or incomplete information leaves gaps in the evaluation and reduces confidence in the results.

Step-by-Step Assessment Process

1. Conduct Stakeholder Interviews

Begin by engaging representatives from all organizational levels that interact with or may be affected by AI. Design structured interview guides that explore each participant’s understanding of AI strategy, experience with current implementations, and perceived barriers to adoption.

Include diverse perspectives:

- Executives responsible for AI strategy

- IT teams managing technical infrastructure

- Data scientists working with AI tools

- Business leaders implementing AI solutions

- End users who interact with AI systems

Document responses systematically to identify common themes, conflicting perspectives, and gaps between different stakeholder groups.
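
One way to make "gaps between different stakeholder groups" concrete is to ask each interviewee to rate a few statements on a 1-5 scale and then compare group averages. The sketch below is only an illustration: the group names, topics, ratings, and the 1.5-point threshold are all assumptions, not part of any named framework.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical interview ratings: (stakeholder group, topic, rating on a 1-5 scale).
responses = [
    ("executives", "ai_strategy_is_clear", 5),
    ("executives", "ai_strategy_is_clear", 4),
    ("end_users", "ai_strategy_is_clear", 2),
    ("end_users", "ai_strategy_is_clear", 1),
    ("executives", "data_is_trustworthy", 3),
    ("end_users", "data_is_trustworthy", 3),
]

def perception_gaps(rows, threshold=1.5):
    """Return topics whose per-group average ratings differ by at least `threshold`."""
    by_topic = defaultdict(lambda: defaultdict(list))
    for group, topic, rating in rows:
        by_topic[topic][group].append(rating)

    gaps = {}
    for topic, groups in by_topic.items():
        averages = {group: mean(values) for group, values in groups.items()}
        spread = max(averages.values()) - min(averages.values())
        if spread >= threshold:
            gaps[topic] = averages
    return gaps

gaps = perception_gaps(responses)
for topic, averages in gaps.items():
    print(topic, averages)
```

In this made-up data, executives and end users disagree sharply about whether the AI strategy is clear but agree about data trustworthiness, so only the first topic is flagged for follow-up.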

2. Evaluate Technical Infrastructure

Examine your technology foundation across computing resources, software platforms, and integration capabilities. Review cloud computing resources, development tools, and deployment platforms to assess capacity for AI at scale.

Key areas to evaluate:

- Processing power and storage capacity for AI workloads

- Machine learning development tools and frameworks

- Integration between AI systems and business applications

- Security controls protecting AI systems and data

Document current performance metrics and identify bottlenecks that may constrain AI implementation.

3. Review Data Quality and Governance

Analyze data sources across your organization to understand availability, quality, and accessibility of information that AI systems require. Examine data collection processes, storage systems, and governance policies.

Test data quality by sampling datasets for completeness, accuracy, consistency, and timeliness. Assess your organization’s ability to prepare and maintain high-quality datasets for AI model training and operations.
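
As a rough illustration of sampling a dataset, the sketch below computes three simple ratios on a handful of records: completeness (required fields present), key uniqueness (a stand-in for one kind of consistency check), and timeliness (recently updated). The field names, sample records, and the 365-day freshness window are assumptions. Accuracy is left out because it needs a trusted reference source to compare against.

```python
from datetime import date

# Hypothetical sample of customer records; None marks a missing value.
sample = [
    {"id": 1, "email": "a@example.com", "updated": date(2024, 5, 1)},
    {"id": 2, "email": None, "updated": date(2024, 4, 20)},
    {"id": 2, "email": "b@example.com", "updated": date(2022, 1, 15)},
    {"id": 4, "email": "c@example.com", "updated": date(2024, 5, 3)},
]

def quality_report(rows, key, required, date_field, as_of, max_age_days=365):
    """Return simple 0-1 quality ratios for a non-empty list of record dicts."""
    if not rows:
        raise ValueError("sample must contain at least one record")
    total = len(rows)
    # Completeness: share of records where every required field has a value.
    complete = sum(all(r[f] is not None for f in required) for r in rows)
    # Key uniqueness: distinct key values divided by the number of records.
    unique_keys = len({r[key] for r in rows})
    # Timeliness: share of records updated within the allowed age window.
    fresh = sum((as_of - r[date_field]).days <= max_age_days for r in rows)
    return {
        "completeness": complete / total,
        "key_uniqueness": unique_keys / total,
        "timeliness": fresh / total,
    }

report = quality_report(
    sample, key="id", required=["email"], date_field="updated", as_of=date(2024, 6, 1)
)
print(report)
```

Ratios like these are easy to track over time, which makes them usable as evidence when scoring the data dimension later in the process.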

4. Score and Analyze Results

Apply scoring methodologies from established frameworks to rate capabilities across each assessment dimension. Assign numerical scores based on evidence collected during interviews, technical evaluations, and data reviews.

Calculate maturity scores for strategic alignment, governance practices, technical infrastructure, data readiness, and organizational culture. Identify patterns that reveal organizational strengths and priority improvement areas.
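
The scoring step can be sketched as follows. This is a minimal illustration, not the scoring method of any specific framework: the ratings are invented, each dimension is weighted equally, and the rule that maps a score to a level (truncate to a whole number between 1 and 5) is an assumption. The level names follow the five levels described later in this article.

```python
# Hypothetical evidence-based ratings (1 = initial, 5 = optimized) per dimension.
ratings = {
    "strategic_alignment": [3, 4, 3],
    "governance": [2, 2, 3],
    "technical_infrastructure": [4, 4, 3],
    "data_readiness": [2, 2, 2],
    "organizational_culture": [3, 3, 2],
}

LEVELS = ["Initial", "Developing", "Defined", "Managed", "Optimized"]

def dimension_scores(ratings):
    """Average the ratings for each dimension, rounded to two decimals."""
    return {dim: round(sum(vals) / len(vals), 2) for dim, vals in ratings.items()}

def level_for(score):
    """Map a 1-5 score to a level name by truncating and clamping (an assumed rule)."""
    index = min(max(int(score), 1), 5) - 1
    return LEVELS[index]

scores = dimension_scores(ratings)
overall = round(sum(scores.values()) / len(scores), 2)
weakest = min(scores, key=scores.get)

for dim, score in scores.items():
    print(f"{dim}: {score} ({level_for(score)})")
print(f"overall: {overall} ({level_for(overall)}), weakest dimension: {weakest}")
```

Truncating rather than rounding is a deliberately conservative choice here: a dimension only counts as having reached a level once its average score meets that level's number in full.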

5. Compile Results and Recommendations

Create clear documentation that supports decision making and improvement planning. Your assessment report should include:

- Current maturity level ratings across evaluated dimensions

- Specific capability gaps identified through analysis

- Prioritized recommendations for improvement initiatives

- Resource requirements and timeline estimates for each recommendation

Prepare different report formats for different stakeholders — executive summaries for leadership and detailed technical findings for implementation teams.

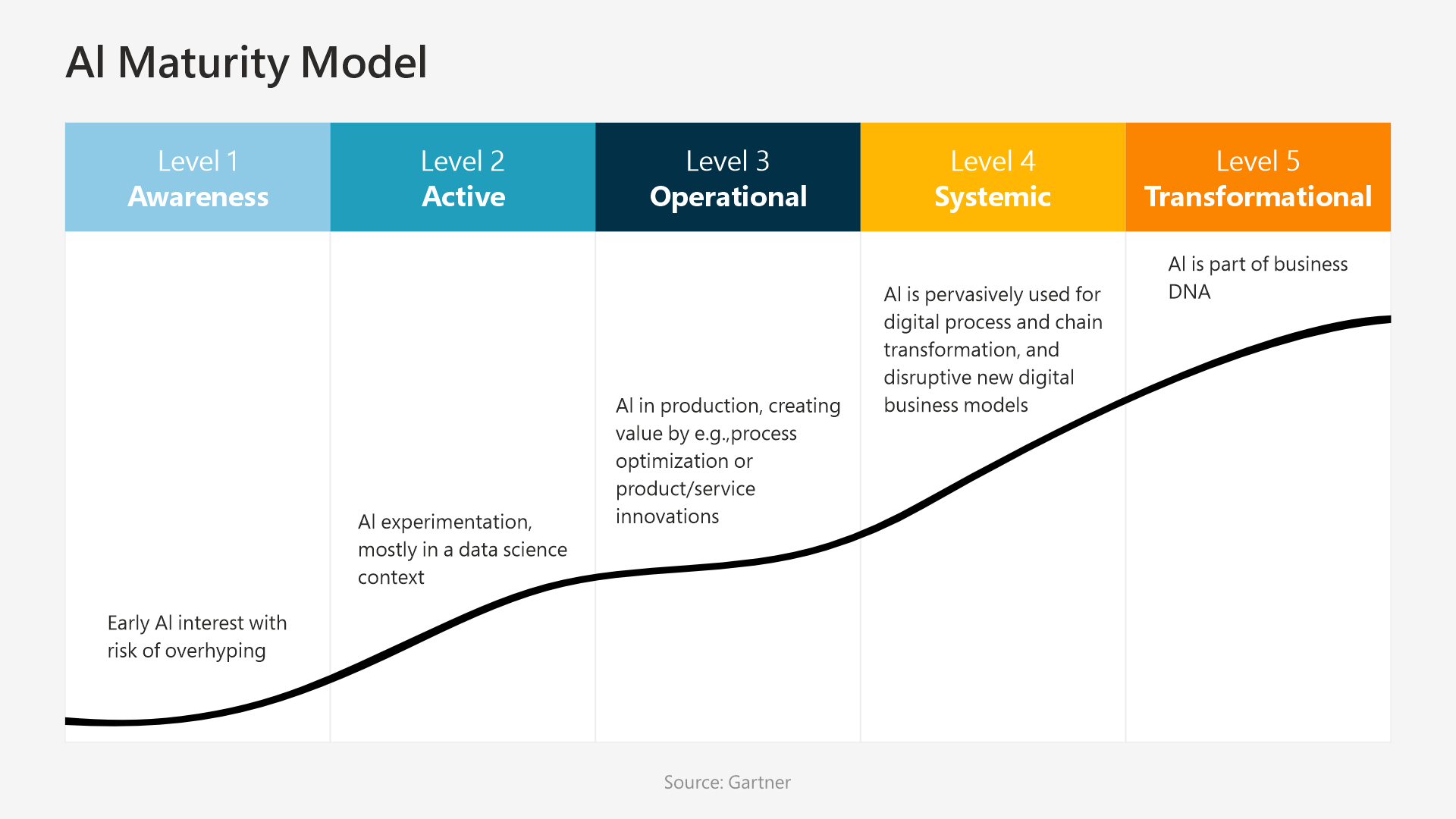

AI Maturity Levels Explained

Initial Level — Starting AI Exploration

Organizations at the initial level conduct isolated AI experiments without comprehensive strategy or governance. Individual departments might explore AI tools independently, but there’s no central coordination or oversight.

These organizations lack formal AI governance structures. Data management remains inconsistent across departments, with different teams maintaining separate data sources and quality standards.

Developing Level — Building Foundations

Organizations at the developing level begin establishing AI strategies and governance structures. Leadership creates formal AI committees to coordinate initiatives and evaluate potential use cases.

Basic governance frameworks emerge to guide AI experimentation. Organizations develop initial policies for AI ethics and data usage, though implementation remains limited in scope.

Defined Level — Implementing Systematic Processes

Organizations at the defined level implement formal AI processes with documented procedures. Comprehensive governance frameworks guide decision-making across all AI initiatives.

Standardized development methodologies ensure consistent approaches to AI implementation. Clear roles and responsibilities exist for AI governance, development, and maintenance activities.

Managed Level — Measuring Performance

Organizations at the managed level demonstrate measurable AI outcomes through established performance metrics and monitoring systems. AI applications integrate seamlessly with existing business workflows.

Comprehensive measurement frameworks track both technical performance and business value creation. Regular performance reviews optimize AI applications and identify improvement opportunities.

Optimized Level — Continuous Innovation

Organizations at the optimized level maintain continuous improvement cultures that drive ongoing advancement in AI capabilities. AI becomes integral to organizational strategy and operations across all business functions.

Advanced capabilities include sophisticated machine learning operations platforms and automated model development pipelines. These organizations often contribute to industry standards and share expertise with others.

Turning Assessment Results Into Action

Prioritize Improvement Initiatives

Use an impact versus effort matrix to rank improvement recommendations. This framework evaluates each recommendation based on expected business value and implementation complexity.

Quick wins occupy the high-impact, low-effort category. These might include creating AI ethics guidelines, establishing data quality metrics, or implementing basic model performance tracking.

Foundational capabilities represent high-impact initiatives requiring moderate to significant effort. Examples include implementing comprehensive data governance frameworks or establishing machine learning operations platforms.
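
A small sketch of the matrix, assuming each recommendation has been scored from 1 to 5 for impact and effort and that 3 is the cutoff between low and high. The recommendations, scores, and cutoff are illustrative. The labels "fill-in" and "deprioritize" for the two low-impact quadrants are assumptions, since only quick wins and foundational capabilities are named above.

```python
# Hypothetical recommendations: (name, business impact 1-5, implementation effort 1-5).
recommendations = [
    ("Publish AI ethics guidelines", 4, 2),
    ("Define data quality metrics", 4, 1),
    ("Enterprise data governance framework", 5, 4),
    ("Rebrand internal AI newsletter", 1, 1),
    ("Custom in-house foundation model", 2, 5),
]

def quadrant(impact, effort, cutoff=3):
    """Classify a recommendation into one of four impact/effort quadrants."""
    high_impact = impact >= cutoff
    low_effort = effort < cutoff
    if high_impact and low_effort:
        return "quick win"
    if high_impact:
        return "foundational capability"
    if low_effort:
        return "fill-in"
    return "deprioritize"

by_quadrant = {}
for name, impact, effort in recommendations:
    by_quadrant.setdefault(quadrant(impact, effort), []).append(name)

for label, names in by_quadrant.items():
    print(f"{label}: {names}")
```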

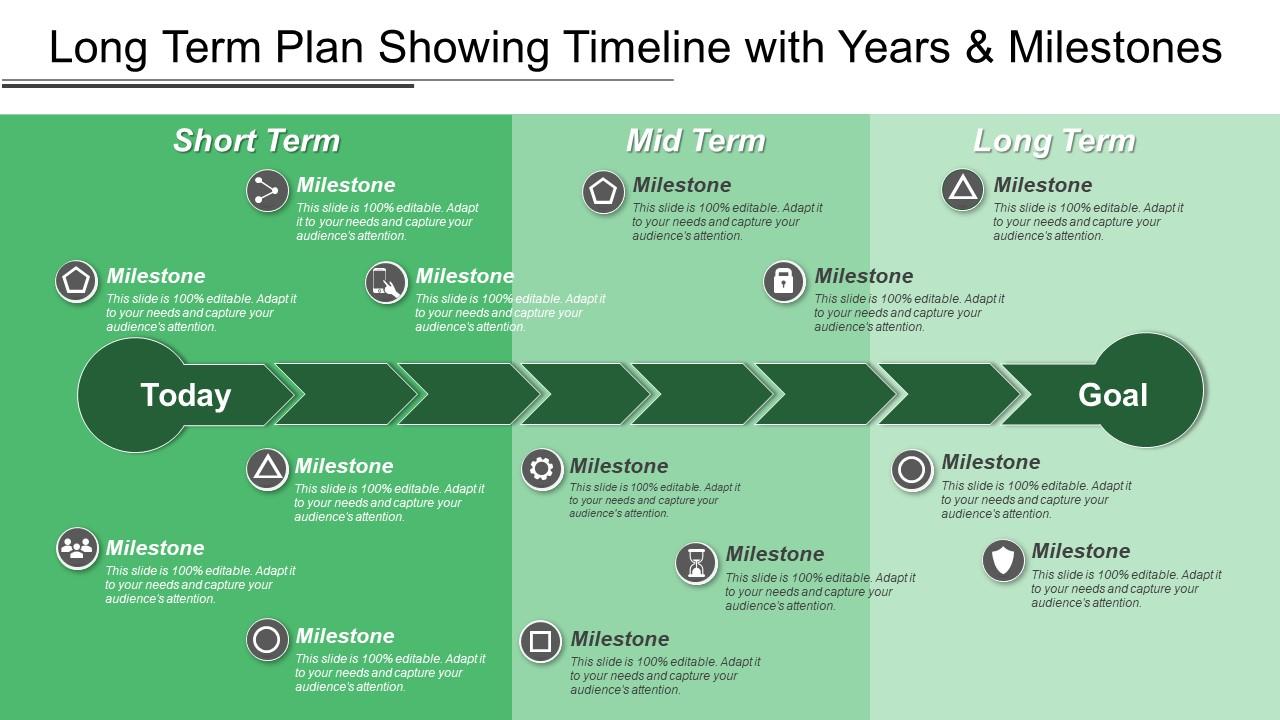

Create Implementation Roadmaps

Translate prioritized improvements into detailed project plans with specific timelines and resource requirements. Account for organizational change capacity and available resources while maintaining realistic expectations. Consider how AI change management affects implementation timelines and success factors.

Short-term goals (3-6 months) address immediate gaps through quick wins and foundational improvements. Medium-term objectives (6-18 months) build core capabilities and infrastructure. Long-term vision (18-36 months) encompasses advanced maturity achievements.
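
The three horizons can be applied mechanically once each initiative has an estimated completion time. The sketch below is illustrative only: the initiatives and estimates are invented, and because the ranges above share the 6-month and 18-month boundaries, it assumes a boundary value belongs to the earlier horizon.

```python
def horizon(months_to_complete):
    """Assign an initiative to a roadmap horizon by its estimated completion time."""
    if months_to_complete <= 6:
        return "short-term"
    if months_to_complete <= 18:
        return "medium-term"
    if months_to_complete <= 36:
        return "long-term"
    return "beyond roadmap"

# Hypothetical initiatives with estimated months to complete.
initiatives = {
    "Define data quality metrics": 3,
    "Stand up a model monitoring process": 12,
    "Enterprise-wide MLOps platform": 24,
}

roadmap = {name: horizon(months) for name, months in initiatives.items()}
for name, bucket in roadmap.items():
    print(f"{bucket}: {name}")
```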

Plan Resources and Budget

Resource planning requires comprehensive cost estimation across technology, training, personnel, and external support categories. Technology costs typically represent 40-60 percent of AI maturity improvement budgets.

Training and development costs include formal education programs and ongoing skill development. Personnel costs include existing employee time allocation and new hiring requirements for specialized AI positions.
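
To see what the 40-60 percent technology share implies for the other categories, the arithmetic can be worked through for a sample budget. The total below is a made-up figure, and the calculation simply treats everything outside technology as the amount left for training, personnel, and external support combined.

```python
def technology_budget_range(total_budget, low_pct=40, high_pct=60):
    """Return the (low, high) technology spend implied by a percentage range."""
    low = total_budget * low_pct // 100
    high = total_budget * high_pct // 100
    return low, high

total = 500_000  # hypothetical improvement budget, in any currency
tech_low, tech_high = technology_budget_range(total)

# Whatever is not spent on technology remains for the other three categories.
remaining_low = total - tech_high
remaining_high = total - tech_low

print(f"technology: {tech_low:,} to {tech_high:,}")
print(f"training, personnel, external support: {remaining_low:,} to {remaining_high:,}")
```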

Common Assessment Pitfalls to Avoid

Insufficient Stakeholder Engagement

Organizations often limit assessment participation to technical teams while excluding business stakeholders who understand operational requirements. Comprehensive assessment requires perspectives from leadership, business units, technical teams, and support functions.

Create safe environments for feedback and guarantee anonymity where appropriate. Frame the assessment as an improvement exercise rather than a performance evaluation to encourage honest participation.

Overemphasis on Technical Capabilities

Technical infrastructure and data capabilities represent necessary but insufficient conditions for AI success. Cultural readiness, change management capabilities, and workforce development often determine whether technical capabilities translate into business value.

Balance technical evaluation with organizational and cultural assessment. Include representatives from all affected groups to ensure comprehensive perspective.

Poor Action Planning

Effective action plans require prioritization based on business impact and implementation feasibility. Implementation success depends on realistic resource allocation, clear accountability assignment, and regular progress monitoring.

Focus on foundational improvements that enable multiple AI applications rather than pursuing advanced capabilities without adequate prerequisites.

Building Long-Term AI Excellence

Regular assessment cycles support ongoing AI maturity development rather than one-time evaluation. Many organizations establish quarterly or twice-yearly review cycles, depending on their AI implementation pace and strategic planning requirements.

Assessment methodology refinement improves evaluation accuracy as organizational capabilities mature and industry best practices evolve. Regular methodology review ensures assessment processes remain aligned with organizational needs.

Industry benchmarking enables ongoing comparison with peers and identification of emerging best practices. External benchmarking helps validate internal assessment results and improvement planning decisions.

Frequently Asked Questions

How long does a comprehensive AI maturity assessment take?

Most assessments take four to eight weeks, depending on organization size and complexity. Smaller organizations with fewer AI initiatives often finish in four to six weeks, while large enterprises may need eight weeks or longer. The timeline includes stakeholder interviews, data collection, technical evaluations, and analysis across multiple capability dimensions.

What does an AI maturity assessment typically cost?

Costs vary based on scope and methodology. Internal assessments primarily involve staff time costs, typically requiring 200-400 hours across multiple departments. External consulting engagements range from mid-five figures for basic assessments to six figures for comprehensive enterprise evaluations.

How often should we repeat AI maturity assessments?

Annual assessments help track progress effectively. Organizations in rapid AI development phases may benefit from quarterly progress reviews focusing on specific maturity dimensions. Companies experiencing significant changes like mergers or leadership transitions may require additional assessments to evaluate how these changes affect AI readiness.

Can small organizations benefit from formal AI maturity assessments?

Small organizations can use simplified frameworks focusing on core capabilities rather than comprehensive enterprise models. Streamlined assessments concentrate on essential dimensions like data quality, basic governance structures, and technical infrastructure readiness without requiring extensive organizational complexity analysis.

What if our assessment reveals we’re not ready for AI implementation?

Focus on foundational improvements in data quality, governance, and organizational readiness before pursuing AI initiatives. Assessment results identifying readiness gaps provide clear roadmaps for capability development in priority areas like data management, workforce training, or technology infrastructure.

How do we ensure honest participation during the assessment?

Create safe environments for feedback, guarantee anonymity where appropriate, and frame the assessment as an improvement exercise rather than a performance evaluation. Anonymous survey tools and confidential interview processes encourage candid responses about organizational challenges and capability gaps.