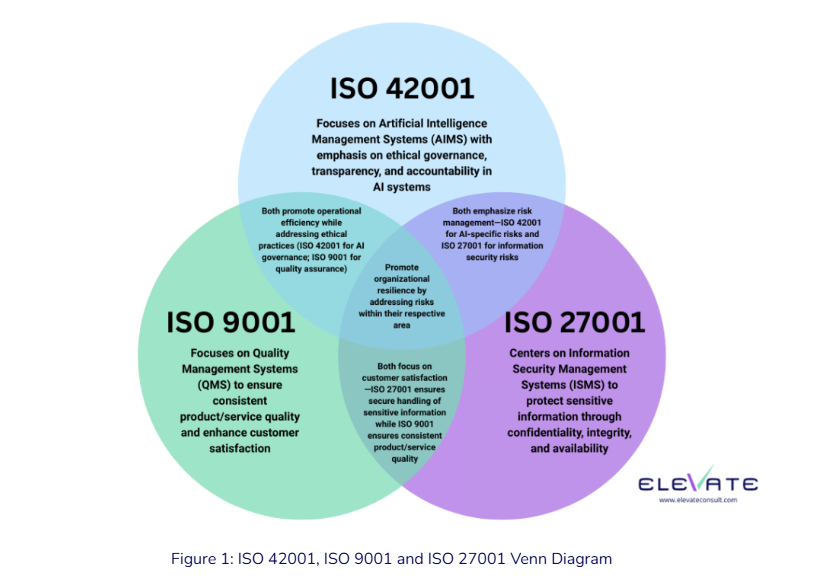

ISO 27001 and ISO 42001 are international management system standards that address different but overlapping risk domains: information security and artificial intelligence governance.

The emergence of AI systems has created new risk categories that traditional cybersecurity frameworks can’t adequately address. While ISO 27001 focuses on protecting data confidentiality, integrity, and availability, ISO 42001 tackles novel challenges like algorithmic bias, ethical AI deployment, and automated decision-making oversight.

Organizations implementing AI systems often frame this as a choice between established information security practices and emerging AI governance requirements. Many discover that ISO 27001’s proven controls do not cover AI-specific risks such as model transparency, bias mitigation, and societal impact assessment.

The risk landscapes of these two standards reveal fundamental differences in scope, methodology, and organizational impact that directly influence implementation strategies and compliance costs. Understanding these distinctions helps organizations develop comprehensive governance frameworks that address both traditional cybersecurity threats and emerging AI-related risks.

What Are the Critical Risk Differences Between ISO 27001 and ISO 42001?

ISO 27001 addresses information security risks through the CIA triad: confidentiality, integrity, and availability of data assets. The standard focuses on protecting organizational information from unauthorized access, data corruption, and system downtime through established security controls. These risks follow predictable patterns with well-understood attack vectors and proven mitigation strategies.

ISO 42001 introduces AI-specific risk categories that extend beyond traditional cybersecurity boundaries. The standard addresses algorithmic bias, model transparency, over-reliance on automation, and ethical considerations that can affect individuals and communities. According to IBM’s research, 80 percent of business leaders see AI explainability, ethics, bias, or trust as a major roadblock to generative AI adoption.

AI risks manifest through interconnected pathways that can create cascading failures across organizational and societal boundaries. A data privacy violation in an AI system may simultaneously trigger regulatory compliance issues, create bias in model outputs, and undermine public trust. Traditional information security frameworks lack mechanisms to address these complex, multi-dimensional risk scenarios.

The temporal nature of AI risks differs significantly from conventional cybersecurity threats. AI models evolve through continued learning, data drift alters system behavior over time, and emergent properties create unforeseen risk scenarios. ISO 27001 risks typically remain stable until external factors change, while AI risks require continuous monitoring and adaptive management strategies.
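One way to make the continuous monitoring described above concrete is a drift check on model inputs. The sketch below computes a Population Stability Index (PSI) between a baseline sample and a current sample of a single numeric feature. The function name, the bin count, and the smoothing constant are illustrative assumptions; neither standard prescribes this metric or any threshold for it.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,      # assumed bucket count, tune per feature
    eps: float = 1e-6,   # assumed floor so empty buckets do not cause log(0)
) -> float:
    """PSI between a baseline sample and a current sample of one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    # Equal-width buckets over the baseline range; fall back to 1.0 if the
    # baseline is constant so the width is never zero.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge buckets.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    expected = bucket_shares(baseline)
    actual = bucket_shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

A common rule of thumb treats a PSI above roughly 0.25 as a significant shift worth investigating, but that cutoff is an industry convention rather than a requirement of ISO 42001, and each organization would set its own review triggers.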

ISO 27001 Risk Management Framework and Security Coverage

ISO 27001 operates on a risk-based approach that systematically identifies, assesses, and treats information security risks through 93 controls organized into four categories: organizational, people, physical, and technological. Organizations establish risk criteria, conduct asset inventories, and implement appropriate controls based on their specific threat environment and business objectives.

The standard’s risk assessment methodology relies on established likelihood and impact evaluation criteria supported by historical data and industry benchmarks. Organizations can leverage decades of cybersecurity incident data, mature threat intelligence sources, and proven vulnerability assessment methodologies to quantify risks using either qualitative or quantitative approaches.

Risk treatment within ISO 27001 follows four primary strategies:

- Risk modification — implementing security controls to reduce likelihood or impact

- Risk retention — accepting certain risks within organizational tolerance

- Risk avoidance — eliminating activities that generate unacceptable risks

- Risk sharing — transferring risks through insurance or outsourcing arrangements
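The four treatment strategies above can be sketched as a small risk register. This is a minimal illustration, assuming a 5x5 likelihood and impact scale and two hypothetical thresholds (`tolerance` and `ceiling`); ISO 27001 leaves the scale, the criteria, and the final decision to the organization, so none of these numbers come from the standard.

```python
from dataclasses import dataclass
from enum import Enum


class Treatment(Enum):
    MODIFY = "modification"
    RETAIN = "retention"
    AVOID = "avoidance"
    SHARE = "sharing"


@dataclass
class Risk:
    name: str
    likelihood: int             # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int                 # assumed scale: 1 (negligible) to 5 (severe)
    transferable: bool = False  # could insurance or a supplier carry it?

    @property
    def score(self) -> int:
        # Simple qualitative score: likelihood multiplied by impact.
        return self.likelihood * self.impact


def propose_treatment(risk: Risk, tolerance: int = 6, ceiling: int = 20) -> Treatment:
    """Suggest a treatment option; thresholds are hypothetical risk criteria."""
    if risk.score <= tolerance:
        return Treatment.RETAIN   # within tolerance: accept the risk
    if risk.score >= ceiling:
        return Treatment.AVOID    # unacceptable: stop the activity
    if risk.transferable:
        return Treatment.SHARE    # transfer through insurance or outsourcing
    return Treatment.MODIFY       # otherwise reduce it with controls


if __name__ == "__main__":
    register = [
        Risk("Unpatched internal wiki", likelihood=2, impact=2),
        Risk("Ransomware on file servers", likelihood=3, impact=4, transferable=True),
        Risk("Phishing of finance staff", likelihood=4, impact=3),
    ]
    for r in register:
        print(f"{r.name}: score={r.score}, proposed={propose_treatment(r).value}")
```

In practice the proposed option is only a starting point: the risk owner approves the decision, and the outcome is recorded in the risk treatment plan and the Statement of Applicability.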

The framework requires continuous monitoring and regular review of risk assessments to account for evolving threat landscapes and organizational changes. Organizations establish systematic processes for evaluating control effectiveness, conducting periodic risk assessments, and updating their Statement of Applicability to reflect current risk treatment decisions.

ISO 42001 AI Risk Categories and Emerging Threat Landscape

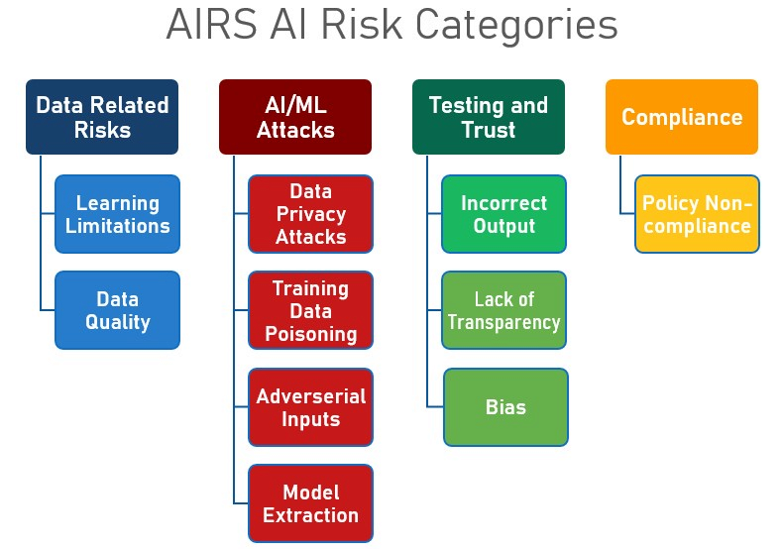

ISO 42001 addresses AI-specific risks through 38 Annex A controls organized into nine control objectives covering AI system lifecycle management, data governance, model transparency, bias mitigation, and human oversight. The standard requires both AI risk assessments focusing on technical and operational risks to organizations and AI impact assessments evaluating broader consequences for individuals and society.

Data privacy violations represent a critical risk category where AI systems process vast quantities of sensitive personal and proprietary information for model training and inference. These risks manifest through unlawful data scraping for AI model training, weak consent mechanisms in data collection processes, and exposure of personally identifiable information during AI system operations.

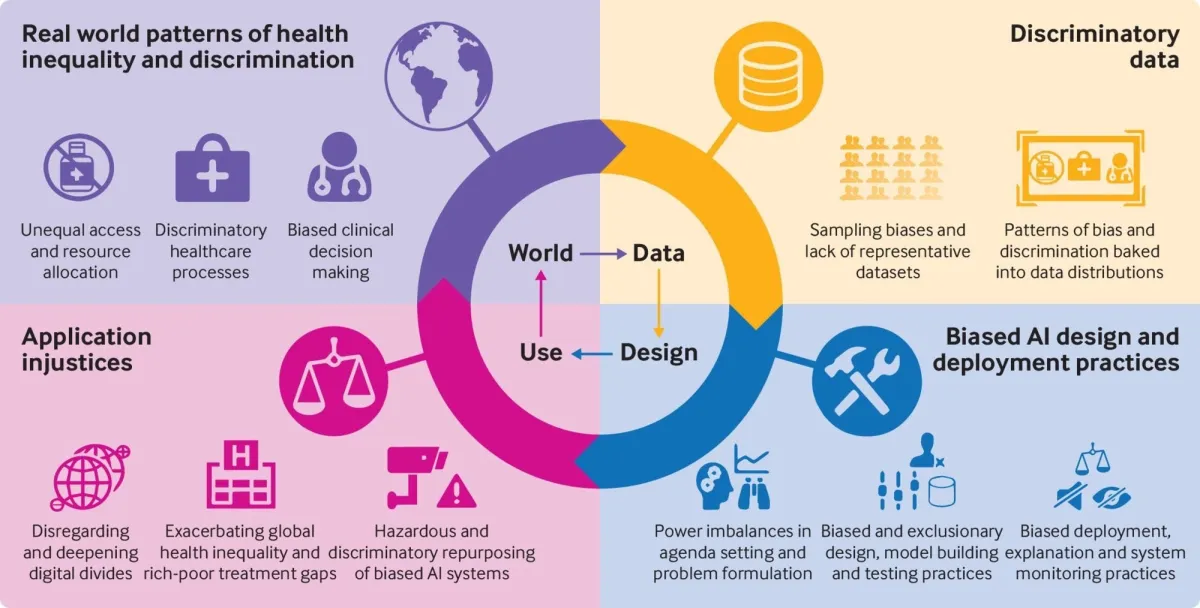

Algorithmic bias and discrimination risks emerge when AI systems reflect and amplify biases present in training data or design assumptions. These risks can lead to discriminatory outcomes affecting hiring decisions, lending practices, healthcare delivery, or law enforcement activities with implications that extend beyond organizational boundaries to affect entire communities.
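A simple quantitative check can make the bias risk described above measurable. The sketch below computes per-group selection rates and a disparate impact ratio from a list of `(group, approved)` decisions. The function names and the input shape are assumptions made for illustration; ISO 42001 does not mandate this or any other specific fairness metric.

```python
from collections import defaultdict
from typing import Iterable


def selection_rates(decisions: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Share of favorable outcomes per group, from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    favorable: dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        favorable[group] += int(approved)
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions: Iterable[tuple[str, bool]]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions)
    if not rates:
        raise ValueError("no decisions supplied")
    highest = max(rates.values())
    # If no group received a favorable outcome, the rates are equal by definition.
    return min(rates.values()) / highest if highest else 1.0


if __name__ == "__main__":
    # Hypothetical outcomes for two applicant groups.
    sample = (
        [("group_a", True)] * 8 + [("group_a", False)] * 2
        + [("group_b", True)] * 4 + [("group_b", False)] * 6
    )
    print(selection_rates(sample))
    print(disparate_impact_ratio(sample))
```

A ratio below 0.8 is often flagged for review under the "four-fifths" heuristic used in US employment selection guidance. That threshold is cited here only as an example; a single ratio cannot establish or rule out discrimination, and a fuller assessment would look at several metrics alongside the context of the decision.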

Over-reliance on automation creates risks when organizations remove or weaken human oversight of critical decision-making processes. AI models may miss emerging threats because of outdated training data, while security teams grow complacent on the assumption that AI coverage is comprehensive.

Critical AI Risks ISO 27001 Cannot Address

ISO 27001’s control framework lacks mechanisms to evaluate and mitigate algorithmic bias in AI decision-making systems. The standard’s focus on technical security controls can’t address discriminatory outcomes that result from biased training data or flawed model assumptions. Organizations relying solely on ISO 27001 may implement technically secure AI systems that still produce unfair or discriminatory results.

Ethical considerations in AI deployment fall outside ISO 27001’s scope, which concentrates on protecting information assets rather than evaluating societal impact. The standard provides no guidance for assessing whether AI applications align with organizational values or societal expectations.

Transparency and explainability requirements for AI systems represent gaps in traditional information security frameworks. ISO 27001 controls focus on protecting system integrity and availability but don’t address the need for AI decision-making processes to be understandable and accountable. Regulatory frameworks increasingly require organizations to explain how AI systems reach specific decisions, particularly in high-stakes applications.

Stakeholder engagement and public trust considerations extend beyond ISO 27001’s organizational focus. AI systems often affect external stakeholders who have no direct relationship with the implementing organization, creating governance responsibilities that information security frameworks don’t address.

When Your Organization Needs Both Standards for Complete Risk Coverage

Organizations deploying AI systems within information technology environments require both standards to address overlapping but distinct risk domains. ISO 27001 provides essential protection for the underlying infrastructure, data storage systems, and network security that support AI applications. ISO 42001 addresses AI-specific governance requirements including model management, bias mitigation, and ethical oversight that extend beyond traditional cybersecurity controls.

Financial services organizations exemplify the need for dual implementation where AI systems process sensitive customer data for credit decisions, fraud detection, or investment recommendations. ISO 27001 controls protect customer financial information from unauthorized access and data breaches. ISO 42001 controls ensure AI lending algorithms don’t discriminate against protected demographic groups and maintain transparency in decision-making processes required by fair lending regulations.

Healthcare organizations implementing AI diagnostic systems face similar dual requirements where patient data protection and AI system reliability both represent critical risk areas. ISO 27001 safeguards protected health information through access controls, encryption, and incident response procedures. ISO 42001 ensures AI diagnostic tools maintain accuracy across diverse patient populations and provide explainable results that support clinical decision-making.

Implementation Risks and Strategic Cost Considerations

ISO 27001 implementation benefits from mature methodologies, extensive consultant expertise, and predictable certification processes developed through decades of deployment across diverse industries. Organizations can access competitive markets for implementation services with established market rates, clear audit fee structures, and proven approaches for estimating total cost of ownership.

ISO 42001 implementation faces challenges associated with an emerging standard where consultant expertise remains limited and audit approaches continue evolving. The scarcity of qualified ISO 42001 consultants and auditors creates potential implementation bottlenecks and may increase costs as organizations compete for limited expertise.

Resource allocation challenges differ significantly between the standards in terms of organizational capabilities required for successful implementation:

- ISO 27001 — assumes basic organizational capabilities in information security, risk management, and IT governance

- ISO 42001 — requires specialized capabilities in AI governance, ethical assessment, bias detection, and AI-specific regulatory compliance

Timeline considerations for dual implementation present strategic challenges for organizations seeking both certifications simultaneously. Integrated management systems can provide operational efficiencies and aligned audit cycles, but implementation complexity may extend overall certification timelines and strain organizational resources. Small businesses in particular face resource constraints when managing complex compliance requirements.

Building a Strategic Risk Management Framework for AI-Driven Organizations

Organizations developing comprehensive governance strategies can align ISO 27001 and ISO 42001 implementation with broader risk management frameworks such as the NIST AI Risk Management Framework. The NIST framework’s four fundamental functions of Govern, Map, Measure, and Manage provide complementary capabilities to ISO standards while addressing different organizational needs and regulatory contexts.

Integration with existing quality management standards such as ISO 9001 provides opportunities for operational efficiency and comprehensive organizational governance. Organizations can leverage quality management principles and processes to support AI governance requirements while maintaining integrated management systems that avoid duplication of effort.

Regulatory alignment represents a critical consideration as governments worldwide implement AI-specific regulations and oversight requirements. The European Union’s AI Act, the voluntary NIST AI Risk Management Framework, and emerging national AI strategies create overlapping expectations that organizations can address through coordinated approaches.

Advanced governance, risk, and compliance platforms enable organizations to manage multiple framework requirements through unified systems and processes. These technological solutions support simultaneous implementation of ISO 27001, ISO 42001, and NIST AI RMF requirements through integrated risk assessment tools, automated compliance monitoring, and comprehensive reporting capabilities.

Frequently Asked Questions About ISO 27001 vs ISO 42001 Risk Management

Can organizations implement ISO 42001 without first achieving ISO 27001 certification?

Organizations can pursue ISO 42001 certification independently, though existing ISO 27001 capabilities provide a valuable foundation for AI governance implementation. The shared management system structure and risk-based approach offer operational efficiencies when implementing both standards sequentially or simultaneously.

How do surveillance audit requirements differ between ISO 27001 and ISO 42001?

ISO 27001 surveillance audits follow established patterns with predictable scope, timing, and cost structures that organizations can plan for effectively. ISO 42001 surveillance audits may be more variable as auditing practices continue to evolve, potentially requiring more comprehensive ongoing documentation of AI system changes and risk assessment updates.

What organizational roles are typically responsible for implementing each standard?

ISO 27001 implementation typically involves IT security teams, risk management functions, and compliance departments working within established organizational structures. ISO 42001 implementation requires coordination between technical teams, legal departments, ethics committees, and business stakeholders in ways that may necessitate new governance structures and cross-functional collaboration approaches.

How do ISO 27001 and ISO 42001 address data governance differently?

ISO 27001 focuses on protecting data confidentiality, integrity, and availability through technical and administrative security controls. ISO 42001 addresses data governance specifically for AI systems, including data quality requirements, bias detection in datasets, and ethical considerations for data collection and use in AI model training and inference.

What happens when AI systems create security incidents that affect both standards?

Security incidents involving AI systems may trigger response procedures under both standards simultaneously. Organizations should implement coordinated incident response processes that address traditional cybersecurity concerns through ISO 27001 procedures while evaluating AI-specific impacts, such as bias amplification or ethical violations, through ISO 42001 processes.

Conclusion

The comparative analysis of ISO 27001 and ISO 42001 reveals two distinct yet complementary approaches to managing modern organizational risks. ISO 27001 provides the foundational security framework that protects information assets through time-tested methodologies, while ISO 42001 addresses the unprecedented challenges of artificial intelligence governance that extend far beyond traditional cybersecurity concerns.

Organizations operating in today’s AI-driven landscape can’t rely on either standard alone. The convergence of cybersecurity threats and AI-specific risks creates a complex risk environment that demands integrated governance approaches. Companies implementing AI systems recognize that technical security controls, while essential, can’t address algorithmic bias, ethical considerations, or the societal impact of automated decision-making.

The future of organizational risk management lies in the strategic integration of established information security practices with emerging AI governance frameworks. As artificial intelligence becomes increasingly embedded in business operations and regulatory frameworks continue evolving worldwide, organizations that develop comprehensive capabilities spanning both domains will achieve competitive advantages through enhanced trust, compliance readiness, and operational resilience.