The NIST AI Risk Management Framework provides a structured approach for organizations to identify, assess, and manage risks throughout the artificial intelligence system lifecycle. Released in January 2023, this voluntary framework helps organizations balance AI innovation with responsible deployment practices.

Organizations face mounting pressure to deploy AI systems while ensuring safety, security, and fairness. The framework addresses complex challenges like algorithmic bias, privacy protection, and system reliability that traditional risk management approaches often miss. Many organizations struggle to translate AI governance principles into practical implementation steps.

The NIST framework centers on four core functions: Govern, Map, Measure, and Manage. These functions work together to create comprehensive AI risk management capabilities. The framework describes seven characteristics of trustworthy AI: valid and reliable; safe; secure and resilient; accountable and transparent; explainable and interpretable; privacy-enhanced; and fair, with harmful bias managed.

Source: NIST AI Resource Center – National Institute of Standards and Technology

Implementation requires systematic execution across seven critical steps that transform framework principles into operational reality. Each step builds on previous work while creating sustainable risk management capabilities that evolve with changing AI technologies and organizational needs.

Understanding the NIST AI Risk Management Framework

The NIST AI Risk Management Framework (AI RMF 1.0) is a voluntary framework created by the National Institute of Standards and Technology to help organizations manage risks throughout the entire lifecycle of AI systems. Rather than imposing specific requirements, the AI RMF offers flexible guidance that organizations can adapt to their particular contexts and risk tolerance levels.

It helps organizations build trustworthy AI systems by establishing clear processes for understanding where risks might emerge and how to address them. It also recognizes that AI systems operate in complex environments where technological capabilities intersect with human values and social structures.

The Four Core Functions Explained

The NIST AI RMF centers around four interconnected core functions that work together to provide comprehensive AI risk management:

Govern establishes the organizational culture, policies, and structures necessary for effective AI risk management. Under this function, organizations set up oversight bodies such as governance committees, develop policies, and integrate AI risk considerations into standard business processes. Govern is cross-cutting: it informs and supports the other three functions.

Map identifies the context and characteristics of AI systems within their operational environments. This function documents system purposes, data dependencies, stakeholder communities, and potential impacts on individuals and society.

Measure analyzes, benchmarks, and monitors AI risks through quantitative, qualitative, and mixed-method approaches. This function provides the empirical foundation for informed decision-making about risk levels and mitigation effectiveness.

Manage allocates resources and implements specific risk treatment strategies based on mapping and measurement insights. This function includes developing response plans, recovery procedures, and communication protocols for AI-related incidents.

How NIST AI RMF Supports Trustworthy AI Development

The framework directly addresses key concerns about responsible AI by embedding trustworthiness characteristics into every aspect of system development and operation. The AI RMF identifies seven characteristics that together make an AI system trustworthy.

For bias and fairness concerns, the framework calls for ongoing monitoring of decision-making patterns across different demographic groups. Organizations actively assess whether their AI systems produce equitable results rather than simply avoiding obvious discrimination.

Transparency and accountability practices encouraged by the framework help ensure that AI system operations can be explained to relevant stakeholders. Documentation creates clear audit trails for significant decisions, system modifications, and risk management activities.

The framework addresses privacy through data protection practices and evaluation of privacy-preserving techniques under actual operational conditions. Its security guidance covers both traditional cybersecurity threats and AI-specific attacks such as adversarial inputs and model extraction attempts.

Preparing for NIST AI RMF Implementation

Implementing the NIST AI Risk Management Framework requires careful preparation across three fundamental areas: understanding your current risk position, assembling the right team, and establishing implementation foundations. Organizations that complete these preparatory steps position themselves for successful framework deployment.

Assessing Your Organization’s Current AI Risk Posture

Organizations begin preparation by conducting a comprehensive assessment of their existing AI governance capabilities and current risk management practices. This assessment provides the baseline understanding necessary for effective NIST AI RMF implementation planning.

The assessment starts with documenting current AI governance structures, including any existing AI ethics committees, risk oversight bodies, or AI-related policies. Organizations examine whether these structures have decision-making authority, defined responsibilities, and regular meeting schedules.

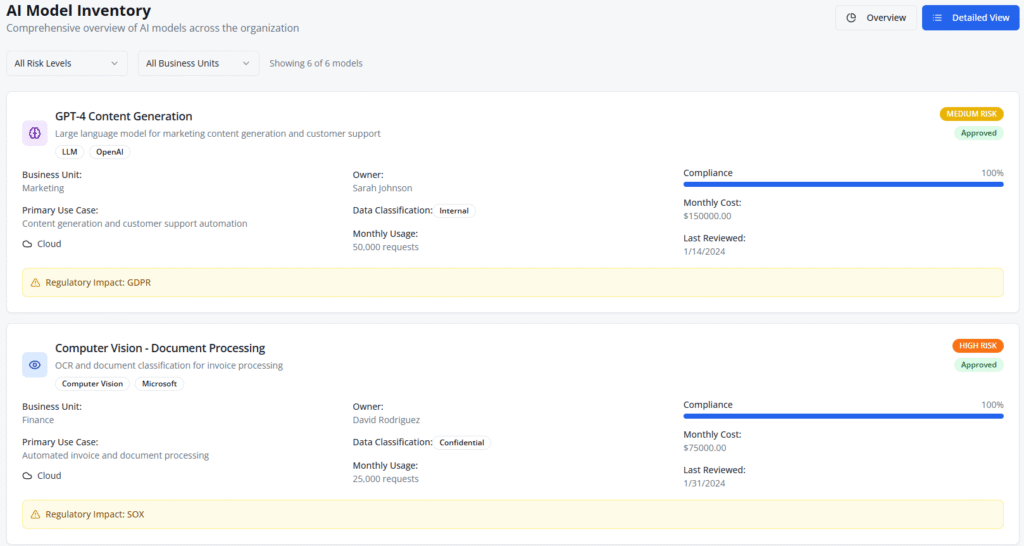

Many organizations discover they have informal AI governance practices that lack documentation or formal authority.

Creating a comprehensive inventory of current AI systems represents a critical component of the assessment. This inventory documents all AI applications currently in use, under development, or planned for future deployment.

The assessment concludes with a gap analysis comparing current capabilities against the outcomes the NIST AI RMF describes. Organizations identify specific areas where new capabilities, resources, or structures will be needed for successful implementation.
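As a rough illustration, the gap analysis can be recorded by rating current and target maturity for each of the four core functions and sorting the shortfalls. This is a minimal Python sketch; the 0 to 5 maturity scale, the `FunctionAssessment` and `gap_report` names, and the example ratings are all hypothetical, since the AI RMF does not define maturity levels.

```python
from dataclasses import dataclass


# Hypothetical 0-5 maturity scale; the AI RMF does not prescribe one.
@dataclass
class FunctionAssessment:
    function: str  # one of the core functions: Govern, Map, Measure, Manage
    current: int   # assessed current maturity
    target: int    # maturity the organization wants to reach


def gap_report(assessments: list[FunctionAssessment]) -> list[tuple[str, int]]:
    """Return (function, gap) pairs with a positive gap, largest gap first."""
    gaps = [(a.function, a.target - a.current) for a in assessments]
    return sorted((g for g in gaps if g[1] > 0), key=lambda g: g[1], reverse=True)


example = [
    FunctionAssessment("Govern", current=1, target=4),
    FunctionAssessment("Map", current=2, target=4),
    FunctionAssessment("Measure", current=1, target=3),
    FunctionAssessment("Manage", current=3, target=3),
]
print(gap_report(example))  # [('Govern', 3), ('Map', 2), ('Measure', 2)]
```

Sorting by gap size gives a first cut at where implementation effort should start; a real assessment would also weigh cost and dependencies between functions.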

Building Your AI Governance Team

Effective NIST AI RMF implementation requires a multidisciplinary team with representatives from technical, business, legal, and ethics backgrounds. The governance team provides the collaborative foundation necessary for addressing the diverse aspects of AI risk management.

The AI governance team includes technical representatives who understand AI system development, deployment, and operation. These team members provide expertise on system capabilities, limitations, and technical risk factors.

Business representatives ensure that AI risk management activities align with organizational objectives and operational requirements. Legal and compliance representatives provide expertise on regulatory requirements, contractual obligations, and liability considerations.

Each governance team member needs a clearly defined role and set of responsibilities within the NIST AI RMF implementation process. The team as a whole also needs defined decision-making authority for AI risk management activities, including the ability to approve or reject AI projects based on risk assessments.

Step 1: Build Comprehensive AI Governance Infrastructure

Governance infrastructure provides the organizational framework that oversees AI risk management across your entire organization. Building this foundation first creates the structure needed to manage AI risks effectively throughout your company’s AI journey.

Create Cross-Functional AI Governance Committee

Source: Madison AI

An AI governance committee brings together representatives from different parts of your organization to make decisions about AI risks and policies. The committee composition includes:

- Technical teams — developers and maintainers of AI systems

- Business units — groups that use AI tools in their work

- Legal and compliance professionals — experts who understand regulations

- Risk management specialists — people focused on organizational risk

- Ethics experts — professionals who understand ethical implications

- Executive leaders — people with decision-making authority

The committee meets regularly — typically monthly or quarterly — to review AI projects, assess risks, and make governance decisions. Meeting frequency depends on your organization’s AI activity level and risk profile.

The committee has substantive decision-making power, including authority to approve, modify, or stop AI projects based on risk assessments and organizational priorities. The reporting structure connects the committee directly to executive leadership and board oversight.

Develop AI Risk Management Policies and Standards

AI risk management policies establish the rules and standards that guide how your organization develops, deploys, and operates AI systems. These policies address data privacy protection, cybersecurity requirements, bias mitigation strategies, transparency obligations, and accountability mechanisms.

Policies are tailored to your organization’s specific context, risk tolerance, and regulatory environment. A healthcare organization faces different AI risks than a retail company, so policies reflect these differences.

Risk tolerance statements define what level of AI risk your organization accepts in different situations. These statements help teams understand when AI projects can proceed and when additional safeguards are required.
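Risk tolerance statements can also be captured in a machine-readable form so that project intake tools apply them consistently. The sketch below assumes a hypothetical 1 to 25 residual risk score and invented use-case categories and limits; each organization would set its own.

```python
# Hypothetical tolerance statements: per use-case category, the highest residual
# risk score (assumed 1-25 scale) a team may accept on its own, and the highest
# score it may accept only with added safeguards. Above that, the committee decides.
RISK_TOLERANCE = {
    "internal_productivity": {"proceed_max": 9, "safeguards_max": 16},
    "customer_facing": {"proceed_max": 6, "safeguards_max": 12},
    "high_stakes_decision": {"proceed_max": 3, "safeguards_max": 8},
}


def tolerance_decision(category: str, residual_risk: int) -> str:
    """Map a residual risk score to a decision under the category's tolerance."""
    limits = RISK_TOLERANCE[category]
    if residual_risk <= limits["proceed_max"]:
        return "proceed"
    if residual_risk <= limits["safeguards_max"]:
        return "proceed_with_safeguards"
    return "escalate_to_committee"


print(tolerance_decision("customer_facing", 10))       # proceed_with_safeguards
print(tolerance_decision("high_stakes_decision", 10))  # escalate_to_committee
```

The same score leads to different outcomes depending on context, which is the point of writing tolerance per situation rather than as a single number.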

Compliance requirements within the policies ensure AI systems meet relevant regulations and industry standards. For organizations in regulated industries, these requirements connect AI governance to existing compliance frameworks.

Establish Executive Oversight and Accountability

Executive sponsorship provides the leadership commitment and resources necessary for effective AI governance. A senior executive, often the CTO or Chief Risk Officer, takes responsibility for AI governance outcomes and ensures the program receives adequate support.

Board reporting mechanisms keep the board of directors informed about AI risks and governance activities. Regular reports summarize AI risk assessments, governance decisions, and performance against established metrics.

Accountability measures define who is responsible for different aspects of AI governance and what consequences follow from governance failures. These measures establish clear ownership for AI risk management activities and create incentives for responsible AI practices.

Performance metrics track how well the AI governance program achieves its objectives. Metrics include the number and severity of AI-related incidents, compliance with governance policies, and effectiveness of risk mitigation activities.

Step 2: Conduct Systematic AI Risk Assessment and Mapping

Risk assessment and mapping creates a comprehensive understanding of potential problems before they occur. Organizations document every AI system, identify affected stakeholders, map risks across lifecycle stages, and prioritize problems by impact and likelihood.

Inventory All AI Systems and Applications

The inventory process starts with identifying every AI system in the organization. Teams often discover more AI tools than initially expected, including chatbots, recommendation engines, automated decision systems, and predictive analytics platforms.

Each system gets cataloged with specific details about its purpose, current status, and business function. System documentation includes the intended use case, such as customer service automation or fraud detection.

Development stage information shows whether systems are in research, testing, pilot deployment, or full production. Technical details cover data sources, algorithms used, and integration points with other business systems.

Business impact assessment measures how each AI system affects operations, revenue, or customer experience. The inventory becomes a living document that teams update when new systems deploy or existing systems change functionality.
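An inventory entry can be modeled as a simple record so the living document stays queryable. This sketch uses hypothetical field names drawn from the details listed above (purpose, business function, stage, data sources, algorithms, integrations, business impact); it is not a schema defined by NIST.

```python
from dataclasses import dataclass, field
from datetime import date
from enum import Enum


class Stage(Enum):
    RESEARCH = "research"
    TESTING = "testing"
    PILOT = "pilot"
    PRODUCTION = "production"


@dataclass
class AISystemRecord:
    """One inventory entry; field names are illustrative, not a NIST schema."""
    name: str
    purpose: str            # intended use case, e.g. fraud detection
    business_function: str
    stage: Stage
    data_sources: list[str] = field(default_factory=list)
    algorithms: list[str] = field(default_factory=list)
    integrations: list[str] = field(default_factory=list)
    business_impact: str = "unassessed"
    last_reviewed: date = field(default_factory=date.today)


class AIInventory:
    def __init__(self) -> None:
        self._records: dict[str, AISystemRecord] = {}

    def upsert(self, record: AISystemRecord) -> None:
        """Add a new system, or replace its entry when an existing system changes."""
        record.last_reviewed = date.today()
        self._records[record.name] = record

    def by_stage(self, stage: Stage) -> list[str]:
        """Names of all systems currently at the given development stage."""
        return sorted(r.name for r in self._records.values() if r.stage is stage)


inv = AIInventory()
inv.upsert(AISystemRecord("support-bot", "customer service automation", "Support", Stage.PILOT))
inv.upsert(AISystemRecord("fraud-scorer", "fraud detection", "Payments", Stage.PRODUCTION,
                          data_sources=["transactions"]))
print(inv.by_stage(Stage.PRODUCTION))  # ['fraud-scorer']
```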

Identify Key Stakeholders and Impact Areas

Stakeholder mapping identifies everyone who affects or is affected by AI systems. Internal stakeholders include employees who use AI tools, managers who oversee AI projects, technical teams who maintain systems, and executives who make strategic decisions.

External stakeholders encompass customers who interact with AI systems, business partners who share data, regulatory agencies that enforce compliance, and community members who experience indirect effects.

Impact analysis examines potential consequences for each stakeholder group. Customer impacts might include privacy violations, unfair treatment, or service disruptions. Employee impacts could involve job displacement, changed work processes, or exposure to biased decision-making tools.

The stakeholder map guides communication strategies and helps teams understand which groups need notification when problems occur. Different stakeholders require different types of information and have varying levels of technical expertise.

Map Risks Across the Complete AI Lifecycle

Risk mapping examines potential problems at each stage of AI system development and operation. Each phase presents unique challenges that require specific attention and mitigation strategies.

Design phase risks often stem from incomplete understanding of requirements or failure to consider diverse user needs. Teams may overlook edge cases or fail to anticipate how systems will perform in real-world conditions.

Development risks include data quality problems, such as incomplete datasets or historical biases embedded in training information. Algorithm choices may create unintended consequences, and testing may not cover all possible scenarios.

Deployment risks emerge when systems move from controlled environments to real operations. Performance may differ from testing results, integration with existing systems may fail, or users may interact with systems in unexpected ways.

Monitoring risks involve failure to detect problems after deployment. Systems may gradually degrade without triggering alerts, or teams may receive so many notifications that important signals get missed.

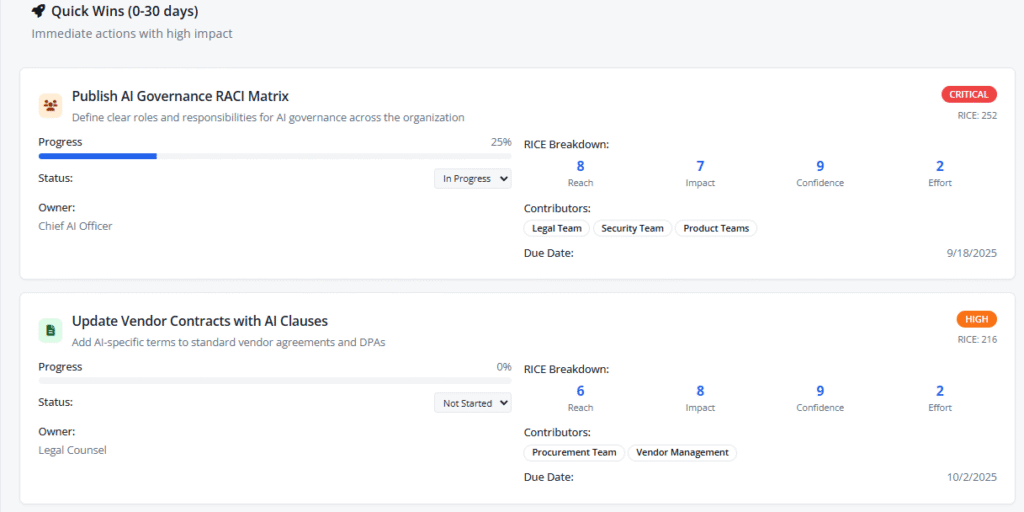

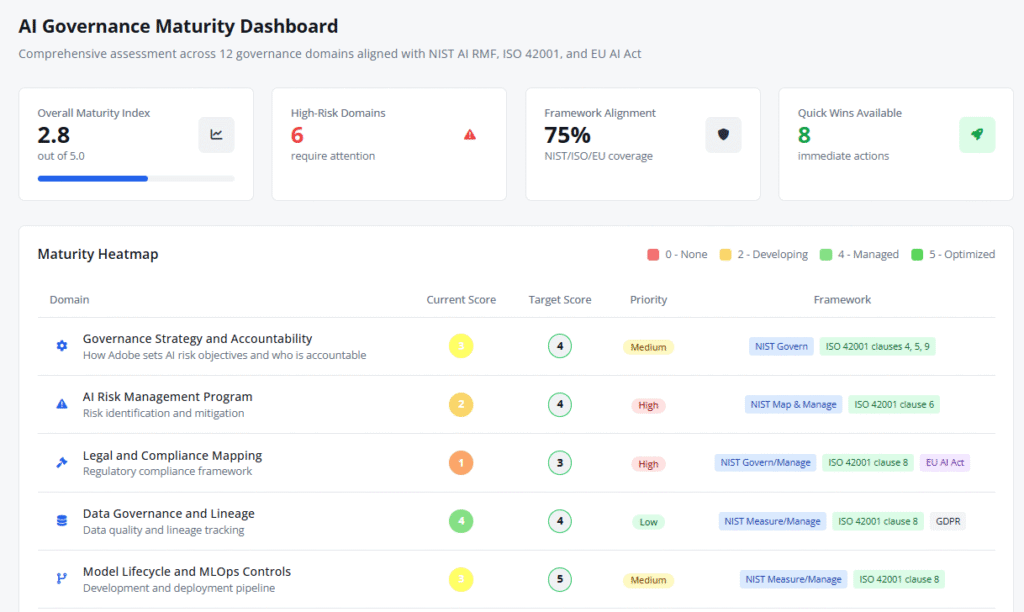

Prioritize Risks Based on Impact and Likelihood

Risk prioritization helps teams focus limited resources on the most serious problems. The process combines impact assessment with likelihood evaluation to create overall risk scores.

Source: Balbix

Impact scoring considers multiple dimensions, including financial losses, reputation damage, regulatory penalties, and harm to individuals. High-impact risks might include discriminatory hiring decisions, privacy breaches affecting thousands of customers, or safety failures in autonomous systems.

Likelihood assessment examines factors that make problems more or less probable. High-likelihood risks often involve common failure modes, such as data quality issues or integration problems.

Risk heat maps provide visual representation of prioritized risks, with impact on one axis and likelihood on the other. High-impact, high-likelihood risks appear in the red zone requiring immediate attention. Medium risks fall in yellow zones for scheduled remediation.
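The scoring behind a heat map is often just impact multiplied by likelihood on ordinal scales. The sketch below assumes 1 to 5 scales and arbitrary zone cut-offs (15 and above is red, 6 to 14 yellow, below 6 green); multiplication is one common convention, not one the AI RMF mandates.

```python
# Assumed 1-5 ordinal scales and zone cut-offs; organizations calibrate their own.
RED_MIN, YELLOW_MIN = 15, 6


def risk_score(impact: int, likelihood: int) -> int:
    """Overall score = impact x likelihood, both on a 1-5 scale (range 1-25)."""
    if not (1 <= impact <= 5 and 1 <= likelihood <= 5):
        raise ValueError("impact and likelihood must be between 1 and 5")
    return impact * likelihood


def zone(score: int) -> str:
    if score >= RED_MIN:
        return "red"     # immediate attention
    if score >= YELLOW_MIN:
        return "yellow"  # scheduled remediation
    return "green"       # monitor


def prioritize(risks: dict[str, tuple[int, int]]) -> list[tuple[str, int, str]]:
    """Rank risks highest score first; each value is (impact, likelihood)."""
    scored = [(name, risk_score(imp, lik)) for name, (imp, lik) in risks.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [(name, score, zone(score)) for name, score in scored]


register = {
    "discriminatory hiring recommendations": (5, 3),
    "training data quality issues": (3, 4),
    "CRM integration failure": (2, 2),
}
for row in prioritize(register):
    print(row)
```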

Step 3: Develop Robust Risk Measurement and Monitoring

Robust risk measurement and monitoring forms the foundation of effective AI governance by providing the data needed to make informed decisions about AI system safety and performance. Organizations establish measurement systems to track how well their AI systems perform and identify potential problems before they cause harm.

Select Risk Metrics and Key Performance Indicators

Organizations choose specific measurements to track different aspects of AI system trustworthiness and performance. These metrics provide concrete ways to evaluate whether AI systems operate safely and fairly.

Bias and fairness metrics measure whether AI systems treat different groups of people equitably. Common measurements include demographic parity, which checks whether positive outcomes occur at similar rates across demographic groups, and equalized odds, which checks whether true positive and false positive rates are similar across groups.
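Both metrics can be computed from labeled predictions grouped by a demographic attribute. This is a minimal sketch assuming binary labels and predictions; it reports each metric as the largest gap between any two groups, which is one common convention among several. The group labels and data are made up for illustration.

```python
from collections import defaultdict


def _rate(num: int, den: int) -> float:
    """Safe ratio: NaN when a group has no members in the denominator."""
    return num / den if den else float("nan")


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate, and false positive rate.

    Labels and predictions are binary (0 or 1).
    """
    counts = defaultdict(lambda: {"n": 0, "sel": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["sel"] += p
        if t == 1:
            c["pos"] += 1
            c["tp"] += p
        else:
            c["neg"] += 1
            c["fp"] += p
    return {
        g: {"selection_rate": _rate(c["sel"], c["n"]),
            "tpr": _rate(c["tp"], c["pos"]),
            "fpr": _rate(c["fp"], c["neg"])}
        for g, c in counts.items()
    }


def max_gap(rates, metric):
    """Largest difference in a metric between any two groups."""
    values = [r[metric] for r in rates.values()]
    return max(values) - min(values)


y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
rates = group_rates(y_true, y_pred, groups)
demographic_parity_diff = max_gap(rates, "selection_rate")
equalized_odds_diff = max(max_gap(rates, "tpr"), max_gap(rates, "fpr"))
print(demographic_parity_diff, equalized_odds_diff)  # 0.5 0.5
```

A difference of zero means parity on that metric; the fairness thresholds discussed later decide how large a gap is acceptable.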

Accuracy and reliability metrics track how often AI systems make correct decisions and whether performance remains consistent over time. Accuracy is the percentage of predictions or classifications that are correct. Precision is the fraction of positive predictions that turn out to be correct.
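Accuracy and precision are straightforward to compute from labeled outcomes, and tracking accuracy per time period is one simple way to check consistency over time. A minimal sketch, assuming binary labels; the period labels in the example are hypothetical.

```python
from collections import defaultdict


def accuracy(y_true, y_pred) -> float:
    """Share of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision(y_true, y_pred) -> float:
    """Among items predicted positive (1), the share that are truly positive."""
    flagged = [t for t, p in zip(y_true, y_pred) if p == 1]
    return sum(flagged) / len(flagged) if flagged else float("nan")


def accuracy_by_period(records):
    """records: iterable of (period, y_true, y_pred) tuples -> {period: accuracy}."""
    hits = defaultdict(list)
    for period, t, p in records:
        hits[period].append(t == p)
    return {period: sum(h) / len(h) for period, h in hits.items()}


y_true = [1, 0, 1, 1, 0]
y_pred = [1, 0, 0, 1, 1]
print(accuracy(y_true, y_pred))   # 3 of 5 predictions are correct
print(precision(y_true, y_pred))  # 2 of 3 positive predictions are correct
print(accuracy_by_period([("week 1", 1, 1), ("week 1", 0, 0),
                          ("week 2", 1, 0), ("week 2", 0, 0)]))
```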

Security metrics monitor protection against threats and unauthorized access. Organizations track failed login attempts, successful intrusion attempts, data access violations, and system availability during attacks.

Privacy metrics evaluate how well AI systems protect personal information. Organizations measure data minimization compliance by tracking how much personal data systems collect versus what they actually need.

Implement Automated Risk Monitoring Tools

Automated monitoring systems continuously watch AI systems and alert personnel when problems occur. These tools provide real-time oversight without requiring constant human attention.

Monitoring platforms collect data from AI systems during normal operations. These platforms track prediction outputs, confidence scores, processing times, and resource usage. The platforms store this data for analysis and trend identification.

Alert systems notify relevant personnel when measurements exceed acceptable thresholds. Organizations configure alerts for different severity levels, with critical alerts requiring immediate response and lower-priority alerts generating daily or weekly summaries.
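The severity-based routing described above might look like the following. This is a hypothetical sketch: the metric names, limits, and the `paged` and `digest` lists (stand-ins for a paging service and a periodic summary) are assumptions, not features of any particular monitoring product.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    CRITICAL = 3


@dataclass
class Threshold:
    metric: str
    limit: float
    higher_is_worse: bool  # True for latency or bias gaps, False for accuracy
    severity: Severity


class AlertRouter:
    """Critical breaches are paged immediately; others are batched for a digest."""

    def __init__(self, thresholds: list[Threshold]) -> None:
        self.thresholds = thresholds
        self.paged: list[tuple[str, str, float]] = []   # stand-in for on-call paging
        self.digest: list[tuple[str, str, float]] = []  # stand-in for daily/weekly summary

    def evaluate(self, metrics: dict[str, float]) -> None:
        for th in self.thresholds:
            value = metrics.get(th.metric)
            if value is None:
                continue  # metric not reported in this batch
            breached = value > th.limit if th.higher_is_worse else value < th.limit
            if not breached:
                continue
            alert = (th.severity.name, th.metric, value)
            if th.severity is Severity.CRITICAL:
                self.paged.append(alert)
            else:
                self.digest.append(alert)


router = AlertRouter([
    Threshold("accuracy", 0.90, higher_is_worse=False, severity=Severity.CRITICAL),
    Threshold("latency_p95_ms", 500, higher_is_worse=True, severity=Severity.MEDIUM),
    Threshold("fpr_gap", 0.05, higher_is_worse=True, severity=Severity.CRITICAL),
])
router.evaluate({"accuracy": 0.93, "latency_p95_ms": 820, "fpr_gap": 0.08})
print(router.paged)   # [('CRITICAL', 'fpr_gap', 0.08)]
print(router.digest)  # [('MEDIUM', 'latency_p95_ms', 820)]
```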

Data collection processes ensure monitoring systems gather complete and accurate information. Organizations establish data pipelines that capture relevant metrics without interfering with AI system performance.

Integration capabilities connect monitoring tools with existing IT infrastructure. Organizations integrate AI monitoring with security information and event management systems, IT service management platforms, and business intelligence tools.

Establish Performance Baselines and Thresholds

Performance baselines define normal operating ranges for AI systems, while thresholds trigger alerts when performance deviates from acceptable levels. Organizations establish these standards during initial AI system deployment and update them based on operational experience.

Baseline establishment involves measuring AI system performance under normal operating conditions over sufficient time periods to capture typical variation. Organizations collect baseline measurements across different user populations, time periods, and usage scenarios.

Accuracy thresholds define minimum acceptable performance levels for different types of decisions. Organizations set higher accuracy requirements for high-stakes decisions and may accept lower accuracy for less critical applications.
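Baselines and thresholds can be combined: derive a statistical floor from baseline measurements, then never let it fall below a policy minimum that depends on the stakes of the decision. This sketch assumes a mean-minus-three-standard-deviations rule and invented minimums of 0.95 and 0.85; both are illustrative choices.

```python
import statistics

# Hypothetical policy minimums: high-stakes decisions get a stricter floor.
MIN_ACCURACY = {"high_stakes": 0.95, "standard": 0.85}


def baseline(samples: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of a metric under normal operation."""
    return statistics.mean(samples), statistics.stdev(samples)


def accuracy_threshold(samples: list[float], stakes: str, k: float = 3.0) -> float:
    """Alert when accuracy drops below the stricter (higher) of two limits:
    the baseline mean minus k standard deviations, or the policy minimum."""
    mean, sd = baseline(samples)
    return max(mean - k * sd, MIN_ACCURACY[stakes])


daily_accuracy = [0.96, 0.95, 0.97, 0.96, 0.96]  # illustrative baseline window
print(accuracy_threshold(daily_accuracy, "high_stakes"))          # 0.95 (policy floor wins)
print(round(accuracy_threshold(daily_accuracy, "standard"), 4))  # 0.9388
```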

Fairness thresholds establish acceptable levels of disparity between different demographic groups. Organizations define maximum acceptable differences in accuracy, false positive rates, or decision rates across protected characteristics.

Security thresholds trigger alerts when systems detect potential threats or vulnerabilities. Organizations set thresholds for failed authentication attempts, unusual access patterns, and system resource usage that might indicate attacks.

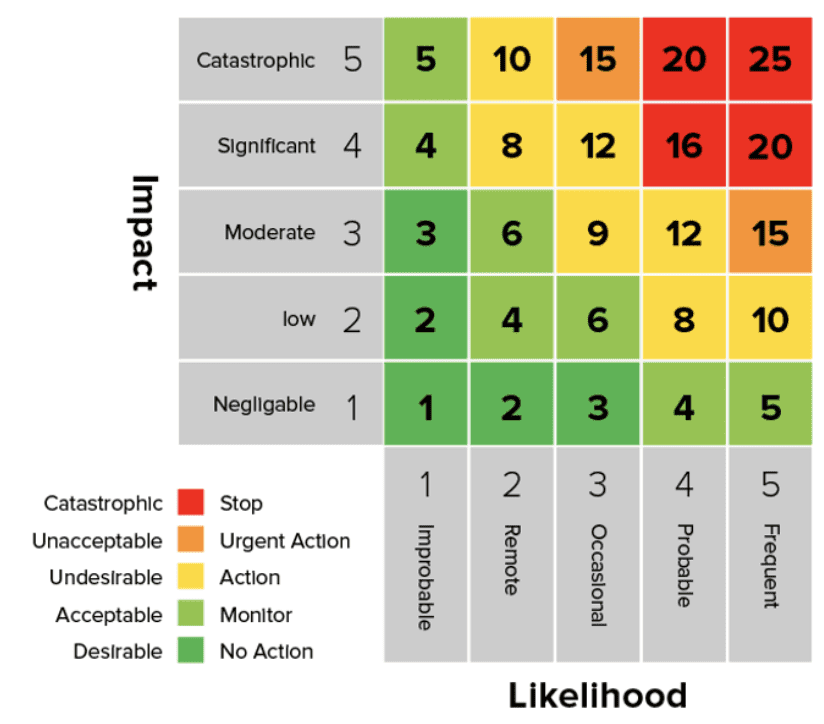

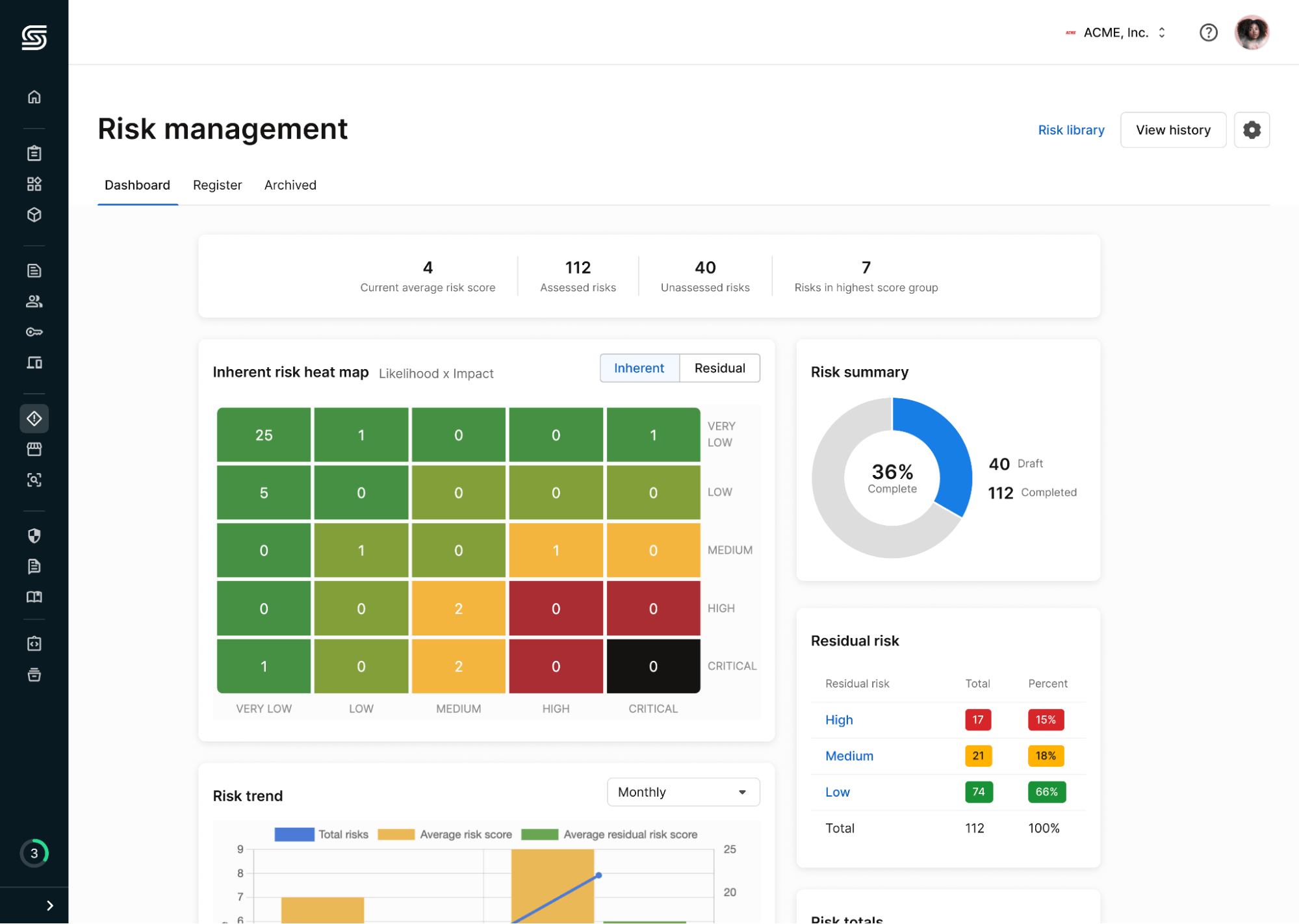

Create Risk Reporting and Dashboard Systems

Reporting systems translate monitoring data into information that stakeholders can understand and act upon. These systems provide different views of AI system performance tailored to various organizational roles and responsibilities.

Source: Secureframe

Executive dashboards present high-level summaries of AI system performance and risk levels. These dashboards highlight key trends, significant threshold violations, and overall system health using visual indicators that executives can quickly interpret.

Technical dashboards provide detailed information for AI system operators and developers. These dashboards include detailed performance metrics, error logs, system resource usage, and diagnostic information.

Operational reporting tracks day-to-day AI system performance for business users. These reports include accuracy trends, processing volumes, user satisfaction metrics, and business outcome measurements.

Compliance reporting documents AI system performance for regulatory and audit purposes. These reports include fairness assessments, security compliance measurements, privacy protection metrics, and incident summaries.

Step 4: Design Strategic Risk Management and Mitigation Plans

After mapping and measuring AI risks, organizations create comprehensive plans to handle identified threats. Strategic risk management involves choosing how to address each risk based on its priority level and potential impact.

Create Targeted Risk Treatment Strategies

Organizations have four main approaches for handling AI risks: avoidance, mitigation, transfer, and acceptance. Risk avoidance eliminates exposure to specific threats by choosing not to use certain AI capabilities or deploy systems in high-risk scenarios.

Risk mitigation reduces either the likelihood of problems occurring or their potential damage. Technical mitigation strategies include implementing bias detection algorithms, adding human review processes, or creating automatic shutoff mechanisms when systems detect anomalies.

Risk transfer shifts responsibility to other parties through insurance policies, vendor contracts, or third-party services. Organizations might require AI vendors to maintain liability insurance or include indemnification clauses in contracts.

Risk acceptance involves deliberately choosing to retain certain risks after weighing costs and benefits. Organizations might accept low-probability risks with minimal impact or risks where mitigation costs exceed potential damages.
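The four treatment options can be expressed as an ordered set of rules. This is only an illustration under assumed cut-offs (avoid at a score of 20 or more on a 1 to 25 scale, accept below 5); in practice the choice also depends on context, legal obligations, and stakeholder input, and the example risks and figures are invented.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    score: int              # impact x likelihood on an assumed 1-25 scale
    expected_loss: float    # estimated damage if the risk materializes
    mitigation_cost: float  # estimated cost of controls that would reduce it
    transferable: bool      # can insurance or a vendor contract absorb it?


def choose_treatment(risk: Risk, avoid_at: int = 20, accept_below: int = 5) -> str:
    """Illustrative decision order; real choices also weigh context and values."""
    if risk.score >= avoid_at:
        return "avoid"      # too severe to deploy in this scenario
    if risk.score < accept_below:
        return "accept"     # low probability and minimal impact
    if risk.mitigation_cost <= risk.expected_loss:
        return "mitigate"   # controls cost less than the damage they prevent
    if risk.transferable:
        return "transfer"   # shift the exposure through insurance or contracts
    return "accept"         # mitigation would cost more than the potential damage


risks = [
    Risk("fully automated benefit denials", 25, 1_000_000, 500_000, False),
    Risk("chatbot formatting glitches", 3, 1_000, 5_000, False),
    Risk("biased resume screening", 16, 200_000, 40_000, False),
    Risk("third-party model outage", 12, 50_000, 90_000, True),
]
for r in risks:
    print(f"{r.name}: {choose_treatment(r)}")
```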

Develop Controls for High-Priority Risk Areas

Technical controls provide automated protection against identified AI risks. Access control systems limit who can modify AI models or access sensitive training data. Encryption protects data during storage and transmission while maintaining system functionality.

Bias detection controls analyze AI outputs across different demographic groups to identify discriminatory patterns. Privacy-preserving techniques such as differential privacy add calibrated random noise to query results or training procedures, limiting what outputs reveal about any individual while preserving aggregate analytical value.

Procedural safeguards establish organizational processes that support responsible AI operations. Change management procedures require risk assessment before modifying AI systems. Regular audit schedules ensure ongoing compliance with policies and regulations.

Human oversight mechanisms define when and how people intervene in AI decision-making. Clear criteria specify which decisions require human review and establish escalation procedures for complex cases.

Establish Incident Response and Recovery Procedures

Incident response plans provide step-by-step guidance for handling AI-related problems. Detection procedures define warning signs that indicate system failures, security breaches, or discriminatory outcomes.


Source: MicroAge

Response teams include technical personnel who can diagnose problems, legal experts who understand regulatory implications, and communication specialists who manage public disclosure.

Containment strategies limit damage when incidents occur. These might include temporarily disabling affected AI systems, restricting access to compromised data, or implementing manual backup procedures.

Recovery procedures restore normal operations after resolving incidents. Technical recovery involves fixing system problems, updating security measures, and validating system performance before resuming operations.

Build Risk Communication and Stakeholder Engagement

Communication strategies vary based on different audience needs and expectations. Internal communication keeps employees informed about AI governance policies, their roles in risk management, and reporting procedures for potential issues.

Customer communication explains how AI systems affect user experiences while addressing privacy and fairness concerns. Regulatory communication demonstrates compliance with applicable laws and industry standards.

Transparency requirements balance openness with competitive and security concerns. Organizations typically publish high-level information about AI governance approaches, risk management strategies, and performance metrics.

Public disclosure protocols define when and how organizations announce AI-related incidents or changes. These protocols specify trigger conditions for disclosure, approval processes for public statements, and coordination with legal and regulatory authorities.

Step 5: Deploy AI Risk Controls and Security Safeguards

Organizations move from planning to action when they implement technical and procedural controls to prevent, detect, and respond to AI-related risks. This deployment phase transforms strategic risk management plans into operational reality through specific interventions.

Implement Technical Controls and Security Measures

Technical controls provide the foundation for protecting AI systems from various threats and vulnerabilities. Access control systems ensure only authorized personnel can interact with sensitive AI systems and data.

Data encryption protects information both when stored and when transmitted between systems. Encryption algorithms scramble data so unauthorized users cannot read sensitive information even if they gain access to it.

Model security measures protect AI algorithms from malicious attacks and unauthorized access. Adversarial attack protection systems detect when someone tries to manipulate AI inputs to cause incorrect outputs.

Threat detection systems continuously monitor AI infrastructure for suspicious activities. These systems identify unusual access patterns, unexpected performance changes, and potential security breaches.

Regular security assessments evaluate the effectiveness of implemented controls. Penetration testing simulates real-world attacks to identify vulnerabilities before malicious actors can exploit them.

Establish Human Oversight and Review Processes

Human oversight mechanisms ensure appropriate human involvement in AI system decision-making processes. Organizations define clear criteria for when human intervention is required, particularly for high-risk decisions that affect individuals significantly.

Review checkpoints create structured opportunities for human evaluation of AI system outputs. These checkpoints occur at predetermined intervals or when certain conditions are met, such as confidence scores falling below established thresholds.
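A confidence-based checkpoint combined with an escalation path for high-impact decisions could be sketched as follows. The queue names and the 0.85 confidence cut-off are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    confidence: float  # model's confidence in its output, 0 to 1
    high_impact: bool  # e.g. significantly affects an individual's credit, job, or care


def review_route(decision: Decision, min_confidence: float = 0.85) -> str:
    """Return where the decision goes before it takes effect.

    Queue names and the 0.85 cut-off are illustrative assumptions.
    """
    if decision.high_impact:
        return "escalation_review"  # critical decisions always get a qualified reviewer
    if decision.confidence < min_confidence:
        return "human_review"       # checkpoint triggered by low confidence
    return "auto_approve"


for d in [Decision("c1", 0.97, False), Decision("c2", 0.62, False), Decision("c3", 0.99, True)]:
    print(d.case_id, review_route(d))
# c1 auto_approve
# c2 human_review
# c3 escalation_review
```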

Approval workflows route AI-generated decisions through designated personnel before implementation. Critical decisions follow escalation paths that ensure appropriate expertise and authority levels review outcomes.

Training programs prepare human oversight personnel to fulfill their roles effectively. Training covers AI system capabilities and limitations, review procedures, and escalation protocols.

Documentation requirements capture all human oversight activities for accountability and learning purposes. Organizations record when humans intervene, the reasons for intervention, and the outcomes of human decisions.

Deploy Data Protection and Privacy Controls

Data governance frameworks establish policies and procedures for managing information throughout the AI system lifecycle. Organizations implement data classification systems that categorize information based on sensitivity levels and protection requirements.

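Privacy preservation techniques protect individual privacy while maintaining AI system functionality. Differential privacy adds calibrated random noise to computations over the data, such as aggregate statistics or model training, so that results reveal little about whether any specific individual's record was included.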
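To make the idea concrete, the classic Laplace mechanism answers a counting query with differential privacy: a count changes by at most 1 when one person's record is added or removed, so adding Laplace noise with scale 1/ε gives ε-differential privacy. This is a teaching sketch only; Python's `random` module is not cryptographically secure and naive floating-point noise has known weaknesses, so production systems should rely on a vetted differential privacy library.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale) as the difference of two i.i.d.
    exponential draws with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng=None) -> float:
    """Return an epsilon-differentially-private count of values matching predicate.

    A counting query has sensitivity 1, so the Laplace noise scale is 1 / epsilon:
    smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


ages = [34, 51, 29, 62, 45, 38]  # true count of ages >= 40 is 3
rng = random.Random(42)          # seeded only to make the demo repeatable
print(private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng))
```

Each released answer is noisy, but the noise averages out over many queries, which is why real deployments also track a cumulative privacy budget.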

Consent management systems track and enforce individual permissions for data use. Organizations implement mechanisms to obtain, record, and honor user consent preferences.

Data minimization practices limit collection and retention to information necessary for AI system objectives. Organizations regularly review data inventories to identify and delete unnecessary information.

Access logging records all interactions with sensitive data used in AI systems. Organizations track who accesses what information, when access occurs, and what actions are performed.

Create Comprehensive Audit and Documentation Systems

Audit trail systems provide tamper-evident logging of all significant AI system activities. Organizations record AI decisions, human override actions, system modifications, and risk management activities.
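One common way to make logs tamper-evident is hash chaining: each entry stores a hash of the previous entry, so editing any past record breaks every later link. The sketch below uses SHA-256 over a canonical JSON encoding; the event types and fields are hypothetical. Chaining only detects tampering if the latest hash is also kept somewhere the writer cannot modify, such as a separate system or a write-once store.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only log in which each entry embeds the hash of the previous one."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash itself, in a canonical encoding.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def append(self, event_type: str, details: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event_type": event_type,
            "details": details,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and link; any edited entry breaks the chain."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("ai_decision", {"system": "fraud-scorer", "case": "c-101", "outcome": "flag"})
log.append("human_override", {"case": "c-101", "reviewer": "analyst-7", "outcome": "clear"})
print(log.verify())  # True
log.entries[0]["details"]["outcome"] = "clear"  # simulate tampering with history
print(log.verify())  # False
```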

Documentation requirements ensure transparency and accountability for AI system operations. Organizations maintain records of system design decisions, training data sources, performance metrics, and risk assessments.

Compliance tracking mechanisms monitor adherence to established policies and regulatory requirements. Organizations implement automated monitoring tools that flag potential violations and generate reports for oversight bodies.

Record retention policies specify how long different types of information are preserved. Organizations establish retention schedules based on legal requirements, business needs, and stakeholder expectations.

Step 6: Establish Continuous Risk Monitoring and Response

Building ongoing monitoring capabilities creates the foundation for detecting emerging risks and responding to incidents before they cause significant harm. Organizations implement monitoring systems that watch AI systems around the clock.

Deploy Real-Time Risk Detection and Alerting

Real-time monitoring systems automatically track AI system performance and flag unusual patterns that might indicate emerging risks. These systems collect data continuously from AI applications, analyzing performance metrics, user interactions, and system outputs.

Automated alert mechanisms notify relevant teams when specific thresholds are exceeded or when unusual patterns emerge. The alerts trigger when accuracy drops below acceptable levels, when bias metrics indicate unfair treatment of certain groups, or when security indicators suggest potential threats.

Anomaly detection capabilities identify patterns that deviate from normal system behavior, even when the specific risk wasn’t anticipated. These systems learn what normal operations look like and flag situations that fall outside expected parameters.
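A simple form of learning what normal looks like is to maintain a running mean and variance and flag values that sit far from them. This sketch uses Welford's online algorithm with an assumed three-standard-deviation limit and an assumed warm-up period; production anomaly detection often uses richer models, and the latency readings are invented.

```python
import math


class StreamingAnomalyDetector:
    """Flags values more than `z_limit` standard deviations from the running mean.

    Welford's online algorithm updates the mean and variance without storing
    history. Flagged values are not folded into the baseline, so a burst of bad
    data does not redefine what counts as normal.
    """

    def __init__(self, z_limit: float = 3.0, warmup: int = 30) -> None:
        self.z_limit = z_limit
        self.warmup = warmup  # observations to learn from before flagging anything
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0        # running sum of squared deviations from the mean

    def _update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    def observe(self, x: float) -> bool:
        """Return True if x is anomalous; otherwise learn from it and return False."""
        if self.n >= self.warmup:
            std = math.sqrt(self._m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) / std > self.z_limit:
                return True
        self._update(x)
        return False


detector = StreamingAnomalyDetector(z_limit=3.0, warmup=5)
latencies_ms = [100, 102, 98, 101, 99, 100, 103, 150, 101]
print([detector.observe(v) for v in latencies_ms])
# [False, False, False, False, False, False, False, True, False]
```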

Monitoring dashboards display key metrics in real time, allowing teams to observe system health at a glance. The dashboards present information in visual formats that make trends and problems easy to spot.

Data collection systems gather information from multiple sources, including system logs, user feedback, external data feeds, and third-party monitoring services. Threshold configuration defines the specific values that trigger alerts for different types of risks.

Create Escalation and Response Procedures

Escalation paths define who receives notifications when different types of risks are detected and how quickly they respond. Low-level technical issues might go to system administrators, while significant bias problems escalate to governance committees and senior leadership.

Response team activation procedures specify which personnel join incident response efforts based on the type and severity of the detected risk. Technical teams handle performance issues, while ethics experts join responses to fairness concerns.

Communication protocols establish how teams share information during incidents, including which communication channels to use and how frequently to provide updates. Clear communication prevents confusion and ensures all relevant parties stay informed.

Severity classification systems categorize incidents based on their potential impact, helping teams prioritize responses appropriately. Critical incidents that threaten safety receive immediate attention, while minor performance issues follow standard resolution procedures.

Authority structures define decision-making responsibilities during incidents, clarifying who can approve system shutdowns, policy changes, or resource allocation for response efforts. Time-based response requirements specify how quickly teams acknowledge alerts and begin response activities.
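Severity classification and time-based response requirements fit together naturally: each severity level carries an acknowledgment window. The classification rule, the four levels, and the window lengths below are illustrative assumptions only.

```python
from datetime import datetime, timedelta
from enum import Enum

class Severity(Enum):
    CRITICAL = 1
    HIGH = 2
    MEDIUM = 3
    LOW = 4

# Hypothetical acknowledgment windows for each severity level.
ACK_WINDOW = {
    Severity.CRITICAL: timedelta(minutes=15),
    Severity.HIGH: timedelta(hours=1),
    Severity.MEDIUM: timedelta(hours=8),
    Severity.LOW: timedelta(days=3),
}

def classify(safety_impact: bool, affected_users: int) -> Severity:
    """Illustrative rule: safety threats are always critical; otherwise scale by reach."""
    if safety_impact:
        return Severity.CRITICAL
    if affected_users >= 10_000:
        return Severity.HIGH
    if affected_users >= 100:
        return Severity.MEDIUM
    return Severity.LOW

def ack_overdue(severity, detected_at, now, acknowledged_at=None):
    """True if the alert was not (or has not yet been) acknowledged within its window."""
    deadline = detected_at + ACK_WINDOW[severity]
    if acknowledged_at is not None:
        return acknowledged_at > deadline
    return now > deadline
```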

Build Incident Investigation and Analysis Capabilities

Root cause analysis processes examine incidents systematically to identify why problems occurred and how to prevent similar issues in the future. Investigation teams trace problems back to their origins, whether in data, algorithms, processes, or external factors.

Documentation procedures capture detailed information about each incident, including timelines, contributing factors, response actions, and outcomes. Thorough documentation supports learning and helps teams recognize patterns across multiple incidents.
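A structured record makes incident documentation consistent and easy to compare across incidents. The sketch below assumes a simple in-memory record with the fields named above (timeline, contributing factors, response actions, outcome); the class and field names are hypothetical, and a real system would persist these records in a ticketing or GRC tool.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional

@dataclass
class TimelineEntry:
    at: datetime
    event: str

@dataclass
class IncidentRecord:
    incident_id: str
    system: str
    summary: str
    timeline: list[TimelineEntry] = field(default_factory=list)
    contributing_factors: list[str] = field(default_factory=list)
    response_actions: list[str] = field(default_factory=list)
    outcome: str = ""

    def log(self, at: datetime, event: str) -> None:
        """Add an event and keep the timeline in chronological order."""
        self.timeline.append(TimelineEntry(at, event))
        self.timeline.sort(key=lambda e: e.at)

    def duration(self) -> Optional[timedelta]:
        """Elapsed time from the first to the last logged event, if there are at least two."""
        if len(self.timeline) < 2:
            return None
        return self.timeline[-1].at - self.timeline[0].at
```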

Analysis workflows guide investigation teams through systematic examination of incident data, ensuring consistent and comprehensive analysis. The workflows include steps for data collection, hypothesis testing, and conclusion validation.

Expert involvement brings specialized knowledge to investigations when incidents involve complex technical, ethical, or regulatory issues. Subject matter experts help teams understand sophisticated problems and identify appropriate solutions.

Evidence preservation maintains detailed records of system states, data inputs, and decision processes during incidents. Pattern recognition activities examine multiple incidents to identify common themes, recurring problems, or systemic issues.
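Pattern recognition can start with something as simple as counting how many incidents share a root-cause category. This sketch assumes each incident has already been tagged with root-cause labels during investigation; the dictionary shape and the `recurring_causes` helper are hypothetical.

```python
from collections import Counter

def recurring_causes(incidents, min_count=2):
    """Return root-cause categories seen in at least min_count incidents, most common first."""
    # set() ensures a cause mentioned twice in one incident is only counted once.
    counts = Counter(cause for inc in incidents for cause in set(inc["root_causes"]))
    return [(cause, n) for cause, n in counts.most_common() if n >= min_count]

incidents = [
    {"id": "INC-1", "root_causes": ["training data drift", "missing monitoring"]},
    {"id": "INC-2", "root_causes": ["training data drift"]},
    {"id": "INC-3", "root_causes": ["access control misconfiguration", "missing monitoring"]},
    {"id": "INC-4", "root_causes": ["training data drift"]},
]
patterns = recurring_causes(incidents)
```

A cause that keeps reappearing, such as data drift in this hypothetical data, points to a systemic issue rather than a one-off failure.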

Develop Recovery and Remediation Protocols

System recovery procedures define steps for restoring normal operations after incidents, including technical repairs, data restoration, and performance validation. Recovery protocols ensure systems return to acceptable operation levels safely.

Stakeholder notification plans specify which parties receive information about incidents and recovery efforts, including users, customers, partners, and regulatory bodies. Notification timing and content vary based on stakeholder roles and incident severity.

Regulatory reporting requirements outline obligations to inform government agencies about specific types of AI incidents, particularly those involving safety, privacy, or discrimination concerns.

Remediation tracking monitors progress on corrective actions identified during incident investigations. Tracking systems ensure that recommended improvements are implemented and verified for effectiveness.
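Remediation tracking usually distinguishes between an action being implemented and its effectiveness being verified. The sketch below models that lifecycle under assumed status names and fields; none of it comes from the NIST framework itself.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum

class Status(Enum):
    OPEN = "open"
    IMPLEMENTED = "implemented"
    VERIFIED = "verified"

@dataclass
class CorrectiveAction:
    action_id: str
    incident_id: str
    description: str
    owner: str
    due: date
    status: Status = Status.OPEN

    def mark_implemented(self) -> None:
        self.status = Status.IMPLEMENTED

    def mark_verified(self) -> None:
        # Verification only makes sense once the fix is actually in place.
        if self.status is not Status.IMPLEMENTED:
            raise ValueError("an action must be implemented before it can be verified")
        self.status = Status.VERIFIED

def outstanding(actions, today):
    """Split actions that are not yet verified into overdue and on-track lists."""
    pending = [a for a in actions if a.status is not Status.VERIFIED]
    overdue = [a for a in pending if a.due < today]
    on_track = [a for a in pending if a.due >= today]
    return overdue, on_track
```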

Testing protocols verify that systems operate correctly after recovery actions are completed. Communication strategies manage public and stakeholder messaging about incidents and recovery efforts.

Step 7: Build Ongoing Improvement and Framework Evolution

AI governance requires constant attention and refinement rather than a one-time setup. Organizations establish systematic processes to evaluate, update, and strengthen their AI risk management practices as technologies evolve and new challenges emerge.

Conduct Regular Framework Performance Reviews

Framework performance reviews provide essential feedback on how well AI governance practices work in real-world conditions. Organizations schedule these assessments at predetermined intervals, typically quarterly for high-risk systems and annually for lower-risk applications.
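Risk-tiered review intervals are straightforward to turn into a schedule. This sketch assumes two tiers matching the quarterly and annual cadence above; the tier names and helper functions are hypothetical. Month arithmetic clamps to the last day of shorter months.

```python
import calendar
from datetime import date

# Hypothetical review cadence, in months, for each risk tier.
REVIEW_INTERVAL_MONTHS = {"high": 3, "low": 12}

def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping the day to the target month's length."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)

def next_review(last_review: date, risk_tier: str) -> date:
    """Date of the next scheduled framework review for a system in the given tier."""
    return add_months(last_review, REVIEW_INTERVAL_MONTHS[risk_tier])
```

For example, a high-risk system last reviewed on 30 November 2024 would be due on 28 February 2025, because February has no 30th day.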

Effectiveness evaluations measure whether implemented controls actually reduce AI risks as intended. Teams track metrics such as incident frequency, stakeholder satisfaction scores, and compliance rates with established policies.

Gap analysis compares current practices against the outcomes described in the NIST AI RMF and against industry best practices. Because the framework is voluntary, these outcomes serve as targets rather than mandatory requirements. Teams identify areas where governance processes fall short of those targets or where new risks have emerged that existing controls don’t address.
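The AI RMF Core organizes outcomes into categories and subcategories under the four functions, which makes gap analysis a set comparison. In this sketch, the outcome IDs and descriptions are placeholders invented for illustration, not text quoted from the framework; an organization would load its own target profile instead.

```python
# Illustrative target outcomes grouped by the four AI RMF functions.
# IDs and wording are hypothetical placeholders, not quoted from the framework.
TARGET_OUTCOMES = {
    "GOVERN": {"G-1": "AI risk policies documented", "G-2": "Roles and responsibilities assigned"},
    "MAP": {"M-1": "AI system inventory maintained", "M-2": "Intended use and context documented"},
    "MEASURE": {"ME-1": "Bias metrics defined and tracked"},
    "MANAGE": {"MA-1": "Incident response plan tested"},
}

def gap_analysis(implemented, targets=TARGET_OUTCOMES):
    """Report coverage and missing outcome IDs for each function."""
    report = {}
    for function, outcomes in targets.items():
        missing = sorted(oid for oid in outcomes if oid not in implemented)
        coverage = 1 - len(missing) / len(outcomes)
        report[function] = {"coverage": coverage, "gaps": missing}
    return report

report = gap_analysis({"G-1", "G-2", "M-1", "MA-1"})
```

In this hypothetical example, the report shows full coverage for GOVERN and MANAGE, half coverage for MAP, and no coverage for MEASURE, which tells the team where to focus next.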

Stakeholder feedback collection involves gathering insights from multiple groups affected by AI systems. Technical teams provide perspectives on operational challenges and control effectiveness. Business users share experiences with system performance and usability.

Performance review documentation captures findings, recommendations, and action plans in standardized formats. Teams maintain records of what worked well, what failed, and what changes are planned.

Update Policies Based on Emerging Risks and Technologies

AI landscape monitoring tracks developments in generative AI capabilities, deployment methods, and application areas that might introduce new risks or change existing risk profiles. Teams subscribe to research publications, attend industry conferences, and participate in professional networks.

Regulatory update tracking ensures policies remain compliant with evolving legal requirements. Organizations monitor proposed legislation, regulatory guidance updates, and enforcement actions that affect AI governance obligations.

New risk scenario identification involves analyzing how emerging technologies might create previously unknown risks. Generative AI capabilities, for example, introduced deepfake risks and intellectual property concerns that earlier AI systems didn’t present.

Technology development integration requires updating governance frameworks when organizations adopt new AI tools or platforms. Cloud-based AI services, open-source models, and third-party AI APIs each introduce different risk considerations.

Policy revision processes establish clear procedures for updating governance documents when changes are needed. These processes define who has authority to approve changes, how updates are communicated to affected teams, and how implementation is tracked.

Enhance Team Capabilities Through Training and Development

Ongoing education programs ensure team members maintain current knowledge about AI risks, governance practices, and technical developments. Organizations provide regular training sessions covering topics such as bias detection, privacy protection techniques, and security best practices.

Certification programs offer structured learning paths that validate team members’ competencies in AI governance. Industry certifications from organizations like ISACA or vendor-specific credentials demonstrate knowledge levels and provide career development opportunities.

Skills development initiatives address specific capability gaps identified through performance reviews or changing organizational needs. Teams might receive training in new risk assessment methodologies, emerging regulatory requirements, or advanced technical controls.

Awareness training for non-technical staff ensures broader organizational understanding of AI governance principles. Business users learn to recognize potential bias or privacy issues, while managers understand their oversight responsibilities.

Cross-functional collaboration sessions bring together technical teams, business units, legal staff, and other stakeholders to share knowledge and coordinate governance activities. These sessions help break down silos and ensure consistent approaches.

Adapt Framework to Organizational Growth and Change

Scaling considerations address how governance frameworks evolve as organizations grow in size, complexity, or AI adoption scope. Small businesses with informal processes often require more structured approaches as they expand.

New AI application assessment ensures governance frameworks can handle different types of AI systems as organizations expand their use cases. Customer service chatbots require different controls than predictive analytics systems.

Organizational change management integrates AI governance updates with broader business transformation initiatives. Mergers, acquisitions, restructuring, and strategic pivots all affect governance requirements and may necessitate framework modifications.

Resource allocation adjustments account for changing staffing levels, budget constraints, and competing priorities that affect governance capability. Organizations regularly evaluate whether they have sufficient resources to maintain effective oversight.

Governance structure evolution may require changes to committee composition, reporting relationships, or decision-making authorities as organizations mature. Startups might begin with informal oversight but eventually need formal governance boards.

Implementation Timeline and Resource Considerations

Organizations embarking on NIST AI Risk Management Framework implementation face complex timing and resource decisions that directly affect whether implementation succeeds. The framework’s voluntary nature allows organizations to adapt implementation scope and pace to their specific circumstances.

Typical Implementation Phases and Duration

NIST AI RMF implementation follows a structured approach with overlapping activities and iterative refinements. Organizations typically complete initial implementation within 12 to 18 months, though mature governance capabilities develop over two to three years.

Source: National Institute of Standards and Technology

Phase 1 focuses on governance infrastructure development and typically takes two to four months. This phase includes AI governance committee formation, policy framework development, and stakeholder identification.

Phase 2 involves system assessment and mapping activities that span three to six months. Teams create comprehensive AI system inventories, conduct risk identification, and document system contexts.

Phase 3 covers measurement methodology development over two to four months. Organizations select metrics, establish baselines, and create testing protocols during this phase.

Phase 4 implements monitoring systems and typically requires four to eight months. This phase includes continuous monitoring deployment, alerting systems, and reporting mechanisms.
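Adding up the phase estimates shows why overlap matters. Run strictly back to back, the four phases take 11 to 22 months, and the upper end exceeds the 12-to-18-month figure. The sketch below uses the phase durations stated above; the overlap model (each phase starting a fixed number of months before the previous one ends) is a simplifying assumption for illustration.

```python
# Phase durations in months (low, high), as stated in this section.
PHASES = {
    "Phase 1: governance infrastructure": (2, 4),
    "Phase 2: system assessment and mapping": (3, 6),
    "Phase 3: measurement methodology": (2, 4),
    "Phase 4: monitoring systems": (4, 8),
}

def sequential_range(phases):
    """Total months if every phase waits for the previous one to finish."""
    low = sum(lo for lo, _ in phases.values())
    high = sum(hi for _, hi in phases.values())
    return low, high

def overlapped_total(durations, overlap):
    """Months elapsed if each phase starts `overlap` months before the previous
    phase ends (but never before the previous phase starts). Hypothetical model."""
    prev_start, prev_end, finish = 0, 0, 0
    for i, d in enumerate(durations):
        start = 0 if i == 0 else max(prev_start, prev_end - overlap)
        end = start + d
        finish = max(finish, end)
        prev_start, prev_end = start, end
    return finish

high_durations = [hi for _, hi in PHASES.values()]
```

Under this model, a two-month overlap between consecutive phases brings the high-end estimate down from 22 to 16 months, inside the commonly cited range.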

Organizations often reach basic alignment with the framework within six to nine months but require additional time to develop sophisticated capabilities. The implementation timeline extends beyond initial deployment to include maturation activities.

Resource Requirements and Budget Planning

NIST AI RMF implementation demands diverse resources spanning personnel, technology, training, and external expertise. Budget planning varies significantly based on organizational size, AI system complexity, and existing governance maturity.

Source: LinkedIn

Core implementation teams typically include five to eight full-time equivalent roles across different organizational functions. AI governance leadership requires experienced professionals with risk management backgrounds and a working understanding of AI technology.

Technology investments represent a major budget category. Organizations typically spend between $50,000 and $200,000 on AI risk assessment platforms, continuous monitoring systems, and automated reporting tools.

Training and development costs vary by organizational role and technical skill level. Executive workshops covering AI governance principles typically cost between $10,000 and $25,000 per session.

Many organizations engage external consultants for specialized expertise or accelerated implementation timelines. AI governance consultants provide framework expertise at rates ranging from $200 to $500 per hour.
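The cost ranges above can be combined into a rough non-personnel estimate. The number of workshop sessions and consultant hours passed in are planning assumptions each organization must supply; the estimate deliberately excludes salaries for the core team, which are often the largest expense.

```python
# Cost ranges from this section, in US dollars (low, high).
TECHNOLOGY = (50_000, 200_000)
WORKSHOP_PER_SESSION = (10_000, 25_000)
CONSULTANT_PER_HOUR = (200, 500)

def budget_range(workshop_sessions: int, consultant_hours: int):
    """Rough low/high budget for technology, executive workshops, and consulting.
    Excludes personnel costs for the core implementation team."""
    low = (TECHNOLOGY[0]
           + workshop_sessions * WORKSHOP_PER_SESSION[0]
           + consultant_hours * CONSULTANT_PER_HOUR[0])
    high = (TECHNOLOGY[1]
            + workshop_sessions * WORKSHOP_PER_SESSION[1]
            + consultant_hours * CONSULTANT_PER_HOUR[1])
    return low, high

estimate = budget_range(workshop_sessions=2, consultant_hours=300)
```

With a hypothetical plan of two executive workshops and 300 consultant hours, the estimate spans $130,000 to $400,000, a wide range that reflects how much tool choice and consultant rates drive cost.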

Common Implementation Challenges

NIST AI RMF implementation encounters predictable obstacles that experienced organizations learn to anticipate and address proactively. Understanding common challenges enables better planning and resource allocation.

Implementation teams frequently encounter skepticism from technical teams concerned that governance will slow development, or from business units questioning the value of risk management. This resistance often stems from previous compliance experiences that created bureaucratic overhead.

Existing systems often lack the monitoring capabilities or data access required for comprehensive AI risk assessment. Legacy architectures may not support real-time bias detection or continuous performance monitoring without significant modifications.

Implementation timelines frequently extend due to competing organizational priorities and resource constraints. Technical teams juggle implementation tasks with ongoing development commitments and operational responsibilities.

Organizations struggle to select appropriate metrics for AI risk assessment and establish meaningful baselines for comparison. The multifaceted nature of AI risks makes measurement particularly challenging compared to traditional technology risks.

Frequently Asked Questions About NIST AI RMF Implementation

What organizations can benefit from implementing NIST AI Risk Management Framework?

Any organization developing, deploying, or using AI systems can benefit from NIST AI RMF implementation. The framework applies to companies of all sizes, from small startups using AI tools to large enterprises building complex AI systems.

Government agencies, healthcare organizations, financial services companies, and educational institutions find particular value in the framework due to regulatory requirements and public trust considerations. Organizations in regulated industries often discover that NIST AI RMF helps meet compliance obligations while supporting innovation.

The framework’s voluntary nature allows organizations to adapt implementation scope based on their AI usage, risk tolerance, and available resources. Small businesses might implement basic risk assessment and governance practices, while large corporations develop comprehensive monitoring and control systems.

How does NIST AI RMF differ from other AI governance frameworks?

The NIST AI Risk Management Framework focuses specifically on risk identification, assessment, and management throughout the AI system lifecycle. Unlike prescriptive standards that mandate specific controls, the AI RMF provides flexible guidance that organizations adapt to their contexts.

ISO/IEC 42001 establishes requirements for AI management systems that organizations can use to achieve international certification. The European Union’s AI Act creates legal requirements for AI systems based on risk classifications.

These frameworks operate as complementary approaches rather than competing alternatives. Organizations often implement NIST AI RMF for comprehensive risk management while pursuing ISO certification or complying with regulatory requirements like the EU AI Act.

What are the costs associated with comprehensive NIST AI RMF implementation?

Implementation costs depend on organizational size, system complexity, existing capabilities, and the scope of risk management activities pursued. Personnel costs typically represent the largest expense category, encompassing dedicated governance roles and training activities.

Technology costs may include monitoring tools, security systems, bias detection software, and documentation platforms. Organizations often spend between $50,000 and $200,000 on technology investments, though costs vary significantly based on requirements.

External consulting costs range from $200 to $500 per hour for specialized expertise. Organizations with limited internal AI expertise often benefit from consultant partnerships, though the total engagement costs depend on implementation scope and duration.

Organizations often realize return on investment through reduced incident costs, improved stakeholder confidence, and more efficient AI development processes. The investment in comprehensive risk management can prevent costly incidents while enabling more confident AI adoption.

How long does it typically take to fully implement NIST AI Risk Management Framework?

Full implementation timelines vary significantly based on organizational factors, but most organizations complete basic implementation within 12 to 18 months. Mature governance capabilities typically develop over two to three years of continuous improvement and refinement.

Organizations with existing enterprise risk management frameworks generally experience faster implementation because they can build upon established governance structures. Organizations starting from minimal risk management maturity may require additional time to develop foundational capabilities.

The implementation extends beyond initial deployment to include ongoing monitoring, performance evaluation, and framework updates. Organizations reach implementation milestones at different phases while building toward comprehensive risk management capabilities.

What training is required for successful NIST AI RMF implementation?

Training requirements vary based on organizational roles and existing expertise levels. Executive leadership typically receives governance overview training covering risk management principles and strategic decision-making responsibilities.

Technical teams require detailed training on risk assessment methodologies, monitoring system operation, and incident response procedures. This training often takes 40 to 80 hours depending on system complexity and individual expertise levels.

Business users need awareness training covering AI governance principles, their roles in risk management, and procedures for reporting potential issues. Legal and compliance teams require specialized training on regulatory requirements and documentation standards.

Organizations often provide ongoing education programs to maintain current knowledge about AI risks, governance practices, and technical developments. Regular training ensures team members stay informed about evolving best practices and regulatory changes.

What regulatory requirements mandate AI risk management similar to NIST AI RMF?

While the NIST AI RMF itself is voluntary guidance, several regulatory frameworks require similar risk management approaches. The EU AI Act creates comprehensive legal requirements for AI systems based on risk classifications, with high-risk systems requiring extensive documentation and monitoring.

Financial services organizations in the United States face supervisory expectations for model risk management under guidance such as the Federal Reserve’s SR 11-7. Healthcare organizations that develop or deploy AI-enabled medical devices may encounter FDA requirements for medical device risk management.

Government contractors and organizations working with federal agencies increasingly find that AI governance frameworks become practical requirements for contract eligibility. State-level AI regulations continue to emerge, with several states proposing comprehensive AI oversight requirements.

Organizations implementing NIST AI RMF often find they’re better positioned to comply with emerging regulations because the framework addresses common regulatory themes like transparency, accountability, and bias mitigation.