Responsible AI refers to the development and deployment of artificial intelligence systems that prioritize safety, fairness, transparency, and accountability throughout their lifecycle.

Artificial intelligence has rapidly transformed how businesses operate across every industry. Companies now use AI systems to make hiring decisions, approve loans, diagnose medical conditions, and automate countless other processes. As AI becomes more powerful and widespread, the potential for both positive impact and unintended harm has grown significantly.

Recent high-profile incidents have highlighted the risks of deploying AI without proper oversight. Biased hiring algorithms have discriminated against qualified candidates. Facial recognition systems have misidentified individuals, leading to wrongful arrests. AI chatbots have generated harmful content or leaked sensitive information. These examples demonstrate why businesses can no longer treat AI as simply a technical tool.

The regulatory landscape has evolved rapidly in response to these challenges. The European Union's comprehensive AI Act entered into force in 2024, establishing strict requirements for high-risk AI systems, with obligations phasing in over the following years. The United States has developed voluntary frameworks through the National Institute of Standards and Technology. Other countries are following with their own AI governance rules.

What Is Responsible AI

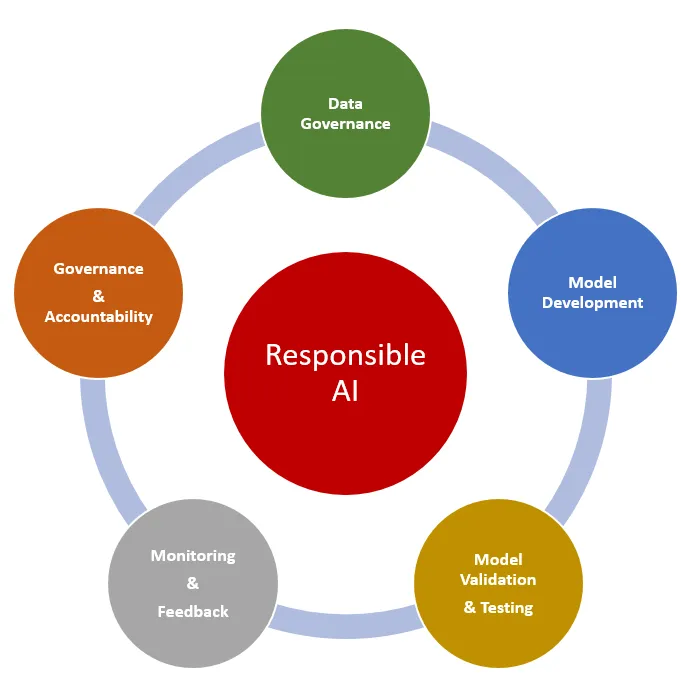

Responsible AI refers to the practice of developing, deploying, and managing artificial intelligence systems with ethical principles, transparency, and safety considerations built into every stage of the process. This approach prioritizes human welfare, fairness, and accountability alongside technical performance and business objectives.

Traditional AI development often focuses primarily on achieving high accuracy, efficiency, and business value. Teams build models to solve specific problems, optimize for performance metrics, and deploy systems based largely on technical capabilities. Responsible AI takes a broader view by incorporating ethical considerations, potential societal impacts, and stakeholder welfare from the initial design phase through ongoing operations.

The core difference lies in the comprehensive evaluation process. Where traditional AI development might ask “Does this system work effectively?”, responsible AI development asks additional questions: “Does this system treat all users fairly?”, “Can people understand how it makes decisions?”, “What potential harms might it cause?”, and “Who remains accountable for its outcomes?”

Source: Data Science Dojo

Core Principles of Responsible AI

Fairness and Bias Mitigation

Fairness in AI means eliminating discrimination in decision-making processes. AI systems can inherit biases from training data or develop discriminatory patterns that unfairly impact certain groups of people. Organizations work to identify these biases through systematic testing and implement corrective measures to ensure equal treatment across different demographic groups.

Testing for bias involves examining AI outputs across various groups to detect patterns of unfair treatment. Teams analyze whether the system produces different results for people of different races, genders, ages, or other protected characteristics when the underlying qualifications or circumstances are similar.

Source: Hacking HR

- Hiring systems — Testing resume screening algorithms to verify they evaluate candidates fairly regardless of gender or ethnicity

- Loan approval — Analyzing credit decision algorithms to confirm they don’t discriminate against applicants based on zip code or demographic characteristics

- Healthcare diagnostics — Evaluating medical AI tools to ensure they provide accurate diagnoses across different racial and ethnic groups
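One common way to quantify this kind of group comparison is to compute each group's selection rate and divide the lowest by the highest. The sketch below is illustrative only: the group labels, the sample outcomes, and the 0.8 cutoff (the "four-fifths" rule of thumb used in US employment contexts) are assumptions, not values taken from this article.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of positive outcomes per group.

    records: iterable of (group_label, was_selected) pairs.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        positives[group] += int(was_selected)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group had any positive outcome, so there is no gap to report
    return min(rates.values()) / highest


# Hypothetical resume-screening outcomes for two groups.
sample = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # assumed threshold (four-fifths rule of thumb)
```

A low ratio is a signal to investigate, not proof of discrimination. The comparison only makes sense when the groups have similar underlying qualifications.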

Transparency and Explainability

Transparency requires making AI decision-making processes understandable to users and stakeholders. When AI systems make important decisions, the people affected by those decisions should be able to understand how the system reached its conclusions. This principle helps users trust AI systems and identify potential problems or errors.

Explainability involves providing clear explanations of how AI models work and why they produce specific outputs. Organizations develop documentation and interfaces that help both technical and non-technical users understand AI system behavior.
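For a simple linear scoring model, an explanation can be as direct as listing how much each input pushed the score up or down. The following is a minimal sketch under that assumption; the feature names, weights, and applicant values are hypothetical, and more complex models generally need dedicated attribution methods.

```python
def explain_linear_score(weights, bias, features):
    """Return the score and per-feature contributions, largest effect first."""
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical weights and applicant values, for illustration only.
weights = {"income_thousands": 0.02, "debt_ratio": -1.5, "years_employed": 0.1}
applicant = {"income_thousands": 50, "debt_ratio": 0.4, "years_employed": 3}

score, ranked = explain_linear_score(weights, bias=-0.5, features=applicant)
for name, contribution in ranked:
    direction = "raised" if contribution > 0 else "lowered"
    print(f"{name} {direction} the score by {abs(contribution):.2f}")
```

The ranked list can be turned into plain-language text for non-technical users, while the raw contributions remain available for technical review.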

Accountability and Governance

Accountability establishes clear responsibility for AI decisions and outcomes. Organizations create governance structures that define who makes decisions about AI system development, deployment, and oversight. These frameworks specify roles and responsibilities for managing AI risks and addressing problems when they occur.

Governance structures include committees, policies, and procedures that guide AI implementation across organizations. These systems ensure consistent application of responsible AI principles and provide mechanisms for addressing ethical concerns or system failures.

Why Responsible AI Matters for Business

Risk Mitigation and Compliance Benefits

Responsible AI practices protect organizations from three major categories of risk: legal violations, financial losses, and reputation damage. Legal risks emerge when AI systems violate regulations like the EU AI Act, which allows fines of up to 35 million euros or seven percent of worldwide annual turnover, whichever is higher, for engaging in prohibited AI practices.
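To make the scale of that penalty concrete, the upper bound can be worked out for a given turnover. This sketch assumes the "whichever is higher" reading that applies to companies under the EU AI Act and ignores special cases such as the treatment of smaller companies. Actual fines are set case by case and may be far below the ceiling.

```python
FIXED_CAP_EUR = 35_000_000      # fixed ceiling for prohibited practices
TURNOVER_SHARE_PERCENT = 7      # share of worldwide annual turnover


def max_fine_prohibited_practice(annual_turnover_eur):
    """Upper bound on the fine: the higher of the fixed cap and 7% of turnover."""
    turnover_based = annual_turnover_eur * TURNOVER_SHARE_PERCENT / 100
    return max(FIXED_CAP_EUR, turnover_based)


# Hypothetical turnover figures.
large_company_cap = max_fine_prohibited_practice(1_000_000_000)  # 7% exceeds the fixed cap
smaller_company_cap = max_fine_prohibited_practice(200_000_000)  # fixed cap is the higher value
```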

Financial risks include direct costs from regulatory penalties, lawsuit settlements, and the expenses of fixing problematic AI systems after deployment. Reputational risks occur when AI systems make biased decisions, violate privacy, or cause harm to customers or communities.

Competitive Advantage Through Trust

Ethical AI implementation creates measurable business advantages through enhanced stakeholder confidence and market differentiation. Customers demonstrate greater willingness to engage with AI-powered services when organizations can clearly explain how these systems make decisions and provide assurance of fair treatment.

Organizations with transparent AI practices report increased customer loyalty, improved employee engagement, and stronger partnership relationships. Market differentiation becomes particularly valuable in sectors where trust and reliability influence purchasing decisions and regulatory oversight affects business operations.

Regulatory Compliance and Legal Requirements

EU AI Act Requirements

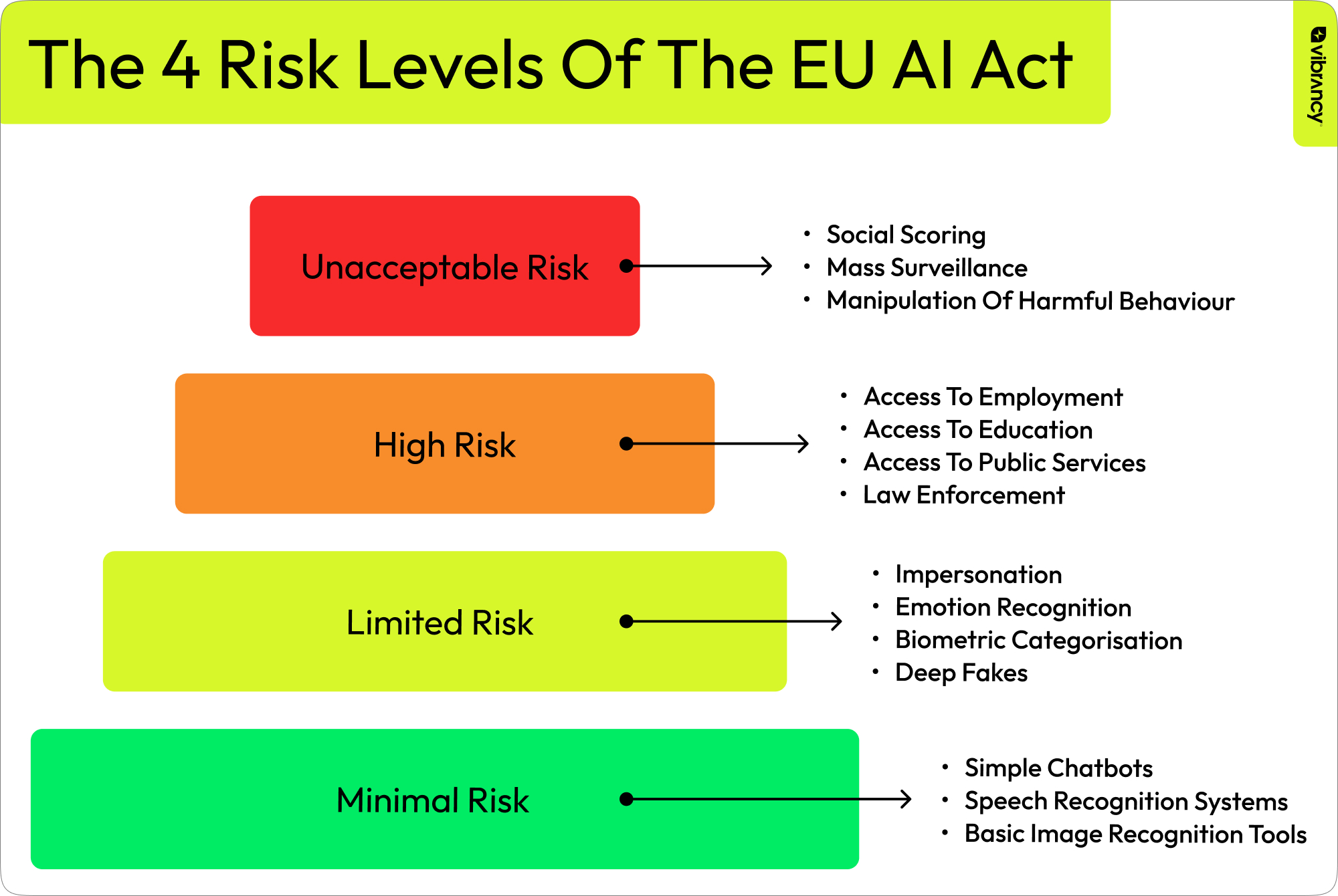

The European Union AI Act represents the world’s first comprehensive artificial intelligence regulation, creating a risk-based framework that categorizes AI systems based on their potential impact. The regulation divides AI applications into four distinct risk levels: unacceptable risk (prohibited), high risk (strict requirements), limited risk (transparency obligations), and minimal risk (largely unregulated).

Source: Vibrancy.ai

High-risk AI systems include applications in critical infrastructure, education, employment, essential services, law enforcement, migration management, and democratic processes. These systems face extensive compliance obligations including conformity assessments, risk management systems, data governance requirements, and human oversight protocols.
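The four tiers and the high-risk areas listed above can be captured in a small lookup for first-pass triage of an AI inventory. This is only a sketch: the function name, its parameters, and the idea of matching on an area label are assumptions made for illustration, and real classification under the Act requires legal analysis of the specific use case.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "strict requirements"
    LIMITED = "transparency obligations"
    MINIMAL = "largely unregulated"


# High-risk areas as listed in the text; matching on a label is a simplification.
HIGH_RISK_AREAS = {
    "critical infrastructure", "education", "employment", "essential services",
    "law enforcement", "migration management", "democratic processes",
}


def provisional_tier(area, is_prohibited_practice=False, interacts_with_people=False):
    """Rough first-pass tier for triage, not a legal determination."""
    if is_prohibited_practice:
        return RiskTier.UNACCEPTABLE
    if area.strip().lower() in HIGH_RISK_AREAS:
        return RiskTier.HIGH
    if interacts_with_people:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


tier = provisional_tier("Employment")
print(tier.name, "->", tier.value)
```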

- Fundamental Rights Impact Assessments — Required for high-risk AI systems before deployment

- Quality management systems — Mandatory risk assessment procedures and documentation

- Human oversight mechanisms — Required for all high-risk applications with clear intervention protocols

US AI Regulatory Landscape

The United States approaches AI regulation through sector-specific rules and voluntary frameworks rather than comprehensive federal legislation. The National Institute of Standards and Technology developed the AI Risk Management Framework, providing voluntary guidance for managing AI risks throughout system lifecycles.

President Biden’s 2023 Executive Order on AI established requirements for AI safety testing, particularly for foundation models exceeding specific computational thresholds. It directed federal agencies to develop AI safety standards, conduct impact assessments, and establish oversight mechanisms for government AI procurement and deployment. That order was rescinded in January 2025, so organizations should confirm which federal requirements currently apply.

How to Implement Responsible AI in Your Organization

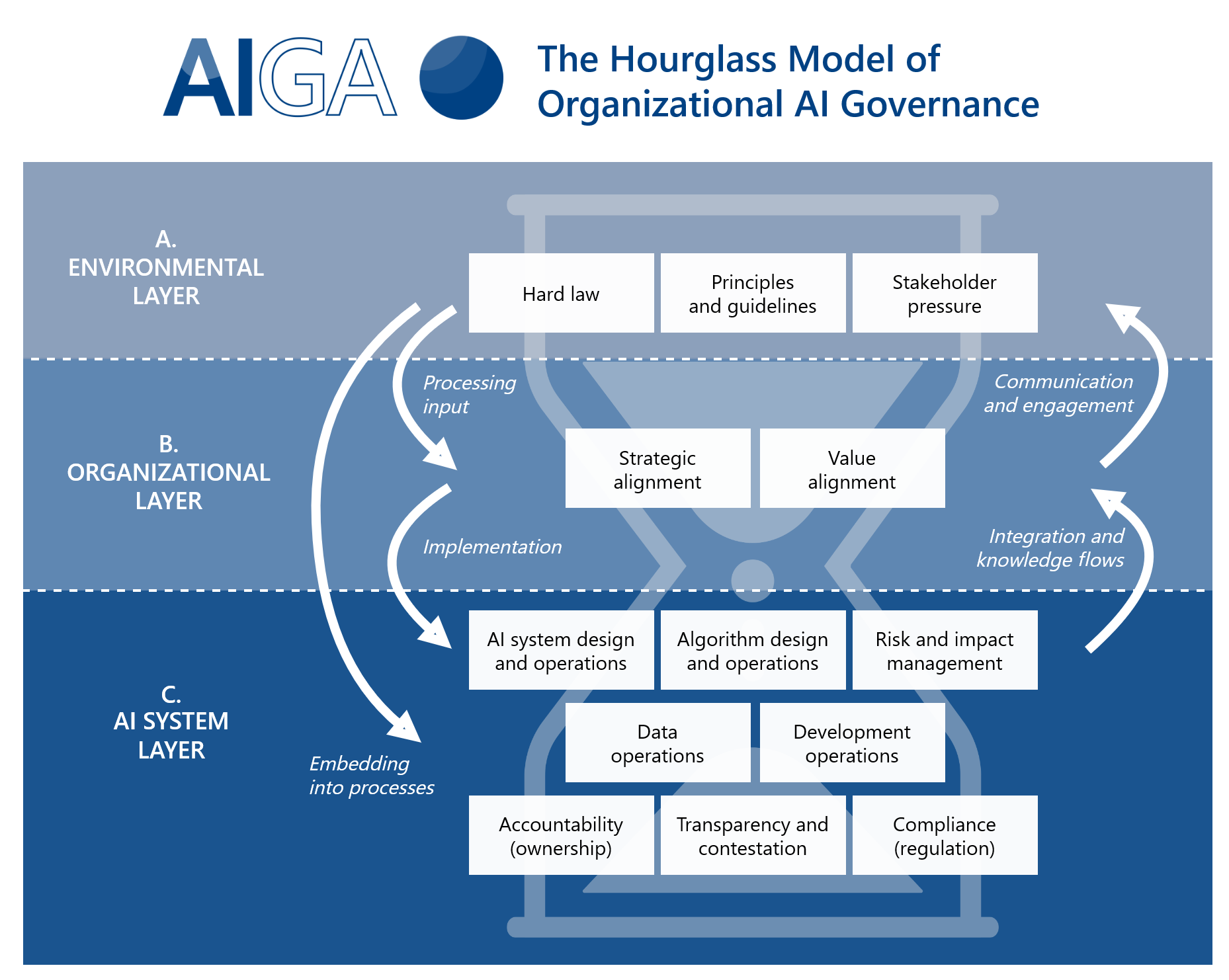

Establish AI Governance Structure

Creating an effective AI governance structure starts with forming cross-functional teams that bring together diverse expertise from across the organization. The core governance committee typically includes representatives from IT, legal, HR, compliance, risk management, and executive leadership. Each member contributes specific knowledge about their domain while working together to address AI-related decisions that span multiple business functions.

Source: AI Governance Framework

The AI governance committee serves as the central decision-making body for AI-related policies, procedures, and strategic direction. This committee reviews proposed AI projects, evaluates ethical implications, and ensures alignment with organizational values and regulatory requirements.

Organizations benefit from appointing dedicated AI ethics officers who focus specifically on ethical considerations throughout AI development and deployment. The AI ethics officer works closely with development teams to identify potential ethical concerns, guides the implementation of fairness and bias mitigation strategies, and serves as a resource for teams navigating complex ethical dilemmas.

Conduct Comprehensive Risk Assessment

Risk assessment begins with evaluating current AI systems to understand their capabilities, limitations, and potential impacts on the organization and its stakeholders. Teams catalog existing AI applications, document their purposes and functions, and identify the data sources and decision-making processes involved in each system.
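A catalog like this can start as a simple structured record per system, with a check for fields nobody has filled in yet. The record fields and sample entries below are hypothetical and chosen to mirror the items named above (purpose, data sources, decision-making process).

```python
from dataclasses import dataclass, field, asdict


@dataclass
class AISystemRecord:
    name: str
    purpose: str
    owner: str
    data_sources: list = field(default_factory=list)
    decision_process: str = ""
    affects_individuals: bool = False


def missing_fields(record):
    """Names of catalog fields that are still empty (booleans are never flagged)."""
    return [key for key, value in asdict(record).items() if value in ("", [], None)]


# Hypothetical inventory entries.
inventory = [
    AISystemRecord(
        name="resume-screener",
        purpose="Rank applicants for recruiter review",
        owner="HR analytics",
        data_sources=["applicant tracking system"],
        decision_process="Model scores resumes; a recruiter makes the final call",
        affects_individuals=True,
    ),
    AISystemRecord(name="invoice-ocr", purpose="Extract invoice fields", owner=""),
]

gaps = {record.name: missing_fields(record) for record in inventory}
```

Entries with gaps are natural candidates for follow-up before any deeper technical or ethical assessment.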

Technical risk assessment examines AI system performance, reliability, and security characteristics. Teams test systems under various conditions to identify potential failure modes, evaluate accuracy across different scenarios, and assess robustness against unexpected inputs or adversarial attacks.
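One simple robustness probe is to add small random noise to inputs and count how often the prediction changes. The sketch below uses a toy stand-in model; the model, the 5% noise level, and the sample inputs are all assumptions for illustration, and it does not cover deliberate adversarial attacks.

```python
import random


def toy_model(features):
    """Stand-in classifier: approves when a weighted sum clears a cutoff."""
    income, debt_ratio = features
    return income * 0.01 - debt_ratio * 2.0 > 0.0


def flip_rate(model, inputs, noise=0.05, trials=20, seed=0):
    """Fraction of noisy copies whose prediction differs from the clean input's."""
    rng = random.Random(seed)
    flips = 0
    total = 0
    for features in inputs:
        baseline = model(features)
        for _ in range(trials):
            # Scale every feature by a random factor within +/- noise.
            noisy = tuple(x * (1 + rng.uniform(-noise, noise)) for x in features)
            flips += int(model(noisy) != baseline)
            total += 1
    return flips / total if total else 0.0


# Hypothetical inputs: one far from the decision boundary, one close to it.
stable_rate = flip_rate(toy_model, [(100, 0.1)])
borderline_rate = flip_rate(toy_model, [(41, 0.2)], trials=200)
```

Inputs near the decision boundary flip more often, which helps identify where a system's outputs are least reliable.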

Ethical risk assessment evaluates the potential for AI systems to cause harm through biased decision-making, privacy violations, or other ethical concerns. Teams analyze system outputs across different demographic groups to identify patterns of differential treatment and examine decision-making processes for transparency and fairness.
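When ground-truth outcomes are available, one way to look for differential treatment is to compare error rates between groups. This is a minimal sketch; the group labels and sample records are hypothetical, and which error type matters most depends on the application.

```python
def error_rates_by_group(records):
    """records: iterable of (group, actual, predicted) with boolean labels."""
    counts = {}
    for group, actual, predicted in records:
        c = counts.setdefault(group, {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
        if actual:
            c["pos"] += 1
            c["fn"] += int(not predicted)  # missed a true positive
        else:
            c["neg"] += 1
            c["fp"] += int(predicted)      # flagged a true negative
    return {
        group: {
            "false_positive_rate": c["fp"] / c["neg"] if c["neg"] else None,
            "false_negative_rate": c["fn"] / c["pos"] if c["pos"] else None,
        }
        for group, c in counts.items()
    }


# Hypothetical labelled outcomes: (group, actual, predicted).
records = (
    [("group_a", True, True)] * 3 + [("group_a", True, False)] * 1
    + [("group_a", False, False)] * 3 + [("group_a", False, True)] * 1
    + [("group_b", True, True)] * 2 + [("group_b", True, False)] * 2
    + [("group_b", False, False)] * 4
)
rates = error_rates_by_group(records)
fnr_gap = abs(
    rates["group_a"]["false_negative_rate"] - rates["group_b"]["false_negative_rate"]
)
```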

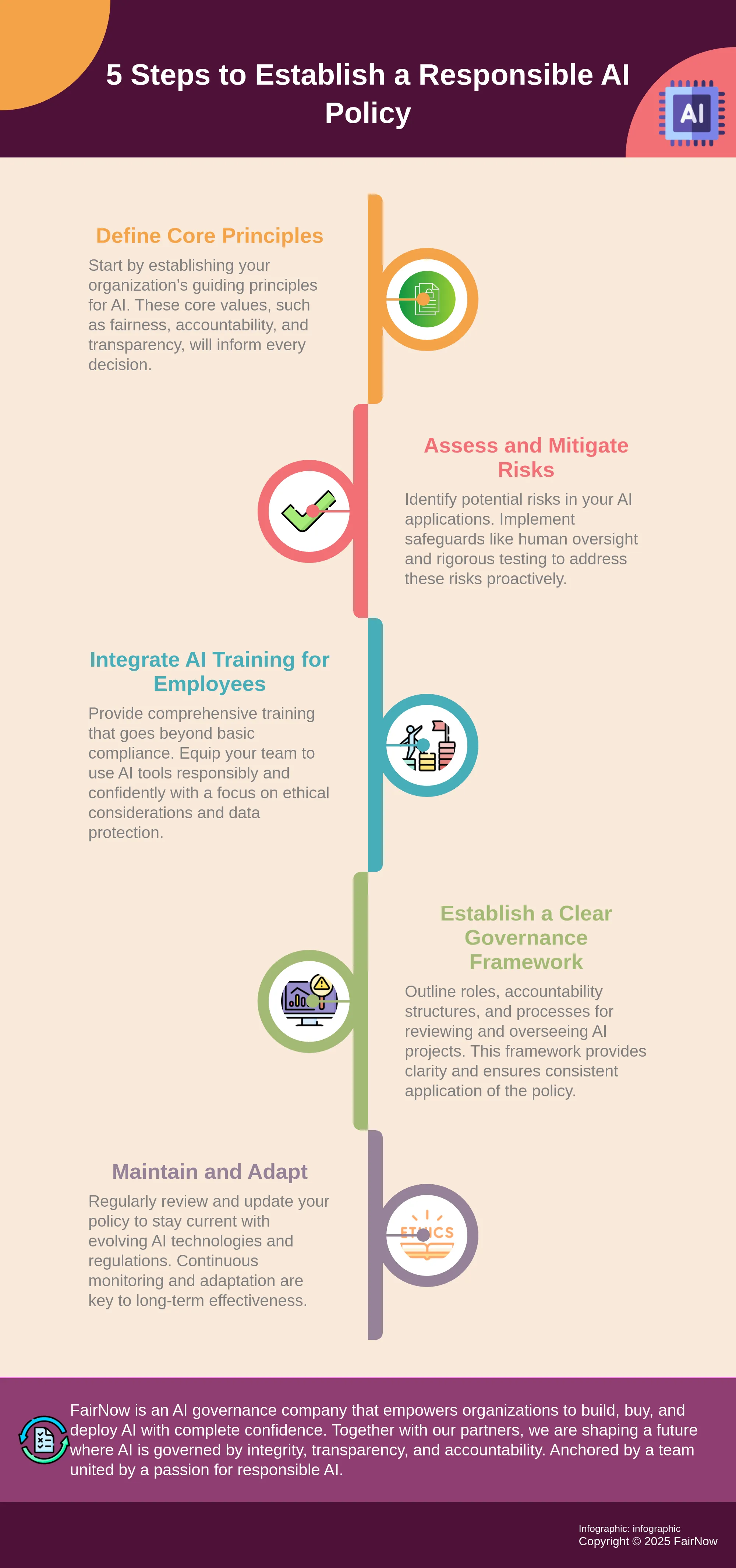

Develop Implementation Roadmap

The implementation roadmap begins with prioritizing AI applications based on risk levels, business impact, and regulatory requirements. High-risk applications that significantly affect individuals or involve sensitive data receive priority attention, while lower-risk applications follow later in the implementation timeline.

Source: FairNow AI

Phased implementation approaches allow organizations to build capabilities gradually while learning from early experiences and adapting strategies based on practical insights. Phase one typically focuses on establishing governance structures, developing policies, and implementing responsible AI practices for the highest-risk applications.
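The prioritization and phasing described above can be sketched as a weighted score followed by a cut into phases. The 1-to-5 scales, the weights, the application names, and the phase size are all assumptions for illustration. Each organization would choose its own criteria.

```python
from dataclasses import dataclass


@dataclass
class Application:
    name: str
    risk: int                 # 1 (low) to 5 (high), assumed scale
    business_impact: int      # same assumed scale
    regulatory_exposure: int  # same assumed scale


def priority_score(app, weights=(5, 2, 3)):
    """Weighted sum of risk, business impact, and regulatory exposure."""
    w_risk, w_impact, w_regulatory = weights
    return (
        w_risk * app.risk
        + w_impact * app.business_impact
        + w_regulatory * app.regulatory_exposure
    )


def assign_phases(apps, phase_one_size=2):
    """Highest-scoring applications go to phase 1, the rest to phase 2."""
    ranked = sorted(apps, key=priority_score, reverse=True)
    return {app.name: (1 if index < phase_one_size else 2) for index, app in enumerate(ranked)}


# Hypothetical portfolio.
portfolio = [
    Application("resume-screener", risk=5, business_impact=3, regulatory_exposure=5),
    Application("credit-scoring", risk=5, business_impact=5, regulatory_exposure=5),
    Application("chat-assistant", risk=3, business_impact=4, regulatory_exposure=2),
    Application("invoice-ocr", risk=1, business_impact=2, regulatory_exposure=1),
]
phases = assign_phases(portfolio)
```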

Common Responsible AI Implementation Challenges

Technical Integration Obstacles

Integrating ethical considerations into existing AI systems presents complex technical challenges. Legacy systems often lack the infrastructure to support responsible AI requirements like bias monitoring, explainability features, and comprehensive audit trails. Many organizations discover their current AI architectures can’t accommodate the additional computational overhead required for fairness assessments and transparency mechanisms.

Performance impacts create another layer of complexity. Adding responsible AI features typically increases processing time and computational resources. Systems designed purely for accuracy may experience reduced speed when fairness constraints and explainability requirements are introduced.

Organizational Change Management

Resistance to new processes emerges as teams struggle to adapt established workflows. Employees familiar with traditional AI development cycles often view responsible AI requirements as bureaucratic overhead that slows innovation. Cultural shifts prove particularly challenging in organizations where speed-to-market has historically taken precedence over ethical considerations.

Training needs extend beyond technical teams to include legal, compliance, and business stakeholders. Each group requires different levels of AI literacy and understanding of responsible AI principles. Workflow adjustments affect project timelines, requiring revised planning approaches and resource allocation strategies.

Resource and Expertise Gaps

A shortage of skilled professionals is one of the most significant barriers to responsible AI implementation. Market demand for professionals with combined expertise in AI technology, ethics, and governance far exceeds current supply. Organizations compete intensely for talent with experience in bias detection, algorithmic auditing, and AI governance frameworks.

Budget constraints limit organizations’ ability to hire specialized talent or invest in comprehensive responsible AI infrastructure. Ongoing maintenance and monitoring requirements create recurring costs that many organizations underestimate during initial planning phases.

FAQs About Responsible AI Implementation

How much does implementing responsible AI governance cost for a mid-sized company?

Implementation costs depend on organization size and AI complexity. The figures below describe overall AI project budgets rather than governance-specific spending, so they indicate scale only. Small-scale AI automation projects typically cost between $10,000 and $50,000, while mid-sized AI projects range from $100,000 to $500,000. Enterprise-grade AI solutions require investments of $1 million to $10 million or more.

Source: Scalefocus

Initial costs include specialized expertise, technical infrastructure, governance systems, and training programs. Organizations also face ongoing operational expenses for system monitoring, compliance reporting, regular auditing, model retraining, and governance activities throughout the AI system lifecycle.

What technical skills do development teams need for responsible AI?

Development teams require understanding of bias detection and mitigation tools, explainability mechanisms, privacy-preserving technologies, and security safeguards. Legal teams contribute expertise in regulatory compliance, data protection laws, and risk assessment frameworks.

Ethics specialists help organizations navigate complex moral considerations and stakeholder impacts. Business leaders require knowledge of AI governance principles and risk management strategies. The limited availability of professionals with combined expertise in AI technology, ethics, governance, and domain-specific knowledge creates challenges for many organizations.

Which AI applications require the most rigorous responsible AI oversight?

High-risk AI applications that significantly affect individuals require the most extensive oversight. These include hiring and recruitment systems, credit approval and lending decisions, medical diagnosis and treatment recommendations, criminal justice risk assessments, and autonomous vehicle systems.

The EU AI Act specifically categorizes AI systems used in critical infrastructure, education, employment, essential services, law enforcement, migration management, and democratic processes as high-risk applications requiring strict compliance measures.

How often do organizations need to audit their AI systems for bias and fairness?

Organizations typically conduct bias audits quarterly for high-risk AI systems and annually for lower-risk applications. The frequency depends on system risk levels, regulatory requirements, and how frequently the AI system makes decisions that affect people.

Systems that process large volumes of decisions or operate in sensitive domains like hiring, lending, or healthcare may require monthly monitoring. Organizations also conduct additional audits when they update AI models, change data sources, or modify decision-making processes.
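The cadence described above can be expressed as a small scheduling rule. In this sketch the day counts are assumed approximations of "quarterly", "annually", and "monthly", and the function and parameter names are hypothetical.

```python
from datetime import date, timedelta

# Assumed intervals in days, approximating the cadences described in the text.
AUDIT_INTERVAL_DAYS = {"high": 91, "lower": 365}
SENSITIVE_INTERVAL_DAYS = 30


def audit_due(last_audit, today, risk_level, sensitive_domain=False, changed_since_audit=False):
    """Return True when a bias audit should be scheduled."""
    if changed_since_audit:
        # Model updates, new data sources, or changed decision logic trigger an audit.
        return True
    interval = AUDIT_INTERVAL_DAYS[risk_level]
    if sensitive_domain:
        interval = min(interval, SENSITIVE_INTERVAL_DAYS)
    return today - last_audit >= timedelta(days=interval)


# Hypothetical dates.
last = date(2025, 1, 1)
print(audit_due(last, date(2025, 3, 1), "high"))                         # 59 days since last audit
print(audit_due(last, date(2025, 2, 5), "high", sensitive_domain=True))  # 35 days since last audit
```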

What documentation do regulators expect for responsible AI compliance?

Regulators expect comprehensive documentation including AI system development records, risk assessments, testing results, and performance metrics. Organizations maintain detailed records of data sources, model training procedures, bias testing outcomes, and decision-making processes.

The EU AI Act requires specific documentation for high-risk AI systems including conformity assessments, quality management system records, and human oversight procedures. Organizations also document incident response procedures, stakeholder feedback mechanisms, and continuous improvement activities.