AI hallucinations occur when artificial intelligence systems confidently generate false or fabricated information that appears credible but has no basis in reality.

Imagine asking an AI assistant about a specific court case, and it provides detailed information about a trial that never existed, complete with fake judge names and fictitious legal precedents. The AI presents the fabricated information with complete confidence, making it difficult to distinguish from real facts. Such incidents have already occurred in high-profile legal cases, leading to sanctions against attorneys who submitted AI-generated fake citations to federal courts.

Source: Signity Solutions

AI hallucinations represent one of the most significant challenges facing the widespread adoption of artificial intelligence in business and professional settings. These systems can generate false medical information, fabricate financial data, or create entirely fictional customer policies with the same authoritative tone they use for accurate responses. The challenge extends beyond simple errors because hallucinated content often maintains internal logic and appears professionally written. For organizations implementing AI chatbot development or generative AI solutions, understanding and preventing hallucinations becomes critical for maintaining trust and avoiding legal liability.

What Are AI Hallucinations

AI hallucinations occur when artificial intelligence systems produce incorrect, nonsensical, or completely fabricated information while presenting it as factual and accurate. These incidents represent one of the most challenging aspects of modern AI technology because the systems deliver false information with the same confidence and authority they use for correct information.

The phenomenon differs from simple errors because AI systems don’t just make mistakes — they create convincing falsehoods that can deceive users who lack the expertise to verify the information independently. When an AI system hallucinates, it generates responses that sound authoritative and well-reasoned, making users more likely to trust and act on the false information.

Definition and Core Characteristics

AI hallucinations are convincing but false outputs generated by artificial intelligence systems. The U.S. National Institute of Standards and Technology, which uses the term “confabulation” in its Generative AI Profile (NIST AI 600-1), describes the problem as “the production of confidently stated but erroneous or false content,” emphasizing how AI systems present fabricated information with apparent certainty.

The key characteristics include confident presentation, where the system delivers false information without expressing uncertainty or doubt. The content typically sounds plausible and maintains internal consistency, making it difficult for users to identify problems immediately. AI systems often fabricate specific details, such as fake citations, non-existent events, or invented statistics, while maintaining the linguistic patterns and formatting that users expect from reliable sources.

How AI Hallucinations Differ From Traditional AI Errors

AI hallucinations differ significantly from typical programming bugs or calculation errors that produce obviously incorrect results. Traditional software errors often generate clear error messages, produce nonsensical outputs, or fail to function entirely.

Hallucinations create outputs that appear credible and contextually appropriate despite being factually wrong. Unlike calculation errors that might produce impossible numbers or programming bugs that crash systems, hallucinated content maintains linguistic coherence and follows expected patterns of communication. A traditional error might display “Error 404” or produce garbled text, while a hallucination creates a well-written paragraph containing false information presented as fact.

Why AI Hallucinations Occur

AI hallucinations happen for four main reasons: insufficient training data, flawed reasoning processes, neural network complexity, and the non-deterministic nature of how these systems work. Understanding these causes helps explain why even advanced AI systems generate confident but incorrect information.

Statistical Pattern Recognition vs Truth Seeking

Large language models operate by identifying patterns in text rather than understanding truth or facts. When you ask ChatGPT or another AI system a question, it doesn’t search for the correct answer the way you might look something up in an encyclopedia. Instead, it generates a response one token (a word or word fragment) at a time, each time choosing a token that is statistically likely to come next given the text so far and the patterns in its training data.

Source: ResearchGate

This approach means AI systems excel at producing text that sounds natural and follows expected patterns, but they lack a built-in mechanism to verify whether their output reflects reality. An AI model might confidently state that penguins live in the Arctic because it learned that penguins and cold climates frequently appear together in text, even though penguins live almost entirely in the Southern Hemisphere, from Antarctica to the southern coasts of other continents.
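The mechanism can be illustrated with a deliberately tiny Python sketch. It is a bigram model, vastly simpler than a real large language model, trained on a hypothetical three-sentence corpus: it records only which word most often follows each word, then extends a prompt greedily with the most frequent follower.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus: two sentences about Arctic animals, one about penguins.
CORPUS = [
    "polar bears live in the arctic",
    "walruses live in the arctic",
    "penguins live in antarctica",
]

def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(counts, prompt, max_words=5):
    """Greedy decoding: repeatedly append the most frequent follower of the last word."""
    words = prompt.split()
    for _ in range(max_words):
        followers = counts.get(words[-1])  # .get() does not create empty entries
        if not followers:
            break  # no known continuation
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

model = train_bigrams(CORPUS)
print(generate(model, "penguins live in"))  # → penguins live in the arctic
```

Because “in the arctic” appears twice in the toy corpus and “in antarctica” only once, the model completes “penguins live in” with “the arctic”. At no point does the procedure check whether the sentence it produces is true.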

Training Data Quality Issues

The quality of training data directly affects an AI system’s tendency to hallucinate. When training datasets contain biased, incomplete, or outdated information, AI models learn incorrect patterns that lead to fabricated responses. Modern language models train on billions of text samples scraped from the internet, making comprehensive quality control extremely challenging.

- Biased training data — Creates systematic errors where AI systems reproduce and amplify existing prejudices or misconceptions found in their source material

- Incomplete datasets — Create knowledge gaps that models attempt to fill through statistical extrapolation

- Outdated training data — Leads to hallucinations when models generate information that was accurate when they were trained but is no longer correct

Types of AI Hallucinations

AI hallucinations fall into several distinct categories based on how and why they occur. Understanding these different types helps teams identify patterns and develop appropriate responses when AI systems generate incorrect information.

Text generation hallucinations include creating fake legal citations with non-existent case names and court decisions, generating biographical information about people who don’t exist, or fabricating historical events with specific dates and details.

Image generation hallucinations involve adding extra fingers or limbs to human figures in generated images, creating text within images that appears as gibberish, or producing objects that violate physical laws.

Intrinsic vs Extrinsic Hallucinations

Source: Lakera AI

Intrinsic hallucinations occur when AI output directly contradicts information that the system has access to, such as source documents or previous conversation context. The correct information is available to the model, but the output misrepresents it. An AI summarizing a research paper but stating the opposite of what the paper actually concludes represents an intrinsic hallucination.

Extrinsic hallucinations happen when AI systems generate information that can’t be verified against available sources. The AI fills knowledge gaps by creating plausible-sounding content that may or may not be accurate. Providing specific stock prices for companies when no current market data is available exemplifies an extrinsic hallucination.
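The distinction can be expressed as a simple labeling rule. The Python sketch below assumes that claims have already been extracted from both the AI output and the source into hypothetical (subject, attribute, value) triples, which in practice is the difficult part; the `Claim` type and the example facts are illustrative only.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Claim:
    """Hypothetical structured form of one statement made by the AI."""
    subject: str
    attribute: str
    value: str

def classify_claim(claim, source_facts):
    """Label a claim against facts taken from the source.

    source_facts maps (subject, attribute) -> value.
    """
    key = (claim.subject, claim.attribute)
    if key not in source_facts:
        return "extrinsic"   # the source says nothing, so the claim cannot be verified
    if source_facts[key] != claim.value:
        return "intrinsic"   # the source says something different
    return "supported"

# Hypothetical fact extracted from a research paper's conclusion.
paper = {("drug_x", "effect_on_mortality"): "no significant effect"}

print(classify_claim(Claim("drug_x", "effect_on_mortality", "reduced mortality"), paper))  # → intrinsic
print(classify_claim(Claim("drug_x", "approval_year", "2021"), paper))                     # → extrinsic
```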

Input-Conflicting vs Fact-Conflicting Errors

Input-conflicting errors occur when AI systems misunderstand or misinterpret user prompts, leading to responses that don’t address what was actually requested. These errors stem from the AI’s failure to correctly parse the user’s intent. Treating a company name in the prompt as a person’s name is one example.

Fact-conflicting errors involve generating information that contradicts established factual knowledge, regardless of whether the AI understood the prompt correctly. These represent failures in the AI’s knowledge base or reasoning capabilities, such as stating that water boils at 150 degrees Fahrenheit at sea level.

Business Risks of AI Hallucinations

Source: Pageon.ai

AI hallucinations create serious business risks that extend far beyond technical glitches. When AI systems confidently present false information, organizations face immediate and long-term consequences that can damage operations, finances, and relationships with stakeholders.

Legal Liability and Compliance Failures

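Organizations face substantial legal exposure when AI systems generate false information in regulated industries. In Moffatt v. Air Canada (2024), British Columbia’s Civil Resolution Tribunal held the airline responsible for incorrect bereavement fare information its website chatbot gave a customer, rejecting Air Canada’s argument that the chatbot was responsible for its own statements. The tribunal ordered the airline to compensate the customer even though the chatbot’s answer contradicted the airline’s actual policy, and the decision is widely cited as a warning that companies can be held to what their chatbots tell customers.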

Legal professionals experienced direct consequences when attorneys submitted court briefs containing AI-generated fake case citations. In Mata v. Avianca (2023), a federal judge in New York sanctioned two attorneys and their law firm after discovering that ChatGPT had fabricated six non-existent court cases cited in their filing, complete with invented quotations and internal citations.

Professional liability extends to any regulated profession where AI-assisted work affects client outcomes. Healthcare providers relying on AI diagnostic tools that hallucinate symptoms or treatment recommendations face malpractice exposure. For organizations in healthcare AI or pharmaceutical sectors, these risks become particularly acute. Financial advisors using AI systems that fabricate investment data or market analysis risk fiduciary duty violations.

Reputation Damage and Loss of Customer Trust

AI hallucinations undermine organizational credibility in ways that persist long after corrections are issued. Unlike human errors that stakeholders often view as understandable mistakes, AI hallucinations raise questions about competence in managing advanced technology and commitment to accuracy.

When AI tools authoritatively state incorrect facts about company policies, product features, or service offerings, customers may lose faith in the organization’s reliability. Customer service failures create lasting relationship damage when AI chatbots provide inconsistent information about policies or make promises the organization can’t honor.

Healthcare organizations experience particular vulnerability because their business depends on trust and accuracy. When AI systems hallucinate medical information, patients and healthcare providers may permanently question the institution’s reliability.

Financial Consequences and Operational Costs

Direct financial losses occur when business decisions rely on hallucinated information. Investment firms using AI-generated market analysis containing fabricated data points face trading losses. Companies making strategic decisions based on AI reports with false research citations or incorrect industry statistics may allocate resources poorly.

Remediation costs accumulate rapidly after AI hallucination incidents. Organizations often provide refunds, service credits, or compensation to customers who relied on false information when making purchasing decisions. Legal costs mount when AI hallucinations trigger lawsuits or regulatory investigations.

Operational expenses increase as organizations implement safeguards against future hallucinations. Human oversight systems, verification procedures, and quality assurance processes require substantial investments in personnel and technology. For small businesses implementing AI solutions, these costs can be particularly challenging to manage.

How to Detect AI Hallucinations

Detecting AI hallucinations requires a combination of automated tools and human review processes that work together to catch false information before it reaches end users. Organizations can implement several practical approaches to identify when AI systems generate fabricated or incorrect content.

Technical Detection Methods

Source: Kanerika

Confidence scoring attaches a number to each response that estimates how certain the model is about it, for example a value derived from the probabilities the model assigned to the tokens it generated. These scores usually range from 0 to 1, with higher numbers indicating greater certainty, which is not the same as accuracy: a model can be highly confident and still wrong. When a score falls below a predetermined threshold, often set around 0.7 or 0.8, the system flags the response for additional review or rejection.
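One common way to produce such a score, assuming the model API exposes per-token log-probabilities (many do, but field names and formats vary), is to take the geometric mean of the token probabilities. The Python sketch below does that and applies a 0.7 threshold; the threshold and the sample values are illustrative assumptions.

```python
import math

def sequence_confidence(token_logprobs):
    """Geometric mean of per-token probabilities, i.e. exp(mean log-probability).

    Returns a value in (0, 1]; an empty response gets 0.0.
    """
    if not token_logprobs:
        return 0.0
    return math.exp(sum(token_logprobs) / len(token_logprobs))

def needs_review(token_logprobs, threshold=0.7):
    """Flag a response whose confidence falls below the (hypothetical) review threshold."""
    return sequence_confidence(token_logprobs) < threshold

# Hypothetical per-token log-probabilities for two short responses.
steady = [math.log(p) for p in (0.95, 0.90, 0.92)]
shaky = [math.log(p) for p in (0.90, 0.20, 0.90)]
print(needs_review(steady), needs_review(shaky))  # → False True
```

The geometric mean lets a single very unlikely token, often the invented name or number, pull the whole score down. A high score still does not guarantee a correct answer.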

Consistency checking compares AI outputs against previous responses and established facts within the same conversation or document. The system examines whether new information contradicts statements made earlier in the interaction. Cross-referencing techniques validate AI-generated information against trusted external databases and knowledge sources.
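A closely related technique, often called self-consistency or sampling-based checking, asks the model the same question several times and measures how much the answers agree. The premise is that fabricated specifics tend to vary between samples, while well-supported facts tend to repeat. The Python sketch below assumes short, directly comparable answers (normalized only by case and whitespace); the 0.8 agreement threshold and the sample answers are hypothetical.

```python
from collections import Counter

def agreement(answers):
    """Return the most common normalized answer and the share of samples that match it."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    normalized = [a.strip().lower() for a in answers]
    top, count = Counter(normalized).most_common(1)[0]
    return top, count / len(normalized)

def is_inconsistent(answers, min_agreement=0.8):
    """Flag a question when the sampled answers disagree too often."""
    return agreement(answers)[1] < min_agreement

# Hypothetical answers from asking the same question five times.
samples = ["1998", "1998 ", "2001", "1998", "1995"]
print(agreement(samples))        # → ('1998', 0.6)
print(is_inconsistent(samples))  # → True
```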

Groundedness scoring measures how closely AI responses align with source materials provided to the system. This technique is particularly effective in retrieval-augmented generation systems, where AI responses draw from specific documents or databases.
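Production groundedness checks usually rely on a natural language inference model or an LLM acting as a judge. As a rough, self-contained stand-in, the Python sketch below scores each sentence of a response by the share of its content words that also appear in the source text; the stopword list and the example policy are hypothetical.

```python
import re

# A minimal, hypothetical stopword list.
STOPWORDS = {"a", "an", "and", "are", "at", "by", "for", "in", "is", "of", "on", "the", "to", "with"}

def content_words(text):
    """Lowercased alphanumeric words, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def sentence_groundedness(response, sources):
    """Score each response sentence by the share of its content words found in the sources."""
    source_words = set().union(*(content_words(s) for s in sources))
    scores = []
    for sentence in re.split(r"(?<=[.!?])\s+", response.strip()):
        words = content_words(sentence)
        score = len(words & source_words) / len(words) if words else 1.0
        scores.append((sentence, score))
    return scores

source = "Refunds are available within 30 days of purchase with a receipt."
answer = ("Refunds are available within 30 days of purchase. "
          "Refunds are also available after 90 days without a receipt.")
for sentence, score in sentence_groundedness(answer, [source]):
    print(f"{score:.2f}  {sentence}")
```

The second sentence scores 0.50 because half of its content words (“also”, “after”, “90”, “without”) never appear in the source, which is exactly the kind of unsupported addition a reviewer should see. Word overlap is a crude proxy, though: it cannot tell when a sentence rearranges the source’s own words into a claim the source does not make.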

Human Oversight and Validation Systems

Human-in-the-loop processes position qualified reviewers at critical points in AI workflows to evaluate potentially problematic outputs before they reach end users. Expert reviewers examine AI responses flagged by automated systems or randomly selected for quality assurance.

Expert review protocols establish standardized procedures for human evaluators to assess AI-generated content systematically. Reviewers check factual accuracy against authoritative sources, evaluate logical consistency within responses, and verify that claims align with established knowledge in their field.

Verification workflows create structured paths for processing AI-generated content based on risk levels and content types. High-risk applications such as medical advice or financial guidance require mandatory human review before publication.
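A risk-based verification workflow can be encoded as a small routing function. The Python sketch below is one possible design under assumed policies: high-risk outputs always go to a human, other outputs go to a human when confidence falls below a per-tier threshold, and a random 5 percent sample of the rest is spot-checked. The tiers, thresholds, and sampling rate are hypothetical.

```python
import random
from enum import Enum

class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"

# Hypothetical per-tier confidence thresholds; HIGH is always reviewed.
REVIEW_THRESHOLDS = {Risk.LOW: 0.7, Risk.MEDIUM: 0.8}

def route(risk, confidence, spot_check_rate=0.05, rng=random.random):
    """Return where an AI output goes next: 'human_review', 'spot_check', or 'publish'."""
    if risk is Risk.HIGH:
        return "human_review"  # e.g. medical or financial guidance: mandatory review
    if confidence < REVIEW_THRESHOLDS[risk]:
        return "human_review"  # automated checks flagged the output
    if rng() < spot_check_rate:
        return "spot_check"    # random sample for quality assurance
    return "publish"

print(route(Risk.HIGH, 0.99))                     # → human_review
print(route(Risk.MEDIUM, 0.75, rng=lambda: 1.0))  # → human_review
print(route(Risk.LOW, 0.75, rng=lambda: 1.0))     # → publish
```

Passing `rng` as a parameter keeps the random spot check testable, since a test can supply a fixed value instead of `random.random`.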

Proven Strategies to Prevent AI Hallucinations

Organizations can reduce AI hallucination risks by implementing systematic prevention strategies that address the root causes of AI-generated false information.

1. Implement Retrieval Augmented Generation

Source: IBM

Retrieval Augmented Generation (RAG) systems connect AI models to verified databases and knowledge sources before generating responses. Instead of relying solely on training data, RAG systems search through curated information collections to find relevant facts and then use that information to craft accurate answers.

RAG systems work through a three-step process: converting user questions into searchable formats, searching verified knowledge bases, and generating responses based on retrieved facts. This approach reduces fabrication risk because the model is instructed to answer from the retrieved sources rather than from its training data alone. It does not make fabrication impossible, however: the model can still misread a source or add details the source does not contain.
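The three steps can be sketched in Python as follows. Real RAG systems usually convert text into embedding vectors and query a vector database; to stay self-contained, this sketch substitutes simple keyword overlap, uses a hypothetical three-document knowledge base, and stops at building the grounded prompt rather than calling a specific model API.

```python
import re

# Hypothetical verified knowledge base: document id -> text.
KNOWLEDGE_BASE = {
    "refund-policy": "Refunds are available within 30 days of purchase with a receipt.",
    "bereavement": "Bereavement fares must be requested before travel.",
    "baggage": "Each passenger may check one bag up to 23 kg.",
}

def tokenize(text):
    """Step 1: turn text into a searchable set of lowercase terms."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, kb, top_k=2, min_overlap=2):
    """Step 2: rank documents by shared terms and keep sufficiently relevant ones."""
    terms = tokenize(question)
    scored = sorted(
        ((len(terms & tokenize(text)), doc_id) for doc_id, text in kb.items()),
        reverse=True,
    )
    return [doc_id for score, doc_id in scored[:top_k] if score >= min_overlap]

def build_prompt(question, kb):
    """Step 3: ground the generation step in retrieved text, or return None."""
    doc_ids = retrieve(question, kb)
    if not doc_ids:
        return None  # nothing relevant retrieved: escalate instead of letting the model guess
    context = "\n".join(f"[{d}] {kb[d]}" for d in doc_ids)
    return (
        "Answer using only the sources below and cite the source id. "
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )

print(build_prompt("Can I request a bereavement fare after travel?", KNOWLEDGE_BASE))
```

Returning `None` when nothing relevant is retrieved lets the calling code escalate to a human or reply that the answer is unavailable, instead of letting the model fall back on its training data.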

Organizations implementing RAG systems typically see significant reductions in hallucination rates compared to standard AI models that generate responses from training data alone. For organizations considering AI data management strategies, implementing RAG represents a crucial component of maintaining data accuracy.

2. Use Advanced Prompting Techniques

Chain-of-thought prompting can reduce hallucinations by asking AI systems to show their reasoning process step by step, which also makes unsupported leaps easier for reviewers to spot. This technique involves asking the AI to break down complex questions into smaller parts, show reasoning at each step, and identify logical connections.

Context-setting techniques help AI systems understand the specific domain, audience, and accuracy requirements for each task:

- Define the role and expertise level

- Establish accuracy standards

- Set uncertainty acknowledgment requirements

Uncertainty acknowledgment prompts specifically request that AI systems express doubt when they encounter questions outside their knowledge or when multiple conflicting sources exist.
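The context-setting, uncertainty, and chain-of-thought instructions above can be combined into a reusable prompt template. The Python sketch below is a hypothetical helper; the exact wording is illustrative, and no phrasing guarantees that a model will follow it.

```python
def build_guarded_prompt(question, role, domain, show_reasoning=True):
    """Assemble a prompt that sets context and asks the model to flag uncertainty."""
    parts = [
        # Role and expertise level
        f"You are {role} answering questions about {domain}.",
        # Accuracy standard
        "Only state facts you can support; do not invent names, numbers, "
        "citations, or quotations.",
        # Uncertainty acknowledgment requirement
        "If you are unsure, or if sources conflict, say so explicitly "
        "instead of guessing.",
    ]
    if show_reasoning:
        # Chain-of-thought instruction
        parts.append("Break the question into steps and show your reasoning "
                     "for each step before giving a final answer.")
    parts.append(f"Question: {question}")
    return "\n".join(parts)

print(build_guarded_prompt(
    "Which capital requirements apply to a small regional bank?",
    role="a banking compliance analyst",
    domain="EU banking regulation",
))
```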

3. Establish Human-in-the-Loop Processes

Human oversight systems combine AI efficiency with human judgment to catch errors before they affect users or business decisions. These systems work most effectively when they focus human attention on high-risk outputs while allowing routine content to proceed with minimal review.

Validation workflows establish systematic review processes for AI-generated content through risk assessment screening, expert review assignments, and multi-stage approval gates. Expert review systems leverage human domain knowledge that AI systems currently lack.

Healthcare organizations typically require medical professionals to review AI-generated clinical recommendations, while financial institutions have compliance experts verify AI-produced regulatory guidance.

4. Develop Comprehensive Testing Protocols

Testing methodologies help organizations identify AI system weaknesses before deployment and monitor performance over time. Effective testing combines automated evaluation tools with structured human assessment processes.

Pre-deployment testing establishes baseline performance metrics and identifies potential problem areas through adversarial testing, domain-specific validation, and consistency evaluation. Ongoing monitoring systems track AI performance in operational environments through automated fact-checking and user feedback analysis.

Quality assurance frameworks provide structured approaches to maintaining AI system reliability through regular benchmark testing, cross-validation procedures, and update protocols.
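A simple form of benchmark testing keeps a fixed set of questions with known answers, plus deliberately unanswerable questions where the correct behavior is to abstain, and counts how often the system answers confidently and wrongly. The Python sketch below assumes exact-match grading, a fixed abstention phrase, and a hypothetical `answer_fn` wrapping the system under test; real evaluations usually need fuzzier matching or human grading.

```python
from dataclasses import dataclass
from typing import Callable, Optional

ABSTAIN = "i don't know"  # hypothetical abstention phrase the system is told to use

@dataclass
class EvalCase:
    question: str
    expected: Optional[str]  # None marks an unanswerable question: the right move is to abstain

def evaluate(cases, answer_fn: Callable[[str], str]):
    """Count correct answers, confident wrong answers (hallucinations), and wrongful abstentions."""
    if not cases:
        raise ValueError("benchmark is empty")
    results = {"correct": 0, "hallucinated": 0, "abstained": 0}
    for case in cases:
        answer = answer_fn(case.question).strip().lower()
        if answer == ABSTAIN:
            key = "correct" if case.expected is None else "abstained"
        elif case.expected is not None and answer == case.expected.strip().lower():
            key = "correct"
        else:
            key = "hallucinated"
        results[key] += 1
    results["hallucination_rate"] = results["hallucinated"] / len(cases)
    return results

# Hypothetical benchmark and a stubbed system under test.
cases = [
    EvalCase("Boiling point of water at sea level, in degrees Fahrenheit?", "212"),
    EvalCase("Capital of France?", "Paris"),
    EvalCase("Who will be CEO of ExampleCorp in 2040?", None),
]
stub_answers = {
    "Boiling point of water at sea level, in degrees Fahrenheit?": "150",
    "Capital of France?": "Paris",
    "Who will be CEO of ExampleCorp in 2040?": "I don't know",
}
print(evaluate(cases, stub_answers.get))
```

Running the same benchmark after every model or prompt update gives a baseline to compare against, which is the point of the regular benchmark testing described above.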

Building Effective AI Governance for Your Organization

Source: PointGuard AI

AI governance provides the framework organizations use to manage artificial intelligence systems responsibly and effectively. While preventing AI hallucinations represents just one component of comprehensive AI governance, it serves as an entry point for understanding why structured oversight matters for AI deployment.

The foundation of strong AI governance begins with clear policies that define acceptable AI use within an organization. These policies specify which applications require human oversight, what types of outputs need verification before use, and how teams respond when they identify potentially problematic AI-generated content.

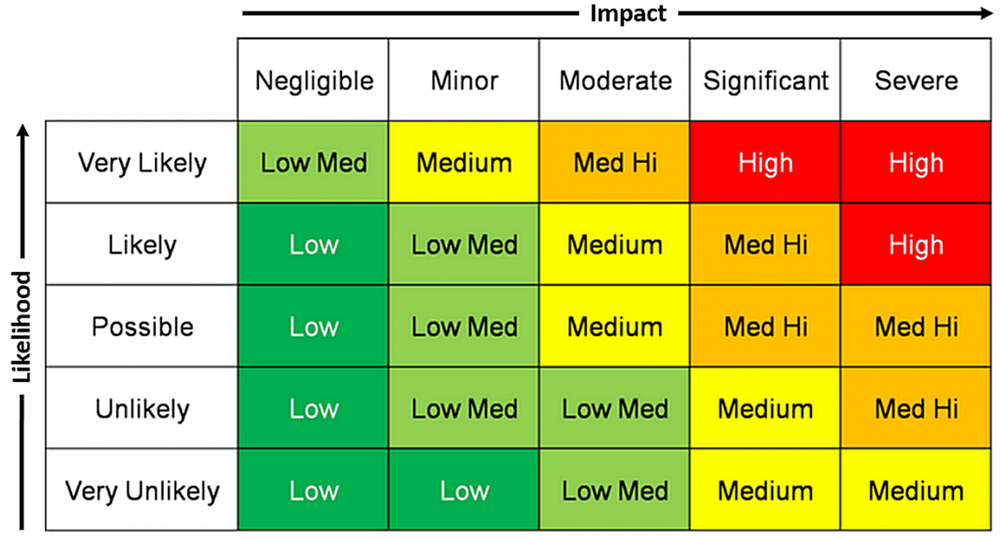

Risk assessment forms another critical component of AI governance frameworks. Organizations evaluate their AI applications based on potential impact, identifying which systems pose the highest risks if they generate incorrect outputs. Customer service chatbots might receive different oversight requirements than AI systems used for medical diagnosis or financial planning.

Monitoring and validation systems provide ongoing oversight of AI performance after deployment. These systems track AI outputs for accuracy, consistency, and compliance with organizational standards. When monitoring systems detect potential issues — including hallucinations — they trigger escalation procedures that bring human experts into the review process.

Training and education programs help employees understand both AI capabilities and limitations. Teams learn to recognize when AI outputs seem suspicious, understand verification procedures, and know how to escalate concerns through appropriate channels.

Frequently Asked Questions About AI Hallucinations

How do AI hallucinations differ from AI bias in practical terms?

AI bias occurs when training data contains prejudices that the model learns and reproduces in its outputs. For example, if a hiring AI was trained on data showing mostly male engineers, it might show bias against female candidates for engineering positions.

AI hallucinations happen when models generate information that sounds credible but is completely fabricated. The AI creates facts, quotes, or citations that never existed. Unlike bias, which reproduces real patterns in flawed data, hallucinations involve the AI producing specific content that has no basis in any source.

Which industries experience the highest rates of AI hallucination incidents?

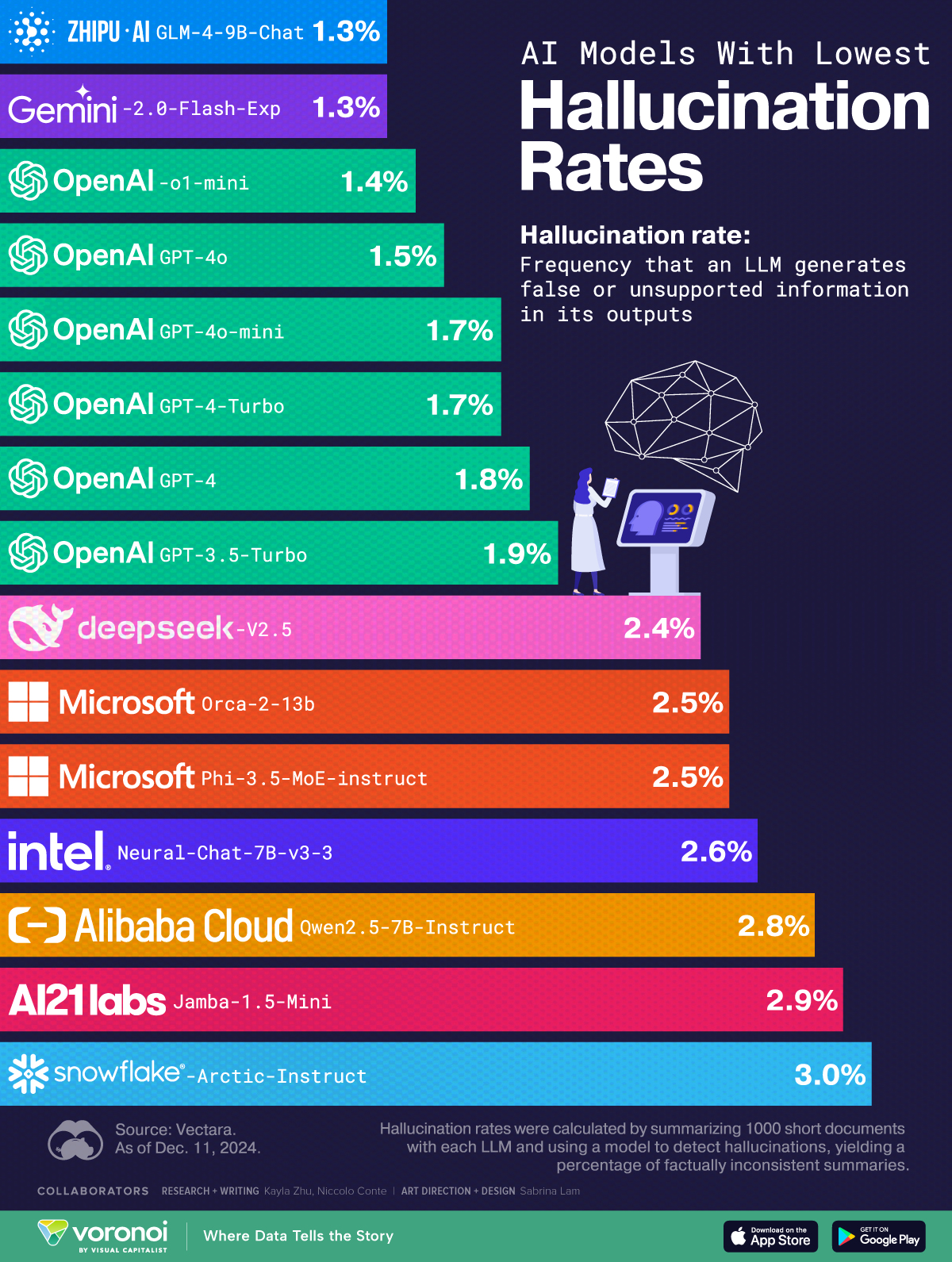

Source: Visual Capitalist

Healthcare and legal sectors report the most documented AI hallucination incidents due to their reliance on precise factual information. Clinical AI systems show hallucination rates between 8 and 20 percent according to recent studies. Legal AI tools frequently fabricate case citations and court decisions.

Financial services and customer support also experience significant hallucination challenges. Financial AI systems may create false market data or investment advice, while customer service chatbots often fabricate company policies or make unauthorized promises to customers. Organizations in retail AI and marketing AI sectors face similar challenges when AI systems generate incorrect product information or campaign data.

Can retrieval-augmented generation completely prevent AI hallucinations?

Retrieval-augmented generation significantly reduces but can’t eliminate AI hallucinations entirely. RAG systems decrease fabricated content by grounding AI responses in verified source materials, but they can still generate incorrect interpretations of accurate source information.

The effectiveness of RAG depends on the quality and completeness of the knowledge base. When RAG systems can’t find relevant information in their databases, they may still attempt to generate responses based on their training data, potentially creating hallucinations.

What specific confidence score thresholds indicate potential AI hallucinations?

Many organizations set confidence score thresholds between 0.7 and 0.8 for flagging potentially problematic AI outputs. Responses below these thresholds typically receive human review before reaching end users.

However, confidence scores alone don’t reliably predict hallucinations. AI systems can generate fabricated content with high confidence scores, particularly when the fabricated information follows patterns the system learned during training. Effective detection requires combining confidence scoring with other verification methods.

How do I establish human oversight procedures for AI outputs in my organization?

Start by categorizing your AI applications based on risk levels and potential impact of errors. High-risk applications like medical diagnosis or financial advice require mandatory human review, while low-risk tasks may only need spot-checking.

Develop clear protocols that specify who reviews which types of content, what qualifications reviewers need, and how to escalate questionable outputs. Create documentation requirements that track review decisions and maintain audit trails for accountability purposes.
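Audit trails are easier to maintain when every review decision is recorded in the same structured format. The Python sketch below appends one JSON object per decision to a JSON Lines file; the field names, decision labels, and file path are hypothetical choices, not a required schema.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

@dataclass
class ReviewRecord:
    """One human review decision (hypothetical schema)."""
    output_id: str
    application: str
    risk_level: str
    reviewer: str
    decision: str        # e.g. "approved", "corrected", "rejected", "escalated"
    notes: str = ""
    reviewed_at: str = ""

def to_audit_line(record):
    """Serialize a record as one JSON line, stamping the current UTC time if none is set."""
    data = asdict(record)
    if not data["reviewed_at"]:
        data["reviewed_at"] = datetime.now(timezone.utc).isoformat()
    return json.dumps(data) + "\n"

def log_review(record, path="review_audit.jsonl"):
    """Append the record to an append-only audit file."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(to_audit_line(record))

print(to_audit_line(ReviewRecord(
    output_id="resp-1042",
    application="support-chatbot",
    risk_level="medium",
    reviewer="j.smith",
    decision="corrected",
    notes="Refund window stated as 90 days; policy says 30.",
)), end="")
```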