The exponential growth of enterprise data has created unprecedented challenges for organizations seeking to extract value from their information assets. Traditional data management approaches, reliant on manual processes and rule-based systems, struggle to keep pace with the volume, variety, and velocity of modern data streams. AI data management emerges as a transformative solution that leverages artificial intelligence and machine learning technologies to automate, optimize, and enhance every aspect of the data lifecycle.

What Is AI Data Management

AI data management uses artificial intelligence and machine learning to handle how companies collect, process, store, and use their data. Instead of relying on people to manually manage data or simple computer programs that follow basic rules, these systems can learn patterns, make decisions on their own, and get better over time without someone programming every step.

Think of it like the difference between a basic calculator and a smartphone. A calculator can only do what it’s programmed to do. A smartphone learns your habits, suggests apps you might want, and adapts to how you use it. AI data management does the same for company data.

The numbers tell the story of why this matters. Research from IBM shows that 82% of companies have data trapped in separate systems that can’t talk to each other. Even worse, 68% of all company data never gets looked at or used to make decisions. AI data management fixes these problems by creating smart systems that automatically find, organize, and connect data across an entire company.

Artificial Intelligence in Data Lifecycle Management

Data lifecycle management covers everything that happens to data from the moment it’s created until it’s deleted. AI changes how each step works by adding intelligence that can predict what will happen next and make smart choices automatically.

When companies collect data, AI can automatically spot what’s important and pull it from different places like databases, emails, or sensors on factory equipment. The AI learns what types of information matter most and gets better at finding relevant data over time.

During processing, machine learning algorithms clean up messy data, fix errors, and fill in missing pieces. For example, if customer records are missing phone numbers, the AI might find those numbers from other company systems or databases and fill them in automatically.
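The phone-number example above can be sketched in a few lines. This is a minimal illustration, not a real enrichment engine: the `fill_missing` helper, the `crm` and `billing` record lists, and the `phone_source` provenance field are all hypothetical names, and the lookup is a plain key match rather than anything learned.

```python
def fill_missing(primary, secondary, key, field):
    """Fill empty `field` values in primary using matching rows in secondary."""
    # Build a lookup of known values from the secondary system.
    lookup = {row[key]: row[field] for row in secondary if row.get(field)}
    filled = []
    for row in primary:
        row = dict(row)  # copy so the caller's records are not mutated
        if not row.get(field) and row[key] in lookup:
            row[field] = lookup[row[key]]
            # Record where the value came from so it can be audited later.
            row[field + "_source"] = "secondary"
        filled.append(row)
    return filled

# Hypothetical records from two company systems.
crm = [
    {"id": 1, "phone": ""},
    {"id": 2, "phone": "555-0101"},
    {"id": 3, "phone": None},
]
billing = [
    {"id": 1, "phone": "555-0100"},
    {"id": 2, "phone": "555-9999"},
]

enriched = fill_missing(crm, billing, key="id", field="phone")
```

Only customer 1 is changed here: customer 2 already has a number, and customer 3 has no match in the second system, so the gap is left for another source or a person to resolve.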

For storage decisions, AI figures out which data gets used most often and keeps it easily accessible. Data that rarely gets touched moves to cheaper storage options. This happens automatically based on actual usage patterns, not guesswork.
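As a rough sketch of usage-based tiering, the snippet below assigns each dataset to a storage tier from two usage statistics. The thresholds, tier names, and dataset names are assumptions made for illustration; a production system would more likely learn its cutoffs from access logs than hard-code them.

```python
from datetime import date

# Hypothetical thresholds: datasets read at least 10 times in the last
# 30 days stay "hot"; anything untouched for 180+ days goes "cold".
HOT_MIN_READS = 10
COLD_AFTER_DAYS = 180

def choose_tier(reads_last_30_days: int, last_access: date, today: date) -> str:
    """Return 'hot', 'warm', or 'cold' from simple usage statistics."""
    idle_days = (today - last_access).days
    if idle_days >= COLD_AFTER_DAYS:
        return "cold"
    if reads_last_30_days >= HOT_MIN_READS:
        return "hot"
    return "warm"

today = date(2024, 6, 1)
# Made-up usage data: (reads in the last 30 days, date of last access).
usage = {
    "orders_2024": (250, date(2024, 5, 31)),
    "hr_policies": (2, date(2024, 4, 15)),
    "orders_2019": (0, date(2022, 1, 10)),
}
tiers = {
    name: choose_tier(reads, last, today)
    for name, (reads, last) in usage.items()
}
print(tiers)
```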

Machine Learning for Automated Data Processing

Machine learning excels at finding patterns that humans would miss or take too long to discover. These systems spot data quality problems, detect unusual information that doesn’t fit normal patterns, and fix issues based on what they’ve learned from previous corrections.

Automated data quality works by teaching computers to recognize what good data looks like. Once trained, the system can scan through millions of records and instantly flag anything that seems wrong. It might notice that customer addresses don’t match postal codes or that sales numbers fall outside normal ranges.
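To make "sales numbers fall outside normal ranges" concrete, here is one simple way to flag such values. It uses the modified z-score based on the median absolute deviation, a standard statistical technique that stands in here for whatever model a real product uses. The `flag_outliers` name and the sample figures are made up for the example.

```python
from statistics import median

def flag_outliers(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds threshold.

    Uses the median absolute deviation (MAD), which is distorted far less
    by the outliers themselves than a mean/standard-deviation baseline.
    The 0.6745 constant and the 3.5 cutoff are the conventional choices.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread to measure against
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]

# Made-up daily sales totals; one entry looks like a data-entry error.
daily_sales = [1020, 980, 1005, 995, 1010, 15000, 990, 1000]
print(flag_outliers(daily_sales))  # prints [5]
```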

Natural language processing, a type of AI, can read and understand text in emails, contracts, and reports. It pulls out important information and organizes it automatically, turning unstructured text into organized data that companies can analyze.

The predictive part is especially powerful. Instead of just fixing problems after they happen, these systems can predict when data quality issues are likely to occur and prevent them from happening in the first place.

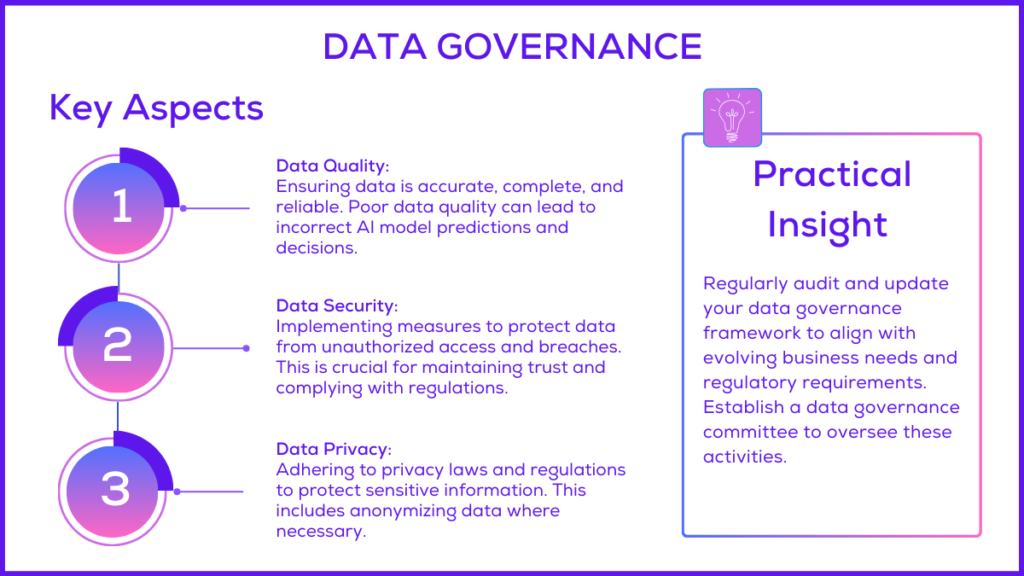

Intelligent Data Governance and Quality Control

Data governance means having rules and processes for how data gets used in a company. AI governance systems automatically follow these rules and watch for violations without needing people to check every single action.

These systems can automatically spot sensitive information like Social Security numbers or credit card details and apply the right security settings. They create audit trails that show who accessed what data and when, which helps companies prove they’re following regulations like GDPR or HIPAA.
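A heavily simplified version of sensitive-data detection can be built from pattern matching alone. The sketch below looks for text shaped like a US Social Security number and for digit runs that pass the Luhn checksum used by payment cards. The function names and the sample sentence are illustrative, and real classifiers typically combine patterns like these with context and trained models to cut down on false positives.

```python
import re

SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# 13 to 19 digits, optionally separated by single spaces or dashes.
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")

def luhn_valid(number: str) -> bool:
    """Check the Luhn checksum over the digits in `number`."""
    digits = [int(c) for c in number if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

def find_sensitive(text: str):
    """Return (label, matched_text) pairs for likely sensitive values."""
    findings = [("ssn", m.group()) for m in SSN_RE.finditer(text)]
    for m in CARD_RE.finditer(text):
        candidate = m.group().strip()
        if luhn_valid(candidate):  # drop digit runs that fail the checksum
            findings.append(("card", candidate))
    return findings

# 4111 1111 1111 1111 is a widely used test card number, not a real one.
sample = "Customer SSN 123-45-6789, card 4111 1111 1111 1111 on file."
findings = find_sensitive(sample)
```

Once a value is labeled this way, a governance system can attach the matching security setting, such as masking or restricted access.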

Quality control happens continuously. The AI sets up baseline measurements for what good data looks like, then monitors all incoming data against these standards. When something doesn’t meet the quality bar, the system either fixes it automatically or alerts someone to take a look.

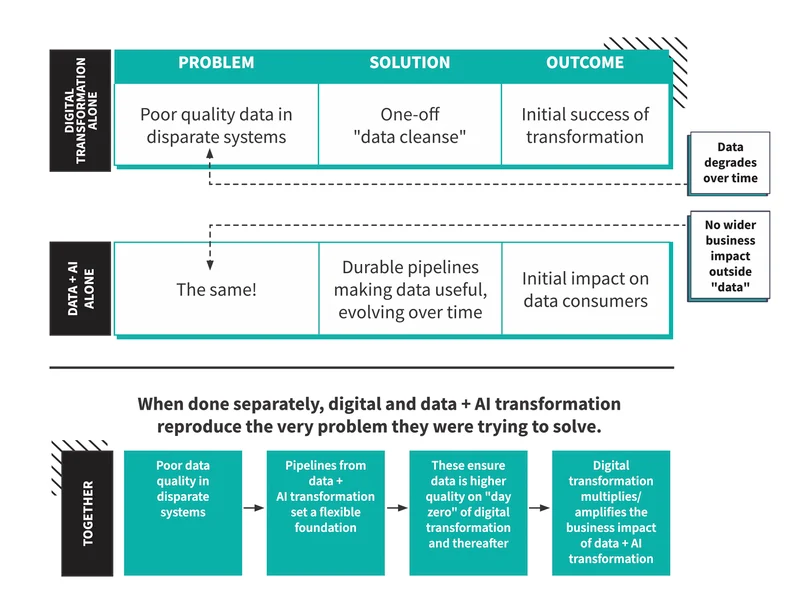

How AI Transforms Traditional Data Management Processes

Traditional data management requires people to manually handle most tasks. Someone has to find relevant data, check if it’s accurate, combine information from different sources, and create reports. This process is slow, expensive, and prone to human error.

AI data management flips this around. Instead of people doing the work, smart algorithms handle the heavy lifting. Tasks that used to take weeks now happen in hours or minutes. More importantly, the quality and consistency improve because machines don’t get tired or make the same types of mistakes humans do.

Automated Data Discovery and Classification

Data discovery means finding all the data in a company and figuring out what it contains. In traditional setups, IT teams manually catalog systems and document what data exists where. This takes months and often misses important information.

AI-driven discovery tools automatically scan company systems, cloud applications, and databases to find data. They use computer vision to analyze images and documents, natural language processing to understand text, and pattern recognition to identify data types.

The classification part happens simultaneously. As the AI finds data, it automatically tags and categorizes everything. Customer information gets labeled as such, financial data gets marked appropriately, and sensitive information gets flagged for special handling.

Here are the key benefits of automated discovery:

- Complete inventory: AI scans places humans might forget to check

- Consistent classification: The same rules apply everywhere automatically

- Sensitive data detection: Automatically finds and protects private information

- Real-time updates: New data gets classified as soon as it appears

Real-Time Data Quality Monitoring

Traditional quality checks happen periodically, like monthly reports that show problems after they’ve already affected business decisions. Real-time monitoring watches data continuously as it flows through systems, catching problems immediately.

These monitoring systems use statistical analysis and machine learning to establish what normal data looks like. When something unusual appears, the system investigates further. It might discover that a data source has started sending corrupted files or that a system integration is dropping important fields.

The response is immediate. Quality issues trigger alerts, automatic corrections, or workflows that route problems to the right people. This prevents bad data from spreading through company systems and affecting decisions.

Advanced anomaly detection goes beyond basic validation rules. It can spot subtle patterns that indicate problems like potential fraud, system failures, or data corruption that traditional rule-based systems would miss.
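The idea of learning what normal looks like and reacting to deviations as data arrives can be sketched with a rolling baseline. This is a minimal example under stated assumptions: the `StreamMonitor` class, its window size, and the z-score limit are all invented for illustration, and a rolling mean and standard deviation stand in for the richer models the text describes.

```python
from collections import deque
from statistics import mean, stdev

class StreamMonitor:
    """Flag values that stray too far from a rolling baseline."""

    def __init__(self, window=20, z_limit=3.0, min_history=5):
        self.history = deque(maxlen=window)  # most recent normal values
        self.z_limit = z_limit
        self.min_history = min_history

    def observe(self, value: float) -> bool:
        """Return True if value deviates from the rolling baseline."""
        is_anomaly = False
        if len(self.history) >= self.min_history:
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.z_limit:
                is_anomaly = True
        if not is_anomaly:
            self.history.append(value)  # keep anomalies out of the baseline
        return is_anomaly

monitor = StreamMonitor()
# Made-up record counts per incoming batch; one batch is nearly empty,
# as might happen when an integration starts dropping data.
batch_sizes = [100, 102, 98, 101, 99, 100, 3, 101]
alerts = [size for size in batch_sizes if monitor.observe(size)]
print(alerts)  # prints [3]
```

In a real pipeline the `True` result would trigger the alert or routing workflow rather than being collected in a list.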

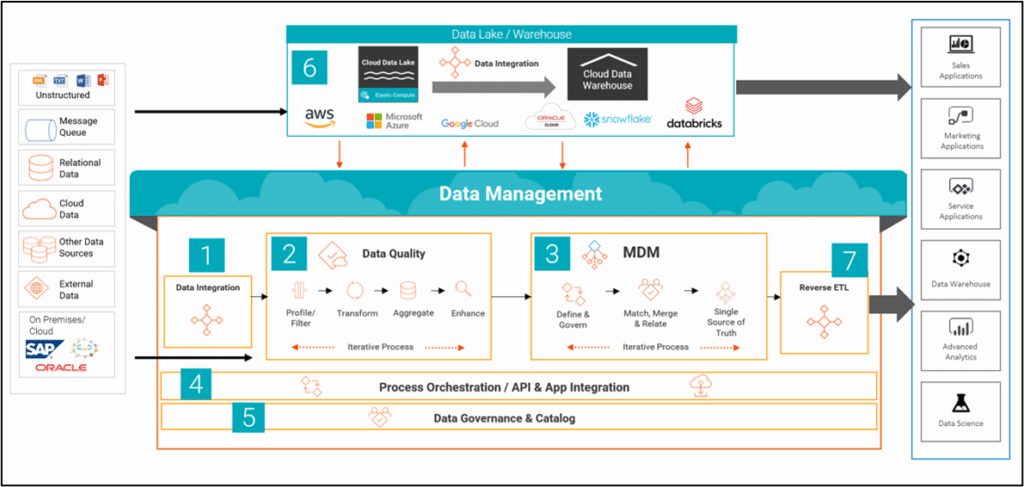

Intelligent Data Integration Across Systems

Data integration combines information from different sources into a unified view. Traditional approaches require IT specialists to manually map how data from System A relates to data from System B. This process is time-consuming and breaks when either system changes.

AI data integration platforms automatically discover relationships between different data sources. Machine learning algorithms analyze data structures, identify common elements, and figure out how to combine information without extensive manual mapping.

When conflicts arise (like the same customer having different addresses in two systems), intelligent systems can determine which information is most likely correct based on factors like data freshness, source reliability, and historical patterns.
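One way to picture this kind of conflict resolution is a score that combines source reliability with data freshness. The sketch below is an assumption-laden illustration: the `Candidate` class, the reliability numbers, and the one-year half-life are all hypothetical, and a real platform might learn these weights from historical corrections.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class Candidate:
    source: str
    value: str
    updated: date

# Hypothetical trust scores per source system (0 to 1); in practice these
# might be learned from how often each source's values survive review.
SOURCE_RELIABILITY = {"crm": 0.9, "billing": 0.7, "legacy_erp": 0.4}

def resolve(candidates, today, half_life_days=365):
    """Pick the candidate with the best reliability x freshness score."""
    def score(c):
        age_days = (today - c.updated).days
        # Freshness halves every `half_life_days` (exponential decay).
        freshness = 0.5 ** (age_days / half_life_days)
        # Unknown sources get a neutral reliability of 0.5.
        return SOURCE_RELIABILITY.get(c.source, 0.5) * freshness
    return max(candidates, key=score)

# The same customer with different addresses in two systems.
candidates = [
    Candidate("crm", "12 Oak St", date(2021, 3, 1)),
    Candidate("billing", "98 Elm Ave", date(2024, 5, 1)),
]
winner = resolve(candidates, today=date(2024, 6, 1))
print(winner.value)  # prints 98 Elm Ave
```

Here the billing record wins despite coming from a less trusted source, because the CRM value is more than three years old.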

Schema mapping, the technical process of connecting different data formats, happens automatically. The AI learns how different systems organize information and creates the translation rules needed to combine everything into a coherent picture.
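A very small taste of automated schema mapping is matching column names by string similarity. The sketch below uses Python's standard `difflib.SequenceMatcher`; the `map_columns` helper, the 0.6 cutoff, and the column names are assumptions for the example. Name similarity is only one signal, and real tools generally also compare the values and data types in each column.

```python
from difflib import SequenceMatcher

def normalize(name: str) -> str:
    """Lowercase a column name and drop underscores and spaces."""
    return name.lower().replace("_", "").replace(" ", "")

def map_columns(source_cols, target_cols, min_score=0.6):
    """Map each source column to its most similar target column, or None."""
    mapping = {}
    for src in source_cols:
        best, best_score = None, 0.0
        for tgt in target_cols:
            score = SequenceMatcher(None, normalize(src), normalize(tgt)).ratio()
            if score > best_score:
                best, best_score = tgt, score
        # Below the cutoff, leave the column unmapped for review.
        mapping[src] = best if best_score >= min_score else None
    return mapping

mapping = map_columns(
    ["cust_name", "EmailAddr", "zip"],
    ["customer_name", "email", "postal_code", "phone"],
)
print(mapping)
```

The `zip` column stays unmapped because its name shares almost nothing with `postal_code`. That is the kind of synonym a purely name-based matcher misses and a system that learns from column contents could catch.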

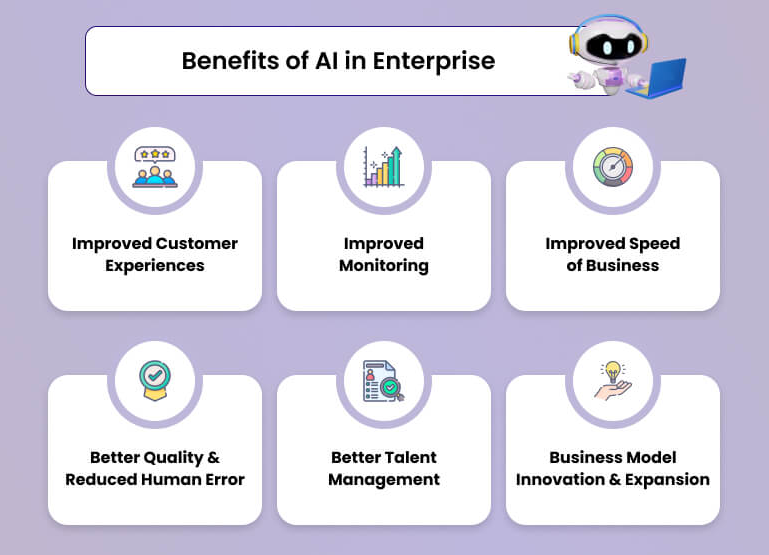

Key Benefits of AI Data Management for Enterprise Organizations

Companies that implement intelligent data management see improvements across multiple areas of their operations. The changes affect both day-to-day work and long-term strategic capabilities.

Enhanced Data Quality and Accuracy

AI algorithms dramatically reduce errors by automating validation, cleaning, and enrichment processes. Machine learning models learn from corrections over time, creating systems that get better at maintaining quality without human intervention.

Data quality improvements include:

- Duplicate detection: AI finds and merges duplicate records with high accuracy

- Format standardization: Automatically converts data into consistent formats

- Missing data recovery: Intelligently fills gaps using related information

- Error prediction: Identifies likely problems before they affect business processes

- Cross-system validation: Checks data consistency across multiple sources

These improvements compound over time. As the AI learns more about what good data looks like in a specific company context, it gets better at maintaining those standards automatically.
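Duplicate detection, the first item in the list above, can be illustrated with a simple fuzzy comparison. This sketch treats two records as likely duplicates when their emails match after cleanup or their names are nearly identical. The field names, the 0.85 threshold, and the sample records are hypothetical. It also compares every pair of records, which is fine for a demo but too slow for millions of rows, where systems usually group candidates first.

```python
from difflib import SequenceMatcher
from itertools import combinations

def normalize(record):
    """Return a cleaned (name, email) pair for comparison."""
    name = " ".join(record["name"].lower().replace(".", "").split())
    email = record["email"].strip().lower()
    return name, email

def likely_duplicates(records, name_threshold=0.85):
    """Return index pairs of records that look like the same entity."""
    pairs = []
    for (i, a), (j, b) in combinations(enumerate(records), 2):
        name_a, email_a = normalize(a)
        name_b, email_b = normalize(b)
        name_score = SequenceMatcher(None, name_a, name_b).ratio()
        if email_a == email_b or name_score >= name_threshold:
            pairs.append((i, j))
    return pairs

records = [
    {"name": "Jane A. Doe", "email": "jane.doe@example.com"},
    {"name": "jane a doe ", "email": "Jane.Doe@Example.com"},
    {"name": "John Smith", "email": "jsmith@example.com"},
]
duplicates = likely_duplicates(records)
print(duplicates)  # prints [(0, 1)]
```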

Improved Operational Efficiency and Cost Reduction

Automation eliminates time-consuming manual tasks that data teams previously handled. Data preparation, which is often estimated to consume 60-80% of analysts’ time, drops significantly when AI handles the routine cleaning and formatting.

Organizations commonly see these efficiency gains:

- Faster data pipeline setup: New data sources connect in weeks instead of months

- Self-service capabilities: Business users access data without IT tickets

- Reduced manual errors: Automation eliminates human mistakes in routine tasks

- Optimized resource usage: AI allocates computing and storage based on actual needs

- Streamlined reporting: Automated generation of regular reports and compliance documentation

The time savings let data professionals focus on higher-value work like analysis and business strategy instead of data preparation and maintenance.

Stronger Compliance and Risk Management

Automated compliance monitoring continuously scans data for regulatory violations and policy infractions. AI compliance systems can automatically redact sensitive information, apply data retention policies, and generate the documentation required for audits.

These systems excel at finding sensitive data across large, complex environments. They can identify personal information, financial records, and intellectual property automatically, then apply appropriate security controls without manual intervention.

Risk management becomes proactive rather than reactive. AI can predict potential compliance issues, identify unusual access patterns that might indicate security threats, and monitor data usage to ensure it aligns with stated policies and consent agreements.

Faster Time to Business Insights

AI data management accelerates the entire analytics process from data preparation through insight generation. Automated data pipelines eliminate preparation bottlenecks, while intelligent cataloging helps users quickly find relevant information.

The acceleration comes from several areas:

- Automated data preparation: Clean, organized data ready for analysis

- Intelligent search: Find relevant datasets quickly using natural language queries

- Pattern detection: AI automatically identifies trends and anomalies worth investigating

- Insight generation: Initial analysis and recommendations appear automatically

- Natural language interfaces: Business users ask questions in plain English

This speed improvement changes how companies make decisions. Instead of waiting weeks for data analysis, business leaders can get answers in hours or days.

Essential Components of AI Data Management Systems

Effective intelligent data management platforms combine specialized technologies into integrated systems. Each component handles specific tasks but works together to create comprehensive data management capabilities.

AI-Powered Data Integration Platforms

Modern integration platforms use machine learning to automatically connect and combine data from diverse sources. These data management tools handle structured databases, unstructured content, cloud applications, and streaming data through intelligent adapters that learn source characteristics.

ETL (Extract, Transform, Load) automation generates transformation logic based on learned patterns and business rules. Instead of programmers writing code to move data between systems, AI figures out how to extract, clean, and load information automatically.

Data pipeline intelligence monitors performance and optimizes processing workflows based on changing data volumes and usage patterns. If certain data sources become more important, the system automatically allocates more resources to process them faster.

Machine Learning Data Quality Engines

Specialized quality engines use multiple machine learning techniques to monitor and improve data quality continuously. Statistical models establish quality baselines and detect when data deviates from normal patterns.

These engines employ several approaches:

- Statistical analysis: Identifies outliers and unusual distributions

- Pattern recognition: Learns what valid data looks like for specific fields

- Anomaly detection: Spots complex problems that simple rules miss

- Historical learning: Improves accuracy based on past corrections and feedback

The systems learn from every correction made by users. When someone fixes a data quality issue, the AI remembers the pattern and applies similar fixes automatically in the future.

Automated Governance and Compliance Tools

Governance platforms automatically enforce data policies through intelligent classification, access controls, and usage monitoring. These enterprise data management systems identify regulated data types and apply appropriate security controls without manual configuration.

Compliance automation includes:

- Policy enforcement: Automatically applies rules based on data classification

- Access monitoring: Tracks who accesses what data and flags unusual patterns

- Retention management: Automatically deletes data according to retention schedules

- Audit documentation: Generates reports and trails required for compliance reviews

- Privacy controls: Implements data subject rights like deletion and portability requests

These tools adapt to changing regulations by updating their classification and handling rules as new requirements emerge.
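Retention management from the list above is one of the easier pieces to picture in code. The sketch below picks out records that have outlived a retention schedule keyed by data classification. The schedule values, field names, and record IDs are invented for the example; actual retention periods depend on the regulations and policies that apply to each organization.

```python
from datetime import date, timedelta

# Hypothetical retention schedule: days to keep each class of data.
RETENTION_DAYS = {"marketing": 365, "support_ticket": 730, "financial": 2555}

def expired_ids(records, today):
    """Return IDs of records older than their class's retention period."""
    expired = []
    for record in records:
        limit = RETENTION_DAYS.get(record["classification"])
        if limit is None:
            continue  # unclassified data is left for human review
        if today - record["created"] > timedelta(days=limit):
            expired.append(record["id"])
    return expired

records = [
    {"id": "r1", "classification": "marketing", "created": date(2023, 1, 1)},
    {"id": "r2", "classification": "financial", "created": date(2020, 1, 1)},
    {"id": "r3", "classification": "unknown", "created": date(2015, 1, 1)},
]
to_delete = expired_ids(records, today=date(2024, 6, 1))
print(to_delete)  # prints ['r1']
```

Skipping unclassified records is a deliberate choice in this sketch: deleting data whose category is unknown is riskier than keeping it until someone has reviewed it.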

Intelligent Analytics and Reporting Capabilities

AI-driven analytics platforms automatically generate insights and identify trends without manual intervention. Natural language processing enables conversational interfaces where business users ask questions in plain English instead of learning technical query languages.

Advanced analytics capabilities include:

- Automated insights: AI identifies interesting patterns and trends automatically

- Predictive modeling: Forecasts future trends based on historical data

- Natural language queries: Users ask questions in plain English

- Recommendation engines: Suggests relevant data sources and analysis approaches

- Dynamic reporting: Reports update automatically as new data becomes available

These systems democratize data access by removing technical barriers that previously required specialized training to overcome.

Strategic Implementation of AI Data Management Solutions

Successful implementation requires careful planning and phased deployment. Companies that rush into AI data management without proper preparation often struggle with adoption and fail to realize the expected benefits.

1. Assessment and Requirements Planning

Implementation starts with understanding current data management challenges and organizational readiness. This assessment identifies existing data sources, quality issues, integration requirements, and compliance obligations that will influence solution design.

The evaluation process examines:

- Current data landscape: What systems exist and how they connect

- Quality challenges: Where data problems occur most frequently

- Integration complexity: How difficult it will be to connect existing systems

- Compliance requirements: What regulations and policies apply

- Stakeholder needs: What different groups expect from the solution

Stakeholder alignment ensures business and technical requirements get properly captured and prioritized. Without clear agreement on goals and expectations, implementation efforts often go in the wrong direction.

2. Technology Selection and Architecture Design

Technology selection involves evaluating available platforms against organizational requirements, existing infrastructure, and long-term objectives. The choice affects everything from implementation complexity to ongoing operational costs.

Architecture design balances several considerations:

- Scalability: Can the solution grow with data volumes and user demands

- Integration: How well does it connect with existing systems

- Security: Does it meet organizational security standards

- Performance: Can it handle peak loads without degrading

- Cost: Are ongoing operational expenses sustainable

Hybrid approaches often work best, combining cloud-based AI services with on-premises systems to balance performance, security, and cost factors.

3. Integration with Existing Enterprise Systems

Integration planning addresses the technical challenges of connecting AI platforms with existing applications, databases, and analytical tools. This phase requires detailed analysis of data flows, API capabilities, and middleware requirements.

Data migration considerations include mapping existing data structures, validating quality, and establishing procedures that minimize business disruption. Legacy system integration often requires custom adapters to bridge differences in data formats and processing approaches.

The integration process typically happens in phases, starting with less critical systems to test approaches and build confidence before connecting mission-critical applications.

4. Change Management and Team Training

Organizational change management addresses how people adapt to new technology and processes. Training programs prepare staff for new workflows and tools while addressing concerns about technology adoption.

Effective change management includes:

- Communication: Clear explanation of what’s changing and why

- Training: Hands-on education about new tools and processes

- Support: Ongoing help during the transition period

- Champions: Enthusiastic early adopters who help others learn

- Feedback: Regular check-ins to identify and address problems

The human element often determines success more than technical factors. Companies that invest in change management see much better adoption rates and business outcomes.

Measuring ROI and Success in AI Data Management Initiatives

Measuring success requires tracking specific metrics that demonstrate business value and operational improvements. Without clear measurement frameworks, it’s difficult to justify continued investment or identify areas for improvement.

Key Performance Indicators for Data Quality

Data quality metrics provide concrete evidence of system effectiveness. These measurements show whether the AI is actually improving data quality or just adding complexity.

Organizations typically track:

- Accuracy rates: Percentage of data that’s correct and up-to-date

- Completeness: How much required information is present

- Consistency: Whether related data matches across systems

- Timeliness: How quickly data becomes available for decisions

- Duplicate rates: Percentage of duplicate records identified and resolved

- Error reduction: Comparison of error rates before and after implementation

These metrics establish baselines and track improvement over time. They also help identify areas where the AI needs additional training or configuration adjustments.
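Two of the metrics above, completeness and duplicate rate, are simple enough to compute directly. The following sketch shows one possible definition of each; the function names, field names, and sample records are assumptions, and teams often adjust these definitions to fit their own data.

```python
def completeness(records, required_fields):
    """Share of required field values that are actually filled in."""
    total = len(records) * len(required_fields)
    if total == 0:
        return 1.0
    filled = sum(
        1
        for record in records
        for field in required_fields
        if record.get(field) not in (None, "")
    )
    return filled / total

def duplicate_rate(records, key_fields):
    """Share of records that repeat an earlier record's key values."""
    if not records:
        return 0.0
    keys = [
        tuple(str(record.get(f, "")).strip().lower() for f in key_fields)
        for record in records
    ]
    return 1 - len(set(keys)) / len(keys)

# Made-up customer records with gaps and one repeated email.
customers = [
    {"email": "a@x.com", "phone": "555-0100"},
    {"email": "A@x.com ", "phone": ""},
    {"email": "b@x.com", "phone": None},
    {"email": "c@x.com", "phone": "555-0102"},
]
print(completeness(customers, ["email", "phone"]))  # prints 0.75
print(duplicate_rate(customers, ["email"]))         # prints 0.25
```

Running the same calculations before and after an implementation gives the baseline-versus-improvement comparison described above.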

Business Value and Efficiency Metrics

Operational improvements translate directly into measurable business value through reduced costs, improved productivity, and faster decision-making. These metrics show the financial impact of the implementation.

Common efficiency measurements include:

| Metric Category | Typical Improvement Range | Business Impact |
|---|---|---|
| Data Preparation Time | 60-80% reduction | Analysts focus on analysis instead of data cleaning |
| Data Quality Error Rate | 70-90% improvement | Better business decisions based on accurate information |
| Integration Timeline | 80-90% faster | New data sources deliver value quickly |
| Compliance Reporting Effort | 80-95% reduction | Lower administrative costs and compliance risk |
| Time to Insight | 50-75% improvement | Faster response to market opportunities and challenges |

These improvements compound over time as the AI systems learn and optimize their performance based on usage patterns and feedback.

Long-Term Strategic Impact Assessment

Strategic benefits extend beyond immediate operational improvements to include competitive advantages and organizational capabilities. These longer-term impacts often provide the greatest business value but take time to materialize.

Strategic impact indicators include:

- Market responsiveness: Faster reaction to competitive threats and opportunities

- Innovation capability: Ability to launch new products and services based on data insights

- Operational agility: Flexibility to adapt business processes as conditions change

- Risk management: Better ability to identify and mitigate business risks

- Regulatory readiness: Improved capability to comply with changing regulations

Assessing these impacts requires looking at business outcomes over months or years rather than immediate technical metrics.

Building Your AI Data Management Strategy

Creating effective data management strategies requires alignment between business objectives, technical capabilities, and organizational readiness. Companies that approach this strategically see better outcomes than those that focus only on technology implementation.

Common Implementation Challenges to Avoid

Companies frequently encounter predictable problems during implementation. Understanding these challenges helps organizations plan more effectively and avoid common pitfalls.

Typical challenges include:

- Underestimating data quality requirements: Poor data quality undermines AI effectiveness

- Insufficient stakeholder engagement: Low adoption leads to limited business value

- Inadequate integration planning: Creates new data silos instead of breaking them down

- Overlooking change management: People resist new processes without proper preparation

- Technology misalignment: Choosing tools that don’t fit existing infrastructure

- Weak governance frameworks: Lack of clear policies and procedures for AI systems

Most of these problems stem from focusing too much on technology and not enough on organizational factors like training, communication, and change management.

Selecting the Right Technology Partners

Technology partner selection affects both implementation success and long-term satisfaction. The right partners provide not just software but also expertise, support, and strategic guidance throughout the journey.

Key evaluation criteria include:

- Enterprise experience: Track record with similar companies and use cases

- Platform completeness: Comprehensive capabilities that reduce vendor complexity

- Integration capabilities: Strong connections to existing enterprise systems

- Support quality: Responsive help during implementation and ongoing operations

- Innovation commitment: Continued development and enhancement of capabilities

The evaluation process typically includes proof-of-concept projects, reference customer discussions, and detailed technical assessments that validate claimed capabilities.

Creating a Roadmap for Success

Successful implementations follow phased approaches that build capabilities incrementally while demonstrating value at each stage. Roadmaps prioritize high-impact, lower-risk use cases for initial implementation, then expand to more complex scenarios as organizational capabilities mature.

Effective roadmaps include:

- Quick wins: Early successes that build confidence and momentum

- Foundation building: Core capabilities that support future expansion

- Capability expansion: Additional features and use cases as expertise grows

- Advanced optimization: Sophisticated capabilities that drive competitive advantage

- Continuous improvement: Ongoing refinement and enhancement of existing capabilities

Each phase delivers measurable business value while building the foundation for subsequent capabilities. This approach maintains stakeholder support and ensures that implementations stay aligned with business objectives.

Organizations developing comprehensive intelligent data management strategies often benefit from partnering with experienced consultants who understand both technical complexities and organizational dynamics. These partnerships help navigate challenges while developing sustainable, value-driven approaches to AI-powered enterprise data management.