As artificial intelligence (AI) becomes integral to businesses in every sector, AI governance has emerged as crucial for ensuring responsible, effective, and ethical AI deployment. This guide explains what AI governance is, why it is critical, and how organizations can implement robust frameworks to manage AI risks and maximize benefits.

Understanding AI Governance

AI governance refers to the processes, policies, and tools designed to ensure AI systems are transparent, ethical, and aligned with organizational, regulatory, and societal values. According to IDC, AI governance brings diverse stakeholders—from data scientists and engineers to compliance officers and business leaders—together to manage AI risks and ensure ethical deployment throughout the AI lifecycle.

The primary objectives of AI governance include:

- Ensuring fairness and transparency

- Maintaining compliance with evolving regulations

- Managing risks such as bias, privacy loss, and security threats

- Enhancing accountability and explainability

Why AI Governance is Critical

AI technologies offer tremendous potential, from automating customer service to predicting machine failures in industrial settings. IDC predicts global AI investment will reach over $308 billion by 2026. However, alongside these opportunities, AI poses substantial risks, including bias, discrimination, privacy breaches, and unexplainable outcomes.

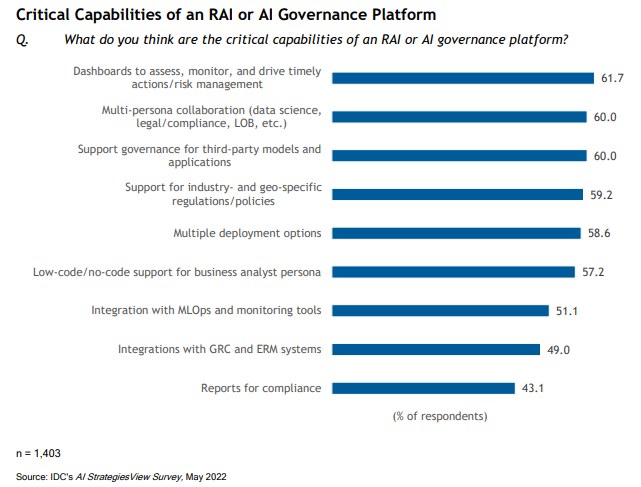

Effective AI governance addresses these challenges proactively. According to IDC’s 2022 AI StrategiesView Survey, the absence of robust governance is one of the biggest barriers to AI adoption. Without it, organizations risk:

- Damaged brand reputation

- Regulatory backlash

- Revenue loss

- Reduced public trust

- Legal liabilities

Key Components of AI Governance

Robust AI governance frameworks, such as IBM’s holistic approach and NIST’s AI Risk Management Framework (AI RMF), include several critical components:

1. Responsible AI Principles

Responsible AI principles commit an organization to building systems that align with human-centered values, fairness, and transparency. Organizations that uphold these principles build trust and confidence among employees, customers, and society.

2. AI Lifecycle Governance

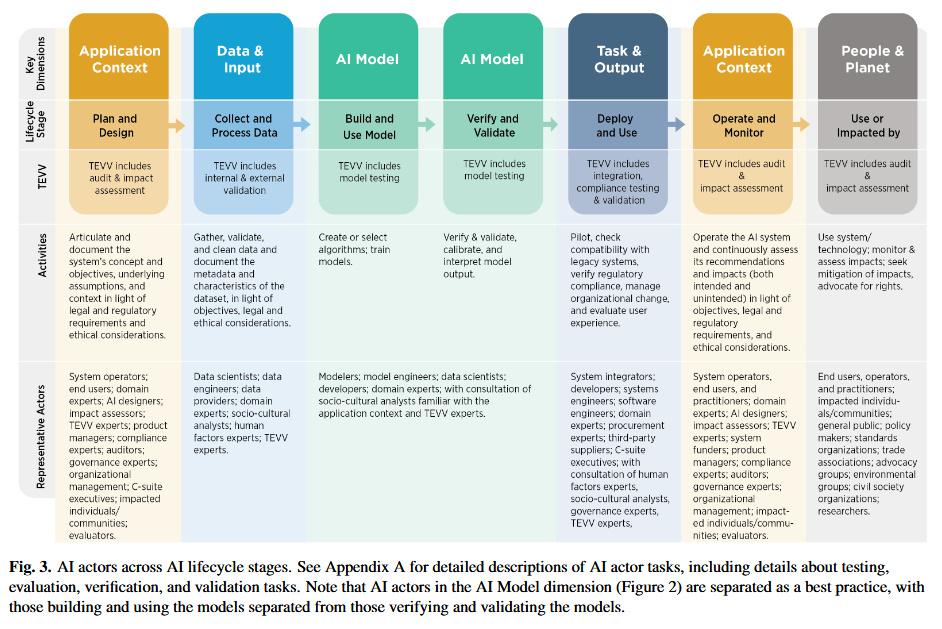

AI lifecycle governance involves ongoing oversight at every stage, from initial development through deployment and continuous monitoring. This approach helps ensure that models remain fair, accurate, and compliant over time.
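To make continuous monitoring more concrete, here is a minimal Python sketch of a periodic health check a team might run against a deployed binary classifier. It assumes the team has fresh labeled outcomes and a group attribute for each prediction, and it uses two illustrative metrics: accuracy and the demographic parity gap (the spread in positive-prediction rates across groups). The function `check_model_health`, the `HealthReport` type, and the threshold values are hypothetical choices for illustration, not part of any framework cited in this guide.

```python
from dataclasses import dataclass


@dataclass
class HealthReport:
    accuracy: float
    parity_gap: float
    passed: bool
    reasons: list[str]


def check_model_health(
    predictions: list[int],
    labels: list[int],
    groups: list[str],
    min_accuracy: float = 0.90,    # hypothetical threshold
    max_parity_gap: float = 0.10,  # hypothetical threshold
) -> HealthReport:
    """Flag a binary classifier whose accuracy or fairness has drifted."""
    if not predictions or not (len(predictions) == len(labels) == len(groups)):
        raise ValueError("inputs must be non-empty and the same length")

    # Accuracy: share of predictions that match the observed outcome.
    correct = sum(p == y for p, y in zip(predictions, labels))
    accuracy = correct / len(predictions)

    # Demographic parity gap: highest minus lowest positive-prediction rate
    # across the groups present in this batch.
    totals: dict[str, int] = {}
    positives: dict[str, int] = {}
    for p, g in zip(predictions, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + (1 if p == 1 else 0)
    rates = [positives[g] / totals[g] for g in totals]
    parity_gap = max(rates) - min(rates)

    # Collect a human-readable reason for every failed check.
    reasons: list[str] = []
    if accuracy < min_accuracy:
        reasons.append(f"accuracy {accuracy:.2f} below {min_accuracy:.2f}")
    if parity_gap > max_parity_gap:
        reasons.append(f"parity gap {parity_gap:.2f} above {max_parity_gap:.2f}")

    return HealthReport(accuracy, parity_gap, not reasons, reasons)


if __name__ == "__main__":
    report = check_model_health(
        predictions=[1, 0, 1, 1, 0, 0],
        labels=[1, 0, 1, 0, 0, 0],
        groups=["a", "a", "a", "b", "b", "b"],
    )
    # Both checks fail here: accuracy is 5/6 and the parity gap is 1/3.
    print(report.passed, report.reasons)
```

In practice, a failed report would feed the governance process (for example, triggering a review or a retraining decision) rather than silently blocking the model; which metrics and thresholds apply is a policy choice each organization has to make.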

3. Collaborative Risk Management

AI risk management must integrate with broader corporate governance frameworks. By embedding AI governance into existing risk management practices, organizations can better manage compliance and ethical risks.

4. Regulatory Excellence

As regulatory environments evolve rapidly, most notably with the EU’s AI Act, organizations must approach compliance proactively rather than reacting after the fact. Their compliance frameworks should track emerging AI regulations closely so that AI systems stay compliant as the rules change.

Implementing AI Governance: Best Practices

Adopt the Hourglass Model

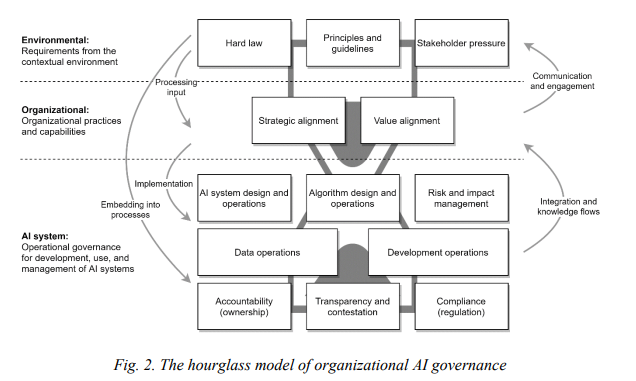

The Hourglass Model, introduced by Mäntymäki et al., offers a structured approach to AI governance. It includes:

- Environmental Layer: Managing external regulatory and stakeholder requirements.

- Organizational Layer: Aligning AI use with organizational strategies and ethical values.

- AI System Layer: Operationalizing governance across AI system development, data management, and risk monitoring.

Utilize NIST’s AI RMF

The NIST AI RMF provides a structured method for addressing AI-specific risks through four key functions:

- Govern: Establishing policies and accountability structures.

- Map: Identifying and understanding AI risks.

- Measure: Analyzing, assessing, and monitoring AI risks and system performance using quantitative and qualitative methods.

- Manage: Prioritizing identified risks and acting to mitigate them.
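One common way to put these four functions into day-to-day practice is a risk register. The Python sketch below is a minimal illustration, not something the AI RMF itself prescribes: each entry records who owns a risk (Govern), what the risk is (Map), how likely and severe it is (Measure), and which response was chosen (Manage). The 1 to 5 scales, the likelihood × impact score, and the response options are common risk-management conventions assumed here; the class names, example systems, and owners are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Response(Enum):
    # Common risk-response options; assumed here, not mandated by the AI RMF.
    MITIGATE = "mitigate"
    TRANSFER = "transfer"
    AVOID = "avoid"
    ACCEPT = "accept"


@dataclass
class RiskEntry:
    system: str                         # AI system the risk belongs to
    description: str                    # Map: the risk identified in context
    owner: str                          # Govern: who is accountable for it
    likelihood: int                     # Measure: 1 (rare) to 5 (almost certain)
    impact: int                         # Measure: 1 (minor) to 5 (severe)
    response: Optional[Response] = None  # Manage: chosen treatment, if any

    def __post_init__(self) -> None:
        # Reject scores outside the assumed 1-5 scales.
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Simple likelihood x impact scoring, an illustrative assumption.
        return self.likelihood * self.impact


def prioritize(register: list[RiskEntry]) -> list[RiskEntry]:
    """Return risks highest score first; ties keep their original order."""
    return sorted(register, key=lambda r: r.score, reverse=True)


def unmanaged(register: list[RiskEntry]) -> list[RiskEntry]:
    """Return risks that are mapped and measured but have no response yet."""
    return [r for r in register if r.response is None]


if __name__ == "__main__":
    register = [
        RiskEntry("loan-scoring", "Approval rates differ by age group",
                  "credit-risk lead", likelihood=3, impact=5),
        RiskEntry("support-chatbot", "Reply exposes customer account data",
                  "CISO", likelihood=2, impact=4, response=Response.MITIGATE),
        RiskEntry("support-chatbot", "Answers cite outdated policy",
                  "support ops", likelihood=4, impact=2),
    ]
    for risk in prioritize(register):
        print(risk.score, risk.system, risk.description)
    print("Awaiting a response:", [r.description for r in unmanaged(register)])
```

Keeping ownership, assessment, and response in one record makes gaps visible: a risk with no owner or no response is a governance failure that a simple query can surface.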

Foster Cross-Functional Collaboration

AI governance should involve cross-functional teams, including legal, IT, business operations, and ethics committees, to ensure comprehensive risk management and strategic alignment.

The Future of AI Governance

As AI continues to permeate all aspects of business and society, effective AI governance will transition from being advantageous to indispensable. Organizations investing early in comprehensive AI governance frameworks will not only manage risks effectively but also position themselves to leverage AI for sustainable, ethical, and profitable growth.

AI governance isn’t just good practice; it’s essential for building the trust necessary for AI technologies to thrive responsibly.


By understanding and implementing strong AI governance, organizations can confidently harness AI’s transformative potential while safeguarding against its inherent risks.