AI inferencing is the process where trained machine learning models analyze new data to make predictions or decisions in real-world applications. This phase transforms static trained algorithms into active systems that process live information and deliver actionable results.

When organizations deploy AI models after training, the models must work with data they have never seen before. AI inferencing represents this critical operational step where theoretical capabilities become practical tools. The process involves taking input data, running calculations through the trained model, and producing outputs like classifications, predictions, or recommendations.

The distinction between training and inferencing matters for businesses implementing AI systems. Training requires massive computational resources and time to build the model. Inferencing operates with lighter computational needs but must deliver fast, accurate results for daily operations.

Source: LinkedIn

How AI Inferencing Works

AI inferencing follows a systematic process that transforms incoming data into useful predictions. The trained model receives new information, applies learned patterns, and generates specific outputs for business decisions.

Source: Quix

Data Input and Preprocessing

Raw data enters the system in various formats — images as pixel arrays, text as character strings, or sensor readings as numerical streams. The preprocessing stage converts this information into the exact format the trained model expects. A medical imaging system might resize X-ray images to specific dimensions, while a fraud detection system normalizes transaction amounts and converts merchant categories into numerical values.

Model Computation

The trained model applies mathematical operations to process the prepared input. Neural networks perform matrix multiplications using weights and biases learned during training. Each layer extracts different patterns from the data. Image recognition models use convolution operations to detect edges and shapes, while natural language processing models employ attention mechanisms to understand word relationships.

Output Generation

The model produces numerical results that require conversion into actionable decisions. Classification models generate probability scores for different categories. A spam detection system might output 0.85 for spam probability and 0.15 for legitimate email probability. Post-processing transforms these scores into final classifications using threshold functions and confidence measures.

How AI Inferencing Differs from Training

Training and inferencing serve fundamentally different purposes in the AI lifecycle. Training builds the model by analyzing large labeled datasets over extended periods, while inferencing applies the completed model to individual data points for immediate predictions.

The computational requirements differ dramatically between these phases. Training typically consumes 10 to 100 times more computing power than inferencing. A fraud detection model might require weeks of training on powerful graphics processing units but can analyze individual transactions in milliseconds during inferencing.

Resource allocation patterns also contrast sharply:

- Training phase — Dedicated hardware, controlled environments, extended timeframes

- Inferencing phase — Production environments, strict latency requirements, varying workloads

Data processing approaches reflect these different objectives. Training uses carefully curated datasets with millions of examples, while inferencing processes real-world data as it arrives, often requiring immediate responses without extensive preprocessing time.

AI Inferencing Performance Optimization

Organizations implement specific techniques to make their inference systems faster and more cost-effective. Performance optimization focuses on reducing response time, increasing request throughput, and minimizing operational expenses.

Model Compression Techniques

Source: ResearchGate

Quantization converts model weights from 32-bit floating-point numbers to 8-bit integers, reducing memory usage by up to 75 percent while maintaining accuracy. Pruning removes less important neural network connections, achieving 50 to 90 percent reduction in model parameters. Knowledge distillation transfers capabilities from large models to smaller, efficient versions that deliver similar performance with fewer computational requirements.

Hardware Acceleration

Graphics processing units provide parallel processing that can accelerate inferencing by 10 to 20 times compared to standard central processing units. Specialized tensor processing units offer even greater efficiency for specific model architectures. Edge computing devices bring processing closer to data sources, reducing latency to under one millisecond for real-time applications.

Deployment Strategies

Batch processing groups multiple requests together to exploit parallel computation capabilities. Dynamic batching adjusts batch sizes based on incoming request patterns and latency requirements. Load balancing distributes requests across multiple model instances to prevent bottlenecks and maintain consistent performance during varying demand periods.

Enterprise Applications

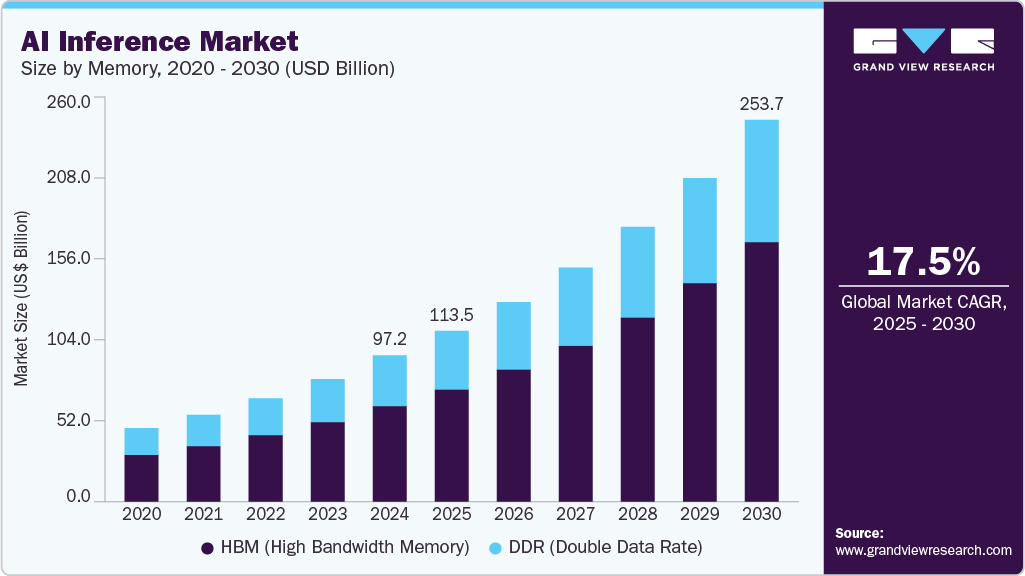

Source: Grand View Research

AI inferencing transforms business operations across industries by automating decision-making and accelerating response times in mission-critical applications.

Financial Services

Banks deploy real-time fraud detection systems that analyze transaction patterns, user behavior, and contextual information within milliseconds. Credit card companies process millions of transactions daily while maintaining false positive rates below one percent. Risk assessment models evaluate loan applications by analyzing income history, payment patterns, and debt ratios to determine approval decisions.

Healthcare Systems

Radiology departments use computer vision models to analyze medical images and assist physicians in identifying potential abnormalities. Emergency departments implement predictive models that identify patients at risk of deterioration based on vital signs and laboratory results. These systems process patient data continuously to provide early warning alerts for clinical staff.

Manufacturing Operations

Predictive maintenance systems analyze sensor data from machinery to forecast equipment failures two to four weeks in advance. Vibration sensors, temperature monitors, and acoustic detectors feed continuous data streams to models that reduce unplanned downtime by 30 to 50 percent compared to traditional maintenance schedules.

Quality control systems use computer vision to detect defects during production:

- Automotive assembly lines scan for paint defects and missing components

- Electronics manufacturers identify soldering errors and component placement issues

- Food processing facilities inspect for contamination and packaging problems

Retail and Marketing

Retail AI solutions optimize inventory management and personalize customer experiences through real-time recommendation engines. Marketing applications analyze customer behavior patterns to deliver targeted campaigns and predict purchase intentions across digital touchpoints.

Implementation Considerations

Deploying AI inferencing requires careful planning across technical, operational, and governance dimensions. Organizations evaluate their infrastructure, identify high-value use cases, and establish performance requirements before implementation.

Infrastructure Options

Cloud deployment provides scalable computing resources and managed services that automatically handle scaling and load balancing. Edge deployment moves processing closer to data sources for applications requiring immediate responses. Hybrid architectures combine cloud resources for complex analysis with edge components for real-time processing.

Security and Compliance

Data protection protocols encrypt information during transmission and storage within inference pipelines. Access controls limit which systems and personnel can interact with models and underlying data sources. Healthcare organizations comply with HIPAA regulations, while financial institutions follow GDPR requirements for customer data protection.

Quality Monitoring

Performance tracking measures accuracy, latency, and throughput across operational conditions. Organizations establish baseline metrics during deployment and monitor deviations that indicate model drift or system degradation. Bias detection procedures analyze prediction patterns across demographic groups to identify unfair or discriminatory outcomes.

Building Your AI Inferencing Strategy

Developing an effective inferencing strategy requires understanding your model requirements, deployment environment, and performance constraints. Organizations typically start by assessing current data infrastructure and identifying applications where inferencing can deliver measurable business value.

Technical architecture decisions impact system performance and operational costs. Central processing units work well for simple models and moderate computational demands. Graphics processing units excel at processing complex neural networks and high-throughput applications. Edge devices enable real-time processing for applications requiring immediate responses.

For small businesses implementing AI solutions, governance frameworks establish accountability structures for model maintenance, data quality, and system performance. The NIST AI Risk Management Framework provides structured guidance for identifying and mitigating risks throughout the inference lifecycle. Organizations implement monitoring systems to detect performance degradation and potential bias issues that may emerge over time.

Frequently Asked Questions

What’s the difference between batch and real-time AI inferencing?

Batch inferencing processes multiple data points together at scheduled intervals, like analyzing customer transactions once per hour. Real-time inferencing handles individual requests immediately as they arrive, like checking credit card transactions for fraud the moment someone makes a purchase. Batch processing offers higher efficiency, while real-time processing provides immediate responses.

Source: Tecton

How much computational power does AI inferencing require compared to training?

AI inferencing typically requires 10 to 100 times less computational power than training. A model that needs weeks of training on high-end graphics processing units can often generate predictions in milliseconds on standard servers. However, the exact requirements depend on model complexity and the number of simultaneous requests.

Can AI inferencing work without internet connectivity?

Yes, AI inferencing can operate offline through edge computing, where models run directly on local devices or servers. Smartphones, manufacturing equipment, and autonomous vehicles commonly use edge inferencing to make decisions without network connections. Edge deployment typically uses smaller, optimized models compared to cloud-based systems.

How do organizations measure AI inferencing accuracy?

Organizations compare model predictions against known correct answers using metrics like precision, recall, and error rates. Precision measures how many positive predictions were actually correct, while recall measures how many actual positive cases the model identified. Continuous monitoring in production environments helps detect when model performance degrades over time.

What happens when an inference model makes incorrect predictions?

Most enterprise AI systems include confidence scores with each prediction, allowing human operators to review cases where the model expresses uncertainty. Organizations establish thresholds where predictions below certain confidence levels trigger manual review processes. Error handling protocols vary by application — financial systems might flag suspicious transactions for investigation rather than automatically blocking them.