An AI accelerator is specialized computer hardware designed to speed up artificial intelligence and machine learning computations.

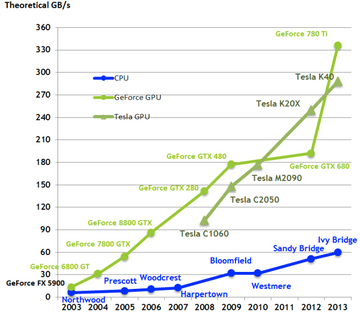

Traditional computer processors struggle with the massively parallel calculations required by modern AI systems. Standard central processing units (CPUs) excel at sequential tasks but cannot efficiently handle the billions of simultaneous operations needed for neural networks and deep learning models.

Source: ResearchGate

AI accelerators solve this problem through specialized architectures optimized for parallel processing. These chips can perform the same mathematical operation across thousands of data points simultaneously, dramatically reducing computation time for AI workloads.

The technology has become essential as organizations deploy AI across industries from healthcare diagnostics to autonomous vehicles. Understanding AI accelerator capabilities helps explain how modern AI applications achieve real-time performance that seemed impossible just a few years ago.

What AI Accelerators Actually Do

AI accelerators handle the specific mathematical operations that power artificial intelligence applications. A regular processor devotes its resources to running a small number of instruction streams as quickly as possible, whereas these chips perform thousands of simpler calculations simultaneously.

Think of it like the difference between having one person solve 1,000 math problems versus having 1,000 people each solve one problem at the same time. The second approach finishes much faster — that’s essentially how AI accelerators work.

Source: Softwareg.com.au

Neural networks require millions of matrix multiplications to recognize images, understand speech, or make predictions. AI accelerators contain hundreds or thousands of small processing cores that can execute these identical calculations on different pieces of data at once.
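To make the independence of these calculations concrete, here is a minimal pure-Python sketch. The function names `matmul` and `mac_count` and the sample matrices are illustrative, not taken from any library. Each output cell depends on only one row of the first matrix and one column of the second, so no cell has to wait for another. This loop visits the cells one by one, while an accelerator can hand them to different cores at the same time.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            # Cell (i, j) needs only row i of a and column j of b,
            # so every cell could be computed by a different core.
            out[i][j] = sum(a[i][k] * b[k][j] for k in range(inner))
    return out


def mac_count(rows, inner, cols):
    """Number of multiply-accumulate steps in one matrix product."""
    return rows * inner * cols


a = [[1.0, 2.0], [3.0, 4.0]]
b = [[5.0, 6.0], [7.0, 8.0]]
product = matmul(a, b)
print(product)                      # [[19.0, 22.0], [43.0, 50.0]]
print(mac_count(1024, 1024, 1024))  # 1073741824
```

A single 1024 by 1024 product already involves about a billion multiply-accumulate steps, which is why hardware that runs many of them at once matters.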

Main Types of AI Accelerators

Source: MarketsandMarkets

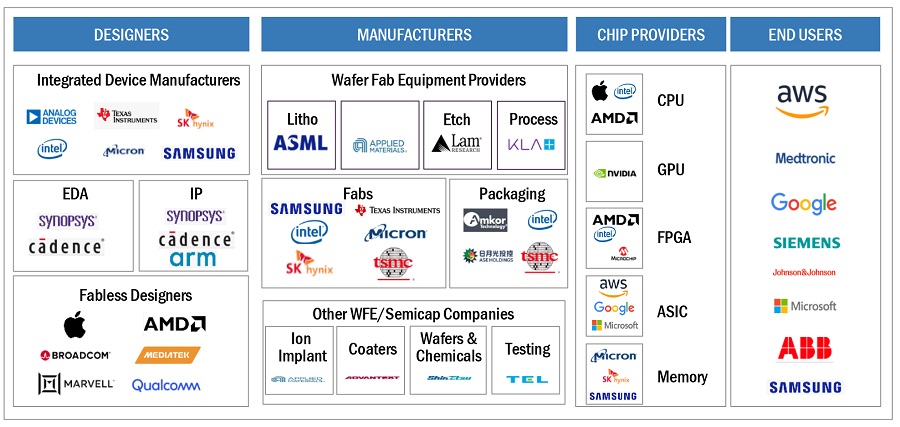

Graphics Processing Units (GPUs) represent the most common AI accelerators today. Originally built for video game graphics, GPUs contain thousands of small cores that excel at parallel processing. Companies like NVIDIA and AMD manufacture GPUs that researchers and businesses use for AI training and deployment.

Tensor Processing Units (TPUs) are Google’s custom chips built specifically for machine learning. They were designed alongside Google’s TensorFlow framework, can also be used from JAX and PyTorch through the XLA compiler, and are more efficient than general-purpose processors for the dense matrix workloads they target.

Neural Processing Units (NPUs) focus exclusively on neural network operations. Companies like Qualcomm and Apple build NPUs into smartphones and tablets to handle AI tasks while preserving battery life.

Field-Programmable Gate Arrays (FPGAs) contain large arrays of programmable logic blocks that can be reconfigured for specific AI tasks. Intel and Xilinx (now part of AMD) produce FPGAs that offer flexibility for organizations with changing AI requirements.

Application-Specific Integrated Circuits (ASICs) are custom-designed for particular AI applications. While expensive to develop, ASICs can deliver the highest performance and energy efficiency for their intended use cases. Google’s TPU is one well-known example.

How AI Accelerators Achieve Better Performance

AI accelerators outperform traditional processors through three key design differences that directly address AI computational requirements.

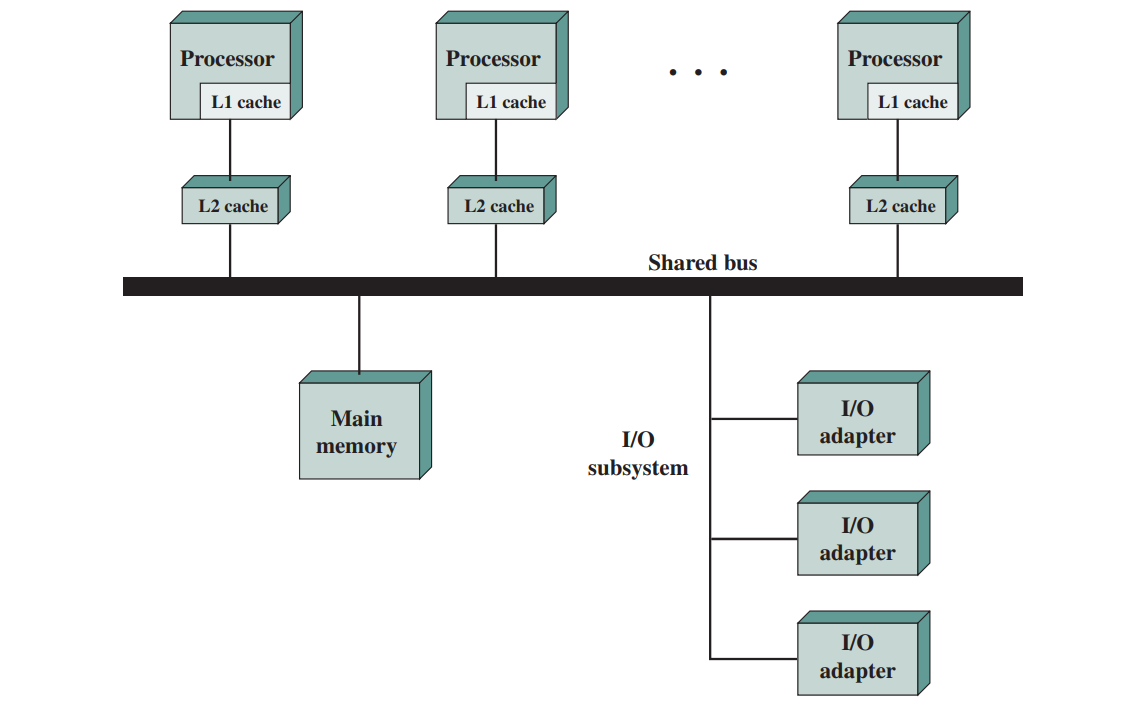

Parallel Processing Architecture

Regular processors are optimized to run a small number of instruction streams quickly, so even a multi-core CPU works on relatively few operations at once. AI accelerators contain hundreds or thousands of processing elements that work simultaneously on different parts of the same problem.

Source: Medium

Optimized Memory Systems

AI accelerators distribute small, fast memory units throughout the chip rather than relying on a single large memory bank. This design keeps data close to processing elements, reducing the time spent moving information around the chip.
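The benefit of keeping data near the arithmetic can be illustrated with a simple counting model. The sketch below is an assumption-laden toy, not a description of any particular chip: `tiled_matmul` is a hypothetical helper that copies small tiles of each input into a "local" buffer, does all arithmetic on those copies, and counts how many values it had to fetch from the large, slow memory.

```python
def tiled_matmul(a, b, tile):
    """Multiply square matrices tile by tile, counting slow-memory reads.

    Assumes len(a) is a multiple of `tile`. The read counter is a model
    of traffic to a large off-chip memory, not a hardware measurement.
    """
    n = len(a)
    out = [[0.0] * n for _ in range(n)]
    reads = 0
    for i0 in range(0, n, tile):
        for j0 in range(0, n, tile):
            for k0 in range(0, n, tile):
                # Fetch one tile of each input into small local buffers.
                a_tile = [row[k0:k0 + tile] for row in a[i0:i0 + tile]]
                b_tile = [row[j0:j0 + tile] for row in b[k0:k0 + tile]]
                reads += 2 * tile * tile
                # All arithmetic below touches only the local buffers.
                for i in range(tile):
                    for j in range(tile):
                        acc = 0.0
                        for k in range(tile):
                            acc += a_tile[i][k] * b_tile[k][j]
                        out[i0 + i][j0 + j] += acc
    return out, reads


n = 8
a = [[float(i + j) for j in range(n)] for i in range(n)]
identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

_, reads_untiled = tiled_matmul(a, identity, tile=1)
result, reads_tiled = tiled_matmul(a, identity, tile=4)
print(reads_untiled, reads_tiled)  # 1024 256
```

In this model, a tile of side 4 cuts slow-memory reads by a factor of 4 because each fetched value is reused several times before being discarded. Real accelerators apply the same idea with on-chip buffers sized to their hardware.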

Reduced Precision Calculations

While general-purpose computing typically relies on 32-bit or 64-bit floating-point numbers, AI accelerators often work with 16-bit or 8-bit formats. Neural networks usually retain most of their accuracy at lower precision, and narrower numbers need less memory, bandwidth, and energy per operation, so accelerators can complete more calculations within the same power budget.
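As a rough illustration of what working with 8-bit numbers involves, the following sketch applies a simple symmetric quantization scheme: every value is divided by a shared scale and rounded to an integer between -127 and 127. This is one common approach, shown under simplifying assumptions; the function names and sample weights are made up for the example, and production toolchains use more elaborate schemes.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the 8-bit integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.034, 1.0]  # illustrative values only
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(q)  # [50, -127, 3, 100]
print(max_error <= scale / 2 + 1e-12)  # True
```

Each stored value now takes 8 bits instead of 32, and the rounding error is bounded by half the scale. Whether that error is acceptable depends on the model, which is why reduced precision is usually validated against the full-precision result.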

Real-World Applications of AI Accelerators

Autonomous Vehicles

Self-driving cars process camera, radar, and sensor data in real-time to detect objects, predict movement, and make navigation decisions. AI accelerators enable vehicles to analyze multiple data streams simultaneously while maintaining the split-second response times required for safe operation.

Source: NVIDIA Developer

Medical Imaging

Hospitals use AI accelerators to analyze X-rays, MRIs, and CT scans for early disease detection. These systems can examine thousands of medical images quickly, helping radiologists identify potential issues that might be missed during manual review.

Manufacturing Quality Control

Factories deploy AI accelerators to inspect products on assembly lines, identifying defects immediately rather than discovering problems after production. This real-time analysis prevents faulty products from reaching customers.

Financial Services

Banks and trading firms use AI accelerators for fraud detection, analyzing transaction patterns to identify suspicious activity within milliseconds of a purchase or transfer.

Choosing the Right AI Accelerator

The best AI accelerator depends on your specific requirements across several key factors.

Workload Characteristics

- Training large AI models: GPUs typically provide the best balance of performance and flexibility

- Running AI applications on mobile devices: NPUs typically offer the best energy efficiency

- Real-time processing with minimal delay: FPGAs and ASICs can deliver the lowest and most predictable latency

- Customizable AI applications: FPGAs allow reconfiguration as requirements change

Performance Requirements

Consider whether your application prioritizes maximum speed, lowest power consumption, or balanced performance. High-throughput applications benefit from GPU parallelism, while battery-powered devices require the energy efficiency of specialized NPUs.

Integration Constraints

Evaluate compatibility with existing software frameworks and development tools. Organizations using TensorFlow might benefit from TPUs, while those requiring broad framework support often choose GPUs.
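The rules of thumb in this section can be summarized as a small decision helper. The sketch below is only a restatement of the guidance above in code form. The function name, parameter names, and category strings are hypothetical, and a real selection would also weigh budget, availability, and benchmark results.

```python
def suggest_accelerator(workload, battery_powered=False,
                        needs_reconfiguration=False, framework=None):
    """Return the accelerator family the guidance above points to.

    `workload` is one of "training", "realtime", or "inference"
    (labels invented for this example).
    """
    if battery_powered:
        return "NPU"            # energy efficiency comes first
    if needs_reconfiguration:
        return "FPGA"           # hardware can be reprogrammed later
    if workload == "realtime":
        return "FPGA or ASIC"   # lowest latency
    if workload == "training" and framework == "tensorflow":
        return "GPU or TPU"     # TPUs pair well with TensorFlow
    return "GPU"                # broad framework support, good default


print(suggest_accelerator("training"))                         # GPU
print(suggest_accelerator("inference", battery_powered=True))  # NPU
print(suggest_accelerator("realtime"))                         # FPGA or ASIC
```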

Common Questions About AI Accelerators

How much faster are AI accelerators compared to regular processors?

For well-suited machine learning tasks, AI accelerators are commonly reported to deliver roughly 10 to 100 times the performance of traditional CPUs, though the exact improvement depends on the specific application, the accelerator type, and how much of the workload can actually run on the accelerator.
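One reason the improvement varies is that only part of an application usually runs on the accelerator. Amdahl's law gives the end-to-end speedup in that case. The sketch below uses an illustrative split (90% of the work accelerated) that is an assumption for the example, not a measurement.

```python
def overall_speedup(accelerated_fraction, kernel_speedup):
    """Amdahl's law: speedup when only part of the work gets faster."""
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    if kernel_speedup <= 0:
        raise ValueError("kernel_speedup must be positive")
    remaining = 1.0 - accelerated_fraction
    return 1.0 / (remaining + accelerated_fraction / kernel_speedup)


# Hypothetical case: 90% of the runtime is accelerated 100x.
print(round(overall_speedup(0.9, 100), 2))  # 9.17
```

Even with a 100x faster accelerator, the remaining 10% of the work on the CPU limits the overall gain to about 9x in this example, so data loading and preprocessing often decide the real-world result.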

Source: Semiconductor Engineering

Can existing AI software run on accelerators without changes?

Many AI frameworks like TensorFlow and PyTorch include built-in support for various accelerators, allowing existing models to run with minimal modifications. However, achieving optimal performance often requires some optimization.
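As an example of what "minimal modifications" can look like, the sketch below selects a device in PyTorch and moves a small model and its input to it. It assumes PyTorch may or may not be installed and falls back to the CPU either way. The helper name `pick_device` is hypothetical, while `torch.cuda.is_available()` and `torch.backends.mps.is_available()` are existing PyTorch calls.

```python
try:
    import torch  # optional; without it the sketch just reports "cpu"
except ImportError:
    torch = None


def pick_device():
    """Return the name of the best available PyTorch device."""
    if torch is None:
        return "cpu"
    if torch.cuda.is_available():
        return "cuda"  # NVIDIA GPUs (and AMD GPUs on ROCm builds)
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return "mps"   # Apple silicon GPUs
    return "cpu"


device = pick_device()
print(device)

if torch is not None:
    # The model code itself is unchanged; only its placement differs.
    model = torch.nn.Linear(4, 2).to(device)
    batch = torch.randn(8, 4, device=device)
    print(model(batch).shape)  # torch.Size([8, 2])
```

Running on an accelerator this way is usually the easy part. Getting the most out of it typically involves further tuning, such as batch sizes and numeric precision.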

What’s the difference between AI accelerators and quantum computers for AI?

AI accelerators are specialized versions of traditional digital computers, while quantum computers use fundamentally different physics principles. Quantum computers remain largely experimental for AI applications, whereas accelerators are proven technologies deployed in production systems today.

Do AI accelerators work for all types of artificial intelligence?

AI accelerators excel at neural networks and deep learning but may not provide advantages for other AI approaches like symbolic reasoning or other largely sequential algorithms. The benefit depends on whether your AI application involves the parallel mathematical operations that accelerators optimize.

Implementation Considerations for Organizations

Infrastructure Requirements

AI accelerators often require more electrical power and cooling than standard computer equipment. Data centers may need infrastructure upgrades to support multiple accelerator cards, while edge deployments must consider power limitations.

Software Development Changes

Teams may need training on accelerator-specific programming models (NVIDIA’s CUDA is one example) or optimization techniques. Many accelerators work with standard AI frameworks, but achieving maximum performance often requires understanding the underlying hardware architecture.

Cost Analysis

While AI accelerators have higher upfront costs than general-purpose processors, they often provide better price-performance for AI workloads: each job finishes faster and typically consumes less energy, which lowers the cost per unit of work.
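A back-of-the-envelope comparison can make the price-performance argument concrete. The sketch below amortizes hardware cost over its service life, adds energy cost, and divides by throughput. The function name is hypothetical and every number in the example is a placeholder chosen for illustration, not a vendor figure or benchmark.

```python
def cost_per_million_inferences(hardware_cost, lifetime_hours, power_watts,
                                electricity_per_kwh, inferences_per_second):
    """Amortized hardware plus energy cost for one million inferences."""
    hourly_hardware = hardware_cost / lifetime_hours
    hourly_energy = (power_watts / 1000) * electricity_per_kwh
    inferences_per_hour = inferences_per_second * 3600
    return (hourly_hardware + hourly_energy) / inferences_per_hour * 1_000_000


# Placeholder inputs: replace with your own quotes and measurements.
cpu_cost = cost_per_million_inferences(
    hardware_cost=5_000, lifetime_hours=30_000, power_watts=300,
    electricity_per_kwh=0.10, inferences_per_second=100)
accelerator_cost = cost_per_million_inferences(
    hardware_cost=20_000, lifetime_hours=30_000, power_watts=700,
    electricity_per_kwh=0.10, inferences_per_second=2_000)

print(accelerator_cost < cpu_cost)  # True for these placeholder inputs
```

With these made-up inputs the accelerator costs more per hour but less per inference, because throughput grows faster than cost. The conclusion reverses if the accelerator sits idle most of the time, so utilization belongs in any real analysis.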

The rapid advancement of AI accelerator technology continues transforming how organizations deploy artificial intelligence applications. From enabling real-time autonomous vehicle decision-making to powering instant medical image analysis, these specialized processors have become fundamental infrastructure for modern AI systems.

For organizations, understanding these capabilities informs decisions about which AI technologies to adopt and whether existing infrastructure can support them. Whether you’re a small business exploring AI or an enterprise scaling it, accelerators will remain essential to making advanced AI practical across industries.