An AI chip is a specialized computer processor designed to handle artificial intelligence tasks more efficiently than traditional processors.

Modern artificial intelligence applications require massive amounts of parallel computing power that standard computer chips cannot provide effectively. Traditional central processing units (CPUs) excel at sequential tasks but struggle with the simultaneous calculations that machine learning algorithms demand. This computational gap has driven the development of specialized hardware designed specifically for AI workloads.

AI chips represent a fundamental shift in computer architecture, moving away from general-purpose designs toward processors optimized for specific types of mathematical operations. These specialized processors can perform thousands of calculations simultaneously, making them essential for training AI models and running AI applications in real-world environments.

What Are AI Chips

AI chips are specialized hardware components designed to accelerate artificial intelligence and machine learning tasks. These processors handle the complex mathematical calculations that power AI applications like voice recognition, image processing, and autonomous vehicles.

AI chips go by several names, including AI accelerators and neural processing units (NPUs). The terms overlap and are often used loosely, but all describe processors built specifically for artificial intelligence workloads rather than general computing tasks.

How AI Chips Differ from Regular Processors

Traditional CPUs have a small number of powerful cores optimized for executing instructions in sequence, making them excellent for tasks like word processing or web browsing. AI chips use a parallel processing architecture, handling thousands of calculations at the same time.

Machine learning algorithms require performing identical mathematical operations on massive datasets simultaneously. For example, when training an image recognition model, the same calculation might apply to thousands of pixels across millions of images. AI chips accomplish this through thousands of smaller processing units working independently on different data pieces.

Key Characteristics of AI Processors

Three main features distinguish AI chips from traditional processors:

- Specialized Architecture — optimized for matrix multiplication and neural network operations

- Parallel Processing Power — handles multiple calculations simultaneously instead of sequentially

- Energy Efficiency — performs AI tasks faster while consuming less power

The parallel processing capability allows AI chips to perform millions of calculations simultaneously. This approach matches how AI algorithms work, where the same mathematical operation often applies to large amounts of data at once.
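To make the matrix multiplication point concrete, here is a minimal pure-Python sketch. The function names and the tiny example matrices are illustrative assumptions, not taken from any particular chip or library. It shows that every output cell depends only on one row and one column, which is why hardware can compute many cells at once.

```python
def matmul(a, b):
    """Multiply an m x k matrix by a k x n matrix (both nested lists)."""
    m, k, n = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    # Each output cell uses one row of `a` and one column of `b` only,
    # so all m * n cells are independent and could run at the same time.
    return [
        [sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
        for i in range(m)
    ]


def multiply_accumulate_count(m, k, n):
    """Number of multiply-accumulate steps in an (m x k) by (k x n) product."""
    return m * k * n


inputs = [[1, 2, 3], [4, 5, 6]]        # 2 samples, 3 features each
weights = [[1, 0], [0, 1], [1, 1]]     # 3 features mapped to 2 outputs
outputs = matmul(inputs, weights)
print(outputs)                             # [[4, 5], [10, 11]]
print(multiply_accumulate_count(2, 3, 2))  # 12
```

This toy product needs only 12 multiply-accumulate steps. The count grows as m × k × n, so realistic layer sizes quickly reach billions of steps, which is the workload AI chips are built around.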

Types of AI Chips

The AI chip market includes several categories, each engineered for different computational demands. Understanding these categories helps explain why certain AI applications perform better with specific processors.

Graphics Processing Units for AI

Graphics Processing Units originally rendered images for video games but proved exceptionally effective for AI workloads. GPUs contain thousands of small cores that perform many simple calculations simultaneously, making them ideal for the matrix multiplications fundamental to machine learning.

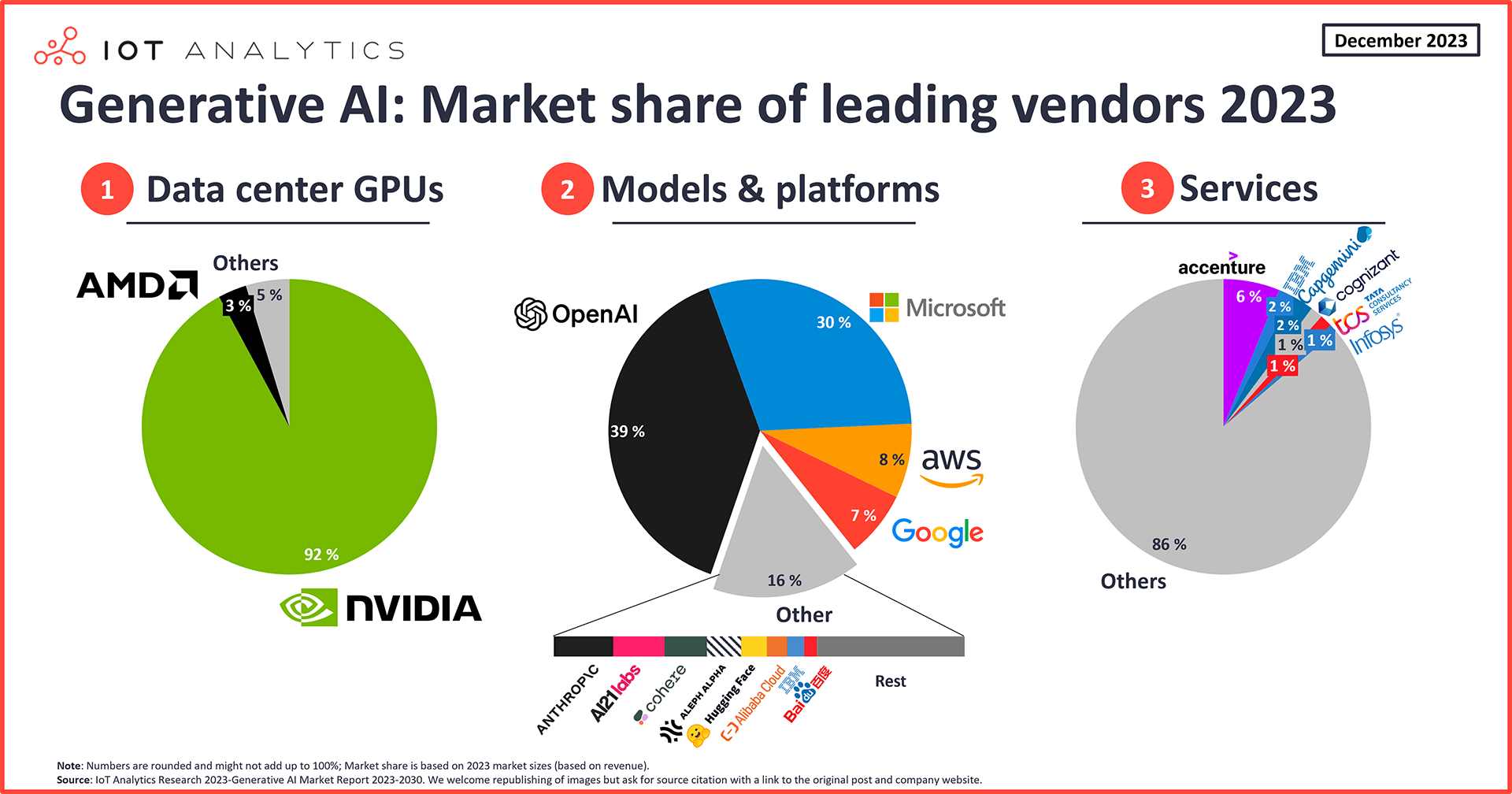

NVIDIA dominates the AI GPU market with approximately 65 percent market share, while AMD holds roughly 11 percent. GPUs excel at training deep learning models and running large-scale AI inference operations.

Neural Processing Units

Neural Processing Units are chips designed specifically to accelerate artificial neural networks, the layered models loosely inspired by how the human brain processes information. NPUs speed up deep learning tasks with dedicated circuitry for the multiply-and-accumulate operations that dominate neural network calculations.

NPUs integrate into smartphones, tablets, and laptops as part of system-on-chip designs. Apple includes neural engines in A-series processors, while Qualcomm incorporates AI engines in Snapdragon processors for mobile devices.

Field Programmable Gate Arrays

Field Programmable Gate Arrays are reprogrammable chips that can be customized for specific AI tasks after manufacturing. FPGAs offer flexibility because engineers can reconfigure the hardware to optimize performance for particular applications.

FPGAs consume significantly less power than GPUs, often operating on 10 watts compared to 75 watts or more for GPU systems. They prove particularly valuable for real-time applications requiring extremely low latency, such as autonomous vehicles and financial trading systems.

Application Specific Integrated Circuits

Application Specific Integrated Circuits are chips designed for one specific AI task, providing maximum efficiency but no flexibility. ASICs cannot be reprogrammed after manufacturing, which means they excel at their designated function but cannot adapt to different workloads.

While ASICs require higher upfront development costs and longer development timelines, they deliver superior performance and energy efficiency for well-defined AI tasks. The trade-off between specialization and flexibility makes ASICs suitable for high-volume applications with consistent AI workloads.

How AI Chips Work

AI chips operate through specialized computational mechanisms that differ fundamentally from traditional computer processors. While regular CPUs excel at handling diverse tasks sequentially, AI chips are engineered specifically for the mathematical operations that power artificial intelligence applications.

Parallel Processing Architecture

Neural networks require identical mathematical operations applied to vast arrays of data points simultaneously. AI chips accomplish this through thousands of smaller processing units working independently on different data pieces at the same time.

A typical desktop CPU might have 4 to 16 cores, while a modern GPU can contain thousands of cores optimized for simple mathematical operations. Each core handles separate calculations, allowing the processor to work on multiple parts of an AI problem simultaneously rather than one piece at a time.
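The idea of many units working on different pieces of the same data can be sketched with Python's standard library. This is an illustration of the partitioning pattern only: the worker count, the `scale_and_shift` operation, and the helper names are assumptions, and Python threads will not deliver the speedup that dedicated hardware cores do.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_and_shift(chunk):
    """The same simple operation applied to every element of a chunk."""
    return [2.0 * x + 1.0 for x in chunk]


def split(data, parts):
    """Split data into up to `parts` contiguous chunks of near-equal size."""
    size = max(1, -(-len(data) // parts))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_map(data, workers=4):
    """Apply scale_and_shift to each chunk on a separate worker."""
    chunks = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves chunk order, so results concatenate directly.
        results = pool.map(scale_and_shift, chunks)
        return [y for chunk in results for y in chunk]


data = [float(i) for i in range(10)]
print(parallel_map(data) == scale_and_shift(data))  # True
```

Because no chunk depends on another, the result matches the sequential version regardless of how many workers share the load. That independence is the property GPU-style designs exploit.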

Training vs Inference Operations

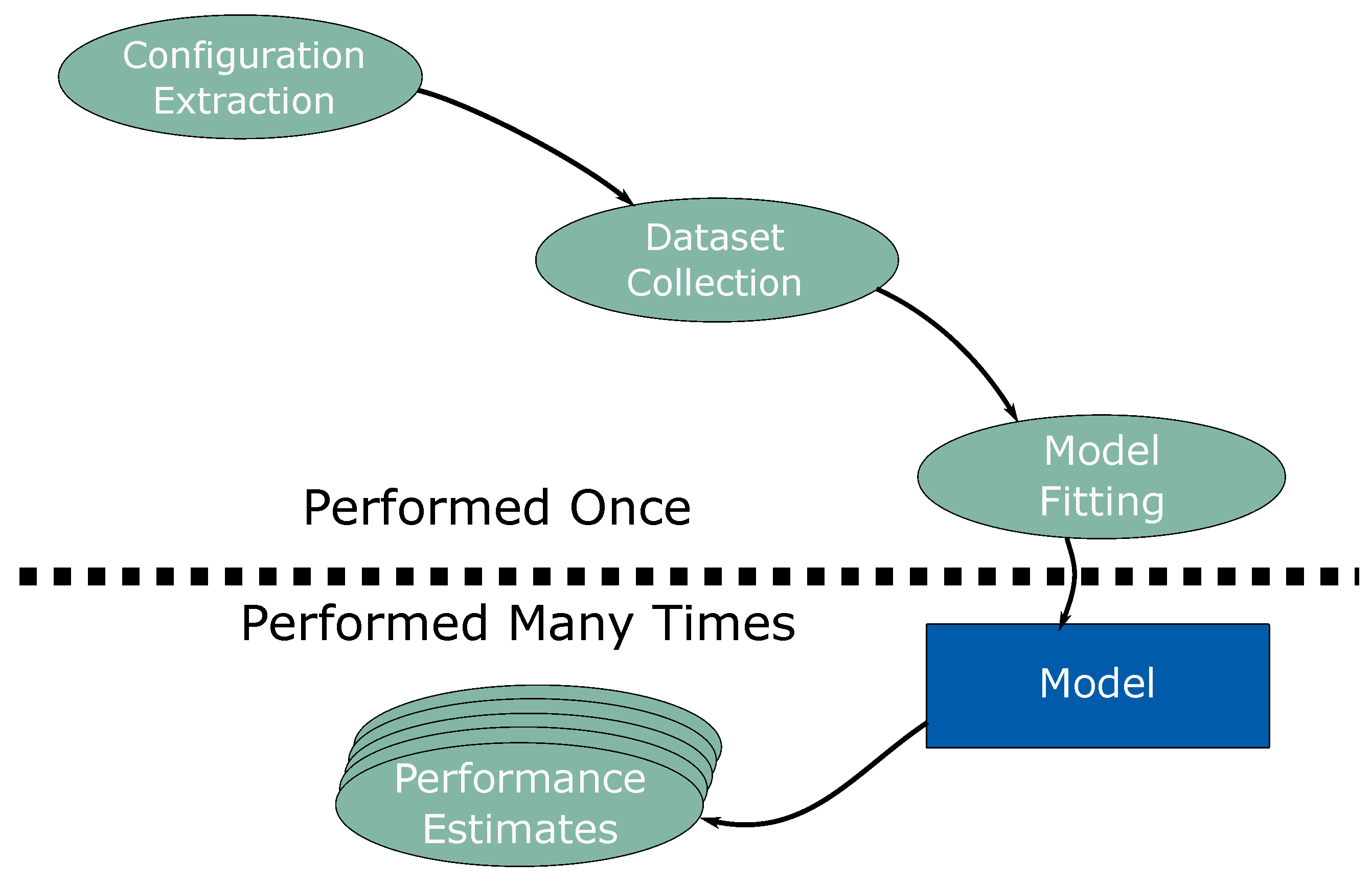

AI chips handle two distinct types of workloads: training and inference. Training involves teaching an AI model to recognize patterns by processing massive datasets and iteratively adjusting the model’s internal parameters to minimize prediction errors.

Inference occurs when a trained model applies learned patterns to generate predictions from new data. When a chatbot responds to questions or a recommendation system suggests products, the underlying model performs inference operations using previously learned knowledge.

Training typically requires more computational resources because each pass over the data updates potentially billions of model parameters, and the process repeats many times. Inference is generally less computationally intensive per request but runs continuously as deployed models process real-world data streams.
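The difference between the two workloads shows up even in a toy model. The sketch below fits a one-variable linear model with plain gradient descent; the data, learning rate, and epoch count are illustrative assumptions. Training loops over the data thousands of times and adjusts parameters on each pass, while inference is a single forward calculation with the parameters held fixed.

```python
def predict(w, b, x):
    """Inference: one forward pass with fixed parameters."""
    return w * x + b


def train(samples, epochs=2000, lr=0.05):
    """Training: repeated forward passes plus parameter updates."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in samples:
            error = predict(w, b, x) - y
            # Gradient of mean squared error with respect to w and b
            grad_w += 2 * error * x / n
            grad_b += 2 * error / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]  # follows y = 2x + 1
w, b = train(samples)          # many passes over the data
print(predict(w, b, 4.0))      # one pass; the result is close to 9.0
```

Here training performs 8,000 forward passes and 2,000 parameter updates, and inference performs one multiplication and one addition. Real models widen that gap enormously.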

AI Chip Applications Across Industries

AI chips have moved beyond research laboratories into real-world applications affecting millions of people daily. These specialized processors handle computationally intensive tasks that traditional computer chips cannot perform efficiently at the required speed and scale.

Autonomous Vehicles and Transportation

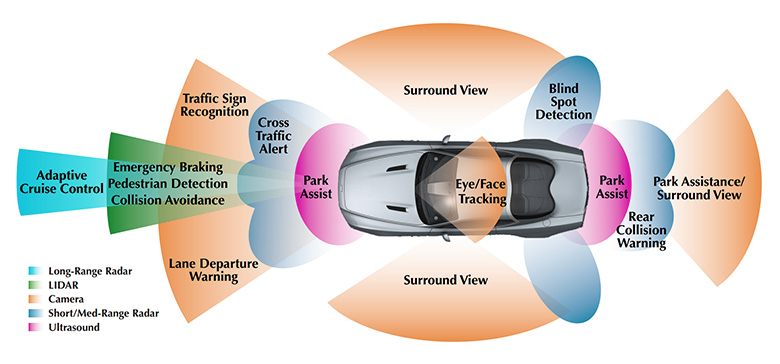

Self-driving cars represent one of the most demanding applications for AI chip technology. Autonomous vehicles generate enormous amounts of data from cameras, LiDAR sensors, radar systems, and GPS units operating simultaneously to create complete environmental awareness.

AI chips process sensor data in real time, performing object detection and classification to identify vehicles, pedestrians, traffic signs, and road markings. The processing happens continuously while vehicles move, requiring AI chips to analyze millions of data points every second.

Companies like Waymo utilize AI processing systems capable of performing trillions of operations per second to handle autonomous driving computational demands. The speed requirements are particularly strict because human safety depends on immediate responses to changing road conditions.

Healthcare and Medical Diagnostics

Medical applications leverage AI chips to analyze complex biological data and assist healthcare professionals in diagnosis and treatment planning. Medical imaging represents one of the largest healthcare applications, where processors analyze X-rays, MRI scans, CT scans, and other diagnostic images.

AI chips process medical images to identify patterns indicating specific diseases or conditions. Healthcare AI systems detect tumors in cancer screening, identify fractures in emergency medicine, and analyze retinal images for diabetic complications.

Diagnostic assistance systems use AI chips to analyze patient symptoms, medical history, and test results to suggest potential diagnoses for physicians to consider. Real-time patient monitoring systems in hospitals analyze continuous data streams from heart monitors and blood pressure sensors to identify deteriorating patient conditions.

Financial Services and Risk Management

Financial institutions use AI chips to process enormous volumes of transaction data and market information for fraud detection, risk assessment, and automated trading. Speed and accuracy requirements make AI chips essential for competitive financial operations.

Fraud detection systems analyze credit card transactions and bank transfers in real time, comparing each transaction against patterns of normal customer behavior and known fraud indicators. Banks process millions of transactions daily, requiring AI chips capable of handling massive parallel processing workloads.
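As a rough illustration of comparing a transaction against normal behavior, the sketch below flags amounts that sit far from a customer's historical average. This is a deliberately simple statistical stand-in, not how production fraud systems work (those typically rely on trained models with many features). The threshold and the sample history are assumptions.

```python
import statistics


def is_unusual(amount, history, threshold=3.0):
    """Flag an amount more than `threshold` standard deviations from the mean."""
    mean = statistics.mean(history)
    spread = statistics.stdev(history)
    if spread == 0:
        # All past amounts were identical; any different amount stands out.
        return amount != mean
    return abs(amount - mean) / spread > threshold


history = [42.0, 38.5, 51.0, 45.25, 40.0, 47.5]  # hypothetical past purchases
print(is_unusual(44.0, history))    # False
print(is_unusual(900.0, history))   # True
```

Each check is independent of every other customer's check, so the same comparison can run across millions of transactions in parallel.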

Algorithmic trading systems use AI chips to analyze market data, news feeds, and economic indicators to make buy and sell decisions faster than human traders. High-frequency trading firms execute thousands of trades per second based on market patterns identified through AI processing.

Manufacturing and Industrial Automation

Manufacturing companies use AI chips to optimize production processes, ensure quality control, and predict equipment maintenance requirements. Industrial AI applications require processors handling real-time data from multiple sensors and control systems simultaneously.

Predictive maintenance systems analyze vibration data, temperature readings, and performance metrics from industrial equipment to predict when machines will require maintenance. Quality control systems use AI chips to analyze images from production lines, identifying defective products at speeds exceeding human inspection capabilities.

Process optimization systems analyze production data and adjust manufacturing parameters to maximize efficiency and minimize waste. Supply chain optimization systems coordinate production schedules, manage supplier relationships, and respond to changes in customer demand patterns.

Benefits of AI Chips for Organizations

AI chips deliver measurable value for organizations through enhanced computational capabilities designed specifically for artificial intelligence workloads. These specialized processors address the fundamental mismatch between traditional computing architectures and the parallel processing requirements of machine learning algorithms.

Performance and Speed Improvements

AI chips reduce processing time for machine learning tasks by orders of magnitude compared to traditional CPUs. Training deep learning models that previously required weeks on CPU-based systems can complete in hours or days using specialized AI accelerators.

Real-time AI applications become feasible with AI chip acceleration. Autonomous vehicle systems process data from multiple sensors simultaneously, performing object detection and decision-making within milliseconds. Neural processing units execute thousands of matrix multiplication operations simultaneously, allowing AI chatbots to generate responses in real time.

Energy Efficiency and Cost Savings

AI chips consume significantly less power while delivering superior performance compared to general-purpose processors. Research from Oregon State University demonstrates new chip designs that consume 50 percent less energy than traditional approaches for equivalent computational tasks.

Operational cost reductions result from both lower energy consumption and reduced infrastructure requirements. Companies achieve the same computational output using fewer physical servers, reducing data center space requirements, cooling costs, and maintenance expenses.

Field-programmable gate arrays can operate on as little as 10 watts for edge AI applications, compared to 75 watts or more for GPU systems. This power efficiency enables deployment of AI capabilities in remote locations, mobile devices, and battery-powered systems.
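The 10-watt and 75-watt figures translate into energy use with simple arithmetic: energy equals power multiplied by time. The sketch below assumes constant power draw around the clock, which is a simplification.

```python
def daily_energy_kwh(power_watts, hours=24.0):
    """Energy in kilowatt-hours for a device drawing constant power."""
    return power_watts * hours / 1000.0


low_power_kwh = daily_energy_kwh(10)    # about 0.24 kWh per day
high_power_kwh = daily_energy_kwh(75)   # about 1.8 kWh per day
print(low_power_kwh, high_power_kwh)
```

Power draw alone does not settle the comparison. A fairer measure is energy per completed task, so a higher-wattage chip that finishes far more work per second can still come out ahead.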

Competitive Advantages Through AI Acceleration

Faster AI processing enables companies to deploy sophisticated AI applications that create competitive differentiation. Organizations implement real-time personalization systems, predictive maintenance programs, and automated decision-making tools that respond to market conditions within seconds.

Time-to-market advantages emerge when companies can iterate on AI models more rapidly. Reduced training times allow data science teams to test multiple model configurations and optimize performance through faster feedback cycles.

Customer experience improvements result from AI applications operating at interactive speeds. Recommendation engines update suggestions in real time based on user behavior, while fraud detection systems flag suspicious transactions instantly.

Choosing the Right AI Chip for Your Business

Selecting appropriate AI chips requires understanding specific workload requirements, performance constraints, and budget parameters. The decision impacts both immediate operational efficiency and long-term scalability of AI initiatives.

Assessing Your AI Workload Requirements

Determine whether your organization primarily needs training capabilities, inference acceleration, or both. Training workloads involve teaching AI models using large datasets and require massive computational resources for iterative parameter adjustments.

Training applications typically require higher precision arithmetic and substantial memory bandwidth to handle large datasets effectively. Graphics processing units excel at training tasks due to their parallel processing architecture and high memory bandwidth.

Inference applications focus on speed and energy efficiency rather than raw computational power. Field-programmable gate arrays can provide lower latency for some inference tasks while operating on significantly less power than equivalent GPU systems.

Performance requirements vary based on application latency tolerance and throughput demands. Real-time applications like autonomous vehicles require extremely low latency processing, while batch analytics systems can tolerate higher latency for better cost efficiency.
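The latency and throughput trade-off can be sketched with a simple linear cost model. The fixed overhead and per-item cost below are made-up illustrative values, not measurements from any chip. Grouping requests into larger batches spreads the fixed overhead and raises throughput, but every request in the batch waits longer.

```python
def batch_latency_ms(batch_size, fixed_overhead_ms=5.0, per_item_ms=0.5):
    """Time to process one batch under a simple linear cost model."""
    return fixed_overhead_ms + per_item_ms * batch_size


def throughput_per_s(batch_size, **cost):
    """Items completed per second when batches run back to back."""
    return batch_size / batch_latency_ms(batch_size, **cost) * 1000.0


for size in (1, 8, 64):
    print(size, batch_latency_ms(size), round(throughput_per_s(size)))
```

Under these assumed costs, a batch of 1 finishes in 5.5 ms and a batch of 64 takes 37 ms, while throughput rises roughly tenfold. A real-time system would favor the small batch and a batch analytics job the large one.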

Evaluating Performance and Cost Considerations

Performance evaluation encompasses processing power, memory bandwidth, and specialized computational capabilities. Different chip architectures excel at different mathematical operations, with some optimized for matrix multiplication while others accelerate convolution operations.

Memory requirements significantly impact both performance and cost considerations. High-bandwidth memory provides optimal performance for AI workloads but increases overall system cost. Organizations processing large datasets require substantial memory capacity, while simpler applications operate effectively with standard memory configurations.
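A quick estimate of memory needs comes from multiplying the parameter count by the bytes used per parameter. The sketch below covers model weights only. Training needs considerably more for gradients, optimizer state, and activations. The 7-billion-parameter model is a hypothetical example.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_memory_gb(num_params, precision):
    """Memory to hold model weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    print(precision, weights_memory_gb(7_000_000_000, precision))
```

For this hypothetical model the weights need 28 GB at 32-bit precision, 14 GB at 16-bit, and 7 GB at 8-bit. This is one reason lower-precision formats are popular for inference.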

Total cost of ownership includes initial hardware acquisition, power consumption, cooling infrastructure, and ongoing maintenance expenses. Energy-efficient chips reduce operational costs over time, particularly for large-scale deployments.
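The components of total cost of ownership can be combined in a small calculation. Every figure in the example call is a placeholder assumption (power draw, electricity price, cooling overhead, maintenance), and the model assumes the chip runs continuously at constant power.

```python
def total_cost_of_ownership(hardware_cost, power_watts, years, price_per_kwh,
                            cooling_overhead=0.5, annual_maintenance=0.0):
    """Hardware plus energy (including cooling) plus maintenance."""
    hours = years * 365 * 24
    energy_kwh = power_watts * hours / 1000.0
    # cooling_overhead: extra energy for cooling, as a fraction of chip energy
    energy_cost = energy_kwh * (1.0 + cooling_overhead) * price_per_kwh
    return hardware_cost + energy_cost + annual_maintenance * years


tco = total_cost_of_ownership(
    hardware_cost=10_000,      # placeholder purchase price
    power_watts=300,           # placeholder average draw
    years=3,
    price_per_kwh=0.10,        # placeholder electricity price
    annual_maintenance=500,    # placeholder yearly upkeep
)
print(round(tco, 2))           # about 12682.6
```

With these inputs, energy and upkeep add roughly a quarter on top of the purchase price over three years. The share grows with power draw, electricity price, and deployment length.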

Integration and Compatibility Factors

Compatibility with existing software frameworks determines implementation complexity and development resources required. Most AI development relies on frameworks like TensorFlow or PyTorch, or on interchange formats such as ONNX, with chip vendors providing varying support levels.

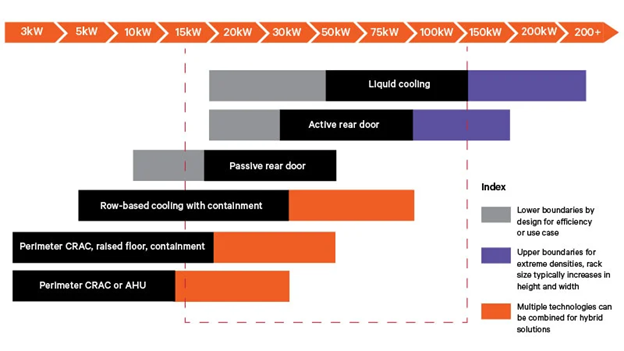

Infrastructure integration encompasses power delivery, cooling systems, and physical space requirements. High-performance AI chips often require specialized power supplies and advanced cooling solutions, particularly liquid cooling for dense deployments.

System architecture considerations include how AI chips connect with existing servers, storage systems, and networking infrastructure. Some solutions integrate directly into existing server platforms, while others require dedicated appliances or specialized configurations.

Implementation Considerations and Best Practices

Deploying AI chips within enterprise environments requires careful planning across multiple organizational levels. Organizations face complex choices between GPU-based systems, specialized AI accelerators, and custom silicon solutions.

Hardware selection involves evaluating trade-offs between performance, cost, and energy efficiency. Modern AI chips generate substantial heat and require advanced cooling solutions, often including liquid cooling systems for high-performance installations.

Integration challenges arise when connecting AI chips with existing enterprise systems and workflows. Organizations must ensure compatibility between new AI hardware and current data storage systems, networking infrastructure, and application frameworks.

Technical expertise represents a significant consideration for organizations implementing AI chip technology. The specialized nature of AI hardware requires personnel with experience in parallel computing, machine learning frameworks, and system optimization.

Budget planning involves both initial capital expenditure and ongoing operational costs. Beyond hardware purchase price, organizations must account for installation, training, maintenance, and potential infrastructure upgrades.

Testing and validation procedures help ensure AI chip implementations meet performance expectations and reliability requirements. Organizations typically conduct proof-of-concept deployments to evaluate real-world performance before committing to large-scale implementations.
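A proof-of-concept evaluation usually starts with repeatable latency measurements. The sketch below is a minimal timing harness using only the standard library. The `sample_workload` function is a stand-in for a real inference call, and the run counts are arbitrary assumptions.

```python
import statistics
import time


def benchmark(fn, runs=50, warmup=5):
    """Return median and approximate 95th percentile latency of fn() in ms."""
    for _ in range(warmup):
        fn()  # warm-up runs are not timed
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        timings.append((time.perf_counter() - start) * 1000.0)
    timings.sort()
    p95_index = min(len(timings) - 1, int(0.95 * len(timings)))
    return {"median_ms": statistics.median(timings),
            "p95_ms": timings[p95_index]}


def sample_workload():
    # Stand-in for a real inference call on the hardware under test
    return sum(i * i for i in range(10_000))


result = benchmark(sample_workload)
print(result)
```

Reporting a high percentile alongside the median matters for real-time applications, where occasional slow responses can be more damaging than a slightly higher average.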

Frequently Asked Questions About AI Chips

How much do enterprise-grade AI chips typically cost?

AI chip costs vary significantly based on processor type and deployment scale. High-end GPUs like NVIDIA’s enterprise-grade accelerators cost between $10,000 and $40,000 per unit. Field-programmable gate arrays typically range from $1,000 to $15,000 per chip depending on capabilities.

Beyond chip purchase price, organizations face additional infrastructure costs. Data center deployments require specialized cooling systems, high-bandwidth memory, and power infrastructure that can add 50 to 100 percent to total system cost.

Can existing enterprise systems be upgraded with AI acceleration?

Many existing enterprise systems can incorporate AI acceleration through add-in cards or external processing units. Modern servers with available PCIe slots can accommodate GPU accelerators or FPGA cards relatively easily.

Older systems may face limitations in power supply capacity or cooling capabilities that make AI chip upgrades impractical. Some legacy applications require software modifications to take advantage of AI acceleration capabilities.

What security measures apply specifically to AI chip implementations?

AI chip implementations require standard cybersecurity protections plus additional safeguards for AI-specific vulnerabilities. Organizations implement secure boot processes, encrypted communications between chips, and hardware-based attestation to verify chip authenticity.

AI models and training data require protection through secure hardware enclaves that isolate sensitive computations from other system processes. Organizations implement model encryption, secure key management, and monitoring systems to detect unauthorized access attempts.

How do organizations measure return on investment from AI chip purchases?

ROI measurement involves tracking multiple performance and cost metrics. Organizations measure processing speed improvements, comparing task completion times before and after AI acceleration implementation. Energy efficiency gains often provide ongoing operational cost savings.

Revenue impact assessment includes new capabilities enabled by faster AI processing, such as real-time analytics or enhanced customer experiences. Organizations track reduced labor costs from automated processes and improved accuracy in decision-making systems.
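The core ROI metrics reduce to a few ratios. The sketch below uses hypothetical figures throughout and ignores factors such as depreciation and the time value of money.

```python
def speedup(before_seconds, after_seconds):
    """How many times faster a task completes after acceleration."""
    return before_seconds / after_seconds


def roi(total_gain, total_cost):
    """Return on investment as a fraction of cost (0.5 means 50 percent)."""
    return (total_gain - total_cost) / total_cost


def payback_months(investment, monthly_savings):
    """Months until cumulative savings cover the initial investment."""
    return investment / monthly_savings


print(speedup(3600, 300))            # 12.0
print(roi(36_000, 24_000))           # 0.5
print(payback_months(24_000, 2_000)) # 12.0
```

In this hypothetical case a job that took an hour now takes five minutes, and a $24,000 investment that saves $2,000 per month pays for itself in a year.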

What ongoing maintenance do AI chip systems require?

AI chip systems require regular software updates including driver updates, firmware patches, and AI framework optimizations. Performance monitoring tracks chip utilization, temperature, and processing throughput to identify potential issues before they impact operations.

Hardware maintenance includes cleaning cooling systems, monitoring power consumption, and replacing components as they reach end of life. Software optimization involves tuning AI models for specific chip architectures and updating algorithms as new optimization techniques become available.