An AI data center is a specialized computing facility designed to handle the intensive processing requirements of artificial intelligence applications and machine learning workloads.

These facilities represent a fundamental shift from traditional data centers. While conventional data centers focus on general computing tasks like email servers and websites, AI data centers are optimized for massively parallel processing. Graphics processing units (GPUs) and tensor processing units (TPUs) take over from traditional processors as the primary computing engines.

The computational demands of AI applications create unique infrastructure challenges. Training a single large language model can consume hundreds to thousands of megawatt-hours of electricity and require weeks of continuous processing. Modern AI server racks draw between 48 and 120 kilowatts of power, compared with 6 to 10 kilowatts in traditional configurations.
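The training-energy figure comes from simple arithmetic: number of accelerators × average power per accelerator × hours of training. The sketch below uses hypothetical values (1,000 accelerators averaging 0.7 kW each for 30 days). It counts accelerator power only and ignores cooling, networking, and storage, so real facility consumption would be higher.

```python
def training_energy_mwh(num_accelerators: int, avg_kw_per_accelerator: float, days: float) -> float:
    """Estimate the accelerator energy of a training run in megawatt-hours.

    Counts accelerator power only; cooling, networking, and storage are ignored.
    """
    hours = days * 24
    kwh = num_accelerators * avg_kw_per_accelerator * hours
    return kwh / 1000  # 1 MWh = 1,000 kWh


# Hypothetical run: 1,000 accelerators averaging 0.7 kW each for 30 days.
print(f"{training_energy_mwh(1000, 0.7, 30):.0f} MWh")  # prints "504 MWh"
```

Even this modest hypothetical cluster lands in the hundreds of megawatt-hours; larger clusters or longer runs push the total into the thousands.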

What Makes AI Data Centers Different from Regular Data Centers

AI data centers are built around a fundamentally different kind of workload than traditional computing facilities. Regular data centers rely mainly on central processing units (CPUs), which are optimized to execute individual streams of instructions very quickly on a relatively small number of cores. AI data centers rely on graphics processing units, which can perform thousands of calculations simultaneously.

Source: Statista

The power requirements tell the story clearly. Traditional server racks use 6 to 10 kilowatts of electricity. AI server racks demand 48 to 120 kilowatts, comparable to the average power draw of 40 to 100 homes concentrated in a single rack.
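The household comparison is a unit conversion from a rack's continuous draw to an average home's continuous draw. The sketch below assumes an average household uses about 10,500 kWh per year (roughly 1.2 kW on average); that figure is an assumption, and a different household baseline changes the result proportionally.

```python
HOURS_PER_YEAR = 8760
# Assumption: an average household uses about 10,500 kWh per year,
# i.e. an average continuous draw of roughly 1.2 kW.
ASSUMED_HOME_KWH_PER_YEAR = 10_500


def home_equivalents(rack_kw: float, home_kwh_per_year: float = ASSUMED_HOME_KWH_PER_YEAR) -> float:
    """Number of average homes whose combined continuous draw equals one rack."""
    avg_home_kw = home_kwh_per_year / HOURS_PER_YEAR
    return rack_kw / avg_home_kw


for label, kw in [("traditional, high end", 10), ("AI, low end", 48), ("AI, high end", 120)]:
    print(f"{label}: {kw} kW ≈ {home_equivalents(kw):.0f} homes")
```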

AI workloads also run differently. Regular applications tend to use resources in bursts throughout the day, while AI training jobs run at or near full capacity for weeks without stopping. This sustained electrical demand can strain power grids and calls for specialized cooling systems.

Specialized Hardware That Powers AI Operations

Graphics processing units serve as the backbone of AI computing because they excel at parallel processing. A typical server CPU has tens of cores (high-end models reach roughly a hundred or more), while modern GPUs contain thousands of smaller cores working together. This architecture suits neural networks, whose core operations, mostly large matrix multiplications, break down into millions of arithmetic operations that can run simultaneously.

Source: ResearchGate
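Why matrix multiplication maps so well onto thousands of cores: every element of the output is an independent dot product of one row and one column, so no element has to wait for any other. The sketch below makes that independence explicit by submitting each output cell to a thread pool. It is illustrative only; Python threads will not speed up this pure-Python arithmetic, whereas a GPU runs many such dot products on separate cores at the same time. The function name `matmul_by_cell` is hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def matmul_by_cell(a: list[list[float]], b: list[list[float]], workers: int = 4) -> list[list[float]]:
    """Multiply a (n x m) by b (m x p), computing each output cell as a separate task."""
    inner = len(b)
    cols = len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")

    def cell(ij: tuple[int, int]) -> float:
        i, j = ij
        # Each output element depends only on row i of a and column j of b,
        # so every cell can be computed independently of the others.
        return sum(a[i][k] * b[k][j] for k in range(inner))

    indices = list(product(range(len(a)), range(cols)))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        flat = list(pool.map(cell, indices))  # map returns results in input order
    return [flat[r * cols:(r + 1) * cols] for r in range(len(a))]


print(matmul_by_cell([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```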

Tensor processing units take specialization even further. Google designed these chips specifically for AI workloads, eliminating features needed for general computing and focusing entirely on neural network operations. The latest TPU systems contain over 9,000 chips working together and deliver computational performance measured in exaflops.
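Cluster-level figures in exaflops come from multiplying chip count by per-chip throughput. The sketch below uses the "over 9,000 chips" scale from the text and a hypothetical 1,000 TFLOPS per chip chosen for round numbers; actual per-chip peaks depend on the chip generation and numeric precision, and sustained performance is lower than peak.

```python
def aggregate_exaflops(num_chips: int, peak_tflops_per_chip: float) -> float:
    """Peak aggregate throughput of a chip cluster, in exaFLOPS.

    1 exaFLOPS = 10**18 FLOPS = 10**6 TFLOPS. This is a peak figure only.
    """
    return num_chips * peak_tflops_per_chip / 1_000_000


# Hypothetical per-chip figure:
print(aggregate_exaflops(9_000, 1_000))  # 9.0
```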

Custom AI accelerators push specialization further still. Companies design these chips for specific AI tasks such as image recognition or language processing. By dropping components those tasks don't need, such processors can reportedly achieve 10 to 100 times better energy efficiency than general-purpose hardware on the workloads they target.

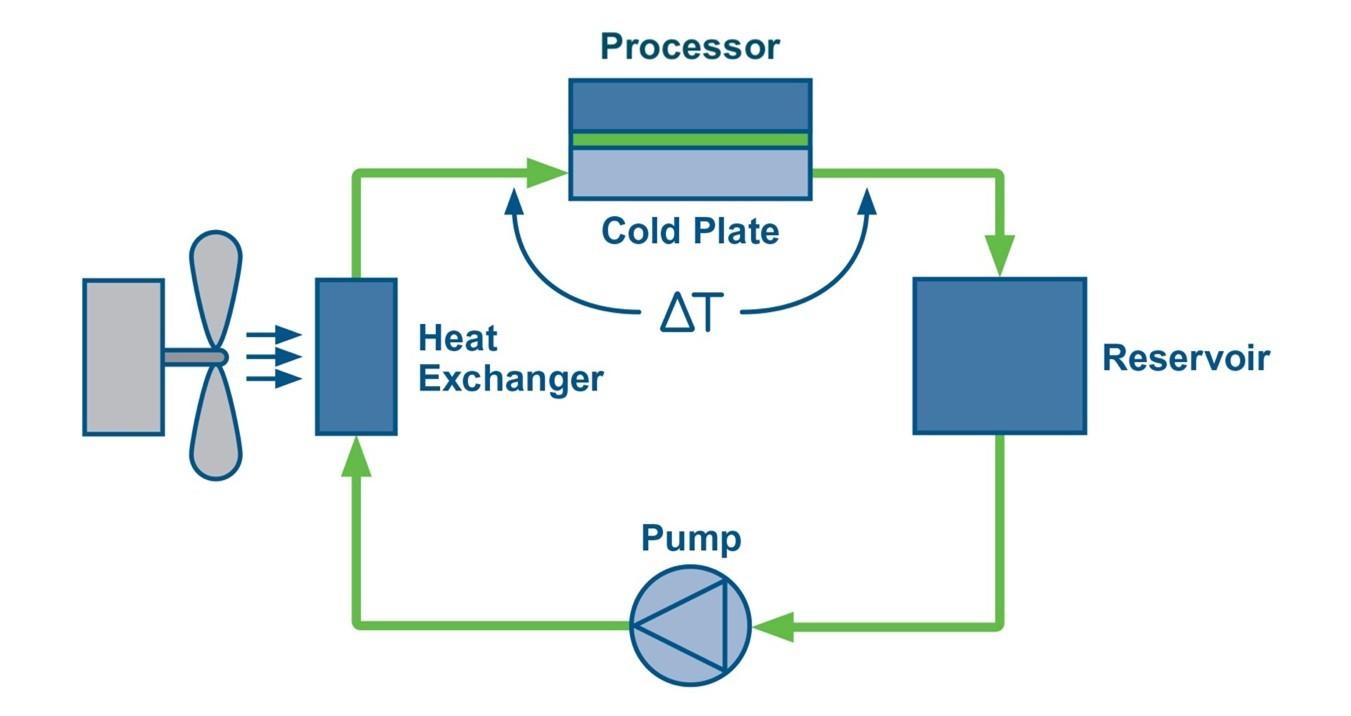

Advanced Cooling Systems Handle Extreme Heat

Traditional air conditioning struggles with the heat generated by AI hardware. Once server racks exceed roughly 40 kilowatts of power consumption, air cooling becomes ineffective, and AI processors generate so much heat that liquid cooling becomes a necessity rather than an option.

Source: Flex Power Modules

Direct-to-chip liquid cooling removes heat right at the processor. Cold plates mounted on graphics processing units contain channels through which coolant circulates, usually a water-based mixture in a sealed loop, though some systems use dielectric fluids. The coolant absorbs thermal energy at the source and carries it away much more efficiently than air-based systems can.
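The efficiency gap follows from the heat-transfer relation Q = ṁ · c_p · ΔT: the coolant flow needed to remove a given heat load depends on the fluid's specific heat and density. The sketch below compares air and water for a hypothetical 100 kW rack with an assumed 10 K coolant temperature rise, using approximate room-temperature properties (assumed values; water-glycol mixtures have a somewhat lower specific heat than pure water, but the gap remains very large).

```python
# Approximate fluid properties near room temperature (assumed values).
AIR_CP = 1005.0         # specific heat of air, J/(kg·K)
AIR_DENSITY = 1.2       # kg/m^3
WATER_CP = 4186.0       # specific heat of water, J/(kg·K)
WATER_DENSITY = 1000.0  # kg/m^3


def volumetric_flow_m3_per_s(heat_w: float, cp: float, density: float, delta_t_k: float) -> float:
    """Coolant volume flow needed to carry away heat_w watts with a delta_t_k temperature rise.

    From Q = m_dot * cp * dT: m_dot = Q / (cp * dT), then divide by density for volume.
    """
    mass_flow = heat_w / (cp * delta_t_k)  # kg/s
    return mass_flow / density


rack_heat_w = 100_000  # hypothetical 100 kW rack
delta_t = 10.0         # assumed coolant temperature rise, K

air = volumetric_flow_m3_per_s(rack_heat_w, AIR_CP, AIR_DENSITY, delta_t)
water = volumetric_flow_m3_per_s(rack_heat_w, WATER_CP, WATER_DENSITY, delta_t)
print(f"air:   {air:.2f} m^3/s")
print(f"water: {water * 1000:.2f} L/s")
print(f"air needs about {air / water:,.0f}x the volume flow of water")
```

Moving several cubic meters of air per second through a single rack is impractical, while a few liters of water per second is routine plumbing, which is why dense AI racks shift to liquid.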

Immersion cooling represents the most advanced thermal management approach. Entire servers operate while submerged in dielectric fluid that doesn’t conduct electricity. The fluid absorbs heat from all components simultaneously, enabling power densities that would be impossible with air cooling.

High-Speed Networks Connect Thousands of Processors

AI training requires constant communication between processors working on the same model. When thousands of graphics processing units collaborate on training a large language model, they exchange information continuously to stay synchronized.

Source: ResearchGate
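In data-parallel training, this synchronization typically means summing (and then averaging) gradient vectors across all processors after each step, an operation known as all-reduce. Ring all-reduce is one widely used algorithm for it: workers pass chunks around a ring, first accumulating partial sums (reduce-scatter) and then circulating the finished sums (all-gather), so each worker sends about 2(n−1)/n times its buffer size no matter how many workers there are. The pure-Python simulation below is a sketch of the data movement only, assuming equal-length buffers divisible by the worker count; production communication libraries run this over real interconnects with many further optimizations.

```python
def ring_allreduce(buffers: list[list[float]]) -> list[list[float]]:
    """Simulate ring all-reduce: every worker ends with the element-wise sum of all buffers.

    Assumes all buffers share one length that is divisible by the number of workers.
    """
    n = len(buffers)
    size = len(buffers[0])
    if any(len(b) != size for b in buffers) or size % n:
        raise ValueError("buffers must share a length divisible by the worker count")
    chunk = size // n
    data = [list(b) for b in buffers]  # each worker's local copy

    def bounds(c: int) -> tuple[int, int]:
        return c * chunk, (c + 1) * chunk

    # Phase 1, reduce-scatter: at each step worker i passes one chunk to worker i+1,
    # which adds it to its own copy. After n-1 steps, worker i holds the complete
    # sum of chunk (i + 1) % n.
    for step in range(n - 1):
        messages = []
        for i in range(n):
            lo, hi = bounds((i - step) % n)
            messages.append(((i + 1) % n, lo, hi, data[i][lo:hi]))  # snapshot before delivery
        for dst, lo, hi, payload in messages:
            for k, value in zip(range(lo, hi), payload):
                data[dst][k] += value

    # Phase 2, all-gather: circulate the completed chunks so every worker receives all of them.
    for step in range(n - 1):
        messages = []
        for i in range(n):
            lo, hi = bounds((i + 1 - step) % n)
            messages.append(((i + 1) % n, lo, hi, data[i][lo:hi]))
        for dst, lo, hi, payload in messages:
            data[dst][lo:hi] = payload
    return data


# Three hypothetical workers, each holding a 6-element gradient vector.
grads = [[1.0] * 6, [2.0] * 6, [3.0] * 6]
summed = ring_allreduce(grads)
averaged = [[v / len(grads) for v in buf] for buf in summed]
print(averaged[0])  # [2.0, 2.0, 2.0, 2.0, 2.0, 2.0]
```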

InfiniBand technology provides the ultra-high-speed connections this coordination requires. Current InfiniBand links carry 400 gigabits per second or more per port, with switch latencies below one microsecond. By comparison, standard office networks typically operate at 1 to 10 gigabits per second.
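A simple latency-plus-bandwidth model shows what these link speeds mean in practice. The sketch below estimates how long a hypothetical 10 GB transfer (for example, a large batch of gradients) takes on each link class; it ignores protocol overhead, congestion, and the fact that real transfers are spread across many links at once.

```python
def transfer_seconds(num_bytes: float, link_gbps: float, latency_s: float = 0.0) -> float:
    """Idealized time to move num_bytes over a single link.

    time = latency + size_in_bits / bandwidth; protocol overhead and congestion are ignored.
    """
    bits = num_bytes * 8
    return latency_s + bits / (link_gbps * 1e9)


payload = 10e9  # hypothetical 10 GB payload
for name, gbps in [("400 Gb/s interconnect", 400), ("10 Gb/s office LAN", 10), ("1 Gb/s office LAN", 1)]:
    print(f"{name}: {transfer_seconds(payload, gbps):.2f} s")
```

Because training repeats this kind of exchange at every step, a 40x difference in link speed compounds into hours or days over a long run.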

The network architecture itself also differs from traditional designs. Many AI data centers use “fat tree” topologies, in which aggregate link capacity increases toward the core of the network so that many parallel paths connect any two processors. Spreading traffic across these paths keeps congestion from delaying synchronization, which matters for training jobs that can represent weeks of computational work.
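The classic three-tier "k-ary" fat tree, built entirely from identical k-port switches, gives a feel for how these networks scale. Real AI clusters often use variants of this design (more tiers, different oversubscription ratios), so treat the sketch below as an idealized model rather than a description of any particular facility.

```python
def fat_tree_capacity(k: int) -> dict:
    """Size of an idealized three-tier k-ary fat tree built from k-port switches.

    k pods, each with k/2 edge and k/2 aggregation switches, plus (k/2)**2 core switches.
    Each edge switch uses k/2 ports for hosts and k/2 ports upward, which is what
    gives every host full bisection bandwidth in this design.
    """
    if k % 2:
        raise ValueError("k must be even")
    half = k // 2
    edge = agg = k * half
    core = half * half
    return {
        "hosts": k * half * half,       # k pods x k/2 edge switches x k/2 hosts = k^3/4
        "switches": edge + agg + core,  # = 5k^2/4
        "core_switches": core,
    }


for k in (16, 32, 64):
    print(k, fat_tree_capacity(k))
```

Host count grows with the cube of the switch port count, which is how fat trees reach tens of thousands of processors without any single oversized switch.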

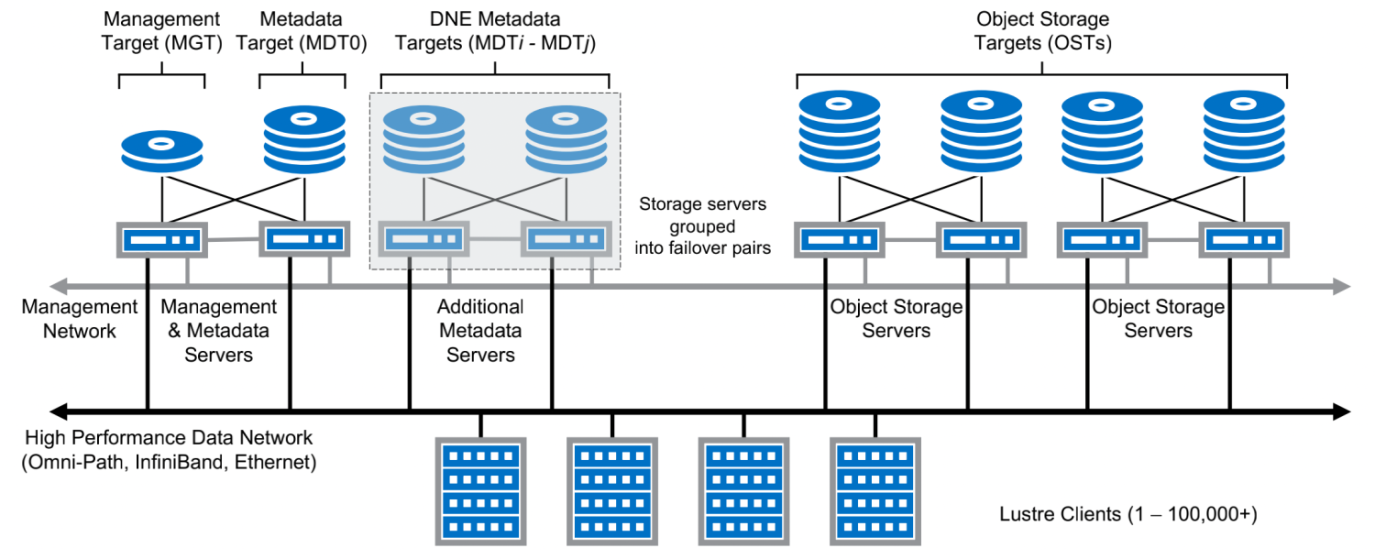

Storage Systems Handle Massive AI Datasets

AI applications work with datasets that dwarf traditional business data. Training modern language models requires processing terabytes of text, while computer vision systems analyze millions of images. These datasets demand storage systems designed for sustained high-speed access.

Source: Alibaba Cloud

Parallel file systems stripe data across hundreds of storage devices while presenting a single unified view to AI applications. When a training job needs data, the system retrieves pieces from multiple storage servers simultaneously, maximizing throughput and minimizing wait times. Storage is typically organized into tiers:

- High-performance solid-state drives — Store frequently accessed data and active model checkpoints for immediate retrieval

- High-capacity traditional storage — House complete datasets and archived model versions for long-term retention

- Intelligent caching systems — Predict which data AI applications will need next and preload it into high-speed memory
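The striping that lets parallel file systems read from many servers at once can be sketched as a round-robin layout. The `stripe_size` and `stripe_count` parameters mirror the kind of settings file systems such as Lustre expose, but `stripe_layout` itself is a hypothetical illustration, not any file system's actual placement logic.

```python
from collections import defaultdict

MIB = 1024 * 1024


def stripe_layout(file_size: int, stripe_size: int, stripe_count: int) -> dict:
    """Map each storage server to the (offset, length) pieces of a file it stores.

    Round-robin striping: piece i lives on server i % stripe_count.
    """
    layout = defaultdict(list)
    offset = 0
    index = 0
    while offset < file_size:
        length = min(stripe_size, file_size - offset)  # last piece may be shorter
        layout[index % stripe_count].append((offset, length))
        offset += length
        index += 1
    return dict(layout)


# Hypothetical 10 MiB file, 1 MiB stripes, spread over 4 servers.
layout = stripe_layout(10 * MIB, 1 * MIB, 4)
for server, pieces in sorted(layout.items()):
    print(server, [off // MIB for off, _ in pieces])
```

Because consecutive pieces land on different servers, a large sequential read is served by all four servers in parallel rather than by one.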

Power Infrastructure Supports Massive Electrical Demands

AI data centers can consume as much electricity as a small city. A single large AI facility can require 100 to 300 megawatts of continuous power, enough to supply roughly 75,000 to 225,000 homes. Electrical grids were generally not designed for such concentrated demand, which creates new challenges.

Specialized power distribution systems deliver electricity safely at these scales. Power is stepped down in several stages, from utility transmission voltages to the low voltages AI processors run on. Uninterruptible power supplies and backup generators keep outages from interrupting training runs, since an interruption can discard all computation performed since the last saved checkpoint.
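Backup power reduces interruptions but cannot eliminate hardware failures, so long training runs also save periodic checkpoints. A common first-order rule for how often to checkpoint is Young's approximation, T ≈ √(2·C·M), where C is the time to write one checkpoint and M is the mean time between failures. The numbers below are hypothetical.

```python
import math


def young_checkpoint_interval(checkpoint_cost: float, mtbf: float) -> float:
    """Young's first-order optimal checkpoint interval, sqrt(2 * C * M).

    Both arguments (and the result) use the same time unit.
    """
    return math.sqrt(2 * checkpoint_cost * mtbf)


# Hypothetical: checkpoints take 5 minutes to write, and the whole cluster
# experiences a failure about once every 24 hours (1,440 minutes).
interval = young_checkpoint_interval(5, 24 * 60)
print(f"checkpoint roughly every {interval:.0f} minutes")  # checkpoint roughly every 120 minutes
```

Checkpointing more often wastes time writing state; checkpointing less often loses more work per failure. The square-root rule balances the two.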

Grid integration becomes critical when multiple AI data centers operate in the same region. Utility companies often require infrastructure upgrades to support the sustained high-power loads that AI facilities create.

Security Protects Valuable AI Assets

AI data centers contain intellectual property worth millions of dollars. Trained AI models represent competitive advantages that companies protect as carefully as trade secrets. The facilities housing these assets require security measures beyond those used in traditional data centers.

Physical security includes biometric access controls, multi-layer perimeter defenses, and monitoring systems designed to protect against both external threats and insider risks. Digital security focuses on encrypted storage of AI models and secure communication channels between distributed processing systems.

AI-specific security threats add another layer of complexity. Adversarial attacks attempt to manipulate AI models through corrupted training data or malicious inputs during operation. Security systems monitor for these threats while maintaining the performance characteristics essential for AI workloads.
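One basic safeguard against tampering with stored training data or model files is an integrity manifest: record a SHA-256 digest of each file while it is known to be good, then re-check before use. The sketch below uses only Python's standard library, and the helper names are hypothetical. It detects changes made after the manifest was recorded; it cannot detect data that was already poisoned before that point, and it is not a substitute for encryption.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()


def build_manifest(paths: list[Path]) -> dict[str, str]:
    """Record the current digest of each file."""
    return {str(p): sha256_file(p) for p in paths}


def changed_files(manifest: dict[str, str]) -> list[str]:
    """Return paths whose current contents no longer match the recorded digest."""
    return [p for p, expected in manifest.items() if sha256_file(Path(p)) != expected]


def demo() -> list[str]:
    with tempfile.TemporaryDirectory() as tmp:
        a, b = Path(tmp, "a.bin"), Path(tmp, "b.bin")
        a.write_bytes(b"training shard A")
        b.write_bytes(b"training shard B")
        manifest = build_manifest([a, b])
        b.write_bytes(b"tampered shard B")  # simulate an unauthorized change
        return [Path(p).name for p in changed_files(manifest)]


print(demo())  # ['b.bin']
```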

Planning Your AI Infrastructure Investment

Organizations considering AI infrastructure face complex decisions about building, buying, or partnering for their computing needs. Building dedicated facilities provides maximum control but requires substantial capital investment and specialized expertise. Cloud services offer immediate access without infrastructure management but can become expensive for sustained high-volume operations.

The planning process starts with understanding your specific AI workload characteristics. Language model training demands massive parallel processing power, while real-time inference applications prioritize low-latency responses. Different AI applications create different infrastructure requirements that affect design decisions.

For businesses exploring AI implementation, partnering with AI consulting services can help evaluate computational requirements and infrastructure options without the complexity of building in-house expertise.

Cost considerations extend beyond initial hardware purchases to include ongoing electricity consumption, cooling system operation, and specialized maintenance requirements. AI infrastructure represents a long-term investment that organizations evaluate based on their computational requirements and business objectives.
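Ongoing electricity cost can be estimated from continuous load, hours per year, and energy price. The sketch below uses hypothetical inputs (1 MW of AI hardware at $0.08/kWh) and an assumed `overhead_factor` standing in for cooling and power-distribution losses on top of the hardware's own draw; actual prices and overheads vary widely by site.

```python
HOURS_PER_YEAR = 8760


def annual_energy_cost(it_load_kw: float, price_per_kwh: float, overhead_factor: float = 1.0) -> float:
    """Yearly electricity cost for a constant load.

    overhead_factor is an assumed multiplier (>= 1) for cooling and power-distribution
    losses on top of the IT load; 1.0 means IT load only.
    """
    return it_load_kw * overhead_factor * HOURS_PER_YEAR * price_per_kwh


# Hypothetical: 1 MW of AI hardware at $0.08/kWh, with and without 30% facility overhead.
print(f"${annual_energy_cost(1_000, 0.08):,.0f} per year (IT load only)")
print(f"${annual_energy_cost(1_000, 0.08, 1.3):,.0f} per year (with overhead)")
```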

Frequently Asked Questions About AI Data Centers

How much electricity does an AI data center use compared to a regular data center?

AI data centers draw far more electricity per rack than traditional data centers. A typical AI server rack uses 48 to 120 kilowatts of power, roughly 5 to 20 times the 6 to 10 kilowatts of a conventional server rack. Large AI facilities can require 100 to 300 megawatts of continuous power.

Can existing data centers be converted to support AI workloads?

Converting traditional data centers for AI use faces significant limitations. The electrical infrastructure typically can’t deliver the power densities AI hardware requires. Cooling systems designed for conventional servers become inadequate when AI racks generate several times more heat than the racks they were built for.

Network infrastructure also requires substantial upgrades. AI workloads need high-bandwidth, low-latency connections between processors that most existing facilities don’t provide. While partial conversions are possible, they often prove more expensive than purpose-built AI facilities.

What types of AI applications require dedicated data center infrastructure?

Large-scale AI model training typically requires dedicated data center infrastructure because of its intensive computational demands. Training advanced language models, computer vision systems, and scientific AI models can occupy resources continuously for weeks or months.

Real-time AI inference serving millions of users also benefits from specialized infrastructure. Applications like financial fraud detection and medical imaging analysis require consistent low-latency responses that general-purpose data centers can struggle to provide.

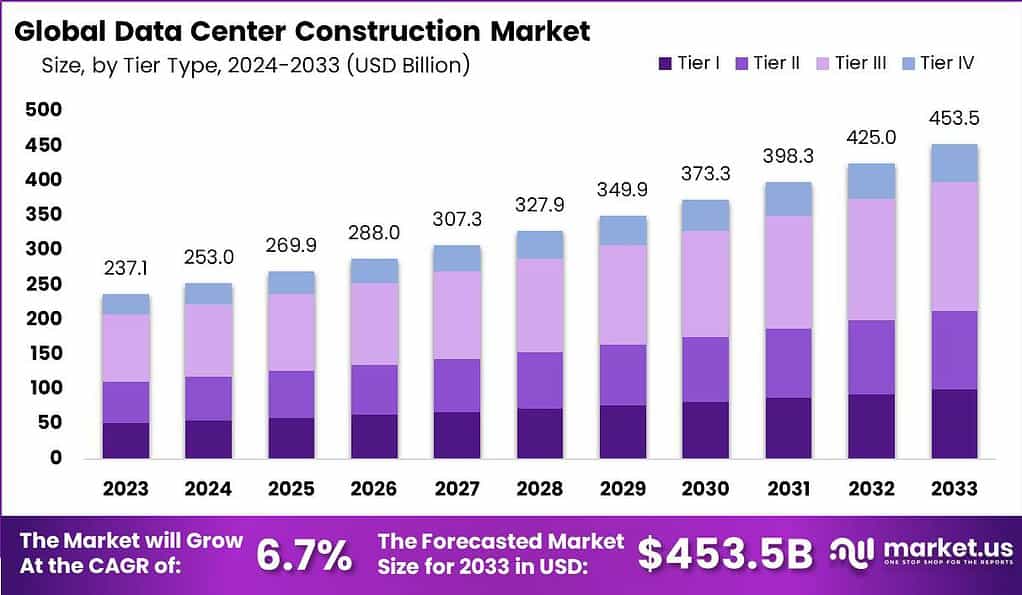

How long does it take to build an AI data center from start to finish?

Source: Market.us Scoop

AI data center construction typically takes 18 to 36 months from initial planning to operational status. The planning and design phase requires 6 to 12 months for site selection, engineering specifications, and regulatory approvals.

Construction duration depends on facility size and complexity but generally takes 12 to 24 months. Commissioning and testing add another 3 to 6 months to ensure all systems operate correctly before production deployment. Because these phase estimates add up to 21 to 42 months, the overall 18-to-36-month range implies that phases often overlap. Retrofit projects may complete faster but often require extensive electrical and cooling modifications.