Explainable Artificial Intelligence (XAI) refers to artificial intelligence systems that can provide clear, understandable reasons for their decisions and outputs. Traditional AI models often work like “black boxes,” making accurate predictions without revealing how they reach their conclusions. XAI transforms these opaque systems into transparent tools that humans can understand and trust.

The growing complexity of AI systems has created a critical gap between performance and transparency. While advanced machine learning models can achieve impressive accuracy in tasks like medical diagnosis or loan approval, their decision-making processes remain hidden from users. This opacity creates serious challenges when AI systems make decisions that affect people’s lives, careers, or access to services.

Recent regulatory developments have turned AI transparency from a technical preference into a legal obligation in some settings. The European Union’s General Data Protection Regulation (GDPR) restricts solely automated decisions that significantly affect individuals and entitles those individuals to meaningful information about the logic involved. Similar rules are emerging elsewhere, making explainable AI important for compliance in many industries.

How Explainable AI Works

Explainable AI transforms complex machine learning algorithms into transparent systems that can provide clear reasoning for their outputs. When an AI system recommends a medical treatment or approves a loan application, XAI explains which factors influenced the decision and how those factors led to the final outcome.

The core concept involves creating AI systems that can provide accompanying evidence or reasons for their processes and outputs. XAI enables users to understand not just what an AI system decided, but the specific reasoning path it followed to reach that conclusion.

Three foundational elements form the basis of effective explainable AI systems:

- Transparency — AI systems openly reveal their decision-making processes and allow users to examine the factors that influence outputs

- Interpretability — AI systems present their reasoning in ways that humans can understand and follow

- Human comprehension — AI systems tailor their explanations to match the knowledge level and needs of different users

XAI vs Black Box AI Models

Traditional black box AI models hide their decision-making processes, making it impossible for users to understand why the system reached specific conclusions. These systems might accurately predict which loans will default or which medical images show disease, but they can’t explain their reasoning.

Explainable AI systems provide the opposite approach. They reveal their decision-making logic through feature importance scores, visual highlights in images, or step-by-step reasoning chains. Users can see which patient symptoms led to a diagnosis or which financial factors influenced a credit decision.

The National Institute of Standards and Technology (NIST) sets out four principles for explainable AI systems in its report NISTIR 8312. These principles provide a framework for developing and evaluating XAI implementations.

- Explanation requires systems to deliver accompanying evidence or reasons for all outputs and processes. AI systems provide some form of explanation that users can access, regardless of whether the explanation is perfect or complete.

- Meaningful mandates that systems provide explanations that are understandable to individual users. Different users require different types of explanations based on their roles, technical backgrounds, and decision-making needs.

- Explanation accuracy ensures that explanations correctly reflect the system’s actual process for generating outputs. The explanations faithfully represent the reasoning behind decisions rather than providing misleading or simplified accounts.

- Knowledge limits requires that systems only operate under conditions for which they were designed and when they reach sufficient confidence in their outputs. AI systems acknowledge their limitations and uncertainty levels to users.
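The knowledge limits principle can be sketched as a thin wrapper that declines to answer when an input falls outside the conditions the model was designed for, or when confidence is too low. This is a minimal illustration, not a reference implementation: `decide_with_limits`, `toy_model`, the supported ranges, and the 0.8 threshold are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple


@dataclass
class Decision:
    label: Optional[str]  # None means the system declined to answer
    confidence: float
    note: str


def decide_with_limits(
    predict_proba: Callable[[Dict[str, float]], Dict[str, float]],
    features: Dict[str, float],
    supported_ranges: Dict[str, Tuple[float, float]],
    min_confidence: float = 0.8,
) -> Decision:
    # Decline inputs outside the ranges the model was designed for.
    for name, (low, high) in supported_ranges.items():
        value = features.get(name)
        if value is None or not (low <= value <= high):
            return Decision(None, 0.0, f"'{name}' is outside the supported range")

    probabilities = predict_proba(features)
    label, confidence = max(probabilities.items(), key=lambda kv: kv[1])

    # Decline when the model is not confident enough in its best guess.
    if confidence < min_confidence:
        return Decision(None, confidence, "confidence below threshold; refer to a human")
    return Decision(label, confidence, "within design conditions")


def toy_model(features: Dict[str, float]) -> Dict[str, float]:
    # Hypothetical stand-in for a trained classifier.
    p = min(max(features["income"] / 100_000, 0.0), 1.0)
    return {"approve": p, "deny": 1.0 - p}


ranges = {"income": (0, 200_000)}
confident = decide_with_limits(toy_model, {"income": 90_000}, ranges)
uncertain = decide_with_limits(toy_model, {"income": 55_000}, ranges)
out_of_range = decide_with_limits(toy_model, {"income": 500_000}, ranges)
```

Returning an explicit "declined" result, rather than a low-confidence label, keeps the uncertainty visible to whoever consumes the output.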

Types of Explainable AI Methods

Explainable AI methods fall into two main categories based on when transparency gets built into the system. The first approach creates models that are naturally transparent from the beginning. The second approach adds explanations to complex models after they finish training.

Interpretable Models by Design

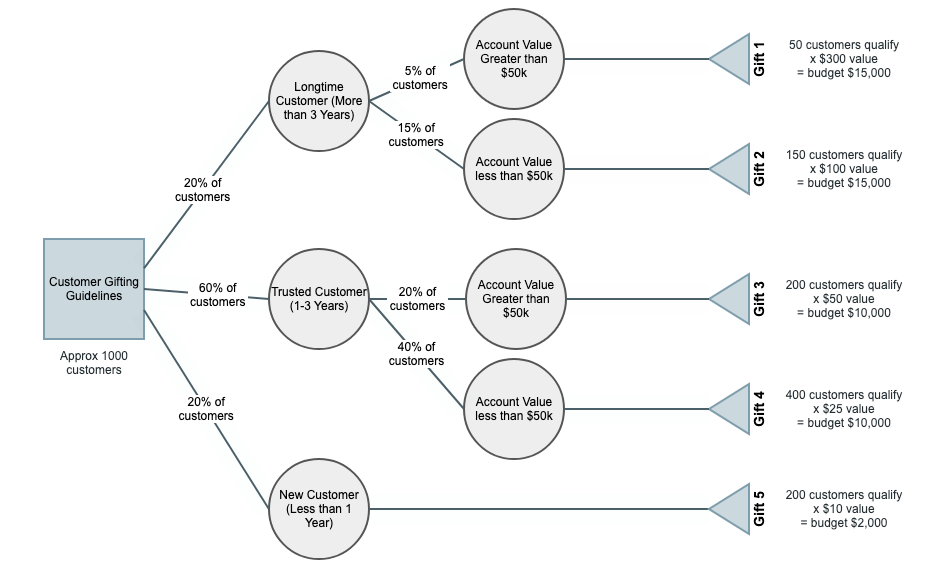

Some AI models work like open books where you can follow every step of their reasoning. Decision trees represent one of the most transparent AI approaches. These models create branching paths of yes-or-no questions that lead to final decisions.

Source: Gliffy
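The branching yes-or-no questions of a decision tree can be illustrated with a tiny hand-written tree that records every question it asks. The thresholds and feature names (`credit_score`, `debt_to_income`) are made up for this sketch and do not come from any real lending policy.

```python
def explain_loan_decision(applicant):
    """Walk a small hand-written decision tree and record each question asked."""
    path = []
    if applicant["credit_score"] >= 650:
        path.append("credit_score >= 650: yes")
        if applicant["debt_to_income"] <= 0.40:
            path.append("debt_to_income <= 0.40: yes")
            return "approve", path
        path.append("debt_to_income <= 0.40: no")
        return "deny", path
    path.append("credit_score >= 650: no")
    return "deny", path


decision, path = explain_loan_decision({"credit_score": 700, "debt_to_income": 0.5})
```

The recorded path is the explanation: a reader can follow it from the first question to the final decision without any extra tooling.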

Linear regression models provide another naturally transparent approach. These models create mathematical relationships between inputs and outputs using simple addition and multiplication. Each input feature receives a weight that shows how much it influences the final prediction.
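As a rough sketch of why linear models are easy to read, the snippet below splits a prediction into one contribution per feature (weight times value). The weights, intercept, and feature names are invented for illustration; in practice they would come from a fitted model.

```python
# Hypothetical weights for illustration; a fitted model would supply these.
WEIGHTS = {"income_k": 0.02, "late_payments": -0.5, "years_employed": 0.1}
INTERCEPT = -1.0


def linear_score_with_contributions(features):
    """Return the linear score and each feature's additive contribution to it."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = INTERCEPT + sum(contributions.values())
    return score, contributions


score, contributions = linear_score_with_contributions(
    {"income_k": 60, "late_payments": 2, "years_employed": 5}
)
```

Because the score is a plain sum, the sign and size of each contribution can be read directly as that feature's push on the prediction.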

Rule-based systems operate using “if-then” statements that mirror human decision-making. These systems apply logical rules in sequence to reach conclusions. Examples of naturally transparent models include simple decision trees, linear models, rule-based expert systems, and naive Bayes classifiers.

Post-Hoc Explanation Methods

Complex AI models like deep neural networks often achieve higher accuracy but work like black boxes. Post-hoc methods add explanations to these models after training without changing their internal structure.

LIME (Local Interpretable Model-agnostic Explanations) explains individual predictions by creating simplified models around specific examples. LIME changes small parts of an input and observes how predictions change. For image classification, LIME might cover different regions of a photo to see which areas matter most for the prediction.
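The perturb-and-observe idea can be sketched for numeric inputs as follows. This is a simplified, LIME-style illustration written from scratch rather than the `lime` library's implementation: it samples points around one instance, weights them by proximity, and fits a weighted linear surrogate. The function names, Gaussian sampling, and kernel settings are assumptions made for the example.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a small dense system a @ x = b by Gauss-Jordan elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def lime_style_explanation(predict, instance, num_samples=500,
                           scale=1.0, kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear surrogate around `instance`.

    Returns one local slope per feature.
    """
    rng = random.Random(seed)
    d = len(instance)
    # Accumulate the weighted normal equations (X^T W X) beta = X^T W y.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(num_samples):
        offsets = [rng.gauss(0.0, scale) for _ in range(d)]
        sample = [x + o for x, o in zip(instance, offsets)]
        # Nearby samples count more than distant ones.
        weight = math.exp(-sum(o * o for o in offsets) / kernel_width ** 2)
        row = [1.0] + offsets  # intercept column plus centered features
        y = predict(sample)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    coefficients = solve_linear_system(xtwx, xtwy)
    return coefficients[1:]  # drop the intercept


def black_box(x):
    # Hypothetical nonlinear model standing in for a trained black box.
    return x[0] ** 2 + math.sin(x[1])


local_slopes = lime_style_explanation(black_box, [2.0, 0.0])
```

The slopes describe the model only near the chosen instance; a different instance, or a different random seed, can yield a different explanation.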

SHAP (SHapley Additive exPlanations) calculates how much each input feature contributes to a prediction using game theory principles. SHAP treats each feature like a player in a team game and measures each player’s contribution to the final score.
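The team-game analogy can be made concrete with an exact Shapley computation for a model with only a few features. This sketch enumerates every feature ordering, so it is practical only for tiny inputs; it also uses a single baseline input for "absent" features, which is a simplifying assumption (the `shap` library typically averages over background data and uses faster approximations). The function and feature names are illustrative.

```python
from itertools import permutations


def exact_shapley_values(predict, instance, baseline):
    """Average each feature's marginal contribution over all feature orderings."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for ordering in orderings:
        current = dict(baseline)  # start with every feature "absent"
        previous = predict(current)
        for name in ordering:
            current[name] = instance[name]  # feature joins the coalition
            value = predict(current)
            totals[name] += value - previous
            previous = value
    return {name: total / len(orderings) for name, total in totals.items()}


def model(f):
    # Hypothetical model with an interaction between b and c.
    return 2 * f["a"] + f["b"] * f["c"]


instance = {"a": 1.0, "b": 2.0, "c": 3.0}
baseline = {"a": 0.0, "b": 0.0, "c": 0.0}
phi = exact_shapley_values(model, instance, baseline)
```

In this toy case the interaction term `b * c` is split evenly between `b` and `c`, and the contributions sum to the gap between the prediction for `instance` and the prediction for `baseline`.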

Both methods are model-agnostic in their general form, making them valuable tools for explaining existing systems. LIME fits a simple surrogate around one prediction at a time, while SHAP attributions are additive: for each example they sum to the difference between that prediction and the average prediction, which makes them comparable across examples.

Why Explainable AI Matters for Business

Modern businesses face a fundamental challenge when deploying artificial intelligence systems: understanding how these systems make decisions. Traditional AI models operate as “black boxes,” producing accurate results without revealing their reasoning process.

Regulatory Compliance and Legal Requirements

Government regulations increasingly require businesses to explain their automated decision-making processes. Under the GDPR, individuals subject to solely automated decisions that significantly affect them are entitled to meaningful information about the logic involved.

The EU AI Act, adopted in 2024, expands these requirements by classifying AI systems according to risk levels and imposing specific transparency obligations on high-risk applications. Systems used in hiring, credit scoring, law enforcement, and healthcare face particularly stringent documentation and explainability requirements.

Key compliance benefits of implementing XAI include legal protection, penalty avoidance, audit readiness, risk mitigation, and market access. The most serious GDPR violations can result in fines of up to €20 million or four percent of global annual turnover, whichever is higher.

Building Trust and Transparency

Explainable AI directly addresses trust barriers that prevent stakeholders from confidently using AI systems. When users understand how AI systems reach decisions, they can better evaluate the appropriateness of AI recommendations and integrate them with human expertise.

Healthcare applications demonstrate the importance of stakeholder trust in AI systems. Medical professionals can examine AI diagnostic recommendations and understand which specific symptoms, test results, or imaging features led to particular conclusions.

Financial services provide another clear example of how explanations build stakeholder confidence. American Express uses XAI-enabled models to analyze over one trillion dollars in annual transactions for fraud detection. Rather than simply flagging suspicious transactions, the system provides investigators with clear explanations of the specific patterns and anomalies that triggered fraud alerts.

Explainable AI Applications Across Industries

Different industries use explainable AI to solve unique challenges while meeting regulatory requirements and building trust with stakeholders.

Healthcare AI Transparency

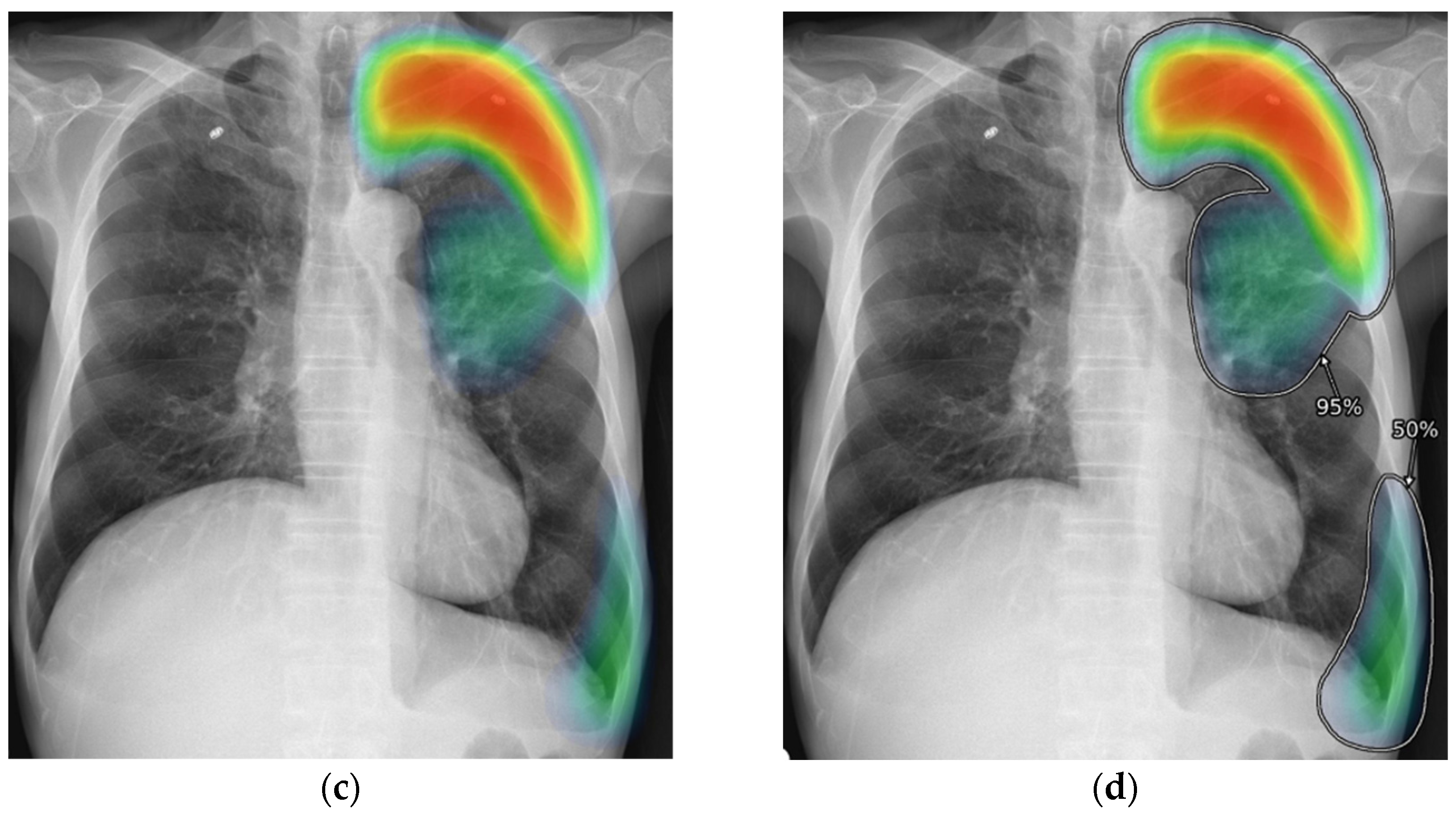

Healthcare organizations use explainable AI to help doctors understand diagnostic recommendations and treatment suggestions. When AI analyzes medical images like X-rays or MRIs, explainable AI shows which specific areas influenced the diagnosis through visual highlights and annotations.

Source: MDPI

Medical image analysis systems employ saliency mapping techniques that create heat maps showing the most influential regions in radiological scans. For example, when AI detects pneumonia in a chest X-ray, a saliency map highlights the lung regions that most influenced the model’s output.

Diagnostic AI systems explain their reasoning by listing the symptoms, test results, and patient history factors that contributed to each recommendation. This transparency enables doctors to identify potential errors or biases in AI outputs while maintaining patient safety standards.

Financial Services Risk Management

Financial institutions implement explainable AI to meet regulatory compliance requirements while improving decision accuracy in lending, fraud detection, and risk assessment. Banks use these systems to explain credit decisions to customers and regulators.

Credit scoring applications show applicants which factors influenced approval or denial decisions. Instead of receiving a simple yes or no answer, customers learn whether their debt-to-income ratio, payment history, or credit utilization affected the outcome.

Fraud detection systems use explainable AI to help investigators understand why transactions triggered security alerts. Fraud investigators receive clear explanations showing which transaction characteristics, spending patterns, or merchant details raised red flags.

Manufacturing and Quality Control

Manufacturing companies apply explainable AI to predictive maintenance and quality control processes. These applications help engineers understand equipment failure predictions and identify quality issues before they impact production.

Predictive maintenance systems analyze sensor data from manufacturing equipment to predict when machines might fail. Explainable AI shows maintenance teams which sensor readings, usage patterns, or environmental factors contributed to failure predictions.

Quality control applications use explainable AI to identify defective products during manufacturing processes. Computer vision systems that inspect products can highlight specific visual features or measurements that indicate quality problems.

Implementation Challenges and Solutions

Organizations face significant obstacles when deploying explainable AI systems in real-world environments. These challenges span technical, practical, and operational dimensions that can make XAI implementation complex and resource-intensive.

Technical Implementation Barriers

Integration complexity represents one of the primary technical barriers organizations encounter when implementing XAI systems. Existing AI infrastructure often lacks the architectural flexibility to accommodate explanation generation methods.

Legacy systems present particular challenges because they were designed without explainability requirements in mind. Organizations frequently discover that their current AI models, data processing workflows, and deployment infrastructure can’t easily incorporate explanation capabilities without significant re-engineering effort.

Different XAI methods require different technical approaches and expertise. LIME needs a perturbation-based sampling pipeline, SHAP needs many repeated model evaluations over combinations of features (which can be computationally expensive), and visualization methods need specialized rendering capabilities.

Accuracy vs Interpretability Trade-offs

Organizations face fundamental decisions about balancing model performance with explanation clarity. Complex models like deep neural networks often achieve higher accuracy but provide less interpretable outputs, while simpler models offer clearer explanations but may sacrifice predictive performance.

This trade-off becomes particularly challenging in high-stakes applications where both accuracy and explainability matter significantly. Healthcare diagnosis systems need precise predictions to ensure patient safety, but they also require clear explanations that medical professionals can understand and validate.

Post-hoc explanation methods attempt to address this trade-off by providing explanations for complex models after training, but these approaches introduce their own limitations. The explanations may not accurately reflect the actual decision-making process of the underlying model.
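One way to probe whether an explanation reflects the underlying model is to measure how closely the explanatory surrogate reproduces the black box’s outputs on relevant inputs. The sketch below computes an R² style fidelity score; `surrogate_fidelity` and the toy models are hypothetical names for illustration, and a high score on sampled inputs is evidence of agreement there, not proof that the surrogate mirrors the model’s internal reasoning.

```python
def surrogate_fidelity(black_box, surrogate, samples):
    """R^2 of the surrogate's outputs measured against the black box's outputs."""
    targets = [black_box(s) for s in samples]
    approximations = [surrogate(s) for s in samples]
    mean_target = sum(targets) / len(targets)
    residual = sum((t - a) ** 2 for t, a in zip(targets, approximations))
    total = sum((t - mean_target) ** 2 for t in targets)
    return 1.0 - residual / total if total > 0 else float("nan")


samples = [-2.0, -1.0, 0.0, 1.0, 2.0]


def black_box(x):
    return x ** 2  # hypothetical nonlinear model


def constant_surrogate(x):
    return 2.0  # ignores the input entirely


faithful_score = surrogate_fidelity(black_box, black_box, samples)
poor_score = surrogate_fidelity(black_box, constant_surrogate, samples)
```

A score near 1 means the surrogate tracks the model closely on these samples; a score near 0 means it does no better than predicting the average.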

How to Implement Explainable AI Successfully

Organizations beginning their explainable AI journey require a structured approach that spans assessment, technology selection, team preparation, and ongoing optimization.

Assessment and Strategy Development

The first step involves conducting a comprehensive audit of existing AI systems to understand their current explainability capabilities. Organizations examine each AI model to determine whether it operates as a black box or already provides some level of interpretability.

Teams map out the explanation requirements for different stakeholders, including end users, technical staff, compliance officers, and executives. Each group requires different types of explanations based on their technical expertise and decision-making responsibilities.

The assessment includes evaluating regulatory requirements that apply to the organization’s AI systems. Industries like healthcare, finance, and hiring face specific explainability mandates under regulations such as GDPR, the EU AI Act, and sector-specific guidelines.

Technology Selection and Integration

Selecting appropriate XAI technologies depends on the specific AI architectures in use and the explanation requirements identified during assessment. Organizations with deep learning models might choose gradient-based methods for image analysis or attention mechanisms for natural language processing.

Post-hoc explanation methods offer flexibility for organizations with existing black box models that can’t be easily replaced. LIME generates local explanations by creating simplified interpretable models around specific predictions. SHAP provides mathematically grounded feature importance scores based on game theory principles.

Integration planning addresses how XAI capabilities will connect with existing systems and workflows. Technical teams design APIs that deliver explanations alongside predictions, ensuring that explanation data reaches the right users at the right time. For organizations seeking expert guidance, professional AI consulting services can accelerate this integration process.
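An API that delivers explanations alongside predictions might return a payload along these lines. The field names (`feature_contributions`, `caveats`, `top_factors`) and the `ExplainedPrediction` class are assumptions for this sketch rather than an established schema.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class ExplainedPrediction:
    prediction: str
    confidence: float
    feature_contributions: Dict[str, float]
    method: str  # e.g. which explanation technique produced the contributions
    caveats: List[str] = field(default_factory=list)

    def top_factors(self, k: int = 3) -> List[str]:
        """Names of the k features with the largest absolute contribution."""
        ranked = sorted(
            self.feature_contributions.items(),
            key=lambda kv: abs(kv[1]),
            reverse=True,
        )
        return [name for name, _ in ranked[:k]]

    def to_json(self) -> str:
        payload = asdict(self)
        payload["top_factors"] = self.top_factors()
        return json.dumps(payload, sort_keys=True)


response = ExplainedPrediction(
    prediction="deny",
    confidence=0.87,
    feature_contributions={
        "debt_to_income": -0.42,
        "payment_history": -0.31,
        "credit_utilization": 0.05,
        "account_age": 0.02,
    },
    method="additive feature attribution",
    caveats=["Explanation is local to this application"],
)
body = response.to_json()
```

Keeping the explanation in the same response as the prediction means downstream interfaces never show a decision without its reasons attached.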

Team Training and Change Management

Technical staff require training on XAI implementation, including hands-on experience with explanation generation tools and interpretation techniques. Data scientists learn how to implement LIME, SHAP, or other explanation methods within their existing model development workflows.

Business users receive training focused on interpreting and acting on explanations rather than generating them. This training covers how to read feature importance charts, understand confidence intervals, and recognize when explanations suggest potential model issues or biases.

Change management addresses the cultural shift from accepting AI decisions without question to actively reviewing and validating them through explanations. Organizations establish new processes for reviewing AI decisions when explanations reveal unexpected patterns or low confidence scores.

FAQs About Explainable AI Implementation

What’s the difference between LIME and SHAP explanation methods?

LIME creates simplified models around individual predictions to explain specific decisions, while SHAP calculates mathematically consistent feature contributions across all predictions using game theory principles.

LIME (Local Interpretable Model-agnostic Explanations) works by making small changes to input data and observing how predictions change. For a credit approval decision, LIME might adjust income levels or credit scores to see which changes affect the approval probability most. This approach helps users understand what factors matter for specific decisions.

SHAP (SHapley Additive exPlanations) treats each input feature like a player in a cooperative game and calculates how much each player contributes to the final outcome. SHAP explanations always add up to explain the complete difference between a prediction and the average prediction across all cases.

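The key difference lies in their mathematical foundations and consistency. LIME provides intuitive explanations for individual cases, but because it relies on random sampling it may give different explanations for similar inputs, or even for the same input across runs. SHAP provides consistent explanations grounded in Shapley values, but exact computation grows exponentially with the number of features, so it generally requires approximations and more computational resources.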

How long does explainable AI implementation typically take for enterprise systems?

Simple XAI implementations using existing models typically require 2-4 months, while complex enterprise deployments involving multiple models and custom interfaces take 6-18 months for complete implementation.

Organizations with strong machine learning teams and clear requirements often see functional explanation systems within the shorter timeframe. These implementations typically use established libraries like SHAP or LIME with existing models that don’t require significant architectural changes.

Complex enterprise deployments involve extensive planning, custom development, user testing, and iterative refinement based on stakeholder feedback. Organizations also account for change management processes and user training during these longer implementations.

Team readiness significantly influences implementation speed. Organizations with existing machine learning expertise and clear explainability requirements move faster than those requiring extensive team training or stakeholder alignment. Infrastructure limitations can create additional delays requiring hardware upgrades or cloud platform migrations.

Can explainable AI work with deep learning models used in computer vision?

Yes, several XAI methods work specifically with deep learning models, including Grad-CAM for highlighting important image regions and attention mechanisms that show which parts of inputs the model focuses on.

Grad-CAM (Gradient-weighted Class Activation Mapping) creates heat maps that highlight image regions most important for classification decisions. When a deep learning model identifies pneumonia in chest X-rays, Grad-CAM shows which lung areas contributed most to the diagnosis by overlaying colored regions on the original image.

Attention mechanisms in neural networks can offer a view into a model’s behavior by showing which input elements it weights most heavily during processing. These mechanisms are commonly inspected in image analysis and natural language processing tasks, although attention weights are not guaranteed to be faithful explanations and are best checked against other methods.

Saliency maps represent another approach for explaining deep learning decisions in computer vision. These maps calculate gradients to show which pixels most influence the model’s output, creating visual explanations that highlight important image features.
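The gradient idea behind saliency maps can be sketched without a deep learning framework by approximating each pixel’s gradient with finite differences. Real implementations obtain gradients through backpropagation in a framework with automatic differentiation; the finite-difference loop and `toy_model` here are stand-ins chosen so the example runs on its own.

```python
def saliency_map(model, image, eps=1e-4):
    """Approximate |d output / d pixel| for every pixel using central differences."""
    saliency = []
    for r, row in enumerate(image):
        saliency_row = []
        for c, _ in enumerate(row):
            bumped_up = [list(x) for x in image]
            bumped_down = [list(x) for x in image]
            bumped_up[r][c] += eps
            bumped_down[r][c] -= eps
            gradient = (model(bumped_up) - model(bumped_down)) / (2 * eps)
            saliency_row.append(abs(gradient))
        saliency.append(saliency_row)
    return saliency


def toy_model(image):
    # Hypothetical scorer: only the center and top-left pixels affect the output.
    return 3.0 * image[1][1] + 0.5 * image[0][0]


image = [[0.0, 0.0, 0.0],
         [0.0, 0.0, 0.0],
         [0.0, 0.0, 0.0]]
heat = saliency_map(toy_model, image)
```

Rendering `heat` as a color overlay on the original image gives the familiar heat-map view, with the brightest cells marking the pixels the output is most sensitive to.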

What regulatory requirements drive explainable AI adoption in financial services?

GDPR Article 22 restricts solely automated decisions that significantly affect individuals, and Articles 13 to 15 entitle them to meaningful information about the logic involved, while the EU AI Act classifies credit scoring as a high-risk application requiring transparency documentation.

The General Data Protection Regulation grants individuals the right to obtain meaningful information about the logic involved in automated decision-making. Financial institutions processing loan applications, insurance claims, or investment recommendations face these transparency requirements for decisions affecting EU residents.

In the United States, the Equal Credit Opportunity Act and the Fair Credit Reporting Act require adverse action notices when credit applications are denied, including the specific reasons for the denial. While these notices existed before AI adoption, explainable AI systems help financial institutions provide more detailed and accurate explanations.

Banking regulators in various countries have issued guidance emphasizing the importance of model risk management and transparency. The Federal Reserve’s SR 11-7 guidance expects banks to validate their models and understand their limitations, making explainable AI valuable for regulatory compliance.

The EU AI Act classifies AI systems used to evaluate creditworthiness or establish credit scores as high-risk applications subject to strict transparency, documentation, and risk management requirements. Financial institutions operating in the EU will need explainable AI capabilities to demonstrate compliance with these rules.