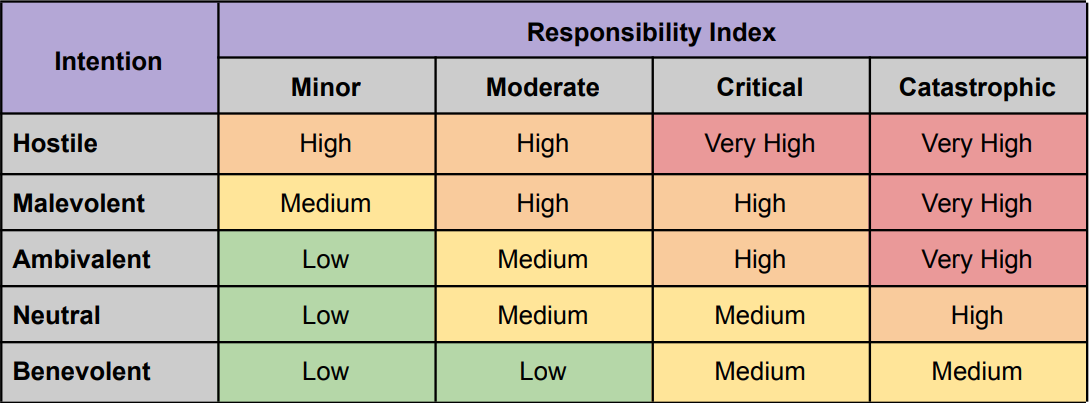

Risk management in AI involves implementing practices and tools to address specific risks that artificial intelligence systems introduce to organizations and users.

AI systems create unique challenges that traditional risk management approaches cannot fully address. These systems make decisions through pattern recognition and statistical inference rather than following predetermined rules. The probabilistic nature of AI outputs means organizations must develop specialized frameworks to identify, assess, and mitigate potential threats throughout the AI lifecycle.

Source: Hyperproof

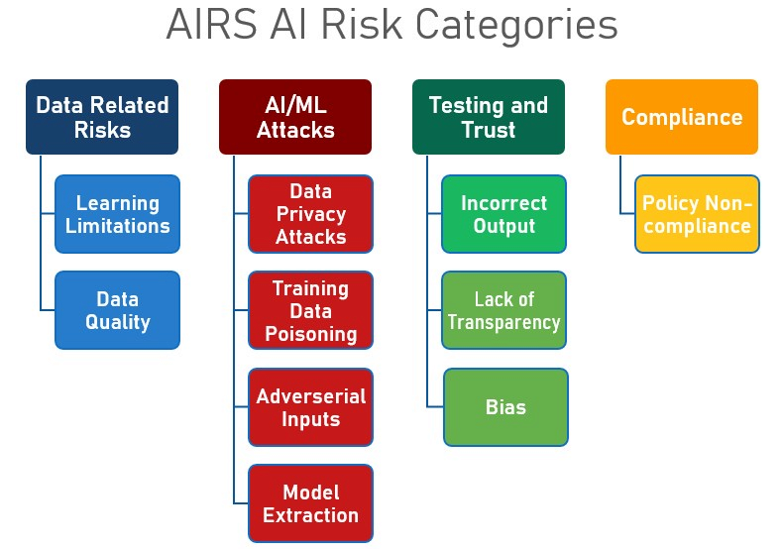

Understanding AI Risk Categories

AI systems introduce four main types of risks that organizations must manage systematically.

Source: Wharton Human-AI Research – University of Pennsylvania

- Technical and operational risks emerge from the models themselves and the infrastructure that runs them. These include model drift, where AI performance degrades over time as data patterns change, and adversarial attacks, in which malicious actors craft or manipulate inputs to make the system fail.

- Ethical and bias risks occur when AI systems perpetuate or amplify societal prejudices present in training data. A hiring algorithm might systematically reject qualified candidates from certain demographic groups if trained on historical data reflecting past discrimination.

- Security and privacy risks stem from the vast data requirements necessary for AI training and operation. These systems often process sensitive personal information, creating pathways for privacy violations and data breaches.

- Regulatory and compliance risks arise from the rapidly evolving legal landscape governing AI development and deployment. Organizations must navigate multiple, often overlapping regulatory frameworks while maintaining operational flexibility.

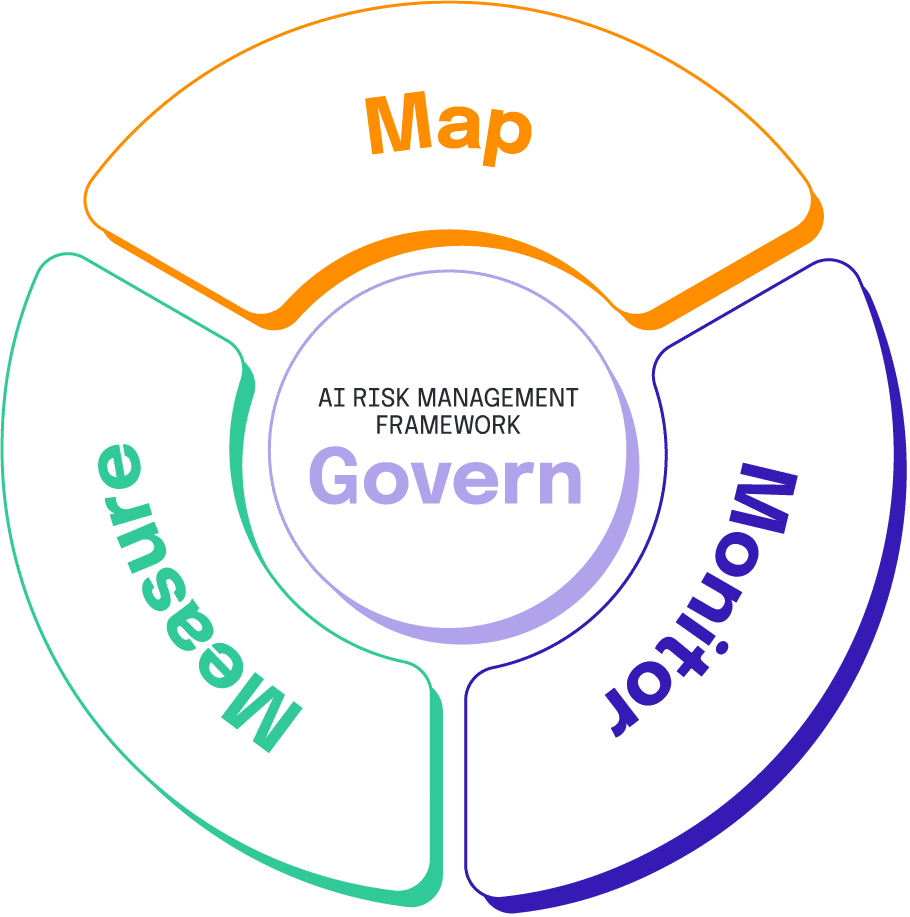

How the NIST AI Risk Management Framework Works

The National Institute of Standards and Technology (NIST) published the AI Risk Management Framework (AI RMF 1.0) in January 2023 as a voluntary guide for organizations. The framework centers on four interconnected functions that work together iteratively.

Source: NIST AI Resource Center – National Institute of Standards and Technology

- The Govern function establishes organizational policies and oversight structures for AI development and deployment. This includes creating cross-functional teams with clear authority to make decisions about AI risk management.

- The Map function identifies stakeholders and potential impacts within specific operational contexts. Organizations conduct comprehensive stakeholder analyses that consider direct users, customers whose data is processed, and community members affected by AI decisions.

- The Measure function assesses risks through quantitative and qualitative evaluation methods. Risk assessment combines likelihood and severity scales to measure both probability of occurrence and potential consequences of identified risk events.

- The Manage function implements response strategies and continuous monitoring to reduce identified risks while maintaining system performance. This includes developing incident response procedures and establishing continuous improvement processes.
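The likelihood-and-severity scoring described under the Measure function can be sketched as a small scoring matrix. This is a minimal illustration, not part of the NIST framework itself: the five-point scales, their labels, and the rating cut-offs are assumptions chosen for the example.

```python
# Hypothetical 1-5 ordinal scales; the NIST AI RMF does not prescribe these values.
LIKELIHOOD = {"rare": 1, "unlikely": 2, "possible": 3, "likely": 4, "almost_certain": 5}
SEVERITY = {"negligible": 1, "minor": 2, "moderate": 3, "major": 4, "critical": 5}


def risk_score(likelihood: str, severity: str) -> int:
    """Combine likelihood and severity into a single score from 1 to 25."""
    return LIKELIHOOD[likelihood] * SEVERITY[severity]


def risk_rating(score: int) -> str:
    """Map a score to a rating band (the cut-offs are illustrative assumptions)."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


score = risk_score("possible", "major")
print(score, risk_rating(score))  # 12 medium
```

Multiplying ordinal scales is a common risk-matrix convention, but the product is only a ranking aid: two risks with the same score can still call for different responses.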

Building Your AI Risk Assessment Process

Effective risk assessment begins with a complete inventory of all AI systems in use or under development. Many organizations discover “shadow AI” during this process: systems that were already in use but had never been formally recognized or reviewed as AI applications.
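One way to surface shadow AI is to reconcile the formal inventory against what a discovery sweep actually finds in use. This sketch assumes both lists are available as sets of system names. The system names and the discovery sources mentioned in the comment are hypothetical.

```python
def find_shadow_ai(registered: set[str], discovered: set[str]) -> set[str]:
    """Return AI systems found in use that are missing from the formal inventory."""
    return discovered - registered


# Hypothetical data: the formal inventory versus what a discovery sweep
# (for example, procurement records, SaaS logs, or code scans) turned up.
registered = {"credit-scoring-model", "support-chatbot"}
discovered = {
    "credit-scoring-model",
    "support-chatbot",
    "resume-screener-saas",
    "marketing-copy-llm",
}

for system in sorted(find_shadow_ai(registered, discovered)):
    print("Unregistered AI system:", system)
```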

Stakeholder mapping identifies all parties who interact with or are affected by AI systems:

Source: Canva

- Direct stakeholders include system operators, end users, and people whose data is processed

- Indirect stakeholders include customers, employees, regulatory bodies, and community members who experience consequences from AI-driven decisions

Risk categorization organizes potential threats according to established frameworks. Technical risks include model performance degradation and security vulnerabilities. Ethical risks encompass bias, discrimination, and fairness concerns. Legal risks involve regulatory compliance and privacy violations.

Documentation requirements ensure that risk assessment findings support ongoing monitoring and compliance verification. Risk registers record identified threats, assessment results, and planned mitigation measures.
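A risk register can start as one structured record per risk. The following sketch assumes a hypothetical schema: the field names, the 1-to-5 scales, and the example entries are illustrative and are not drawn from any standard. It sorts entries so that the highest-scoring risks are reviewed first.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class RiskEntry:
    """One row of a risk register (illustrative schema, not a standard)."""
    risk_id: str
    system: str
    description: str
    category: str          # e.g. "technical", "ethical", "legal"
    likelihood: int        # assumed 1-5 scale
    severity: int          # assumed 1-5 scale
    mitigations: list[str] = field(default_factory=list)
    owner: str = "unassigned"
    next_review: Optional[date] = None

    @property
    def score(self) -> int:
        return self.likelihood * self.severity


register = [
    RiskEntry("R-001", "resume-screener", "Lower selection rate for some groups",
              "ethical", 3, 5, mitigations=["quarterly fairness audit"], owner="HR analytics lead"),
    RiskEntry("R-002", "support-chatbot", "Reveals personal data in responses",
              "legal", 2, 4, mitigations=["PII output filter"], owner="Privacy officer"),
    RiskEntry("R-003", "demand-forecast", "Accuracy degrades as buying patterns shift",
              "technical", 4, 2, mitigations=["weekly drift check"], owner="ML platform team"),
]

# Review the highest-scoring risks first (Python's sort is stable for ties).
for entry in sorted(register, key=lambda e: e.score, reverse=True):
    print(f"{entry.risk_id} score={entry.score} owner={entry.owner}")
```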

Creating Effective Risk Mitigation Strategies

Risk mitigation planning translates assessment findings into specific actions that reduce identified threats to acceptable levels. Effective strategies address root causes rather than only treating symptoms.

Source: Generative AI

Technical controls address system-level vulnerabilities through design modifications and operational safeguards. Model validation processes verify system performance across different scenarios. Security measures protect against unauthorized access and adversarial attacks.
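A common way to verify performance across scenarios is to evaluate a labeled test set slice by slice rather than only in aggregate, because a strong overall score can hide a failing subgroup. This minimal sketch assumes records of (slice, true label, predicted label). The slice names and the 0.9 pass threshold are hypothetical.

```python
from collections import defaultdict


def accuracy_by_slice(records, min_accuracy=0.9):
    """Compute accuracy per scenario slice and flag slices below a threshold.

    `records` is an iterable of (slice_name, y_true, y_pred) tuples.
    The 0.9 threshold is an illustrative assumption, not a recommended value.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for slice_name, y_true, y_pred in records:
        total[slice_name] += 1
        correct[slice_name] += int(y_true == y_pred)
    report = {}
    for slice_name in total:
        acc = correct[slice_name] / total[slice_name]
        report[slice_name] = (acc, acc >= min_accuracy)
    return report


# Hypothetical validation results for two customer scenarios.
records = [
    ("returning_customers", 1, 1), ("returning_customers", 0, 0),
    ("returning_customers", 1, 1), ("returning_customers", 0, 0),
    ("new_customers", 1, 0), ("new_customers", 0, 0),
    ("new_customers", 1, 1), ("new_customers", 1, 0),
]
for name, (acc, passed) in accuracy_by_slice(records).items():
    print(f"{name}: accuracy={acc:.2f} {'PASS' if passed else 'FAIL'}")
```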

Procedural controls establish human oversight capabilities that provide additional protection layers. Human-in-the-loop processes ensure critical decisions receive appropriate review before implementation. Regular audits verify ongoing compliance with established safeguards.
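A human-in-the-loop control is often implemented as a routing rule that decides which outputs are applied automatically and which wait for a reviewer. The sketch below assumes a hypothetical policy: anything flagged as high-impact, or anything below a confidence threshold of 0.85, goes to human review.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    subject_id: str
    outcome: str        # e.g. "approve" or "deny"
    confidence: float   # model confidence in [0, 1]
    high_impact: bool   # e.g. an adverse decision affecting a person


def route(decision: Decision, confidence_threshold: float = 0.85) -> str:
    """Send low-confidence or high-impact decisions to a human reviewer.

    The threshold and the high-impact rule are hypothetical policy choices.
    """
    if decision.high_impact or decision.confidence < confidence_threshold:
        return "human_review"
    return "auto"


print(route(Decision("A-17", "approve", 0.97, high_impact=False)))  # auto
print(route(Decision("A-18", "deny", 0.97, high_impact=True)))      # human_review
print(route(Decision("A-19", "approve", 0.60, high_impact=False)))  # human_review
```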

Training programs help employees understand their responsibilities for implementing and maintaining risk controls. Technical training provides developers with knowledge about secure AI development practices. Business training helps users recognize potential risks and respond appropriately.

Third-party risk management addresses threats from external AI services, data providers, and integration partners. Vendor assessment processes evaluate the risk management practices of external providers.

Monitoring AI Systems for Emerging Risks

Continuous monitoring systems track AI system performance across multiple dimensions to detect emerging risks and verify the effectiveness of implemented safeguards.

Source: Evidently AI

Performance monitoring tracks accuracy, reliability, and consistency of AI system outputs over time. Statistical process control techniques identify trends that may indicate model drift or degradation.
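A Shewhart control chart is one basic statistical process control technique. Limits are set from a stable baseline period, and any new observation outside those limits triggers an alert. This sketch assumes daily accuracy can be measured on labeled production samples. The numbers are invented for illustration.

```python
import statistics


def control_limits(baseline, k=3.0):
    """Shewhart-style control limits: mean +/- k standard deviations of a baseline."""
    mean = statistics.fmean(baseline)
    sd = statistics.stdev(baseline)
    return mean - k * sd, mean + k * sd


def out_of_control(values, lower, upper):
    """Return (index, value) pairs that fall outside the control limits."""
    return [(i, v) for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical daily accuracy from a stable reference period, then new observations.
baseline = [0.91, 0.92, 0.90, 0.91, 0.93, 0.92, 0.91, 0.90, 0.92, 0.91]
recent = [0.91, 0.90, 0.89, 0.86]

lower, upper = control_limits(baseline)
print(f"limits: {lower:.3f} to {upper:.3f}")
print("alerts:", out_of_control(recent, lower, upper))  # alerts: [(3, 0.86)]
```

Only the last observation falls below the lower limit, even though accuracy declined for three days in a row. Run rules such as the Western Electric rules are designed to catch that kind of gradual trend earlier than a single 3-sigma check can.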

Fairness monitoring examines AI system outputs across different demographic groups to detect bias or discrimination. Regular analysis compares treatment of different populations to identify disparate impacts.
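Disparate impact is often screened by comparing each group’s rate of favorable outcomes with that of the most favored group. This sketch assumes outcomes are logged as (group, selected) pairs and uses the 0.8 threshold from the US “four-fifths” rule of thumb for employment selection. The group labels and counts are hypothetical, and a ratio below the threshold signals a need for review rather than proving discrimination.

```python
from collections import Counter


def selection_rates(outcomes):
    """outcomes: iterable of (group, selected: bool). Returns selection rate per group."""
    totals, selected = Counter(), Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact(outcomes, threshold=0.8):
    """Ratio of each group's selection rate to the highest group's rate.

    The 0.8 default mirrors the "four-fifths" rule of thumb; it is a
    screening heuristic, not a legal test.
    """
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any favorable outcomes")
    return {g: (r / best, r / best >= threshold) for g, r in rates.items()}


# Hypothetical hiring outcomes: 20 of 50 selected in group_a, 12 of 50 in group_b.
outcomes = (
    [("group_a", True)] * 20 + [("group_a", False)] * 30
    + [("group_b", True)] * 12 + [("group_b", False)] * 38
)
for group, (ratio, ok) in disparate_impact(outcomes).items():
    print(f"{group}: ratio={ratio:.2f} {'ok' if ok else 'REVIEW'}")
```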

Security monitoring detects attempts to compromise AI systems through adversarial attacks or unauthorized access. Anomaly detection identifies unusual patterns that may indicate security threats.
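A simple form of anomaly detection compares each incoming request with statistics recorded from the training data and flags features that fall far outside that range. This sketch uses per-feature z-scores. The feature names, the training rows, and the cut-off of 4 standard deviations are assumptions, and production systems often use more robust detectors.

```python
import statistics


def fit_baseline(training_rows):
    """Record the mean and population standard deviation of each numeric feature."""
    features = training_rows[0].keys()
    return {
        f: (statistics.fmean(row[f] for row in training_rows),
            statistics.pstdev([row[f] for row in training_rows]))
        for f in features
    }


def anomalous_features(row, baseline, z_max=4.0):
    """Return {feature: z-score} for features more than z_max deviations from the mean."""
    flagged = {}
    for f, (mean, sd) in baseline.items():
        if sd == 0:
            continue  # feature was constant in training, so a z-score is undefined
        z = (row[f] - mean) / sd
        if abs(z) > z_max:
            flagged[f] = round(z, 1)
    return flagged


# Hypothetical training data for a transaction-scoring model.
training = [
    {"amount": 100, "items": 2},
    {"amount": 120, "items": 3},
    {"amount": 90, "items": 1},
    {"amount": 110, "items": 2},
    {"amount": 80, "items": 2},
]
baseline = fit_baseline(training)

print(anomalous_features({"amount": 105, "items": 3}, baseline))   # {}
print(anomalous_features({"amount": 5000, "items": 2}, baseline))  # flags "amount"
```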

Incident response procedures specify immediate actions for addressing detected problems. Response teams include technical experts who can diagnose and resolve system issues. Communication protocols ensure stakeholders receive timely notification of significant incidents.

Navigating AI Regulatory Compliance

The regulatory landscape for AI continues evolving as governments recognize the need for comprehensive frameworks. Organizations face increasingly complex compliance requirements that vary across jurisdictions and industries.

The European Union’s AI Act takes a risk-based approach, sorting AI systems into four levels: unacceptable risk (prohibited), high risk, limited risk (subject mainly to transparency obligations), and minimal risk. High-risk systems in areas like employment, education, and law enforcement face extensive requirements for documentation, human oversight, and post-market monitoring.

The United States relies on sector-specific regulations and executive orders rather than a single comprehensive AI law. The Biden Administration’s 2023 Executive Order on AI (Executive Order 14110) directed federal agencies to develop safety and security standards while promoting innovation; it was rescinded in January 2025.

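Data protection regulations like the General Data Protection Regulation (GDPR) create additional compliance obligations for AI systems that process personal data. Organizations must establish a lawful basis for processing (such as consent), respect purpose limitation, and uphold individual rights, including rights related to solely automated decision-making (Article 22) and access to meaningful information about the logic involved (Articles 13 to 15).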

Implementing AI Governance in Your Organization

Successful AI governance starts with forming cross-functional teams that bring together expertise from technology, legal, compliance, ethics, and business domains. These teams provide the foundation for consistent AI risk management across all departments.

Source: Madison AI

Governance team composition typically includes:

- IT and data science representatives who understand technical capabilities and limitations

- Legal and compliance professionals with knowledge of regulatory requirements

- Business stakeholders who provide operational context

- Ethics and risk management specialists offering evaluation frameworks

Policy frameworks establish consistent standards for AI development, testing, deployment, and monitoring. These policies address risk assessment requirements, approval processes for new AI initiatives, and documentation standards.

Decision-making processes define how AI-related risks are escalated, evaluated, and resolved. Clear escalation paths ensure high-risk situations receive appropriate attention from qualified decision-makers.
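An escalation path can be encoded as an ordered list of reviewers, where higher risk levels require sign-off from more senior groups. The roles and level names here are hypothetical. One design choice is worth noting: an unrecognized level escalates to the top rather than defaulting to the lowest tier, so mislabeled risks fail safe.

```python
# Hypothetical escalation path, from first reviewer to most senior.
ESCALATION_PATH = [
    "system owner",
    "AI governance team",
    "legal and compliance",
    "executive risk committee",
]
LEVEL_INDEX = {"low": 0, "medium": 1, "high": 2, "critical": 3}


def approvers_for(level: str) -> list[str]:
    """Return everyone who must review a risk at this level, in escalation order.

    Unknown levels are treated as critical so that mislabeled risks fail safe.
    """
    top = LEVEL_INDEX.get(level, len(ESCALATION_PATH) - 1)
    return ESCALATION_PATH[: top + 1]


print(approvers_for("medium"))   # ['system owner', 'AI governance team']
print(approvers_for("unrated"))  # all four reviewers
```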

For small businesses, starting with basic risk assessment procedures and building toward a comprehensive framework over time is often the most practical approach.

Measuring Success in AI Risk Management

Organizations track specific metrics to assess AI risk management effectiveness and maintain operational awareness of potential vulnerabilities.

Technical performance metrics include model accuracy degradation rates, prediction confidence distributions, and error rate patterns across different input categories.

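Fairness indicators include demographic parity (similar positive-outcome rates across protected groups), equal opportunity (similar true positive rates, so that qualified individuals in each group receive positive outcomes at similar rates), and disparate impact ratios between demographic segments.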
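Demographic parity and equal opportunity can both be computed from predictions, true labels, and group membership. This minimal sketch assumes binary labels and predictions (1 means a positive outcome) and that every group contains at least one actual positive. The groups and values are hypothetical.

```python
def _mean(values):
    return sum(values) / len(values)


def fairness_report(y_true, y_pred, groups):
    """Per-group positive-prediction rate and true positive rate, plus the gaps.

    Assumes binary labels and at least one actual positive in every group.
    """
    report = {}
    for g in sorted(set(groups)):
        idx = [i for i, member in enumerate(groups) if member == g]
        report[g] = {
            # Demographic parity: share of the group receiving a positive prediction.
            "positive_rate": _mean([y_pred[i] for i in idx]),
            # Equal opportunity: positive predictions among actual positives (TPR).
            "tpr": _mean([y_pred[i] for i in idx if y_true[i] == 1]),
        }

    def gap(key):
        values = [metrics[key] for metrics in report.values()]
        return max(values) - min(values)

    return report, gap("positive_rate"), gap("tpr")


groups = ["A"] * 4 + ["B"] * 4
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

report, parity_gap, opportunity_gap = fairness_report(y_true, y_pred, groups)
print(report)
print(f"demographic parity gap: {parity_gap:.2f}")        # 0.50
print(f"equal opportunity gap: {opportunity_gap:.2f}")    # 0.50
```

A gap of 0 means the groups are treated identically on that metric. The two metrics can disagree, and which one matters more depends on the use case.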

Security measures track adversarial attack detection rates, input validation failures, and anomalous behavior patterns.

Regular risk assessment reports document systematic evaluations conducted at predetermined intervals. These reports capture both quantitative metrics and qualitative assessments, providing comprehensive risk profiles that support strategic decision-making.

FAQs About AI Risk Management

Source: National Institute of Standards and Technology

What are the most common AI risks organizations face today?

The most frequent AI risks include model bias leading to discriminatory outcomes, data privacy violations during training and operation, security vulnerabilities from adversarial attacks, and performance degradation over time. Organizations also face regulatory compliance challenges as AI laws evolve rapidly across different jurisdictions.

How long does it take to implement an AI risk management framework?

Basic framework implementation often takes six to twelve months. Companies with existing governance structures may complete initial implementation in six to nine months, while those building governance capabilities from scratch may need twelve to eighteen months. The timeline depends on organizational readiness and the complexity of existing AI systems.

What qualifications should someone managing AI risks have?

Effective AI risk managers need technical AI knowledge combined with risk management expertise. Educational backgrounds in computer science, data science, or related technical fields provide understanding of AI capabilities and limitations. Cross-functional experience in governance, compliance, and technology helps manage diverse AI risks across organizational contexts.

How much does implementing AI risk management cost for organizations?

Implementation costs vary significantly based on organization size and AI complexity. Small organizations may spend fifty thousand to two hundred thousand dollars annually on basic risk management programs, while large enterprises often invest millions in comprehensive frameworks. Many organizations start with foundational approaches before investing in comprehensive programs.

Which industries face the strictest AI risk management requirements?

Financial services, healthcare, and employment face some of the most stringent requirements because AI decisions in these sectors can significantly affect people’s lives and livelihoods. The EU AI Act classifies many systems in these areas as high-risk, such as recruitment tools, credit scoring, and AI used in certain medical devices, and requires extensive documentation, human oversight, and post-market monitoring for them. Transportation and law enforcement applications also face significant regulatory oversight.