The AI Bill of Rights, formally titled the Blueprint for an AI Bill of Rights, is a federal framework that outlines five core principles designed to protect Americans from harmful artificial intelligence systems. Released by the White House Office of Science and Technology Policy in October 2022, the framework addresses concerns about algorithmic discrimination, privacy violations, and lack of transparency in automated decision-making systems.

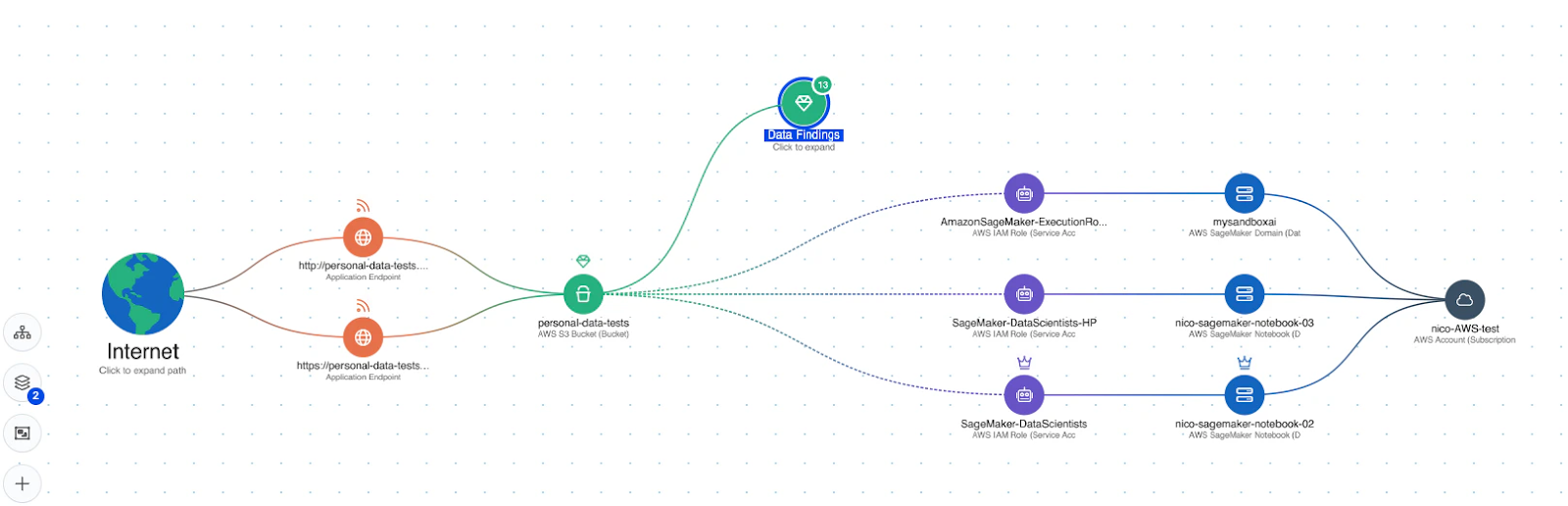

Source: Wiz

The framework emerged after extensive public consultation revealed widespread problems with AI systems affecting employment, healthcare, education, and criminal justice. These systems often operated without adequate oversight, producing unfair outcomes that fell hardest on particular communities. The document represents America’s first comprehensive attempt to establish rights-based protections for the AI age.

Recent political changes have significantly altered the AI governance landscape. The Trump administration’s 2025 policy shifts moved federal priorities away from civil rights protections toward deregulation and American AI dominance. These changes create uncertainty about how the framework’s principles will be implemented and enforced.

The AI Bill of Rights remains a non-binding guidance document without legal enforcement power. However, its principles continue to influence state regulations, private sector policies, and ongoing discussions about responsible AI development across multiple industries.

The Five Core Principles

The framework establishes five fundamental protections that should apply to automated systems with significant impacts on American lives. Each principle addresses specific categories of potential harm while providing guidance for safer AI development and deployment.

Safe and Effective Systems calls for AI systems to undergo rigorous testing before deployment and ongoing monitoring throughout their operational life. Organizations should be able to demonstrate that automated systems perform as intended and do not create unacceptable risks for users or affected communities. Independent evaluation and public reporting of safety measures are central components of this principle.
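One way to make ongoing monitoring concrete is to compare a system's accuracy on recent, labeled outcomes against the accuracy measured during pre-deployment testing. The Python sketch below does exactly that and nothing more; the function names and the 0.05 tolerance are hypothetical choices for illustration, not values taken from the framework, and real monitoring would also track fairness and error types rather than accuracy alone.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class MonitoringResult:
    baseline_accuracy: float  # accuracy measured during pre-deployment testing
    recent_accuracy: float    # accuracy on recently observed, labeled outcomes
    degraded: bool            # True when the drop exceeds the tolerance


def accuracy(predictions: list[int], labels: list[int]) -> float:
    """Fraction of predictions that match the observed outcome."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def check_performance(
    baseline_accuracy: float,
    recent_predictions: list[int],
    recent_labels: list[int],
    tolerance: float = 0.05,  # hypothetical allowed drop before escalation
) -> MonitoringResult:
    """Compare recent accuracy against the pre-deployment baseline."""
    recent = accuracy(recent_predictions, recent_labels)
    degraded = (baseline_accuracy - recent) > tolerance
    return MonitoringResult(baseline_accuracy, recent, degraded)


result = check_performance(0.90, [1, 0, 1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 0, 1, 1])
print(result.recent_accuracy, result.degraded)  # 0.625 True
```

Here recent accuracy has fallen from 0.90 to 0.625, well past the tolerance, so the system would be flagged for investigation.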

Algorithmic Discrimination Protections states that AI systems should not perpetuate or create unfair treatment based on race, gender, age, disability, or other protected characteristics. The principle calls for proactive equity assessments during system design and continuous monitoring for discriminatory impacts after deployment. Organizations should use representative data and guard against indirect discrimination through proxy variables, meaning inputs such as ZIP code that can correlate closely with a protected characteristic.
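A crude first screen for proxy variables is to measure how strongly each input correlates with a protected characteristic. The sketch below assumes numeric features and a binary protected-attribute indicator; the feature names and the 0.3 threshold are hypothetical. Correlation only detects linear, one-variable relationships, so a clean result here does not rule out proxies that act in combination or nonlinearly.

```python
from __future__ import annotations

import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either series is constant."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length series with at least two values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def flag_proxy_features(
    features: dict[str, list[float]],
    protected: list[int],    # 1 if the person is in the protected group, else 0
    threshold: float = 0.3,  # hypothetical cut-off for "strongly correlated"
) -> dict[str, float]:
    """Return features whose absolute correlation with the protected attribute meets the threshold."""
    group = [float(p) for p in protected]
    flagged = {}
    for name, values in features.items():
        r = pearson(values, group)
        if abs(r) >= threshold:
            flagged[name] = round(r, 3)
    return flagged


features = {
    "neighborhood_score": [1, 2, 3, 4, 5, 6],  # hypothetical input
    "years_experience": [3, 5, 4, 4, 5, 3],    # hypothetical input
}
protected = [0, 0, 0, 1, 1, 1]
print(flag_proxy_features(features, protected))  # {'neighborhood_score': 0.878}
```

A flagged feature is not automatically discriminatory; it is a prompt to ask whether the input is needed and whether it is standing in for the protected characteristic.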

The Data Privacy principle calls for personal data collection to follow privacy-by-design principles with strong default protections. It emphasizes data minimization, limiting collection to information strictly necessary for a specific purpose. Organizations should obtain meaningful consent and protect against unauthorized access, inappropriate sharing, and misuse of personal data.
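Data minimization can be enforced in code by declaring, for each purpose, which fields it may use and dropping everything else before the data reaches a model. The sketch below assumes records are plain dictionaries; the purpose names and field lists are hypothetical examples, not categories defined by the framework.

```python
from __future__ import annotations

# Hypothetical purpose registry: each declared purpose lists the only fields it may use.
ALLOWED_FIELDS: dict[str, frozenset[str]] = {
    "loan_underwriting": frozenset({"income", "debt", "credit_history_months"}),
    "appointment_reminder": frozenset({"name", "phone"}),
}


def minimize(record: dict[str, object], purpose: str) -> dict[str, object]:
    """Keep only the fields registered for `purpose`; reject undeclared purposes."""
    if purpose not in ALLOWED_FIELDS:
        raise ValueError(f"no declared purpose named {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


applicant = {
    "name": "A. Applicant",
    "phone": "555-0100",
    "income": 52000,
    "debt": 8000,
    "credit_history_months": 60,
    "browsing_history": ["example.com"],
}
print(minimize(applicant, "loan_underwriting"))
# {'income': 52000, 'debt': 8000, 'credit_history_months': 60}
```

Rejecting undeclared purposes, rather than defaulting to "keep everything", is the design choice that makes minimization the default.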

Notice and Explanation calls for individuals to know when automated systems affect them and to understand how those systems reach decisions. Explanations should use clear, plain language rather than technical jargon, and disclosure should come early enough for affected individuals to respond and engage meaningfully.
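For a simple additive scoring model, a plain-language explanation can be built from each input's signed contribution to the score (for a linear model, weight times value), similar in spirit to the principal reasons lenders give for declined credit applications. The sketch below assumes those contributions are already computed; the input names, reason texts, and notice wording are hypothetical, and models that are not additive need a different attribution method.

```python
from __future__ import annotations

# Hypothetical plain-language descriptions for each model input.
REASON_TEXT = {
    "debt_to_income": "Your debts are high relative to your income.",
    "credit_history_months": "Your credit history is short.",
    "recent_inquiries": "You have applied for credit several times recently.",
}


def principal_reasons(contributions: dict[str, float], top_n: int = 2) -> list[str]:
    """Return plain-language reasons for the inputs that lowered the score most.

    `contributions` maps each input to its signed effect on the score;
    negative values pushed the score down.
    """
    negatives = [(name, c) for name, c in contributions.items() if c < 0]
    negatives.sort(key=lambda item: item[1])  # most negative first
    return [REASON_TEXT.get(name, f"Factor: {name}") for name, _ in negatives[:top_n]]


def build_notice(decision: str, contributions: dict[str, float]) -> str:
    """Assemble a short notice: automation disclosure, decision, reasons, review option."""
    lines = [
        "An automated system was used in making this decision.",
        f"Decision: {decision}.",
    ]
    reasons = principal_reasons(contributions)
    if reasons:
        lines.append("Main reasons:")
        lines.extend(f"- {reason}" for reason in reasons)
    lines.append("You may request review of this decision by a person.")
    return "\n".join(lines)


print(build_notice("application declined", {
    "debt_to_income": -0.8,
    "credit_history_months": -0.3,
    "recent_inquiries": -0.5,
    "income": 0.4,
}))
```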

Human Alternatives, Consideration, and Fallback calls for people to be able to opt out of automated systems where appropriate and to reach a human who can review errors or contested outcomes. Organizations should maintain accessible, effective fallback mechanisms staffed by appropriately trained personnel. Human oversight should provide meaningful opportunities for review and correction rather than serving as a procedural formality.
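A fallback mechanism needs a clear rule for when a case leaves the automated path. The sketch below sends a case to a human review queue when the person has opted out, has contested the result, or when the model's confidence falls below a threshold. The field names and the 0.85 threshold are hypothetical, and a working process would also need trained reviewers, response deadlines, and authority to overturn the model.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class Case:
    case_id: str
    model_decision: str
    confidence: float        # model's confidence in its own decision, 0.0 to 1.0
    contested: bool = False  # the affected person disputed the outcome
    opted_out: bool = False  # the person asked for a human instead of automation


def route(case: Case, review_queue: deque, min_confidence: float = 0.85) -> str:
    """Return the automated decision, or queue the case for a human reviewer."""
    if case.opted_out or case.contested or case.confidence < min_confidence:
        review_queue.append(case)
        return "pending_human_review"
    return case.model_decision


pending: deque = deque()
print(route(Case("a1", "approve", 0.95), pending))               # approve
print(route(Case("a2", "deny", 0.60), pending))                  # pending_human_review
print(route(Case("a3", "deny", 0.99, contested=True), pending))  # pending_human_review
print([c.case_id for c in pending])                              # ['a2', 'a3']
```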

Current Legal Status

Source: World Privacy Forum

The AI Bill of Rights operates as voluntary guidance without binding legal authority. Federal agencies have used the framework to inform their own regulations and policy initiatives, though implementation varies significantly across different government departments. The Equal Employment Opportunity Commission and Federal Trade Commission have incorporated some principles into their enforcement guidance.

State and local governments have implemented various AI governance measures that reflect principles similar to those in the federal framework. These efforts range from comprehensive regulation packages to sector-specific requirements for algorithmic transparency. The Trump administration has expressed a preference for federal preemption of state AI laws, creating uncertainty about the future of these local initiatives.

Private sector adoption occurs primarily through voluntary corporate policies and industry standards rather than legal mandates. Companies have implemented bias testing procedures, algorithmic impact assessments, and transparency reporting that align with framework recommendations. However, the voluntary nature means implementation quality and scope vary widely across organizations and industries.

Policy Changes Under the Trump Administration

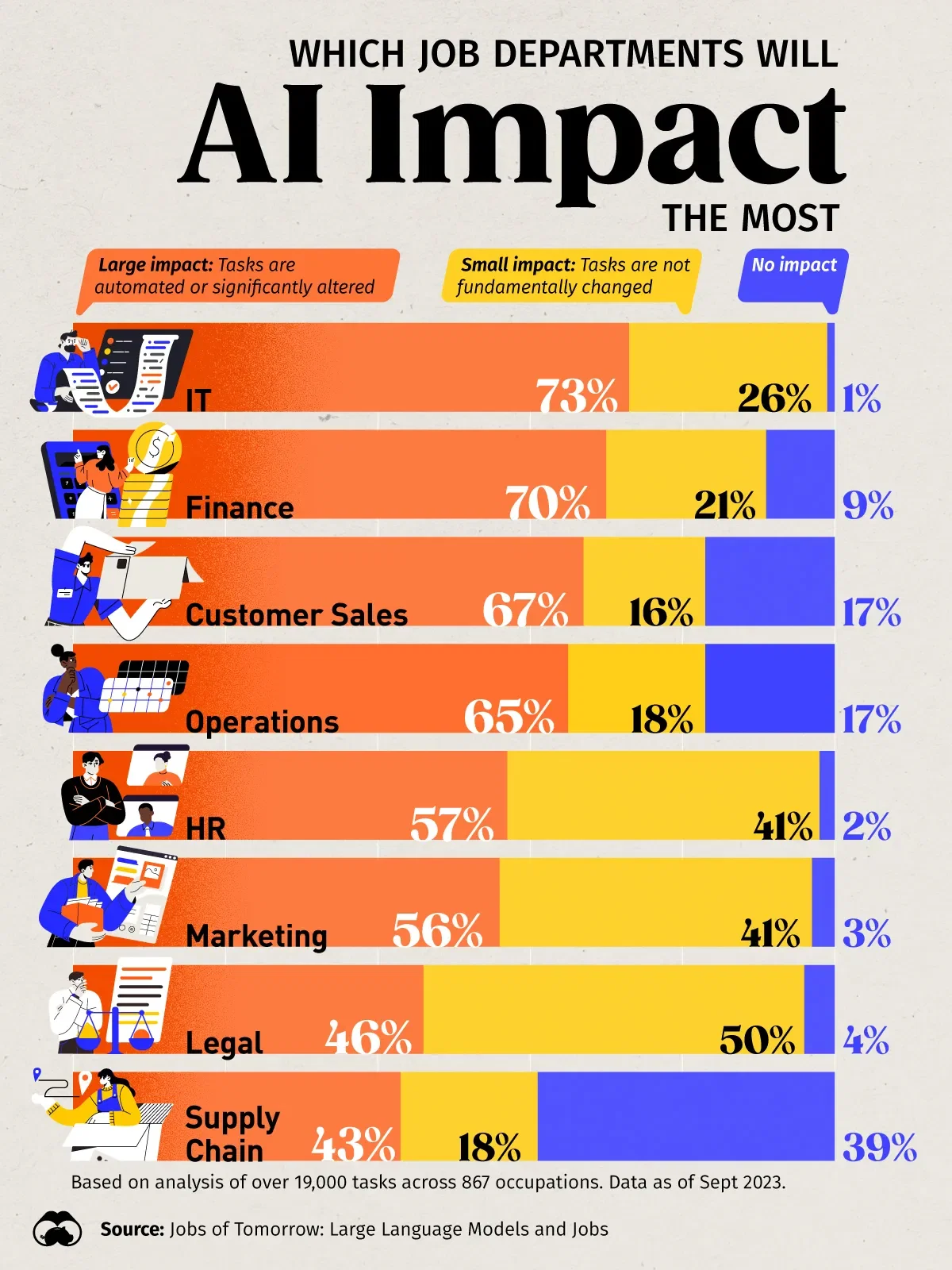

Source: Mind Foundry

In 2025, the Trump administration fundamentally shifted federal AI policy through Executive Order 14179 and “America’s AI Action Plan.” These measures revoked or ordered the review of earlier AI governance policies and redirected federal priorities toward American AI dominance, deregulation, and ideological neutrality in government AI systems.

The new policies called for removing references to diversity, equity, and inclusion from federal AI guidance, including directing NIST to revise its AI Risk Management Framework accordingly. Federal agencies were told to focus on removing regulatory barriers to AI innovation rather than implementing comprehensive civil rights protections. The administration characterized previous approaches as obstacles to American competitiveness in global AI markets.

The administration issued three executive orders alongside the Action Plan, one of which targets what it terms “woke AI” in government systems. The “Preventing Woke AI in the Federal Government” order requires federally procured large language models to follow two “Unbiased AI Principles”: truth-seeking and ideological neutrality. The order names diversity, equity, and inclusion as an ideology these systems should not promote.

Real-World Applications Across Industries

Healthcare organizations use algorithmic impact assessments and bias testing for clinical decision support systems and medical imaging algorithms. Some hospitals now conduct fairness evaluations before deploying AI diagnostic tools, though comprehensive adoption remains limited by resource constraints and technical complexity.

Financial services companies have incorporated anti-discrimination protections into lending and insurance algorithms, building on existing fair lending laws. Banks test credit scoring algorithms for bias and provide explanations for loan decisions, though meaningful implementation of human review processes remains challenging for organizations processing thousands of applications daily.

Employment and hiring systems have received significant attention following regulatory pressure from the Equal Employment Opportunity Commission. Some organizations conduct bias testing and provide explanations for AI hiring decisions, though explaining those decisions to applicants in understandable terms remains difficult.

Educational institutions face particular challenges implementing framework principles due to limited technical expertise and funding constraints. Student privacy protections and algorithmic fairness in admissions represent key areas of focus, though comprehensive governance programs remain rare among schools and universities.

What Organizations Are Affected

Source: Voronoi

The framework applies to any automated system that meaningfully impacts Americans’ rights, opportunities, or access to critical resources and services. These systems include algorithms that make decisions about employment, education, healthcare, financial services, housing, and government benefits.

Organizations most likely to implement AI Bill of Rights principles include:

- Government contractors providing AI systems to federal, state, or local agencies

- Healthcare systems using AI for diagnosis, treatment recommendations, or patient management

- Financial institutions employing algorithms for lending, underwriting, fraud detection, or investment decisions

- Hiring platforms implementing AI screening or matching technologies

- Educational technology companies developing AI systems for student assessment, admissions, or tutoring

- Social media platforms using automated systems for content moderation or recommendation algorithms

Small and medium businesses with AI systems that affect important life outcomes also fall within the framework’s scope. This includes local banks using automated loan processing, medical practices with AI diagnostic tools, and small employers using algorithmic hiring systems. Organizations deploying AI agents in sales or customer service should also consider these principles when those systems make decisions affecting customer outcomes.

Practical Implementation Steps

Source: ResearchGate

Organizations can follow a systematic approach to implement AI governance frameworks that protect against algorithmic harm while maintaining operational effectiveness. These steps provide a structured pathway from initial assessment through ongoing compliance monitoring.

The first step involves creating a comprehensive inventory of all automated systems currently in use across operations. This inventory includes rule-based systems, machine learning algorithms, recommendation engines, chatbots, and any computational system that influences decisions or outcomes affecting people.
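An inventory can start as one structured record per system, plus a rule that flags systems touching the high-impact areas the framework names (employment, education, healthcare, financial services, housing, and government benefits). The sketch below is one possible shape under that assumption; the class, field names, and domain labels are hypothetical, and a real inventory would also record data sources, vendors, and review dates.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Areas the framework highlights as affecting rights, opportunities, or critical services.
HIGH_IMPACT_DOMAINS = frozenset({
    "employment", "education", "healthcare",
    "financial_services", "housing", "government_benefits",
})


@dataclass
class AutomatedSystem:
    name: str
    system_type: str  # e.g. "rule_based", "ml_model", "recommender", "chatbot"
    owner: str        # accountable team or person
    domains: set[str] = field(default_factory=set)
    affects_people: bool = True  # does its output influence decisions about people?

    def needs_impact_review(self) -> bool:
        """Flag systems that affect people in at least one high-impact domain."""
        return self.affects_people and bool(self.domains & HIGH_IMPACT_DOMAINS)


def systems_needing_review(inventory: list[AutomatedSystem]) -> list[str]:
    return [system.name for system in inventory if system.needs_impact_review()]


inventory = [
    AutomatedSystem("resume_screener", "ml_model", "HR", {"employment"}),
    AutomatedSystem("warehouse_slotting", "rule_based", "Operations", affects_people=False),
    AutomatedSystem("support_chatbot", "chatbot", "Customer Experience", {"customer_service"}),
]
print(systems_needing_review(inventory))  # ['resume_screener']
```

Listing rule-based systems alongside machine learning models matters here: the flag depends on what a system affects, not on how sophisticated it is.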

Organizations then develop governance documentation that establishes clear accountability structures and decision-making processes for AI systems. These policies define roles and responsibilities for AI development, deployment, monitoring, and maintenance across different organizational levels. Understanding the differences between agentic and generative AI helps organizations categorize their systems appropriately.

Implementation requires ongoing evaluation processes that assess AI system performance across multiple dimensions including accuracy, fairness, and compliance with established principles. Bias testing involves regular assessment of system outputs across different demographic groups to identify discriminatory patterns or disparate impacts.
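One widely used screen for disparate impact in hiring is the four-fifths rule from the federal Uniform Guidelines on Employee Selection Procedures: a group whose selection rate is below 80 percent of the highest group's rate is generally regarded as showing evidence of adverse impact. The sketch below computes those ratios from (group, selected) pairs; the function names and sample data are hypothetical. The rule is a rule of thumb rather than a complete fairness test, and small samples in particular call for statistical significance testing as well.

```python
from __future__ import annotations

from collections import defaultdict


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of favorable outcomes per group; `outcomes` holds (group, selected) pairs."""
    totals: dict[str, int] = defaultdict(int)
    selected: dict[str, int] = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def adverse_impact_ratios(
    outcomes: list[tuple[str, bool]],
    threshold: float = 0.8,  # the four-fifths rule of thumb
) -> dict[str, tuple[float, bool]]:
    """Map each group to (its rate / highest rate, flagged for review)."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group received a favorable outcome")
    return {
        group: (rate / highest, rate / highest < threshold)
        for group, rate in rates.items()
    }


# Hypothetical screening results: group A 6 of 10 selected, group B 3 of 10.
outcomes = [("A", i < 6) for i in range(10)] + [("B", i < 3) for i in range(10)]
print(adverse_impact_ratios(outcomes))  # {'A': (1.0, False), 'B': (0.5, True)}
```

Group B's selection rate is half of group A's, well under four-fifths, so it would be flagged for closer review.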

Training programs help personnel understand their responsibilities within AI governance frameworks. Technical staff learn bias detection methods and mitigation strategies, while business personnel learn to recognize when AI systems require additional review or human oversight.

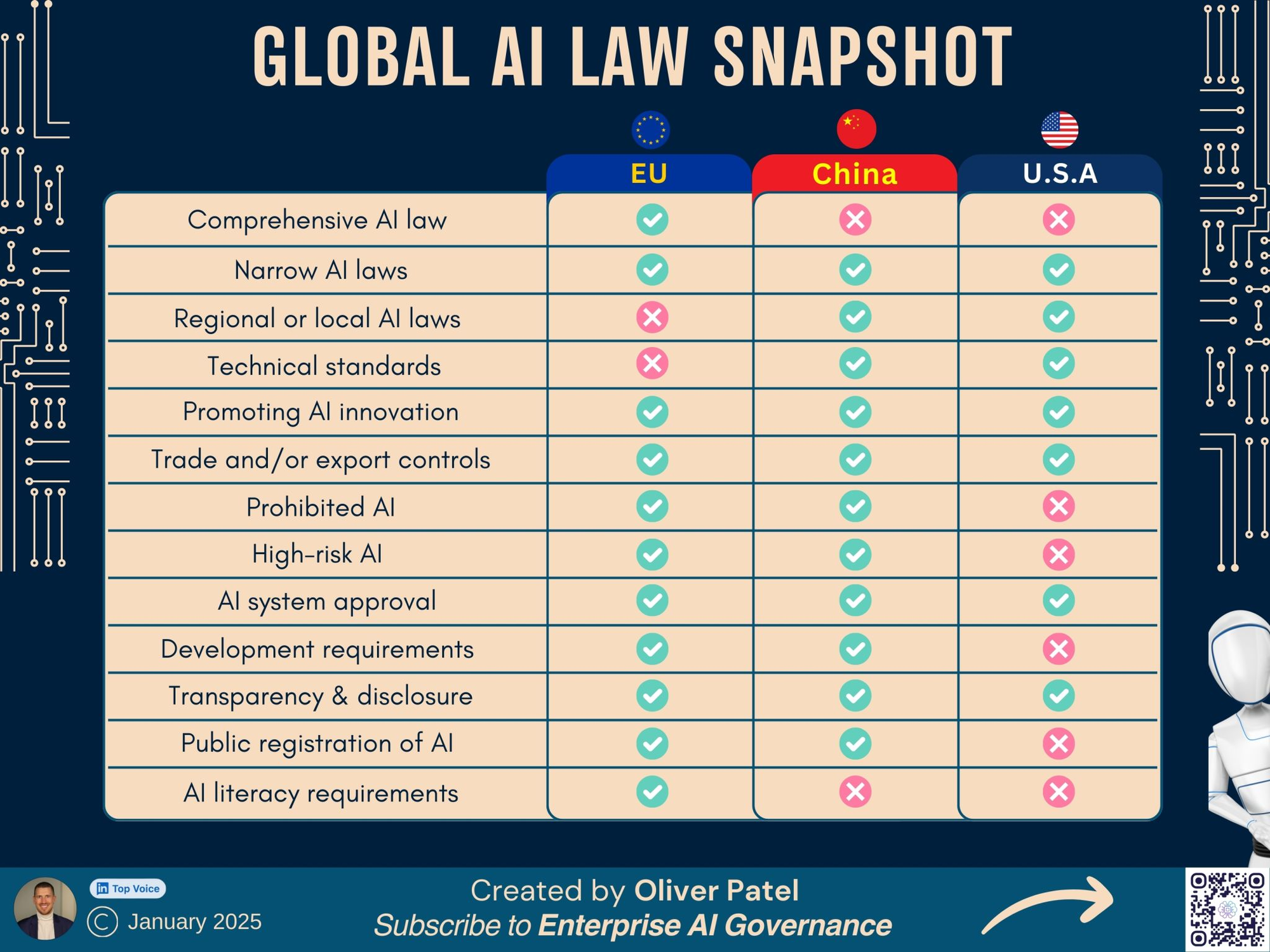

International Context and Comparisons

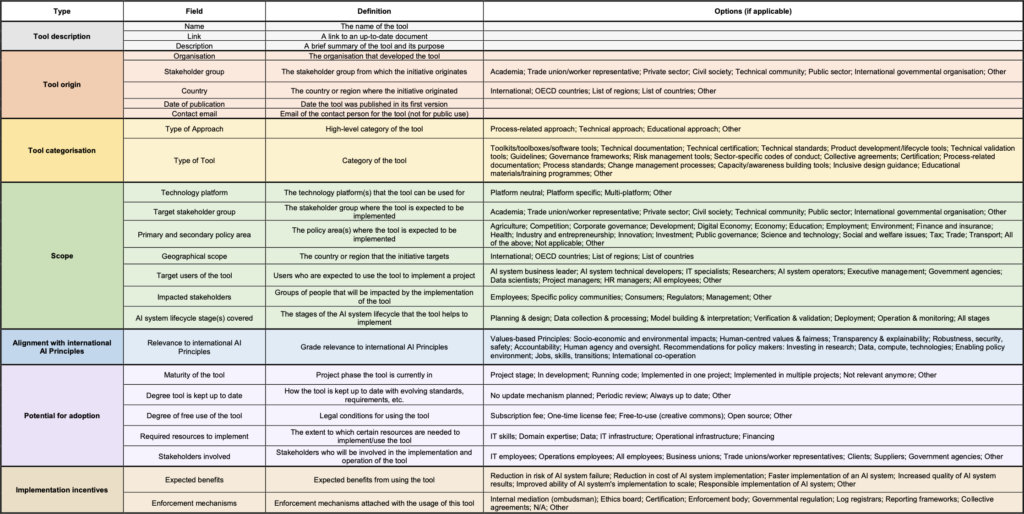

Source: Compliance Hub Wiki

The United States AI Bill of Rights takes a fundamentally different approach to artificial intelligence governance compared to regulatory frameworks around the world. While many countries have moved toward binding legal requirements, the American framework remains voluntary guidance.

The EU AI Act creates legal obligations with penalties for non-compliance, while the AI Bill of Rights functions as aspirational principles without enforcement mechanisms. The European approach mandates specific technical and organizational measures for high-risk systems, including conformity assessments, detailed documentation, and human oversight requirements.

Canada’s proposed Artificial Intelligence and Data Act took a hybrid approach, pairing voluntary guidance with mandatory requirements for certain high-impact systems, though the bill that carried it (Bill C-27) died when Parliament was prorogued in January 2025. The UK framework relies on principles-based guidance through existing regulatory bodies rather than creating new legal requirements.

These different approaches reflect varying regulatory philosophies about balancing innovation with protection from AI-related harms. Organizations operating globally must navigate multiple regulatory environments, often requiring compliance with the most stringent requirements to maintain market access. Companies need robust AI infrastructure to manage these complex compliance requirements effectively.

Building Ethical AI Systems

Organizations that implement ethical AI governance frameworks can improve decision quality and reduce bias-related errors while maintaining regulatory compliance. Trust becomes a business asset when customers understand how AI systems make decisions that affect them.

Risk mitigation through ethical AI governance prevents incidents that damage reputation and trigger regulatory penalties. Data privacy protections built into AI systems from the design phase reduce security breach risks and compliance costs across multiple jurisdictions.

Human oversight mechanisms also act as business continuity safeguards and can improve system performance over time. When an automated system fails, a human fallback path keeps critical processes running, and structured human review surfaces errors that can feed back into system improvements.

The AI Bill of Rights framework provides a practical foundation for building these ethical AI capabilities, though implementation requires expertise in both technical development and regulatory compliance. AI consulting services can help organizations develop comprehensive governance frameworks that address both current requirements and emerging regulatory trends. Companies that implement comprehensive ethical AI frameworks position themselves for long-term success as regulations evolve and customer expectations for responsible technology use continue to rise.

Frequently Asked Questions About the AI Bill of Rights

Does the AI Bill of Rights apply to small businesses using AI tools?

The framework applies to any automated system that meaningfully impacts people’s rights, opportunities, or access to critical resources and services, regardless of organization size. Small businesses using AI for hiring, lending, or customer service decisions fall within the framework’s intended scope, though compliance remains voluntary.

Small businesses can start with simple steps like providing clear notice when AI systems make decisions about customers or employees. Many principles require minimal resources to implement, such as allowing human review of AI decisions or explaining how automated systems work.

What enforcement actions can federal agencies take under this framework?

Federal agencies cannot directly enforce the AI Bill of Rights since it operates as voluntary guidance rather than binding law. However, agencies like the Equal Employment Opportunity Commission and Federal Trade Commission can pursue enforcement actions under existing civil rights and consumer protection laws when AI systems cause harm.

The framework influences how agencies interpret existing regulations and develop new guidance for AI governance. State attorneys general and regulatory bodies also reference the framework when investigating AI-related complaints or developing enforcement priorities.

How do the 2025 policy changes affect companies already implementing these principles?

Companies can continue implementing AI Bill of Rights principles as part of their corporate governance and risk management strategies, even though federal support has diminished. Many organizations find these practices valuable for building customer trust, preparing for potential future regulations, and maintaining access to global markets with stricter AI requirements.

State and local governments continue developing AI governance laws that reflect similar principles, creating ongoing incentives for implementation. International regulatory frameworks like the EU AI Act also create compliance requirements for companies operating across borders.

What’s the difference between the AI Bill of Rights and other AI governance frameworks?

The AI Bill of Rights focuses specifically on protecting individual rights and civil liberties from AI-related harms, while other frameworks may emphasize technical safety, economic competitiveness, or industry-specific concerns. The NIST AI Risk Management Framework provides technical guidance for implementing risk management processes, which can support AI Bill of Rights principles.

International frameworks like the EU AI Act create legally binding requirements with enforcement mechanisms, while the American approach relies on voluntary adoption. Organizations often use multiple frameworks together to address different aspects of AI governance and compliance requirements. Understanding concepts like AI TRiSM helps organizations implement comprehensive governance approaches.

The Future of AI Rights in America

The fundamental challenges that motivated the AI Bill of Rights persist despite changing federal policies. Algorithmic systems continue making decisions about employment, healthcare, education, and financial services with potentially discriminatory outcomes. Privacy concerns remain paramount as AI systems process increasing amounts of personal data.

State and local governments have emerged as leaders in AI governance, and many of their oversight measures demonstrate that effective governance can coexist with technological innovation. These efforts provide valuable models for organizations seeking to implement responsible AI practices regardless of federal policy direction.

The international context also gives American organizations strong incentives to maintain high AI governance standards, since access to global markets often depends on meeting the most stringent applicable requirements.

For organizations committed to responsible AI development, the principles outlined in the AI Bill of Rights offer enduring value as a framework for building systems that serve human flourishing while driving business success. The five core principles provide practical guidance for addressing algorithmic risks while creating competitive advantages through enhanced trust and improved system performance.

The AI Bill of Rights represents more than a policy document: it articulates a vision of technology that serves democratic values and human dignity. While its immediate federal influence may have diminished, its principles continue to offer essential guidance for building AI systems that benefit society while respecting individual rights and freedoms.